Create a minimal AI assistant using .NET

In this quickstart, you'll learn how to create a minimal AI assistant using the OpenAI or Azure OpenAI SDK libraries. AI assistants provide agentic functionality to help users complete tasks using AI tools and models. In the sections ahead, you'll learn the following:

- Core components and concepts of AI assistants

- How to create an assistant using the Azure OpenAI SDK

- How to enhance and customize the capabilities of an assistant

Prerequisites

- Install .NET 8.0 or higher

- Visual Studio Code (optional)

- Visual Studio (optional)

- For OpenAI: an access key for an OpenAI model

- For Azure OpenAI: access to an Azure OpenAI instance via Azure Identity or an access key

Core components of AI assistants

AI assistants are based around conversational threads with a user. The user sends prompts to the assistant on a conversation thread, which directs the assistant to complete tasks using the tools it has available. Assistants can process and analyze data, make decisions, and interact with users or other systems to achieve specific goals. Most assistants include the following components:

| Component | Description |
|---|---|
| Assistant | The core AI client and logic that uses Azure OpenAI models, manages conversation threads, and uses configured tools. |
| Thread | A conversation session between an assistant and a user. Threads store messages and automatically handle truncation to fit content into a model's context. |
| Message | A message created by an assistant or a user. Messages can include text, images, and other files. Messages are stored as a list on the thread. |
| Run | Activation of an assistant to begin running based on the contents of the thread. The assistant uses its configuration and the thread's messages to perform tasks by calling models and tools. As part of a run, the assistant appends messages to the thread. |
| Run steps | A detailed list of steps the assistant took as part of a run. An assistant can call tools or create messages during its run. Examining run steps allows you to understand how the assistant is getting to its final results. |
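How the components in the table relate can be sketched with a few in-memory stand-ins. Everything in this sketch is hypothetical and for illustration only: the tuple-based thread, the `ExecuteRun` helper, and the step labels are not SDK types or API values. It shows only that a run reads the thread, records the steps it takes, and appends the assistant's message back to the same thread.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a thread: an ordered list of (role, text) messages.
var thread = new List<(string Role, string Text)>
{
    ("user", "How well did product 113045 sell in February?")
};

// Hypothetical stand-in for a run: reads the latest message, calls one tool,
// appends the assistant's reply to the thread, and returns the steps it took.
static List<string> ExecuteRun(
    List<(string Role, string Text)> messages,
    Func<string, string> tool)
{
    var runSteps = new List<string>();
    string prompt = messages[^1].Text;

    string toolResult = tool(prompt);
    runSteps.Add("tool_call");

    messages.Add(("assistant", toolResult));
    runSteps.Add("message_creation");
    return runSteps;
}

// The lambda plays the role of a configured tool.
List<string> steps = ExecuteRun(thread, _ => "Product 113045 sold 22 units in February.");

Console.WriteLine($"Messages on thread: {thread.Count}");      // Messages on thread: 2
Console.WriteLine($"Run steps: {string.Join(", ", steps)}");   // Run steps: tool_call, message_creation
```

In the real service the thread, run, and run steps live on the server, and the app reads them back through the client rather than mutating a local list.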

Assistants can also be configured to use multiple tools in parallel to complete tasks, including the following:

- Code interpreter tool: Writes and runs code in a sandboxed execution environment.

- Function calling: Runs local custom functions you define in your code.

- File search capabilities: Augments the assistant with knowledge from outside its model.
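For function calling, the app side usually comes down to mapping a function name requested by the model to local code. The following is a minimal sketch of that dispatch step only, assuming the tool call arrives as a function name plus a JSON argument string. The `get_units_sold` function, the `registry` dictionary, and the `Dispatch` helper are hypothetical names, and no SDK types are used.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical local function that the assistant could ask the app to run.
static string GetUnitsSold(JsonElement args)
{
    string productId = args.GetProperty("product_id").GetString()!;
    // Stand-in data; a real app would query its own data store.
    var february = new Dictionary<string, int> { ["113045"] = 22 };
    return february.TryGetValue(productId, out int units) ? units.ToString() : "0";
}

// Registry mapping the function names exposed to the model to local delegates.
var registry = new Dictionary<string, Func<JsonElement, string>>
{
    ["get_units_sold"] = GetUnitsSold
};

// Dispatch one tool call: parse the JSON arguments and invoke the matching function.
string Dispatch(string name, string jsonArguments)
{
    using JsonDocument doc = JsonDocument.Parse(jsonArguments);
    return registry.TryGetValue(name, out var fn)
        ? fn(doc.RootElement)
        : $"Unknown function: {name}";
}

Console.WriteLine(Dispatch("get_units_sold", """{"product_id": "113045"}""")); // 22
```

Returning a message for unknown names, rather than throwing, lets the app hand the problem back to the model as a tool result.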

By understanding these core components and how they interact, you can build and customize powerful AI assistants to meet your specific needs.

Create the .NET app

Complete the following steps to create a .NET console app and add the package needed to work with assistants:

In a terminal window, navigate to an empty directory on your device and create a new app with the dotnet new command:

    dotnet new console -o AIAssistant

Add the OpenAI package to your app:

    dotnet add package OpenAI --prerelease

If you're using Azure OpenAI, add the Azure.AI.OpenAI package instead:

    dotnet add package Azure.AI.OpenAI --prerelease

Open the new app in your editor of choice, such as Visual Studio Code.

    code .

Create the AI assistant client

Open the Program.cs file and replace the contents of the file with the following code to create the required clients:

using OpenAI; using OpenAI.Assistants; using OpenAI.Files; using Azure.AI.OpenAI; using Azure.Identity; // Create the OpenAI client OpenAIClient openAIClient = new("your-apy-key"); // For Azure OpenAI, use the following client instead: AzureOpenAIClient azureAIClient = new( new Uri("your-azure-openai-endpoint"), new DefaultAzureCredential()); #pragma warning disable OPENAI001 AssistantClient assistantClient = openAIClient.GetAssistantClient(); OpenAIFileClient fileClient = openAIClient.GetOpenAIFileClient();Create an in-memory sample document and upload it to the

OpenAIFileClient:// Create an in-memory document to upload to the file client using Stream document = BinaryData.FromBytes(""" { "description": "This document contains the sale history data for Contoso products.", "sales": [ { "month": "January", "by_product": { "113043": 15, "113045": 12, "113049": 2 } }, { "month": "February", "by_product": { "113045": 22 } }, { "month": "March", "by_product": { "113045": 16, "113055": 5 } } ] } """u8.ToArray()).ToStream(); // Upload the document to the file client OpenAIFile salesFile = fileClient.UploadFile( document, "monthly_sales.json", FileUploadPurpose.Assistants);Enable file search and code interpreter tooling capabilities via the

AssistantCreationOptions:// Configure the assistant options AssistantCreationOptions assistantOptions = new() { Name = "Example: Contoso sales RAG", Instructions = "You are an assistant that looks up sales data and helps visualize the information based" + " on user queries. When asked to generate a graph, chart, or other visualization, use" + " the code interpreter tool to do so.", Tools = { new FileSearchToolDefinition(), // Enable the assistant to search and access files new CodeInterpreterToolDefinition(), // Enable the assistant to run code for data analysis }, ToolResources = new() { FileSearch = new() { NewVectorStores = { new VectorStoreCreationHelper([salesFile.Id]), } } }, };Create the

Assistantand a thread to manage interactions between the user and the assistant:// Create the assistant Assistant assistant = assistantClient.CreateAssistant("gpt-4o", assistantOptions); // Configure and create the conversation thread ThreadCreationOptions threadOptions = new() { InitialMessages = { "How well did product 113045 sell in February? Graph its trend over time." } }; ThreadRun threadRun = assistantClient.CreateThreadAndRun(assistant.Id, threadOptions); // Sent the prompt and monitor progress until the thread run is complete do { Thread.Sleep(TimeSpan.FromSeconds(1)); threadRun = assistantClient.GetRun(threadRun.ThreadId, threadRun.Id); } while (!threadRun.Status.IsTerminal); // Get the messages from the thread run var messages = assistantClient.GetMessagesAsync( threadRun.ThreadId, new MessageCollectionOptions() { Order = MessageCollectionOrder.Ascending });Print the messages and save the generated image from the conversation with the assistant:

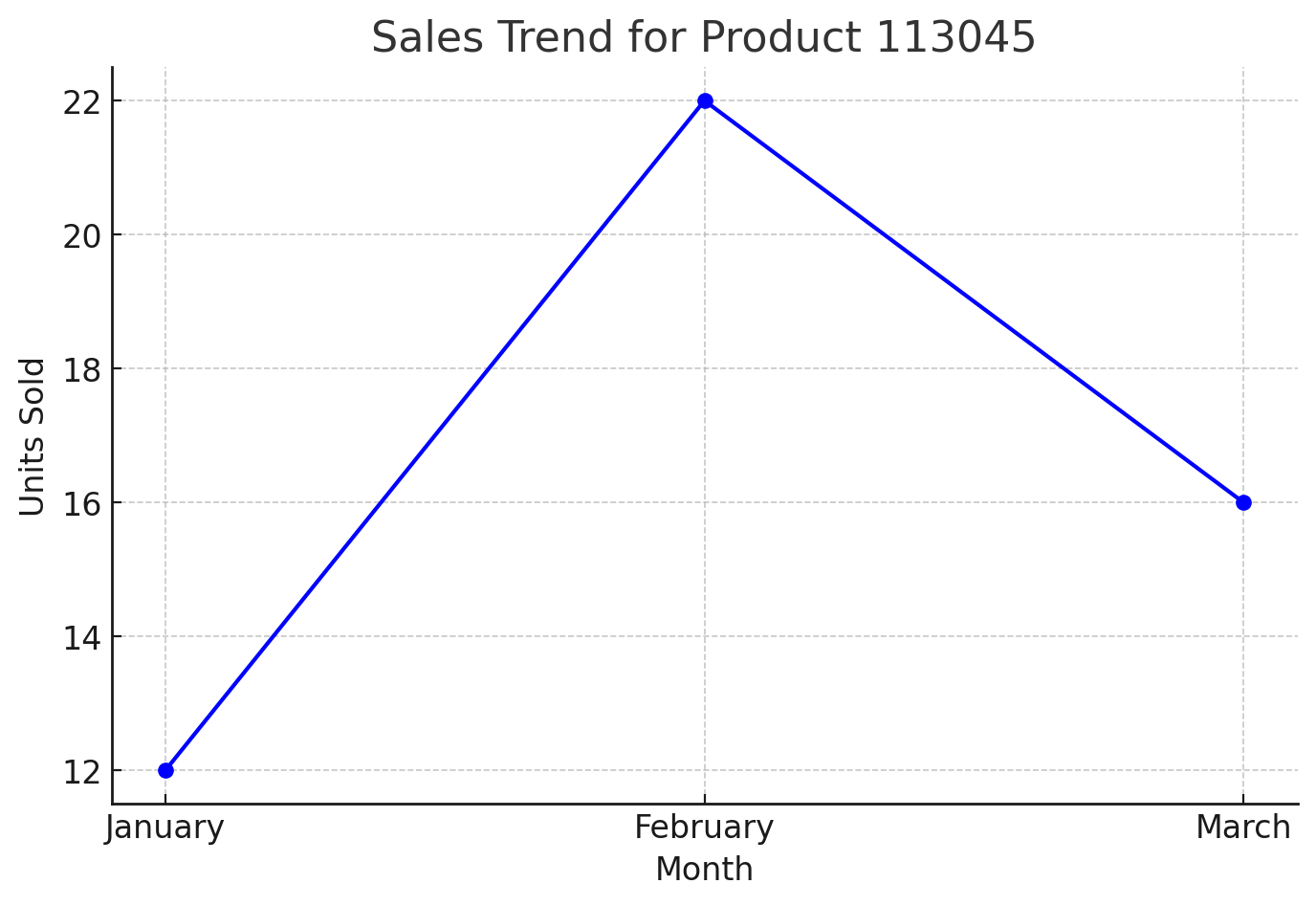

await foreach (ThreadMessage message in messages) { // Print out the messages from the assistant Console.Write($"[{message.Role.ToString().ToUpper()}]: "); foreach (MessageContent contentItem in message.Content) { if (!string.IsNullOrEmpty(contentItem.Text)) { Console.WriteLine($"{contentItem.Text}"); if (contentItem.TextAnnotations.Count > 0) { Console.WriteLine(); } // Include annotations, if any foreach (TextAnnotation annotation in contentItem.TextAnnotations) { if (!string.IsNullOrEmpty(annotation.InputFileId)) { Console.WriteLine($"* File citation, file ID: {annotation.InputFileId}"); } if (!string.IsNullOrEmpty(annotation.OutputFileId)) { Console.WriteLine($"* File output, new file ID: {annotation.OutputFileId}"); } } } // Save the generated image file if (!string.IsNullOrEmpty(contentItem.ImageFileId)) { OpenAIFile imageInfo = fileClient.GetFile(contentItem.ImageFileId); BinaryData imageBytes = fileClient.DownloadFile(contentItem.ImageFileId); using FileStream stream = File.OpenWrite($"{imageInfo.Filename}.png"); imageBytes.ToStream().CopyTo(stream); Console.WriteLine($"<image: {imageInfo.Filename}.png>"); } } Console.WriteLine(); }Locate and open the saved image in the app bin directory, which should resemble the following:
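To sanity-check what the assistant reports, the figures for product 113045 can also be computed locally from the same sample data. The following is a small sketch using System.Text.Json. The `TrendFor` helper is a hypothetical name, the JSON repeats only the sales figures from the uploaded document, and treating a month with no entry for the product as zero units is an assumption of this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Same sales figures as the uploaded monthly_sales.json document.
const string salesJson = """
    {
      "sales": [
        { "month": "January",  "by_product": { "113043": 15, "113045": 12, "113049": 2 } },
        { "month": "February", "by_product": { "113045": 22 } },
        { "month": "March",    "by_product": { "113045": 16, "113055": 5 } }
      ]
    }
    """;

// Collect (month, units) pairs for one product, in document order.
static List<(string Month, int Units)> TrendFor(string json, string productId)
{
    var trend = new List<(string Month, int Units)>();
    using JsonDocument doc = JsonDocument.Parse(json);
    foreach (JsonElement entry in doc.RootElement.GetProperty("sales").EnumerateArray())
    {
        string monthName = entry.GetProperty("month").GetString()!;
        // Assumption: a month with no entry for the product counts as zero units.
        int unitsSold = entry.GetProperty("by_product").TryGetProperty(productId, out JsonElement value)
            ? value.GetInt32()
            : 0;
        trend.Add((monthName, unitsSold));
    }
    return trend;
}

foreach (var point in TrendFor(salesJson, "113045"))
{
    Console.WriteLine($"{point.Month}: {point.Units}");
}
// January: 12
// February: 22
// March: 16
```

The assistant's text answer and generated chart should agree with these values: 22 units in February, with a rise from January and a dip in March.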