BotFramework: Image Processing Bot using MS Bot Framework and Computer Vision SDK

Introduction

This article discusses how the Microsoft Bot Framework SDK v4 and the Microsoft Computer Vision SDK can be used together to create a fun bot that can analyze an image, generate a thumbnail, and extract printed and handwritten text from images (OCR). The bot discussed here performs the following operations:

- Analyze an image to identify its type and provide a description.

- Create a thumbnail.

- Extract text from an image containing printed text.

- Extract text from an image containing handwritten text.

Tools and Frameworks

The following tools and frameworks were used to develop the bot:

- Visual Studio 2017

- Microsoft Bot Framework SDK v4 and above

- ASP.NET Core 2.1

- Microsoft Computer Vision SDK

Basics

This section covers some background information needed before the code is presented.

Computer Vision API

The Computer Vision API is one of the Cognitive Services that Microsoft offers on Azure. It can analyze images, extract handwritten and printed text, recognize well-known people, return the coordinates of faces detected in an image, estimate attributes such as gender, and more. More information about the Computer Vision API is available at Computer Vision API - Home.

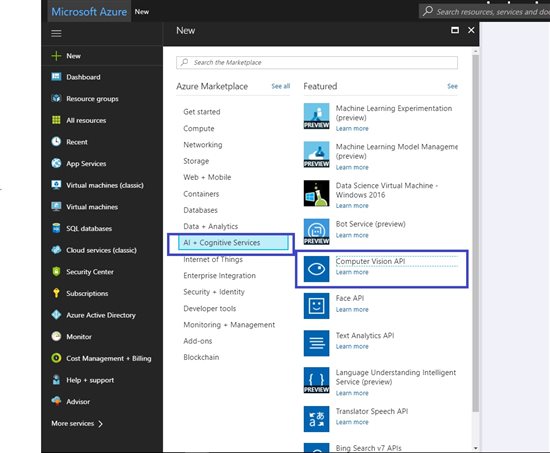

Subscribe to the API

The following steps show how to subscribe to the Computer Vision API from the Azure portal.

Navigate to the Azure portal, click New Resource, select AI + Machine Learning, and then select the Computer Vision API.

Fill out the necessary details.

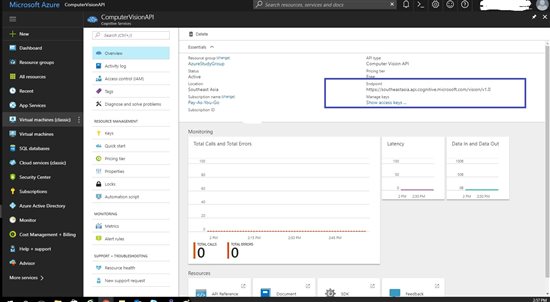

After the resource is created, copy one of the access keys and the endpoint. The bot needs both at runtime.

Microsoft Bot Framework

The Microsoft Bot Framework SDK v4 is used to develop the bot. It builds on ASP.NET Core 2.0 and above and is a beautiful framework for developing chat bots. It does take some time and effort to learn, but once the concepts are understood, building bots with it is straightforward. The official documentation for the Bot Framework can be found at Azure Bot Service Documentation.

Code

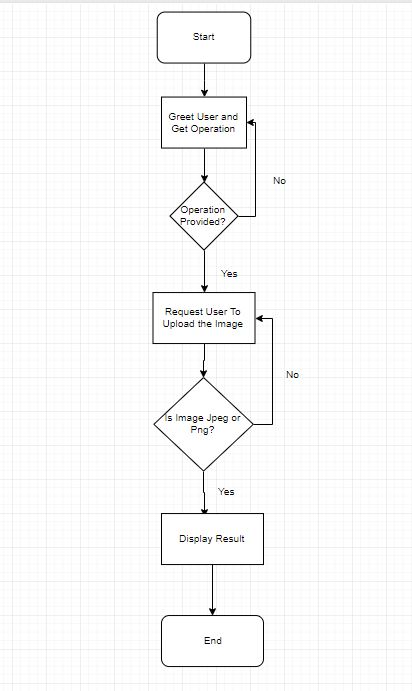

Conversation Flow

The following is the conversation flow we want to achieve.

Initial Setup

The Echo Bot template in Visual Studio can be used to create a skeleton bot for the project, which is then modified as required. The skeleton bot is simply an ASP.NET Core MVC-based web application with some bot-specific classes. Before coding, the NuGet packages for the Microsoft Bot Framework and the Microsoft Azure Computer Vision API need to be added to the project. You can do this by adding the following package references to the .csproj file. The solution then restores the NuGet packages.

```xml
<ItemGroup>
  <PackageReference Include="Microsoft.AspNetCore" Version="2.1.3" />
  <PackageReference Include="Microsoft.AspNetCore.All" Version="2.0.9" />
  <PackageReference Include="AsyncUsageAnalyzers" Version="1.0.0-alpha003" PrivateAssets="all" />
  <PackageReference Include="Microsoft.Bot.Builder" Version="4.0.8" />
  <PackageReference Include="Microsoft.Bot.Builder.Integration.AspNet.Core" Version="4.0.6" />
  <PackageReference Include="Microsoft.Bot.Configuration" Version="4.0.8" />
  <PackageReference Include="Microsoft.Bot.Connector" Version="4.0.8" />
  <PackageReference Include="Microsoft.Bot.Schema" Version="4.0.8" />
  <PackageReference Include="Microsoft.Bot.Builder.Dialogs" Version="4.0.8" />
  <PackageReference Include="Microsoft.Extensions.Logging.AzureAppServices" Version="2.1.1" />
  <PackageReference Include="Microsoft.AspNetCore.App" />
  <PackageReference Include="Microsoft.Azure.CognitiveServices.Vision.ComputerVision" Version="3.2.0" />
</ItemGroup>
```

The next step is to add the Computer Vision API endpoint and the subscription key to the appsettings.json file. The file will look something like this:

```json
{
  "botFilePath": "ImageProcessingBot.bot",
  "botFileSecret": "",
  "computerVisionKey": "Enter Key Here",
  "computerVisionEndpoint": "https://southeastasia.api.cognitive.microsoft.com"
}
```

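These values are needed at runtime to call the Computer Vision API through the SDK. They can be made available by registering IConfiguration as a singleton in the ConfigureServices method of the Startup class. For example:

```csharp
// Startup.cs: configuration built from appsettings.json, populated in the Startup constructor.
public IConfiguration Configuration { get; }

// Inside ConfigureServices(IServiceCollection services): make IConfiguration injectable.
services.AddSingleton<IConfiguration>(Configuration);
```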
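If the key is missing or still set to the Enter Key Here placeholder, the error only appears on the first API call. One way to fail fast is a startup check like the sketch below. It is hypothetical: VisionSettings.Validate is not part of the sample. A plain dictionary stands in for IConfiguration so the code runs without NuGet packages.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical fail-fast check for the two Computer Vision settings.
// A plain dictionary stands in for IConfiguration so the sketch needs no packages.
public static class VisionSettings
{
    public static (string Key, Uri Endpoint) Validate(IDictionary<string, string> settings)
    {
        string key;
        if (!settings.TryGetValue("computerVisionKey", out key) ||
            string.IsNullOrWhiteSpace(key) || key == "Enter Key Here")
        {
            throw new InvalidOperationException("computerVisionKey is missing or still set to the placeholder.");
        }

        string endpointText;
        Uri endpoint;
        // Requiring https is a choice made for this sketch, based on the endpoint in appsettings.json.
        if (!settings.TryGetValue("computerVisionEndpoint", out endpointText) ||
            !Uri.TryCreate(endpointText, UriKind.Absolute, out endpoint) ||
            endpoint.Scheme != Uri.UriSchemeHttps)
        {
            throw new InvalidOperationException("computerVisionEndpoint must be an absolute https:// URL.");
        }

        return (key, endpoint);
    }
}
```

In the bot, the same check could run over the values read from IConfiguration before the ComputerVisionClient is created.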

The bot must remember the command the user selected. When the image arrives in a later message, it can then be passed to the right function. A state accessors class is used for this.

```csharp
#region References
using System;
using System.Collections.Generic;
using Microsoft.Bot.Builder;
using Microsoft.Bot.Builder.Dialogs;
#endregion

namespace ImageProcessingBot
{
    public class ImageProcessingBotAccessors
    {
        public ImageProcessingBotAccessors(ConversationState conversationState, UserState userState)
        {
            // Fail fast if either state object is missing; nameof refers to the parameters.
            ConversationState = conversationState ?? throw new ArgumentNullException(nameof(conversationState));
            UserState = userState ?? throw new ArgumentNullException(nameof(userState));
        }

        // Names under which the state properties are stored.
        public static readonly string CommandStateName = $"{nameof(ImageProcessingBotAccessors)}.CommandState";
        public static readonly string DialogStateName = $"{nameof(ImageProcessingBotAccessors)}.DialogState";

        // The operation the user selected, kept until the image arrives.
        public IStatePropertyAccessor<string> CommandState { get; set; }
        public IStatePropertyAccessor<DialogState> ConversationDialogState { get; set; }

        public ConversationState ConversationState { get; }
        public UserState UserState { get; }
    }
}
```
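The command and the image arrive in two separate messages, so the command has to survive between turns. The sketch below imitates that with an in-memory dictionary keyed by user ID. CommandStore and its methods are hypothetical stand-ins for CommandState.SetAsync, GetAsync and DeleteAsync, not the Bot Framework API. It normalizes the command on write rather than on read, which is equivalent for this flow.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical in-memory stand-in for a user-scoped state property.
// It mirrors the set / get / delete cycle the bot performs on CommandState.
public class CommandStore
{
    private readonly ConcurrentDictionary<string, string> _commands =
        new ConcurrentDictionary<string, string>();

    // Turn 1: the user picks an operation; remember it in lower case.
    public void Set(string userId, string command) =>
        _commands[userId] = command.ToLowerInvariant();

    // Turn 2: the image arrives without text; fall back to the stored command.
    public string Get(string userId) =>
        _commands.TryGetValue(userId, out var command) ? command : string.Empty;

    // After processing, forget the command so the next upload needs a new choice.
    public void Delete(string userId) => _commands.TryRemove(userId, out _);
}
```

This store lives only in process memory. UserState, by contrast, writes through whatever storage the bot is configured with.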

These accessors are made available to the bot by registering them at startup in the ConfigureServices method of Startup.cs:

```csharp
// Requires System.Linq, Microsoft.Extensions.Options and Microsoft.Extensions.DependencyInjection.
services.AddSingleton<ImageProcessingBotAccessors>(sp =>
{
    var options = sp.GetRequiredService<IOptions<BotFrameworkOptions>>().Value;
    if (options == null)
    {
        throw new InvalidOperationException("BotFrameworkOptions must be configured prior to setting up the state accessors.");
    }

    var conversationState = options.State.OfType<ConversationState>().FirstOrDefault();
    if (conversationState == null)
    {
        throw new InvalidOperationException("ConversationState must be defined and added before adding conversation-scoped state accessors.");
    }

    var userState = options.State.OfType<UserState>().FirstOrDefault();
    if (userState == null)
    {
        throw new InvalidOperationException("UserState must be defined and added before adding user-scoped state accessors.");
    }

    // Create the custom state accessors.
    // State accessors enable other components to read and write individual properties of state.
    var accessors = new ImageProcessingBotAccessors(conversationState, userState)
    {
        ConversationDialogState = userState.CreateProperty<DialogState>(ImageProcessingBotAccessors.DialogStateName),
        CommandState = userState.CreateProperty<string>(ImageProcessingBotAccessors.CommandStateName)
    };

    return accessors;
});
```

Computer Vision API SDK Helper

A wrapper helper class is created to consume the Computer Vision SDK in the bot. The SDK authenticates to the Computer Vision API with the subscription key, so it needs both the API endpoint and the key. These values are in appsettings.json. They can be read at runtime because IConfiguration was already registered as a singleton in a previous step. The Computer Vision SDK is referenced by including the following namespaces in the class:

```csharp
using Microsoft.Azure.CognitiveServices.Vision.ComputerVision;
using Microsoft.Azure.CognitiveServices.Vision.ComputerVision.Models;
```
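ApiKeyServiceClientCredentials authenticates by sending the subscription key in the Ocp-Apim-Subscription-Key header of each request. To make that visible, the sketch below builds (but does not send) a comparable raw request with HttpRequestMessage. Several parts are assumptions about the REST API version this SDK release targets: the vision/v2.0/analyze path, the visualFeatures value and the octet-stream body. RawVisionRequest is a hypothetical name. Check the Computer Vision API reference before relying on any of them.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical sketch of an image-analysis request like the one the SDK sends for us.
// The "vision/v2.0/analyze" path is an assumption; the request is built but never sent.
public static class RawVisionRequest
{
    public static HttpRequestMessage BuildAnalyzeRequest(string endpoint, string key, byte[] imageBytes)
    {
        var uri = new Uri(new Uri(endpoint.TrimEnd('/') + "/"),
                          "vision/v2.0/analyze?visualFeatures=Categories,Description,Tags");
        var request = new HttpRequestMessage(HttpMethod.Post, uri);

        // Key-based authentication: the subscription key travels in this header on every call.
        request.Headers.Add("Ocp-Apim-Subscription-Key", key);

        // The image itself is posted as raw bytes.
        request.Content = new ByteArrayContent(imageBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return request;
    }
}
```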

The following fields and constructor read the values from appsettings.json and create the ComputerVisionClient used to communicate with the API:

```csharp
public class ComputerVisionHelper
{
    private readonly IConfiguration _configuration;
    private ComputerVisionClient _client;

    // Visual features requested when an image is analyzed.
    private static readonly List<VisualFeatureTypes> features =
        new List<VisualFeatureTypes>()
        {
            VisualFeatureTypes.Categories, VisualFeatureTypes.Description,
            VisualFeatureTypes.Faces, VisualFeatureTypes.ImageType,
            VisualFeatureTypes.Tags
        };

    public ComputerVisionHelper(IConfiguration configuration)
    {
        _configuration = configuration ?? throw new ArgumentNullException(nameof(configuration));

        // The subscription key authenticates every request; no extra delegating handlers are used.
        _client = new ComputerVisionClient(
            new ApiKeyServiceClientCredentials(_configuration["computerVisionKey"]),
            new System.Net.Http.DelegatingHandler[] { });

        // The regional endpoint copied from the Azure portal.
        _client.Endpoint = _configuration["computerVisionEndpoint"];
    }
}
```

The methods that analyze an image, generate a thumbnail, and extract text are as follows:

```csharp
public async Task<ImageAnalysis> AnalyzeImageAsync(Stream image)
{
    // Request the visual features listed in the 'features' field.
    ImageAnalysis analysis = await _client.AnalyzeImageInStreamAsync(image, features);
    return analysis;
}

public async Task<string> GenerateThumbnailAsync(Stream image)
{
    // 100 x 100 thumbnail; smart cropping keeps the region of interest in the frame.
    using (Stream thumbnail = await _client.GenerateThumbnailInStreamAsync(100, 100, image, smartCropping: true))
    using (var ms = new MemoryStream())
    {
        await thumbnail.CopyToAsync(ms);

        // Base64 so the thumbnail can be embedded in the reply as a data: URI.
        return System.Convert.ToBase64String(ms.ToArray());
    }
}
```
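GenerateThumbnailAsync returns the thumbnail as Base64, so the bot can embed it directly in the reply attachment as a data: URI instead of hosting the file somewhere. A minimal, self-contained sketch of that conversion follows. The helper name DataUri.FromStreamAsync is hypothetical, and the MIME type is passed in rather than assumed.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper: turn an image stream into a data: URI for an attachment's ContentUrl.
public static class DataUri
{
    public static async Task<string> FromStreamAsync(Stream image, string mimeType)
    {
        using (var buffer = new MemoryStream())
        {
            // Copy asynchronously so the bot's turn does not block a thread on I/O.
            await image.CopyToAsync(buffer);
            return $"data:{mimeType};base64,{Convert.ToBase64String(buffer.ToArray())}";
        }
    }
}
```

Not every channel necessarily renders data: URIs. For larger images, hosting the file and linking to it may be the safer choice.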

```csharp
public async Task<IList<Line>> ExtractTextAsync(Stream image, TextRecognitionMode recognitionMode)
{
    // Submit the image; the service replies with an Operation-Location URL to poll.
    RecognizeTextInStreamHeaders headers = await _client.RecognizeTextInStreamAsync(image, recognitionMode);
    IList<Line> detectedLines = await GetTextAsync(_client, headers.OperationLocation);
    return detectedLines;
}

private async Task<IList<Line>> GetTextAsync(ComputerVisionClient client, string operationLocation)
{
    // The operation ID is the 36-character GUID at the end of the Operation-Location URL.
    string operationId = operationLocation.Substring(operationLocation.Length - 36);
    TextOperationResult result = await client.GetTextOperationResultAsync(operationId);

    // Poll once per second, up to maxRetries more times, while the operation is pending.
    int i = 0;
    int maxRetries = 5;
    while ((result.Status == TextOperationStatusCodes.Running ||
            result.Status == TextOperationStatusCodes.NotStarted) && i++ < maxRetries)
    {
        await Task.Delay(1000);
        result = await client.GetTextOperationResultAsync(operationId);
    }

    // If the operation failed or is still running, there is no recognition result to read.
    if (result.Status != TextOperationStatusCodes.Succeeded || result.RecognitionResult == null)
    {
        return new List<Line>();
    }

    return result.RecognitionResult.Lines;
}
```
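Text recognition is a two-step operation. RecognizeTextInStreamAsync returns an Operation-Location URL, and the result has to be polled until it is ready. The code takes the operation ID with Substring(Length - 36). That relies on the ID being a 36-character GUID at the very end of the URL. The sketch below makes two changes. It takes the last path segment of the URL instead, and it moves the retry loop into a generic helper that can be tested with a fake fetch function. Both method names are hypothetical, and the fake status strings in the usage stand in for GetTextOperationResultAsync.

```csharp
using System;
using System.Threading.Tasks;

public static class TextOperationPolling
{
    // Hypothetical: use the last path segment of the Operation-Location URL as the ID,
    // instead of assuming a fixed 36-character suffix.
    public static string OperationIdFromLocation(string operationLocation)
    {
        var uri = new Uri(operationLocation);
        return uri.Segments[uri.Segments.Length - 1].TrimEnd('/');
    }

    // Hypothetical generic poll loop: call fetch until isDone(result) is true or
    // maxAttempts calls have been made. Returns the last result either way.
    public static async Task<T> PollAsync<T>(
        Func<Task<T>> fetch, Func<T, bool> isDone, int maxAttempts, TimeSpan delay)
    {
        T result = await fetch();
        for (int attempt = 1; attempt < maxAttempts && !isDone(result); attempt++)
        {
            await Task.Delay(delay);
            result = await fetch();
        }
        return result;
    }
}
```

The caller still has to check the final status. After the last attempt the result may still be Running, in which case there is nothing to read yet.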

These helper methods are called based upon the command given by the user.

Bot Code

The configuration and the state accessors registered at startup are injected into the bot through its constructor:

```csharp
public class ImageProcessingBot : IBot
{
    private readonly ImageProcessingBotAccessors _accessors;
    private readonly IConfiguration _configuration;
    private readonly DialogSet _dialogs;

    public ImageProcessingBot(ImageProcessingBotAccessors accessors, IConfiguration configuration)
    {
        _accessors = accessors ?? throw new ArgumentNullException(nameof(accessors));
        _configuration = configuration ?? throw new ArgumentNullException(nameof(configuration));
        _dialogs = new DialogSet(_accessors.ConversationDialogState);
    }
}
```

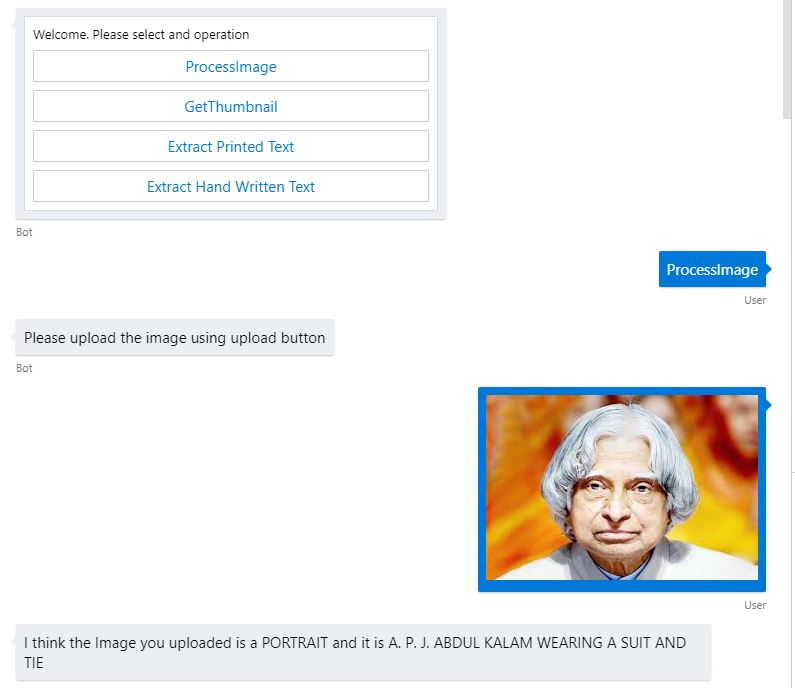

To help the user select a command, the bot sends a hero card with one button per operation. It is created as follows:

```csharp
public Task<Activity> CreateReplyAsync(ITurnContext context, string message)
{
    var reply = context.Activity.CreateReply();

    // One ImBack button per operation: clicking a button posts its Value back as the message text.
    var card = new HeroCard()
    {
        Text = message,
        Buttons = new List<CardAction>()
        {
            new CardAction { Text = "Process Image", Value = "ProcessImage", Title = "ProcessImage", DisplayText = "Process Image", Type = ActionTypes.ImBack },
            new CardAction { Text = "Get Thumbnail", Value = "GetThumbnail", Title = "GetThumbnail", DisplayText = "Get Thumbnail", Type = ActionTypes.ImBack },
            new CardAction { Text = "Extract Printed Text", Value = "printedtext", Title = "Extract Printed Text", DisplayText = "Extract Printed Text", Type = ActionTypes.ImBack },
            new CardAction { Text = "Extract Hand Written Text", Value = "handwrittentext", Title = "Extract Hand Written Text", DisplayText = "Extract Hand Written Text", Type = ActionTypes.ImBack }
        }
    };

    reply.Attachments = new List<Attachment>() { card.ToAttachment() };

    // Nothing is awaited here, so return a completed task instead of marking the method async.
    return Task.FromResult(reply);
}
```
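Each button is an ImBack action, so its Value comes back as the text of the user's next message. The message handler then lower-cases that text before switching on it. The sketch below turns that mapping into a small parser, so unknown text can be rejected before an image is requested. The ImageCommand enum and CommandParser class are hypothetical and not part of the original sample.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical enum for the four operations offered on the hero card.
public enum ImageCommand { ProcessImage, GetThumbnail, PrintedText, HandwrittenText }

public static class CommandParser
{
    // Keys are the lower-cased button values the message handler switches on.
    private static readonly Dictionary<string, ImageCommand> Commands =
        new Dictionary<string, ImageCommand>
        {
            ["processimage"] = ImageCommand.ProcessImage,
            ["getthumbnail"] = ImageCommand.GetThumbnail,
            ["printedtext"] = ImageCommand.PrintedText,
            ["handwrittentext"] = ImageCommand.HandwrittenText,
        };

    // Returns false for anything that is not one of the card's commands.
    public static bool TryParse(string text, out ImageCommand command)
    {
        command = default(ImageCommand);
        return !string.IsNullOrWhiteSpace(text) &&
               Commands.TryGetValue(text.Trim().ToLowerInvariant(), out command);
    }
}
```

In the message handler, a failed TryParse could re-send the hero card. The current code instead stores any non-empty text as the command.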

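The bot class implements the IBot interface and therefore must implement the OnTurnAsync method. The Bot Framework adapter calls this method once for every incoming activity, such as a message, a conversation update or an event. The following snippets are case blocks of a switch on the activity type inside OnTurnAsync. They assume an Activity variable named reply declared earlier in that method. The welcome message is sent when a ConversationUpdate activity reports that the user has joined: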

```csharp
case ActivityTypes.ConversationUpdate:
    // MembersAdded lists everyone who joined; skip the bot itself (the recipient).
    if (turnContext.Activity.MembersAdded != null)
    {
        foreach (var member in turnContext.Activity.MembersAdded)
        {
            if (member.Id != turnContext.Activity.Recipient.Id)
            {
                reply = await CreateReplyAsync(turnContext, "Welcome. Please select an operation.");
                await turnContext.SendActivityAsync(reply, cancellationToken: cancellationToken);
            }
        }
    }

    break;
```
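A ConversationUpdate can list the bot itself in MembersAdded as well as the user. Comparing each member's Id with the recipient's Id (the bot) keeps the bot from welcoming itself. The sketch below expresses the same filter as a pure function over plain IDs. Member is a hypothetical minimal stand-in for the Bot Framework ChannelAccount type.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical minimal stand-in for the Bot Framework ChannelAccount type.
public class Member
{
    public Member(string id) { Id = id; }
    public string Id { get; }
}

public static class WelcomeFilter
{
    // IDs that should receive the welcome card: everyone added except the bot (the recipient).
    // A missing MembersAdded list is treated as empty.
    public static List<string> MembersToWelcome(IEnumerable<Member> membersAdded, string botId) =>
        (membersAdded ?? Enumerable.Empty<Member>())
            .Where(m => m.Id != botId)
            .Select(m => m.Id)
            .ToList();
}
```

The empty-list fallback matters because a ConversationUpdate that only reports members leaving may carry no MembersAdded list.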

The logic that reads the command, asks the user to upload an image, and then processes the image is as follows:

```csharp
case ActivityTypes.Message:
    int attachmentCount = turnContext.Activity.Attachments != null ? turnContext.Activity.Attachments.Count() : 0;

    // Prefer the text of this message (a button click or typed command);
    // otherwise fall back to the command stored on an earlier turn.
    var command = !string.IsNullOrEmpty(turnContext.Activity.Text)
        ? turnContext.Activity.Text
        : await _accessors.CommandState.GetAsync(turnContext, () => string.Empty, cancellationToken);
    command = command.ToLowerInvariant();

    if (attachmentCount == 0)
    {
        if (string.IsNullOrEmpty(command))
        {
            reply = await CreateReplyAsync(turnContext, "Please select an operation before uploading the image.");
            await turnContext.SendActivityAsync(reply, cancellationToken: cancellationToken);
        }
        else
        {
            // Remember the command until the image arrives in a later message.
            await _accessors.CommandState.SetAsync(turnContext, command, cancellationToken);
            await _accessors.UserState.SaveChangesAsync(turnContext, cancellationToken: cancellationToken);
            await turnContext.SendActivityAsync("Please upload the image using the upload button.", cancellationToken: cancellationToken);
        }
    }
    else
    {
        HttpClient client = new HttpClient();
        Attachment attachment = turnContext.Activity.Attachments[0];
        if (attachment.ContentType == "image/jpeg" || attachment.ContentType == "image/png")
        {
            // Download the uploaded image from the URL supplied by the channel.
            Stream image = await client.GetStreamAsync(attachment.ContentUrl);
            if (image != null)
            {
                ComputerVisionHelper helper = new ComputerVisionHelper(_configuration);
                IList<Line> detectedLines;
                switch (command)
                {
                    case "processimage":
                        ImageAnalysis analysis = await helper.AnalyzeImageAsync(image);

                        // Tags and captions can be empty, so check before using them.
                        var tag = analysis.Tags?.FirstOrDefault();
                        var caption = analysis.Description?.Captions?.FirstOrDefault();
                        if (tag == null || caption == null)
                        {
                            await turnContext.SendActivityAsync("Sorry, I could not describe this image.", cancellationToken: cancellationToken);
                        }
                        else
                        {
                            await turnContext.SendActivityAsync($"I think the image you uploaded is a {tag.Name.ToUpperInvariant()} and it is {caption.Text.ToUpperInvariant()}", cancellationToken: cancellationToken);
                        }

                        break;

                    case "getthumbnail":
                        string thumbnail = await helper.GenerateThumbnailAsync(image);
                        reply = turnContext.Activity.CreateReply();
                        reply.Text = "Here is your thumbnail.";
                        reply.Attachments = new List<Attachment>()
                        {
                            new Attachment()
                            {
                                ContentType = "image/jpeg",
                                Name = "thumbnail.jpg",
                                // Embed the Base64 thumbnail directly as a data: URI.
                                ContentUrl = string.Format("data:image/jpeg;base64,{0}", thumbnail)
                            }
                        };
                        await turnContext.SendActivityAsync(reply, cancellationToken: cancellationToken);
                        break;

                    case "printedtext":
                    case "handwrittentext":
                        // Handwritten text needs the Handwritten recognition mode, not Printed.
                        var mode = command == "handwrittentext" ? TextRecognitionMode.Handwritten : TextRecognitionMode.Printed;
                        detectedLines = await helper.ExtractTextAsync(image, mode);
                        var extracted = new StringBuilder("I was able to extract the following text.\n");
                        foreach (Line line in detectedLines)
                        {
                            extracted.AppendFormat("{0}.\n", line.Text);
                        }

                        await turnContext.SendActivityAsync(extracted.ToString(), cancellationToken: cancellationToken);
                        break;
                }

                // Clear out the command, as the task for this command is finished.
                await _accessors.CommandState.DeleteAsync(turnContext, cancellationToken: cancellationToken);
                await _accessors.UserState.SaveChangesAsync(turnContext, cancellationToken: cancellationToken);
                reply = await CreateReplyAsync(turnContext, "Please select an operation and upload the image.");
                await turnContext.SendActivityAsync(reply, cancellationToken: cancellationToken);
            }
            else
            {
                reply = await CreateReplyAsync(turnContext, "Incorrect image.\nPlease select an operation and upload the image.");
                await turnContext.SendActivityAsync(reply, cancellationToken: cancellationToken);
            }
        }
        else
        {
            reply = await CreateReplyAsync(turnContext, "Only image attachments (.jpeg or .png) are supported.\nPlease select an operation and upload the image.");
            await turnContext.SendActivityAsync(reply, cancellationToken: cancellationToken);
        }
    }

    break;
```
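The branching in the message handler comes down to a small decision table over three inputs: the incoming text, the stored command, and the first attachment's content type. The sketch below expresses that table as a pure function so it can be checked without a channel. NextStep and MessageFlow.Decide are hypothetical names. The content-type comparison here is case-insensitive, which the original check is not.

```csharp
using System;

// Hypothetical names for what the message handler does next.
public enum NextStep { AskForCommand, AskForImage, ProcessImage, RejectAttachment }

public static class MessageFlow
{
    // attachmentContentType is null when the message carries no attachment.
    public static NextStep Decide(string text, string storedCommand, string attachmentContentType)
    {
        // Text in this message wins; otherwise fall back to the remembered command.
        string command = !string.IsNullOrEmpty(text) ? text : storedCommand;

        if (attachmentContentType == null)
        {
            return string.IsNullOrEmpty(command) ? NextStep.AskForCommand : NextStep.AskForImage;
        }

        bool isImage =
            string.Equals(attachmentContentType, "image/jpeg", StringComparison.OrdinalIgnoreCase) ||
            string.Equals(attachmentContentType, "image/png", StringComparison.OrdinalIgnoreCase);
        if (!isImage)
        {
            return NextStep.RejectAttachment;
        }

        // An image without a command: the bot code reaches the same outcome indirectly,
        // because no switch case matches and the hero card is sent again.
        return string.IsNullOrEmpty(command) ? NextStep.AskForCommand : NextStep.ProcessImage;
    }
}
```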

The bot code above works in tandem with the Computer Vision SDK helper class to process images as directed by the user.

Testing

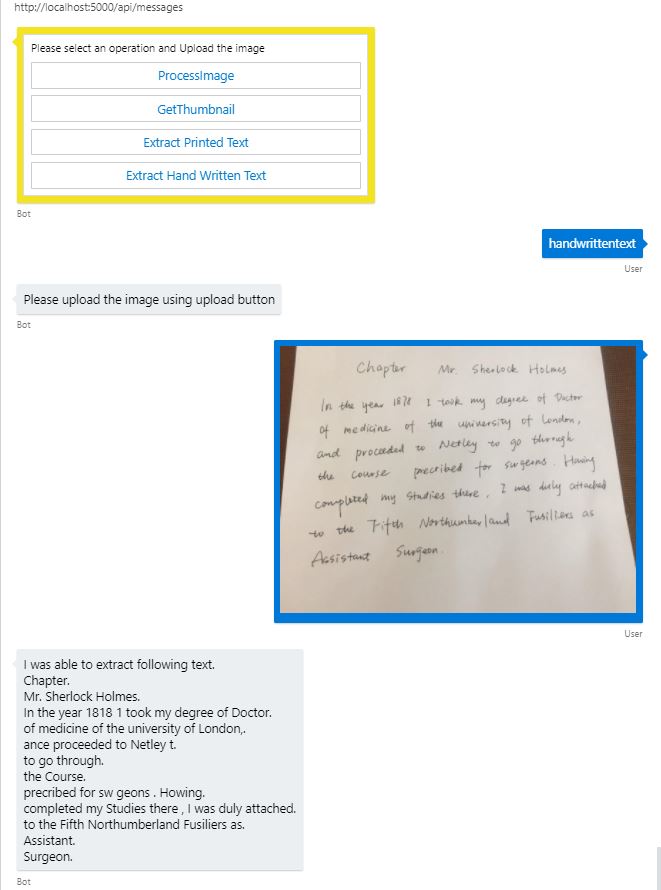

The following are the test results for the various operations.

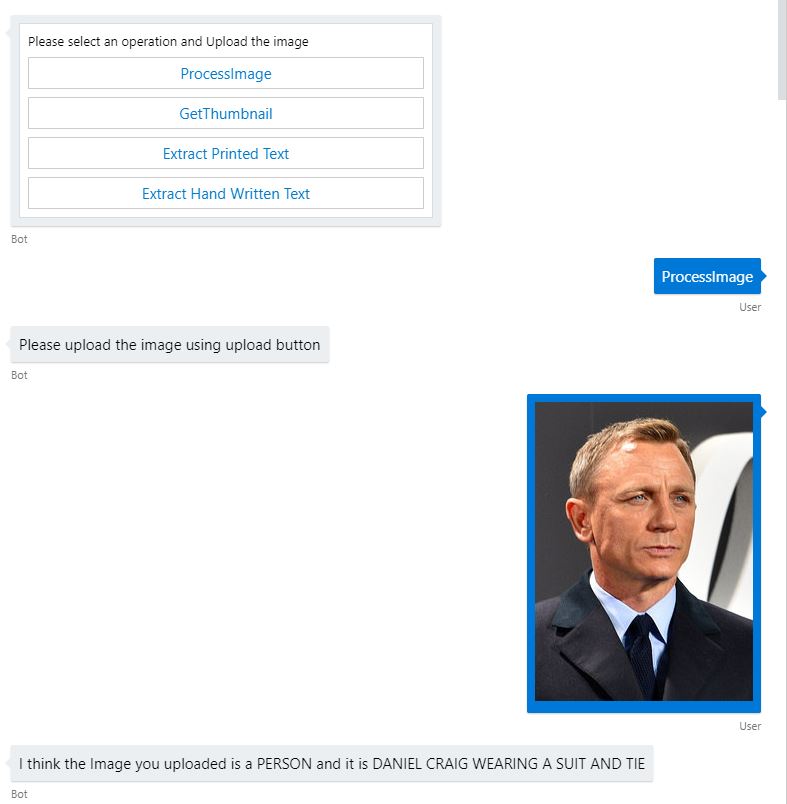

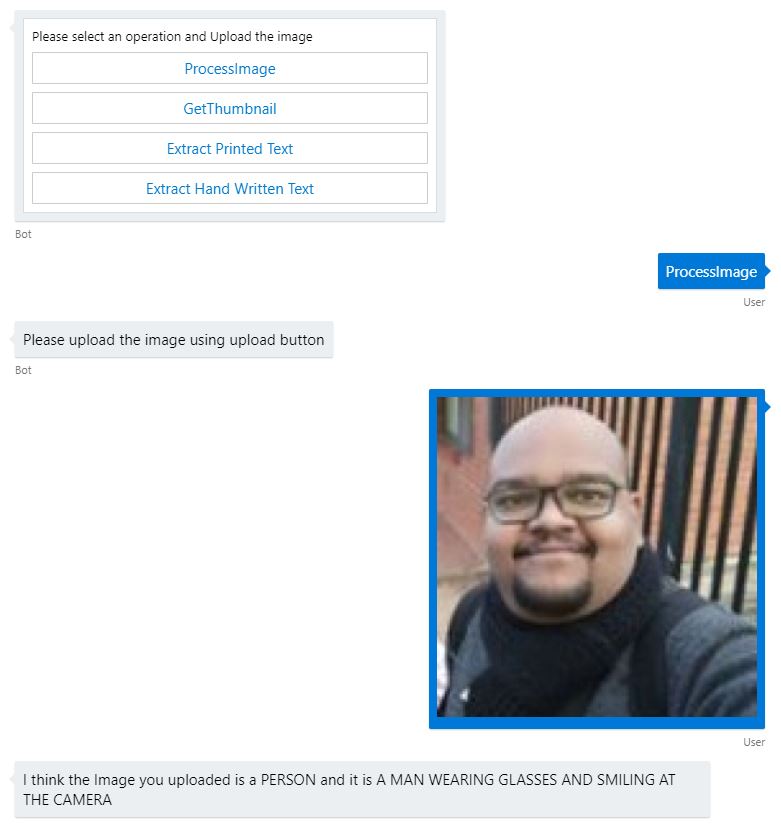

Analyze The Image

The following screenshots show how the bot recognized famous personalities and also deduced the type of image that was used.

Bot recognizes the portrait of Dr APJ Abdul Kalam (11th President of India)

Bot recognizes Daniel Craig (6th actor to play James Bond)

If the person is not famous, the bot shares the attributes of the person in the image instead, as in the following sample.

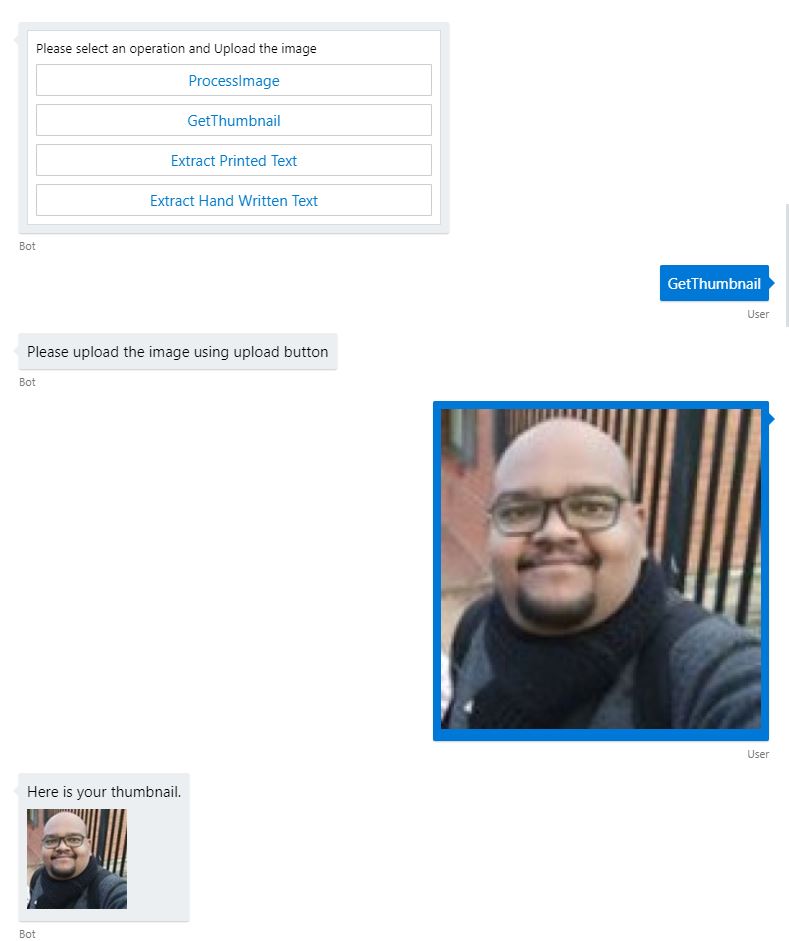

Generate Thumbnail

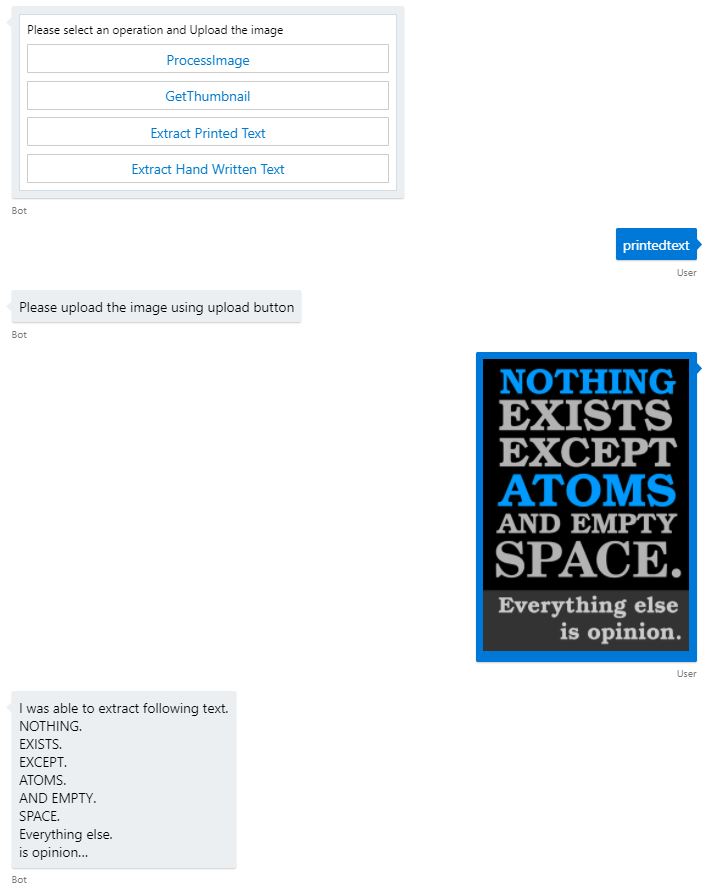

Extract Text

Conclusion

This article described how to create a fun image processing bot using the Microsoft Bot Framework and the Computer Vision SDK.

Working Sample

A working sample of the code discussed in this article can be downloaded from the TechNet Gallery: Image Processing Bot using MS Bot Framework and Computer Vision SDK.

To work with the sample, follow these steps:

- Subscribe to the Computer Vision API from the Azure portal as discussed above, and enter the key and endpoint in appsettings.json.

- Download the bot emulator from Bot Framework Emulator - GitHub.

- Download the sample.

- Open and build the sample and launch it using IIS Express.

- Launch the bot emulator and navigate to the ImageProcessingBot.bot file in the sample.

- Test with the data provided in the sample's TestData folder (other images can be used too).

See Also

The following TechNet Wiki articles discuss the Bot Framework and can be used for additional reading.

- Microsoft Bot Framework: Real Life Example of Authentication Using Azure Bot Service And FitBit Web API

- ASP.NET Core 2.0: Building Weather Assistant Chatbot using Bot Builder SDK v4

- Bot Framework: How to Read Data on Action Buttons of Adaptive Cards in a Chat Bot

Further Reading

The Microsoft Bot Framework is a beautiful framework that makes it easy to create chat bots. That said, it requires a good deal of initial learning, and developers need to invest quality time to understand its concepts and nuances. Readers of this article are strongly encouraged to familiarize themselves with these concepts. The following resources will prove invaluable in the learning process.

- Azure Bot Service Documentation

- Official Bot Framework Blog

- Bot Builder Gitter page (to discuss and ask questions)

To learn more about Adaptive Cards and experiment with them, the following resources will prove useful.

To learn how ASP.NET Core uses its built-in dependency injection, refer to Dependency Injection in ASP.NET Core.

References

The following articles were referred to while writing this article.