Run the MapReduce WordCount Example on HDInsight Using PowerShell

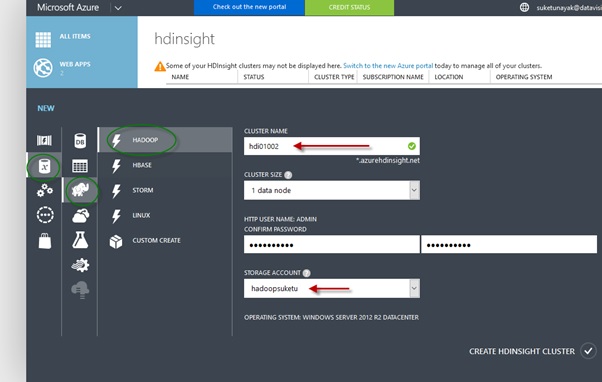

First, create an HDInsight Hadoop cluster in the Microsoft Azure portal. Open the portal with your active subscription, click New, and under the Data Services menu choose HDInsight, then click the Hadoop option. As shown in the screenshot below, enter a cluster name, select the cluster size, and enter a password (the password must combine digits, lowercase and uppercase letters, and a special character). Select the storage account you already created for Hadoop and click the Create HDInsight Cluster button. It takes about 10 to 15 minutes for the Hadoop cluster to start running.

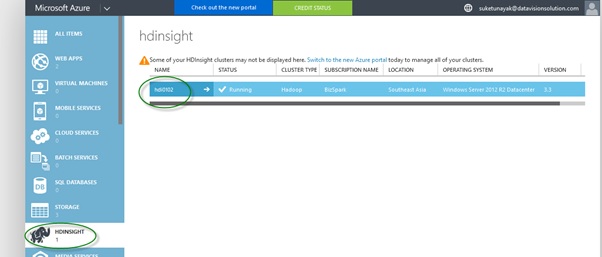
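The password rule above can be checked locally before submitting the form. The following is only a minimal sketch: the function name `Test-ClusterPassword` is hypothetical, it checks only the four character classes mentioned in the text, and it does not cover any further rules the portal may enforce (such as a minimum length).

```powershell
# Hypothetical helper, not part of any Azure module.
# Returns $true when the password contains a digit, a lowercase letter,
# an uppercase letter, and a special character.
function Test-ClusterPassword {
    param([string]$Password)

    # -cmatch is the case-sensitive regex operator, so [a-z] and [A-Z]
    # really distinguish lowercase from uppercase.
    $hasDigit   = $Password -cmatch '[0-9]'
    $hasLower   = $Password -cmatch '[a-z]'
    $hasUpper   = $Password -cmatch '[A-Z]'
    # Assumption: anything that is not an ASCII letter or digit counts as "special".
    $hasSpecial = $Password -cmatch '[^a-zA-Z0-9]'

    return ($hasDigit -and $hasLower -and $hasUpper -and $hasSpecial)
}

# Example calls with made-up passwords
Test-ClusterPassword 'Hadoop#2015'
Test-ClusterPassword 'hadoop2015'
```

The first call satisfies all four checks; the second has no uppercase letter and no special character, so it fails.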

Once the Hadoop cluster has been created successfully, its status is shown as Running, as in the screenshot below.

[Screenshot](resources/25120.22.jpg)

Next, sign in to your Azure account from PowerShell with the following command:

Add-AzureAccount

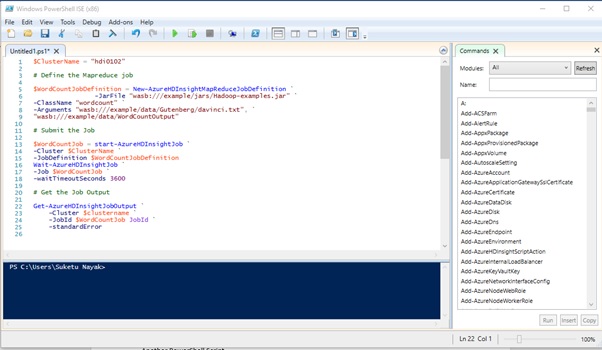

Then create a new .ps1 file and enter the following PowerShell commands. The script connects to the Hadoop cluster and runs a MapReduce job on the novel davinci.txt, using the wordcount class to count the occurrences of each word.

Editor – Untitled1.ps1

$ClusterName = "hdi0102"

# Define the MapReduce job
# (blob names are case-sensitive, so the sample paths are written in lowercase)
$WordCountJobDefinition = New-AzureHDInsightMapReduceJobDefinition `
    -JarFile "wasb:///example/jars/hadoop-examples.jar" `
    -ClassName "wordcount" `
    -Arguments "wasb:///example/data/gutenberg/davinci.txt", `
               "wasb:///example/data/WordCountOutput"

# Submit the job
$WordCountJob = Start-AzureHDInsightJob `
    -Cluster $ClusterName `
    -JobDefinition $WordCountJobDefinition

# Wait for the job to finish (up to one hour)
Wait-AzureHDInsightJob `
    -Job $WordCountJob `
    -WaitTimeoutSeconds 3600

# Get the job output (the standard error stream of the job)
Get-AzureHDInsightJobOutput `
    -Cluster $ClusterName `
    -JobId $WordCountJob.JobId `
    -StandardError

Then run the .ps1 file.

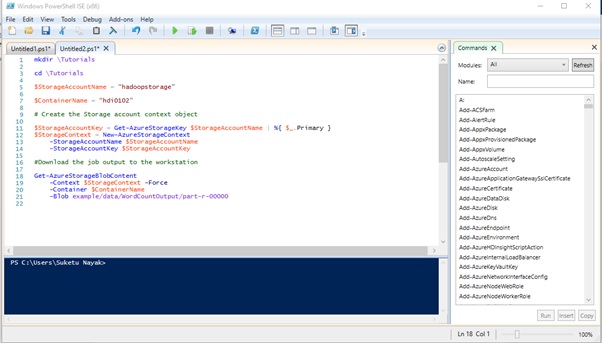
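To make clearer what the wordcount job computes, here is a small local sketch of the same idea in PowerShell. It is not the Hadoop implementation: the function name `Get-WordCount` is hypothetical, everything runs in one process, and it assumes (like the standard Hadoop sample) that words are split on whitespace and counted case-sensitively.

```powershell
# Hypothetical local illustration of word counting; it does not touch the cluster.
function Get-WordCount {
    param([string]$Text)

    # "Map" step: break the text into words on whitespace and drop empty tokens.
    $words = $Text -split '\s+' | Where-Object { $_ -ne '' }

    # "Reduce" step: sum the occurrences per word.
    # A generic Dictionary keeps keys case-sensitive (a @{} hashtable would not).
    $counts = New-Object 'System.Collections.Generic.Dictionary[string,int]'
    foreach ($word in $words) {
        if ($counts.ContainsKey($word)) {
            $counts[$word] += 1
        } else {
            $counts[$word] = 1
        }
    }
    return $counts
}

# Example with a made-up sentence
$result = Get-WordCount 'the cat saw the other cat'
$result
```

On the cluster the same counting is spread over many mappers and reducers, which is why the result ends up in part files such as part-r-00000.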

To get the output data, create another .ps1 file and enter the following PowerShell commands. The script accesses the storage account using its name and access key and downloads the word count results; the output file is named part-r-00000.

Untitled2.ps1

# Work in a local folder for the downloaded results
mkdir \Tutorials
cd \Tutorials

$StorageAccountName = "hadoopstorage"
$ContainerName = "hdi0102"

# Create the storage account context object
$StorageAccountKey = Get-AzureStorageKey $StorageAccountName | %{ $_.Primary }
$StorageContext = New-AzureStorageContext `
    -StorageAccountName $StorageAccountName `
    -StorageAccountKey $StorageAccountKey

# Download the job output to the workstation
Get-AzureStorageBlobContent `
    -Context $StorageContext -Force `
    -Container $ContainerName `
    -Blob example/data/WordCountOutput/part-r-00000

# Show the lines of the output that contain "there"
cat ./example/data/WordCountOutput/part-r-00000 | findstr "there"
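Note that findstr matches substrings, so a search for "there" also returns words such as "therefore". Each line of part-r-00000 is a word and its count separated by a tab, so the file can also be turned into objects and filtered exactly. The sketch below assumes that layout; the function name `ConvertFrom-WordCountLine` and the sample lines are hypothetical stand-ins for the real file contents.

```powershell
# Hypothetical helper: turns "word<TAB>count" lines into objects.
function ConvertFrom-WordCountLine {
    param([string[]]$Line)

    foreach ($entry in $Line) {
        $parts = $entry -split "`t"
        # Skip anything that is not exactly "word<TAB>count".
        if ($parts.Count -eq 2) {
            [pscustomobject]@{
                Word        = $parts[0]
                Occurrences = [int]$parts[1]
            }
        }
    }
}

# Made-up sample lines; with the real file you could pass
# (Get-Content ./example/data/WordCountOutput/part-r-00000) instead.
$sample = @("the`t10", "there`t3", "therefore`t2")
$rows = ConvertFrom-WordCountLine -Line $sample

# Exact, case-sensitive lookup of a single word
$rows | Where-Object { $_.Word -ceq 'there' }

# The two most frequent words in the sample
$rows | Sort-Object Occurrences -Descending | Select-Object -First 2
```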

Run the Untitled2.ps1 file. After that, all of the result data can be loaded into an Excel sheet as follows:

Open Excel and click the Power Query menu.

Click From Other Sources.

Click From HDInsight.

Enter the account name (the storage account).

Enter the account key.

The Navigator pane now appears on the right side; run the query from it to fetch all the data into the Excel sheet.