SharePoint 2010 : Custom BCS connector for Search with Security Trimming, Batching and Incremental Crawling

Introduction / Background

This guide is designed to highlight the solution for enabling security trimming on search results in SharePoint 2010 for external database. It also focuses on large datasets and file system crawling. It provides an overview of the requirement, a solution for the same, and concludes with implementation of the solution suggested. In order to have item level security implemented and search result trimming based on security, we need to have the security descriptor generated for each record and present in the table for each row. BCS is used to pull data and index in SharePoint for implementing search driven applications, we need constant updation of data from the external system. In such systems we need incremental update to work to ensure CRUD operations. If you have documents stored in your External Systems then it is possible using the Business Connectivity Services (BCS) give your end users the ability to access and crawl these artifacts with ease. The Stream Accessor method allows you to pull System.Byte[] data from your External System and make it available from within SharePoint.

Intended Audience

Audience should have a basic understanding of following:

- Microsoft SharePoint Server 2010

- BCS for SharePoint 2010

- Search for SharePoint Server 2010

- Visual Studio 2010 for writing BCS Component

Requirement Statement

This section describes requirement of custom BCS component. Below are features which we are going to achieve by custom BCS connector:-

- Fetch the data from custom SQL database.

- Crawl time item level security trimming.

- Custom Batching for large amount of data.

- Custom incremental crawling.

- fetch data from documents resides in External Systems by use of stream accessor

Trimming search results in CRAWL time is preferred approach, because you can build up an Access Control List (ACL) during the crawl that becomes part of the Search Index.

Solution

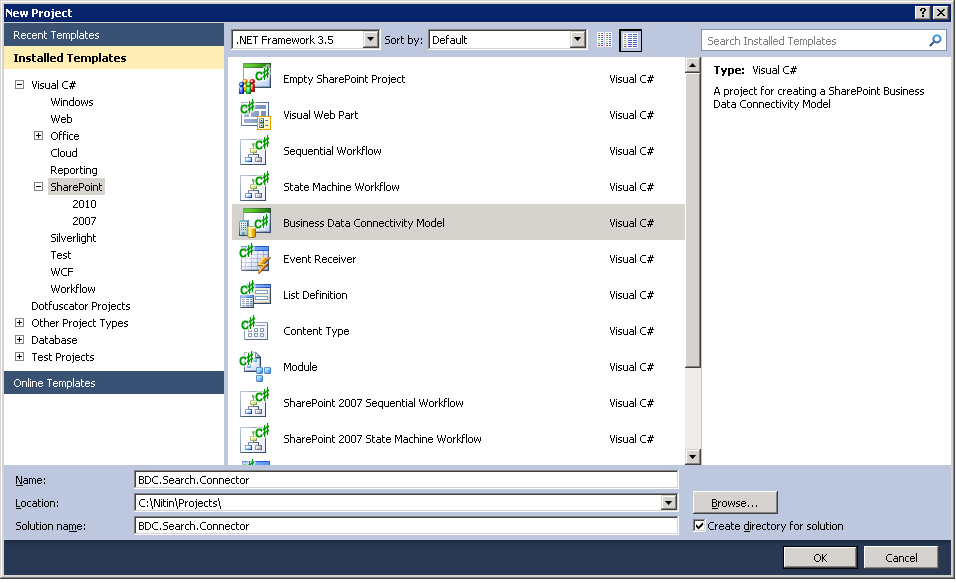

First, create a new empty SharePoint 2010 project in Visual Studio 2010

Create a new project selecting the SharePoint –> 2010 –>Business Data Connectivity Model. Lets name it as BDC.Search.Connector.

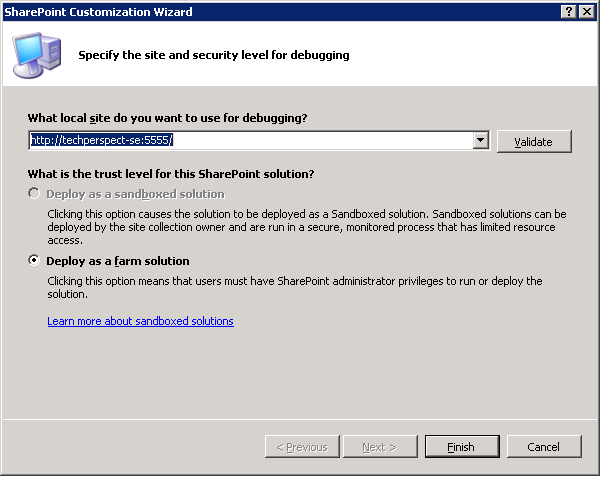

At the SharePoint Customization Wizard screen, select Deploy as a Farm Solution. Be sure to put in the address of your base web application.

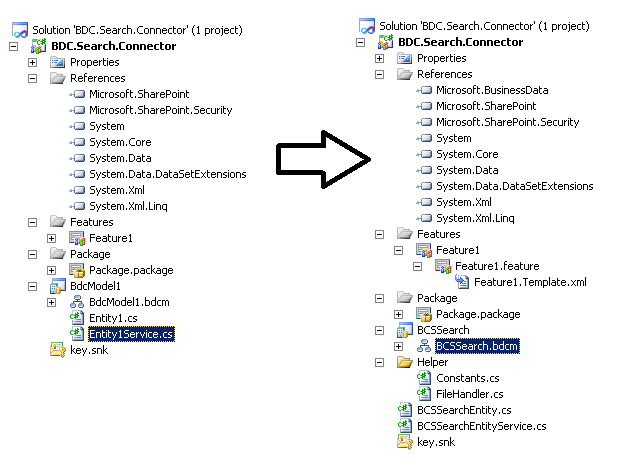

Click Finish. Visual Studio will create the new project. Once complete you should be presented with the model designer surface as below:

By Default VS will create Entity1.cs, Entity1Service.cs and BdcModel1.bdcm files. Rename them according to your naming conventions. For this demo purpose we have renamed them to BCSSearchEntity.cs , BCSSearchEntityService.cs and BCSSearch.bdcm respectively.

Added a reference to business data dll from

C:\Program Files\Common Files\Microsoft Shared\Web Server Extensions\14\ISAPI\Microsoft.BusinessData.dll

Other files also added which are described below in the article.

Create one static class “Constants.cs” which contains all the properties and external DB field name constants.

/// <summary> /// Constants /// </summary> /// <remarks> /// ==================================================================== /// Name: Constants.cs /// /// This static class contains necessary constants that can be changed from one place only /// (except those which have its values in the xml files) /// ==================================================================== /// </remarks> using System; using System.Collections.Generic; using System.Linq; using System.Text;

namespace BCS.Search.Connector.Helper { public static class Constants { //BDCM Properties: mapped with properties tags inside bdc file public const String BDC_CONNECTION_STRING = "ConnectionString"; public const String BDC_BATCH_SIZE = "BatchSize"; public const String BDC_NETWORK_PATH = "NetworkPath";

//External DB Field Name Constants public const String FIELD_ID = "ID"; public const String FIELD_BLOCKEDUSERS = "BlockedUsers"; public const String FIELD_DOCUMENTLINK = "DocumentLink"; public const String FIELD_DATE = "Date"; } } |

Update Entity class “BCSSearchEntity.cs”, code snippet of Entity class as below which contains the properties of entity –

/// <summary> /// BCSSearchEntity /// </summary> /// <remarks> /// ==================================================================== /// Name: BCSSearchEntity.cs /// /// This entity actually maps the BCSSearch DB with SharePoint Metadata created after Crawl /// ==================================================================== /// </remarks> using System; namespace BCS.Search.Connector.BCSSearch { /// <summary> /// This class contains the properties for BCSSearchEntity. The properties keep the data for BCSSearchEntity. /// </summary> public partial class BCSSearchEntity { public int ID { get; set; } public string BlockedUsers { get; set; } public string DocumentText { get; set; } public string DocumentLink { get; set; } public string DocumentFileName { get; set; } public string DocumentExtension { get; set; } public string DocumentMimeType { get; set; } public DateTime Date { get; set; } } } |

Next, let’s update the EntityService class “BCSSearchEntityService.cs”. The sample class created had 2 methods, ReadItem() and ReadList().

These are the two methods needed in an External Content Type in order to consume information in SharePoint. But in order to make use of these functions we need some basic building blocks ready before hand like fetching connection string , page size etc first.

We are storing connection string in BDCM file so that we can change it as per the environment basis .Following code fetches the and connection string property defined in BCSSearch.bdcm file.

/// <summary> /// Property: ConnectionString /// </summary> private String ConnectionString { get { string connectionString = string.Empty; try { INamedPropertyDictionary properties = this.LobSystemInstance.GetProperties(); if (properties.ContainsKey(Constants.BDC_CONNECTION_STRING)) { connectionString = string.Format(Constants.BDC_CONNECTION_STRING); } else { SPDiagnosticsService.Local.WriteTrace(0, new SPDiagnosticsCategory("BCSSearch", TraceSeverity.Unexpected, EventSeverity.Error), TraceSeverity.Unexpected, "Invalid Connection String.", null); } } catch (Exception Ex) { SPDiagnosticsService.Local.WriteTrace(0, new SPDiagnosticsCategory("BCSSearch", TraceSeverity.Unexpected, EventSeverity.Error), TraceSeverity.Unexpected, Ex.Message + Ex.StackTrace, null); } return connectionString; } } |

In our example we are using a predefined UNC path where all our documents are stored and in our database only document names with relative paths are saved, so we need to have a property that should be configurable in nature and hence this property is fetched from BCSSearch.bdcm file as connection string property.

/// <summary> /// Property: NetworkPath /// </summary> private String NetworkPath { get { string networkPath = string.Empty; INamedPropertyDictionary properties = this.LobSystemInstance.GetProperties(); if (properties.ContainsKey(Constants.BDC_NETWORK_PATH)) { networkPath = Convert.ToString(properties[Constants.BDC_NETWORK_PATH]); } else { // logging exception handling } return networkPath; } } |

We are using batching in our code to process data in batches so that large databases can be crawled so batch size property is required from .bdcm file to process in batches while crawling.

/// <summary> /// Property: BatchSize /// </summary> private Int32 BatchSize { get { Int32 batchSize = 1000; INamedPropertyDictionary properties = this.LobSystemInstance.GetProperties(); if (properties.ContainsKey(Constants.BDC_BATCH_SIZE)) { batchSize = Convert.ToInt32(properties[Constants.BDC_BATCH_SIZE]); } if (batchSize == 0) { batchSize = 1000; } return batchSize; } } |

Now lets focus on the methods that we need to start the crawling. “ReadList” method is the initiator that executes first when a FULL CRAWL is started, It gets all items from SQL database by the batch size. See the comments below for detailed understanding.

/// <summary> /// ReadList: gets the listitems by batch size /// <param name="BatchingCookie"></param> /// <param name="HasMoreActivties"></param> /// <returns></returns> public IEnumerable<BCSSearchEntity> ReadList(ref string BatchingCookie, ref System.String HasMoreActivties) { HasMoreActivties = "false"; BCSSearchEntity[] bachedBCSSearchEnteties = null; try { int rowcount; using (SqlConnection SqlConnection = new SqlConnection(ConnectionString)) { SqlConnection.Open(); SqlCommand SqlCommand = new SqlCommand("GetFullCrawlData", SqlConnection); SqlCommand.CommandType = CommandType.StoredProcedure; SqlCommand.Parameters.Add("@ID", SqlDbType.BigInt).Value = BatchingCookie; SqlCommand.Parameters.Add("@COUNT", SqlDbType.BigInt).Value = BatchSize; SqlDataAdapter da = new SqlDataAdapter(SqlCommand); DataSet ds = new DataSet(); da.Fill(ds);

rowcount = ds.Tables[0].Rows.Count; if (rowcount > BatchSize) { HasMoreActivties = "true"; // gets last item id for batching to start next batch process. This batching cookie is permenantly stored in BDCM file and passed from there only as a reference parameter which is updated here BatchingCookie = Convert.ToString(ds.Tables[0].Rows[rowcount - 1]["ID"]); rowcount = BatchSize; }

//now we need not to process the last record as it will be coming in next batch also bachedBCSSearchEnteties = new BCSSearchEntity[rowcount]; for (int i = 0; i < rowcount; i++) { bachedBCSSearchEnteties[i] = FillEntity(ds.Tables[0].Rows[i], NetworkPath); } } } catch (Exception ex) { // logging exception handling } return bachedBCSSearchEnteties; } |

Once we have the batched data ready to process, Engine requires “ReadItem” method to fetch specific item based on queried key from SQL database.

/// <summary> /// This is a sample specific finder method for BCSSearchEntity. /// If you want to delete or rename the method think about changing the xml in the BDC model file as well. /// </summary> /// <param name="key"></param> /// <returns>BCSSearchEntity</returns> public BCSSearchEntity ReadItem(Int32 ID) { BCSSearchEntity BCSSearchEntity = new BCSSearchEntity(); try { using (SqlConnection SqlConnection = new SqlConnection(ConnectionString)) { SqlConnection.Open(); SqlCommand SqlCommand = new SqlCommand("GetCrawlDataByID", SqlConnection); SqlCommand.CommandType = CommandType.StoredProcedure; SqlCommand.Parameters.Add("@ID", SqlDbType.BigInt).Value = ID; SqlDataAdapter da = new SqlDataAdapter(SqlCommand); DataSet ds = new DataSet(); da.Fill(ds);

if (ds.Tables[0].Rows.Count > 0) { BCSSearchEntity = FillEntity(ds.Tables[0].Rows[0], NetworkPath); } } } catch (Exception ex) { // logging exception handling } return BCSSearchEntity; } |

In order to have item level security implemented and search result trimming based on security, we need to have the security descriptor generated for each record and present in the table for each row. It checks if there is any user who doesn't have access on a particular record then it blocks that user based on the ACLs. There is a limit of 64KB to store ACL values as this limit is posed by windows server and applied to every where. If you are getting any exception on this function then make sure that this limit is not exceeded. In order to overcome this problem one has to create AD Groups and use them for blocking access in case lots of users needs to be blocked.

public byte[] ReadSecurityDescriptor(Int32 ID, string User) { byte[] secDesc = null; try { string userLogins = string.Empty; INamedPropertyDictionary properties = this.LobSystemInstance.GetProperties(); using (SqlConnection SqlConnection = new SqlConnection(ConnectionString)) { SqlConnection.Open(); SqlCommand SqlCommand = new SqlCommand("GetAclByID", SqlConnection); SqlCommand.CommandType = CommandType.StoredProcedure; SqlCommand.Parameters.Add("@ID", SqlDbType.BigInt).Value = ID; SqlDataAdapter da = new SqlDataAdapter(SqlCommand); DataSet ds = new DataSet(); da.Fill(ds);

if (ds.Tables[0].Rows.Count > 0) { userLogins = ds.Tables[Constants.FIELD_BLOCKEDUSERS].ToString(); } }

if (!string.IsNullOrEmpty(userLogins)) { string[] users = userLogins.Split(",".ToCharArray(), StringSplitOptions.RemoveEmptyEntries); NTAccount oAcc = new NTAccount(User.Split('\\')[0], User.Split('\\')[1]);

// Creates a Security Identifier for the currently logged on user who is crawling ; DefaultContentAccess account SecurityIdentifier crawlerUserIdentity = (SecurityIdentifier)oAcc.Translate(typeof(SecurityIdentifier));

// Creates a Security descriptor to build the ACL by keeping the Crawling user as Owner (SecurityIdentifier) of the ACL

CommonSecurityDescriptor csd = new CommonSecurityDescriptor(false, false, ControlFlags.None, crawlerUserIdentity, null, null, null);

//access to all SecurityIdentifier everyone = new SecurityIdentifier(WellKnownSidType.WorldSid, null); csd.DiscretionaryAcl.AddAccess(AccessControlType.Allow, everyone, unchecked((int)0xffffffffL), InheritanceFlags.None, PropagationFlags.None);

//deny access to blocked users

for (int i = 0; i < users.Count(); i++) { string user = users[i]; string[] splitedUser = user.Split('\\'); if (splitedUser.Length == 2) { NTAccount ntAcc = new NTAccount(splitedUser[0], splitedUser[1]); if (securityID(ntAcc) != null) { //deny access to blocked users csd.DiscretionaryAcl.RemoveAccess(AccessControlType.Allow, securityID(ntAcc), unchecked((int)0xffffffffL), InheritanceFlags.None, PropagationFlags.None); } } } secDesc = new byte[csd.BinaryLength]; csd.GetBinaryForm(secDesc, 0); } } catch (Exception ex) { // logging exception handling } return secDesc; }

// Security Identifier method private SecurityIdentifier securityID(NTAccount ntAcc) { SecurityIdentifier Sid = null; try { Sid = (SecurityIdentifier)ntAcc.Translate(typeof(SecurityIdentifier));

} catch //(IdentityNotMappedException ex) { Sid = null; } return Sid; } |

For incremental crawling we need two methods. One for the changed record set and other for the deleted record set.

“GetChangedIds” Returns an enumerator of Int32. It contains the modified items. LastCrawlDate indicates time that will be used as reference for this crawl for finding the modified items.

/// <summary> /// /// </summary> /// <param name="lastCrawlDate"></param> /// <returns></returns> public IEnumerable<Int32> GetChangedIds(ref DateTime lastCrawlDate) { List<Int32> changedEntities = new List<Int32>(); try {

using (SqlConnection SqlConnection = new SqlConnection(ConnectionString)) { SqlConnection.Open(); SqlCommand SqlCommand = new SqlCommand("GetUpdatedCrawlData", SqlConnection); SqlCommand.CommandType = CommandType.StoredProcedure; SqlCommand.Parameters.Add("@date", SqlDbType.DateTime).Value = lastCrawlDate; SqlDataAdapter da = new SqlDataAdapter(SqlCommand); DataSet ds = new DataSet(); da.Fill(ds); foreach (DataRow dr in ds.Tables[0].Rows) { changedEntities.Add(Convert.ToInt32(dr[Constants.FIELD_ID])); } }

//now we need to set the last crawl date lastCrawlDate = DateTime.Now; } catch (Exception ex) { // logging exception handling } return changedEntities; } |

“GetDeletdIds” Returns an enumerator of Int32. It contains the deleted items. LastCrawlDate indicates time that will be used as reference for this crawl for finding the deleted items. We have used a field to store the deleted ids in database only and have not actually deleted the records. If this is not the case in your implementation then you can use a trigger to store the deleted records in other table and make use of the method to identify the deleted ids since last crawl time. Logic can vary based on the business rules and actual implementation.

/// <summary> /// /// </summary> /// <param name="lastCrawlDate"></param> /// <returns></returns> public IEnumerable<Int32> GetDeletedIds(ref DateTime lastCrawlDate) { List<Int32> changedEntities = new List<Int32>(); try { using (SqlConnection SqlConnection = new SqlConnection(ConnectionString)) { SqlConnection.Open(); SqlCommand SqlCommand = new SqlCommand("GetDeletedCrawlData", SqlConnection); SqlCommand.CommandType = CommandType.StoredProcedure; SqlCommand.Parameters.Add("@date", SqlDbType.DateTime).Value = lastCrawlDate; SqlDataAdapter da = new SqlDataAdapter(SqlCommand); DataSet ds = new DataSet(); da.Fill(ds); foreach (DataRow dr in ds.Tables[0].Rows) { changedEntities.Add(Convert.ToInt32(dr[Constants.FIELD_ID])); } //now we need to set the last crawl date lastCrawlDate = DateTime.Now; } } catch (Exception ex) { // logging exception handling }

return changedEntities; } |

**ReadFile **method is needed in case you need to read file from file system. ID is the unique identifier of the database row. It merges the network path with the database stored name and then read its content to get it crawled from file system. Make sure that the app pool account of the BCS Service has read access to this network path.

/// <summary> /// returns the stream for the column name specified /// </summary> /// <param name="key"></param> /// <param name="column"></param> /// <returns></returns> private Stream ReadFile(Int32 ID, string column) { Stream stream = new MemoryStream(); string link; string path = string.Empty; try { INamedPropertyDictionary properties = this.LobSystemInstance.GetProperties(); using (SqlConnection SqlConnection = new SqlConnection(ConnectionString)) { SqlConnection.Open(); SqlCommand SqlCommand = new SqlCommand("GetCrawlDataByID", SqlConnection); SqlCommand.CommandType = CommandType.StoredProcedure; SqlCommand.Parameters.Add("@ID", SqlDbType.BigInt).Value = ID; SqlDataAdapter da = new SqlDataAdapter(SqlCommand); DataSet ds = new DataSet(); da.Fill(ds);

if (ds.Tables[0].Rows.Count > 0) { link = Convert.ToString(ds.Tables[0].Rows[0][column]); if (!string.IsNullOrEmpty(link)) { //now we need to parse this document link path = FileHandler.GetPath(link, NetworkPath); if (!string.IsNullOrEmpty(path)) { stream = new FileStream(path, FileMode.Open); } } } } } catch (Exception ex) { // logging exception handling } return stream; }

/// <summary> /// Reads the file from file system and returns the stream /// </summary> /// <param name="key"></param> /// <returns>Stream</returns> public Stream ReadDocumentLink(Int32 ID) { return ReadFile(ID, Constants.FIELD_DOCUMENTLINK); } |

“FillEntity” private method fills the entity from SQL database row.

/// <summary> /// Fill BCSSearch entity /// </summary> /// <param name="dr"></param> /// <param name="NetworkPath"></param> /// <returns></returns> private BCSSearchEntity FillEntity(DataRow dr, string NetworkPath) { BCSSearchEntity = new BCSSearchEntity(); try { BCSSearchEntity.ID =Convert.ToInt32(dr[Constants.FIELD_ID]); BCSSearchEntity.BlockedUsers =Convert.ToString(dr[Constants.FIELD_BLOCKEDUSERS]); BCSSearchEntity.DocumentText =FileHandler.GetTitle(Convert.ToString(dr[Constants.FIELD_DOCUMENTLINK])); string path =FileHandler.GetPath(Convert.ToString(dr[Constants.FIELD_DOCUMENTLINK]), NetworkPath);

if (!string.IsNullOrEmpty(path)) { BCSSearchEntity.DocumentLink = path; BCSSearchEntity.DocumentFileName = FileHandler.GetFileName(path); BCSSearchEntity.DocumentExtension =FileHandler.GetFileExtension(path); BCSSearchEntity.DocumentMimeType =FileHandler.GetMimeType(BCSSearchEntity.DocumentExtension); } BCSSearchEntity.Date = Convert.ToDateTime(dr[Constants.FIELD_DATE]); } catch (Exception ex) { // logging exception handling } return BCSSearchEntity; } |

Create one FileHandler.cs class handling document file operations.

“GetTitle” method returns the title of document.

/// <summary> /// Get the title of file /// </summary> /// <param name="value"></param> /// <returns></returns> internal static string GetTitle(string value) { string returnValue = string.Empty; try { if (!string.IsNullOrEmpty(value) && value != "empty") { if (value.IndexOf("#") > -1) { returnValue = value.Substring(0, value.LastIndexOf("#")); } } } catch (Exception ex) { // logging exception handling } return returnValue; } |

“GetPath” method returns path of document.

/// <summary> /// Get the path of the file /// </summary> /// <param name="value"></param> /// <param name="NetworkPath"></param> /// <returns></returns> internal static string GetPath(string value, string NetworkPath) { string returnValue = string.Empty; try { if (!string.IsNullOrEmpty(value) && !string.IsNullOrEmpty(NetworkPath)) { returnValue = NetworkPath + value; } } catch (Exception ex) { SPDiagnosticsService.Local.WriteTrace(0, new SPDiagnosticsCategory("BCSSearch", TraceSeverity.Unexpected, EventSeverity.Error), TraceSeverity.Unexpected, ex.Message + ex.StackTrace, null); }

return returnValue; } |

“GetFileName” method returns document file name.

/// <summary> /// Get file name /// </summary> /// <param name="path"></param> /// <returns></returns> internal static string GetFileName(string path) { string returnValue = string.Empty; try { returnValue = Path.GetFileName(path); } catch (Exception ex) { // logging exception handling } return returnValue; } |

“GetFileExtension” method returns file extension.

/// <summary> /// Get File Extension /// </summary> /// <param name="path"></param> /// <returns></returns> internal static string GetFileExtension(string path) { string returnValue = string.Empty; try { returnValue = Path.GetExtension(path).TrimStart('.'); } catch (Exception ex) { // logging exception handling } return returnValue; } |

“GetMimeType” method returns document mime type. You can add and remove the file mime types based on your business requirements.

/// <summary> /// Get mime type of file /// </summary> /// <param name="extension"></param> /// <returns></returns> internal static string GetMimeType(string extension) { switch (extension.ToLower()) { case "doc": return "application/msword"; case "docx": return "application/vnd.openxmlformats-officedocument.wordprocessingml.document"; case "dotm": return "application/vnd.ms-word.template.macroenabled.12"; case "xls": return "application/vnd.ms-excel"; case "xlsx": return "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet"; case "pdf": return "application/pdf"; case "ppt": return "application/vnd.ms-powerpoint"; case "pptx": return "application/vnd.openxmlformats-officedocument.presentationml.presentation"; case "xhtml": return "application/xhtml+xml"; case "jpg": case "jpeg": return "image/jpeg"; case "gif": return "image/gif"; case "png": return "image/png"; case "txt": return "text/plain"; case "mdb": return "application/x-msaccess"; case "xps": return "application/vnd.ms-xpsdocument"; case "eml": return "message/rfc822"; case "zip": return "application/zip, application/octet-stream"; case "rar": return "application/x-rar-compressed, application/octet-stream"; case "docm": return "application/vnd.ms-word.document.macroenabled.12"; case "dotx": return "application/vnd.openxmlformats-officedocument.wordprocessingml.template"; case "mht": case "mhtml": case "nws": return "message/rfc822"; case "odp": return "application/vnd.oasis.opendocument.presentation"; case "ods": return "application/vnd.oasis.opendocument.spreadsheet"; case "odt": return "application/vnd.oasis.opendocument.text"; case "ppsx": return "application/vnd.openxmlformats-officedocument.presentationml.slideshow"; case "pptm": return "application/vnd.ms-powerpoint.presentation.macroenabled.12"; case "tif": return "image/tif"; case "tiff": return "image/tiff"; case "wpd": return "application/vnd.wordperfect"; case "xlsb": return "application/vnd.ms-excel.sheet.binary.macroenabled.12"; case "xlsm": return "application/vnd.ms-excel.sheet.macroenabled.12"; case "xml": return "application/xml"; default: return string.Empty; } } |

Business Data Catalog Model

Following BDCM file is being used in the article prepration :-

<?xml version="1.0" encoding="utf-8"?> <Model xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns="http://schemas.microsoft.com/windows/2007/BusinessDataCatalog" Name="BCSSearch"> <LobSystems> <LobSystem Name="BCSSearch" Type="DotNetAssembly"> <LobSystemInstances> <LobSystemInstance Name="BCSSearch"> <Properties> <Property Name="ShowInSearchUI" Type="System.Boolean">true</Property> <Property Name="ConnectionString" Type="System.String">{ConnectionString}</Property> <Property Name="BatchSize" Type="System.Int32">20000</Property> <Property Name="NetworkPath" Type="System.String">{NetworkPath}</Property> </Properties> </LobSystemInstance> </LobSystemInstances> <Entities> <Entity Name="BCSSearchEntity" Namespace="BCS.Search.Connector.BCSSearch" EstimatedInstanceCount="1000" Version="1.0.0.15" DefaultDisplayName="BCSSearchEntity"> <Properties> <Property Name="Class" Type="System.String">BCS.Search.Connector.BCSSearch.BCSSearchEntityService, BCSSearch</Property> <Property Name="Title" Type="System.String">Title</Property> </Properties> <Identifiers> <Identifier Name="ID" TypeName="System.Int32" /> </Identifiers> <Methods> <!-- start finder method --> <Method Name="ReadList" LobName="ReadList" IsStatic="false" IsCached="true"> <Properties> <Property Name="RootFinder" Type="System.String"> </Property> <Property Name="UseClientCachingForSearch" Type="System.String"> </Property> </Properties> <FilterDescriptors> <FilterDescriptor Name="BatchingStateCookie" Type="Batching"> <Properties> <Property Name="BatchingCookie" Type="System.String"> </Property> </Properties> </FilterDescriptor> <FilterDescriptor Name="HasMoreBatches" Type="BatchingTermination"> <Properties> <Property Name="CaseSensitive" Type="System.Boolean">false</Property> <Property Name="Comparator" Type="System.String">!=</Property> <Property Name="TerminationValue" Type="System.String">true</Property> </Properties> </FilterDescriptor> </FilterDescriptors> <Parameters> <Parameter Name="BatchingCookie" Direction="InOut"> <TypeDescriptor Name="BatchingCookieTypeDescriptor" TypeName="System.String" AssociatedFilter="BatchingStateCookie"> <DefaultValues> <DefaultValue MethodInstanceName="ReadList" Type="System.String">0</DefaultValue> </DefaultValues> </TypeDescriptor> </Parameter> <Parameter Name="HasMoreActivties" Direction="InOut"> <TypeDescriptor Name="HasMoreActivtiesTypeDescriptor" TypeName="System.String" AssociatedFilter="HasMoreBatches"> <DefaultValues> <DefaultValue MethodInstanceName="ReadList" Type="System.String">true</DefaultValue> </DefaultValues> </TypeDescriptor> </Parameter> <Parameter Direction="Return" Name="returnParameter"> <TypeDescriptor TypeName="System.Collections.Generic.IEnumerable`1[[BCS.Search.Connector.BCSSearch.BCSSearchEntity, BCSSearch]]" IsCollection="true" Name="BCSSearchEntityList"> <TypeDescriptors> <TypeDescriptor TypeName="BCS.Search.Connector.BCSSearch.BCSSearchEntity, BCSSearch" Name="BCSSearchEntity"> <TypeDescriptors> <TypeDescriptor TypeName="System.Int32" IdentifierName="ID" Name="ID" /> <TypeDescriptor Name="DocumentText" TypeName="System.String" /> <TypeDescriptor Name="DocumentLink" TypeName="System.String" /> <TypeDescriptor Name="DocumentFileName" TypeName="System.String" /> <TypeDescriptor Name="DocumentExtension" TypeName="System.String" /> <TypeDescriptor Name="DocumentMimeType" TypeName="System.String" /> <TypeDescriptor Name="Date" TypeName="System.DateTime" IsCollection="false"> <Interpretation> <NormalizeDateTime LobDateTimeMode="Local" /> </Interpretation> </TypeDescriptor> </TypeDescriptors> </TypeDescriptor> </TypeDescriptors> </TypeDescriptor> </Parameter> </Parameters> <MethodInstances> <MethodInstance Type="Finder" ReturnParameterName="returnParameter" Default="true" Name="ReadList" DefaultDisplayName="BCSSearchEntity List"> <Properties> <Property Name="RootFinder" Type="System.String"> </Property> <Property Name="UseClientCachingForSearch" Type="System.String"> </Property> </Properties> </MethodInstance> </MethodInstances> </Method> <!-- end finder method --> <!-- start specific finder method --> <Method Name="ReadItem" LobName="ReadItem" IsStatic="false" IsCached="true"> <Properties> <Property Name="UseClientCachingForSearch" Type="System.String"> </Property> </Properties> <Parameters> <Parameter Direction="In" Name="@ID"> <TypeDescriptor TypeName="System.Int32" Name="ID" IdentifierName="ID" /> </Parameter> <Parameter Direction="Return" Name="returnParameter"> <TypeDescriptor TypeName="BCS.Search.Connector.BCSSearch.BCSSearchEntity, BCSSearch" Name="BCSSearchEntity"> <TypeDescriptors> <TypeDescriptor TypeName="System.Int32" IdentifierName="ID" Name="ID" /> <TypeDescriptor Name="DocumentText" TypeName="System.String" /> <TypeDescriptor Name="DocumentLink" TypeName="System.String" /> <TypeDescriptor Name="DocumentFileName" TypeName="System.String" /> <TypeDescriptor Name="DocumentExtension" TypeName="System.String" /> <TypeDescriptor Name="DocumentMimeType" TypeName="System.String" /> <TypeDescriptor Name="Date" TypeName="System.DateTime" IsCollection="false"> <Interpretation> <NormalizeDateTime LobDateTimeMode="Local" /> </Interpretation> </TypeDescriptor> </TypeDescriptors> </TypeDescriptor> </Parameter> </Parameters> <MethodInstances> <MethodInstance Type="SpecificFinder" ReturnParameterName="returnParameter" Default="true" Name="ReadItem" DefaultDisplayName="Read BCSSearchEntity"> <Properties> <Property Name="UseClientCachingForSearch" Type="System.String"> </Property> </Properties> </MethodInstance> </MethodInstances> </Method> <!-- end specific finder method -->

<!-- start document StreamAccessor method --> <Method Name="ReadDocumentLink" IsStatic="false" LobName="ReadDocumentLink" IsCached="true"> <Parameters> <Parameter Direction="In" Name="@ID"> <TypeDescriptor TypeName="System.Int32" IdentifierName="ID" Name="ID" /> </Parameter> <Parameter Name="StreamData" Direction="Return"> <TypeDescriptor Name="StreamData" TypeName="System.IO.Stream" /> </Parameter> </Parameters> <MethodInstances> <MethodInstance Type="StreamAccessor" ReturnParameterName="StreamData" ReturnTypeDescriptorPath="StreamData" Default="true" Name="MainDataStream" DefaultDisplayName="Document"> <Properties> <Property Name="MimeTypeField" Type="System.String">DocumentMimeType</Property> <Property Name="FileNameField" Type="System.String">DocumentFileName</Property> <Property Name="Extension" Type="System.String">DocumentExtension</Property> </Properties> </MethodInstance> </MethodInstances> </Method> <!-- end document StreamAccessor method -->

<Method Name="GetChangedIds" IsStatic="false" LobName="GetChangedIds"> <FilterDescriptors> <FilterDescriptor Name="LastCrawl" Type="InputOutput"> <Properties> <Property Name="SynchronizationCookie" Type="System.String">ChangedItemCookie</Property> </Properties> </FilterDescriptor> <FilterDescriptor Name="timestamp" Type="Timestamp" /> </FilterDescriptors> <Parameters> <Parameter Name="lastCrawlDate" Direction="InOut"> <TypeDescriptor Name="LastCrawlDate" TypeName="System.DateTime" IsCollection="false" AssociatedFilter="LastCrawl"> <Interpretation> <NormalizeDateTime LobDateTimeMode="Local" /> </Interpretation> </TypeDescriptor> </Parameter> <Parameter Name="returnIds" Direction="Return"> <TypeDescriptor Name="returnIds" TypeName="System.Collections.Generic.IEnumerable`1[System.Int32]" IsCollection="true"> <TypeDescriptors> <TypeDescriptor TypeName="System.Int32" IdentifierName="ID" Name="ID" /> </TypeDescriptors> </TypeDescriptor> </Parameter> </Parameters> <MethodInstances> <MethodInstance Name="ReadIncrementalListInstance" Type="ChangedIdEnumerator" ReturnParameterName="returnIds"> </MethodInstance> </MethodInstances> </Method>

<Method Name="GetDeletedIds" IsStatic="false" LobName="GetDeletedIds"> <FilterDescriptors> <FilterDescriptor Name="LastCrawl" Type="InputOutput"> <Properties> <Property Name="SynchronizationCookie" Type="System.String">DeletedItemCookie</Property> </Properties> </FilterDescriptor> <FilterDescriptor Name="timestamp" Type="Timestamp" /> </FilterDescriptors> <Parameters> <Parameter Name="lastCrawlDate" Direction="InOut"> <TypeDescriptor Name="LastCrawlDate" TypeName="System.DateTime" IsCollection="false" AssociatedFilter="LastCrawl"> <Interpretation> <NormalizeDateTime LobDateTimeMode="Local" /> </Interpretation> </TypeDescriptor> </Parameter> <Parameter Name="DeletedIds" Direction="Return"> <TypeDescriptor Name="DeletedIds" TypeName="System.Collections.Generic.IEnumerable`1[System.Int32]" IsCollection="true"> <TypeDescriptors> <TypeDescriptor TypeName="System.Int32" IdentifierName="ID" Name="ID" /> </TypeDescriptors> </TypeDescriptor> </Parameter> </Parameters> <MethodInstances> <MethodInstance Name="GetDeletedIdsInstance" Type="DeletedIdEnumerator" ReturnParameterName="DeletedIds"> </MethodInstance> </MethodInstances> </Method>

<!-- SecurityDescriptor method applies the crawl time security --> <Method Name="ReadSecurityDescriptor" IsStatic="false" LobName="ReadSecurityDescriptor"> <FilterDescriptors> <FilterDescriptor Name="UserFilter" Type="UserContext" /> </FilterDescriptors> <Parameters> <Parameter Name="Id" Direction="In"> <TypeDescriptor Name="IdTypeDescriptor" TypeName="System.Int32" IdentifierName="ID" /> </Parameter> <Parameter Name="User" Direction="In"> <TypeDescriptor Name="CurrentUser" TypeName="System.String" AssociatedFilter="UserFilter" /> </Parameter> <Parameter Name="Acl" Direction="Return"> <TypeDescriptor Name="SecurityDescriptor" TypeName="System.Collections.Generic.IEnumerable`1[System.Byte]" IsCollection="true"> <TypeDescriptors> <TypeDescriptor Name="TypeDescriptor" TypeName="System.Byte" IsCollection="false" /> </TypeDescriptors> </TypeDescriptor> </Parameter> </Parameters> <MethodInstances> <MethodInstance Name="ReadSecurityDescriptorInstance" Type="BinarySecurityDescriptorAccessor" ReturnParameterName="Acl" /> </MethodInstances> </Method> </Methods> </Entity> </Entities> </LobSystem> </LobSystems> </Model> |

Database

Following is the DB script that is used for the database schema generation. This script generates the tables, stored procedures. Data needs to be populated as per the actual requirement and database schema can be changed basis on the business need

GO /****** Object: Table [dbo].[SearchData] ******/ SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO SET ANSI_PADDING ON GO CREATE TABLE [dbo].[SearchData]( [ID] [int] IDENTITY(1,1) NOT NULL, [DocumentLink] [nvarchar](512) NULL, [BlockedUsers] [nvarchar](512) NULL, [Date] [datetime] NOT NULL, [Deleted] [bit] NULL DEFAULT 0, CONSTRAINT [PK_SearchData] PRIMARY KEY CLUSTERED ( [ID] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY] ) ON [PRIMARY] GO SET ANSI_PADDING OFF GO

/****** Object: StoredProcedure [dbo].[GetFullCrawlData] ******/ SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO -- ============================================= -- Description: Returns crawl data based on id and count -- ============================================= CREATE PROCEDURE [dbo].[GetFullCrawlData] @ID int = 0, @COUNT int = 1000 AS BEGIN -- SET NOCOUNT ON added to prevent extra result sets from -- interfering with SELECT statements. SET NOCOUNT ON; select top(@count + 1) * from SearchData where [ID] >= @ID AND Deleted = 0 END GO

/****** Object: StoredProcedure [dbo].[GetCrawlDataByID] ******/ SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO -- ============================================= -- Description: Returns crawl row based on id -- ============================================= CREATE PROCEDURE [dbo].[GetCrawlDataByID] @ID int = 1 AS BEGIN -- SET NOCOUNT ON added to prevent extra result sets from -- interfering with SELECT statements. SET NOCOUNT ON; select * from SearchData where [ID] = @ID AND Deleted = 0 END GO

-- ============================================= -- Description: Returns Acl User based on id -- ============================================= CREATE PROCEDURE [dbo].[GetAclByID] @ID int = 1 AS BEGIN -- SET NOCOUNT ON added to prevent extra result sets from -- interfering with SELECT statements. SET NOCOUNT ON; select BlockedUsers from SearchData where [ID] = @ID AND Deleted = 0 END GO

/****** Object: StoredProcedure [dbo].[GetUpdatedCrawlData] ******/ SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO -- ============================================= -- Description: Returns crawl data based on id and count -- ============================================= CREATE PROCEDURE [dbo].[GetUpdatedCrawlData] @date datetime AS BEGIN -- SET NOCOUNT ON added to prevent extra result sets from -- interfering with SELECT statements. SET NOCOUNT ON; select * from SearchData where [Date] > @date AND Deleted = 0 END GO

/****** Object: StoredProcedure [dbo].[GetDeletedCrawlData] ******/ SET ANSI_NULLS ON GO SET QUOTED_IDENTIFIER ON GO -- ============================================== -- Description: Returns crawl data based on id and count -- ============================================= CREATE PROCEDURE [dbo].[GetDeletedCrawlData] @date datetime AS BEGIN -- SET NOCOUNT ON added to prevent extra result sets from -- interfering with SELECT statements. SET NOCOUNT ON; select * from SearchData where [Date] >= @date AND Deleted = 1 END GO |

After successful Project build deploy this connector component on SharePoint environment to get crawled data from custom database available for search. below are the steps mentioned for deployment.

Deployment Steps

List of High Level steps listed below:-

1. Deploying Business Data Catalog Model File (.bdcm)

2. Set Permission on external content type.

3. Create Line of Business Content source under Search Service.

4. Configure Search Scope.

5. Configure Search Settings.

Please note: We assume that SharePoint Enterprise/Fast search is already configured.

1. Deploying Business Data Catalog Model File

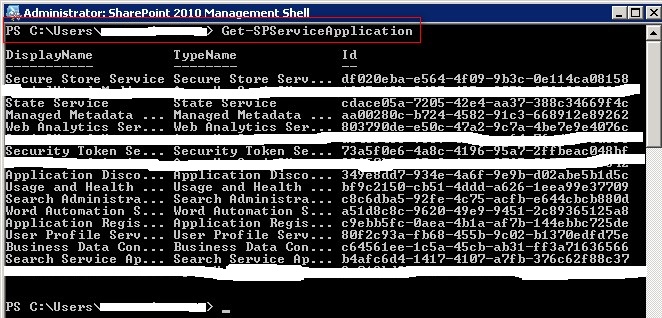

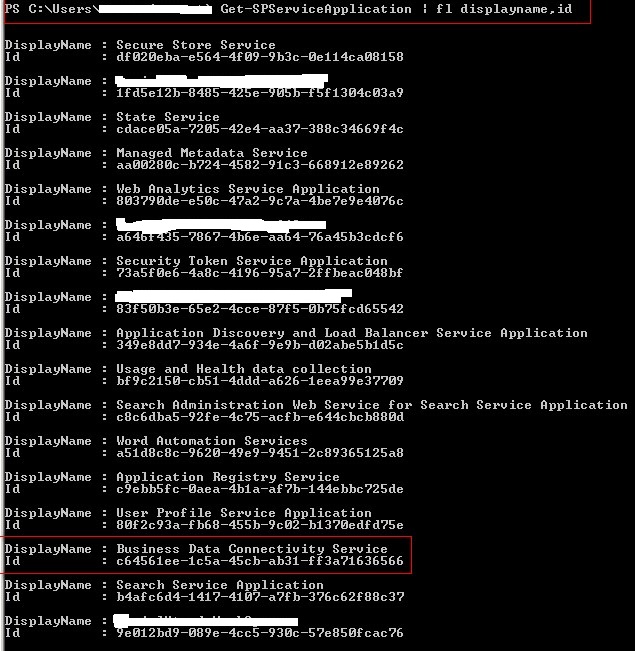

Open PowerShell with Local Administrator privilege and run the following command:

Get-SPServiceApplication

Get the ID of “Business Data Connectivity Service”

Copy the ID of Business Data Connectivity Service

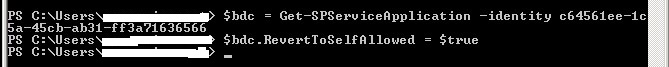

$bdc = Get-SPServiceApplication -identity <ID of Business Data Connectivity Service >

$bdc.RevertToSelfAllowed = $true

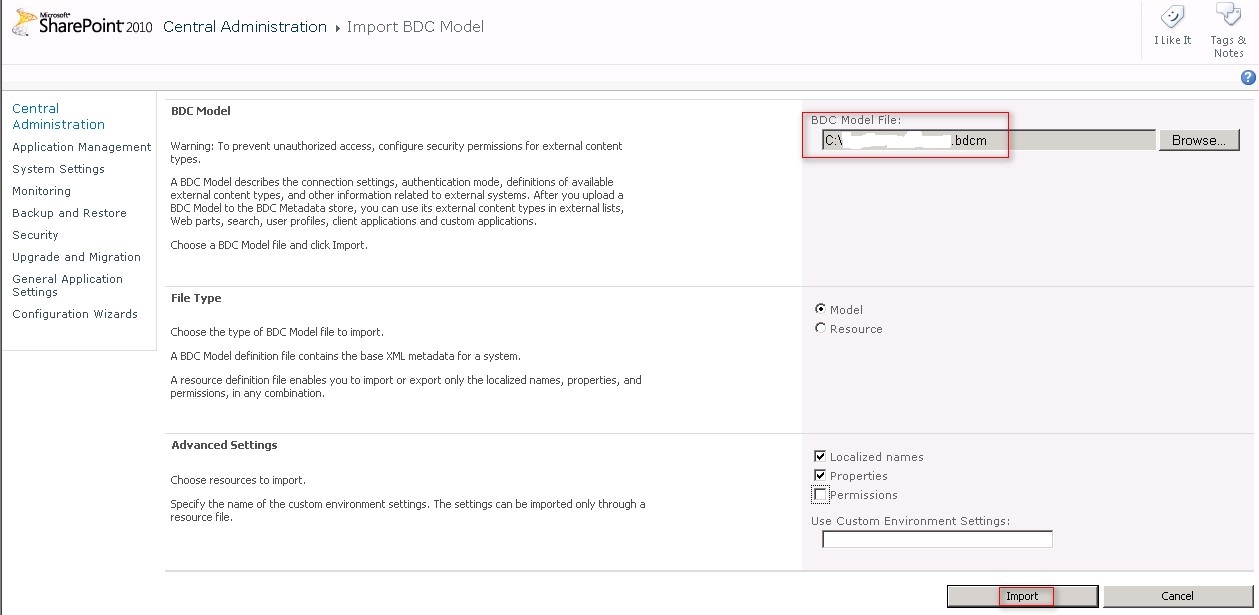

Import the BCS model (.BDCM file) from the BCS Application Import functionality

- Before BCS model (.BDCM file) import verify "Connection String, Service Account, and Profile Page URL in BCS Model (.BDCM file).

- Copy BCSSearch.bdcm file to Server C: drive.

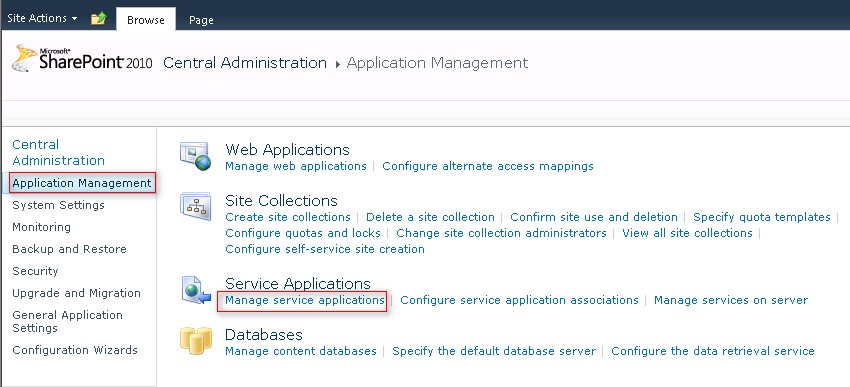

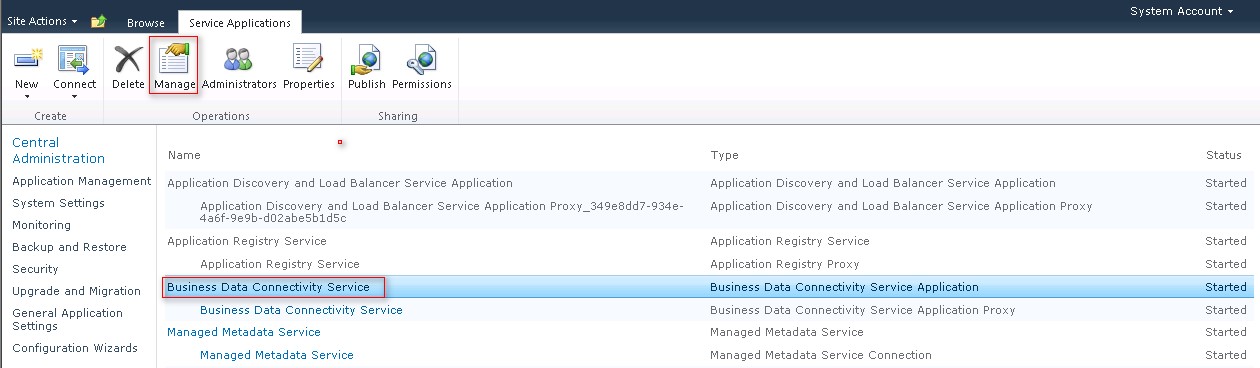

- Go to Central Admin >> Manage Service Application >> Business Connectivity Service >> Manage

Click Import.

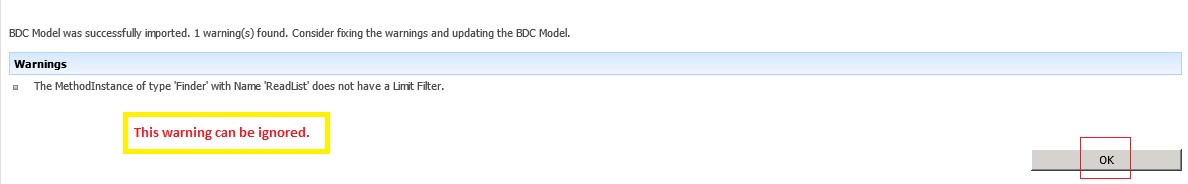

Click OK.

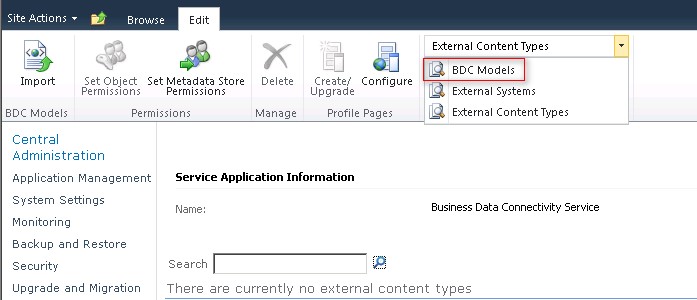

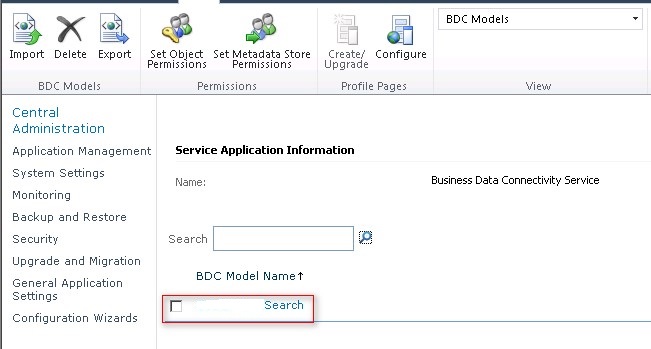

Change the View to BDC Models From the Ribbon.

Verify the BCSSearch BDC Model is available.

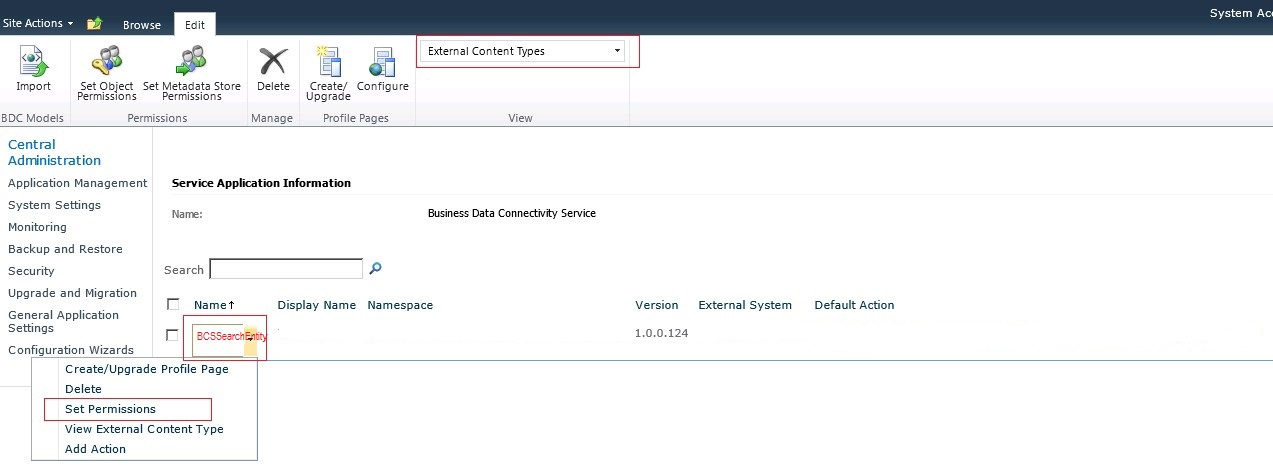

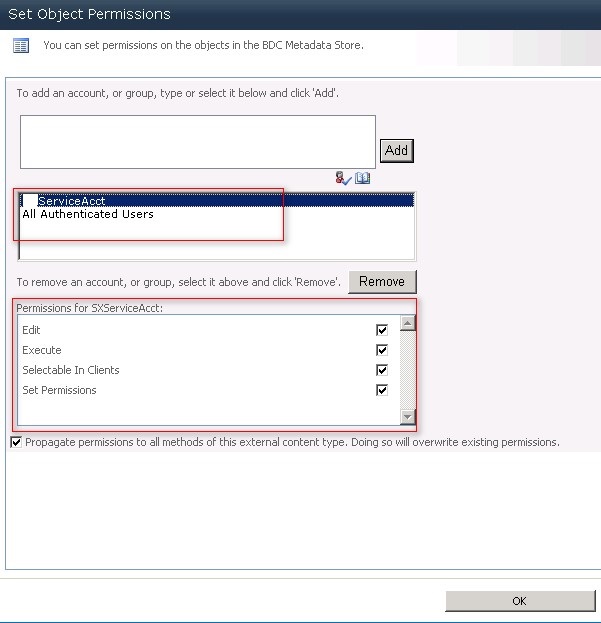

2. Set Permission on external content type

Go to Central Administration >>> Application Management >>> Service Applications >>> Manage Service Applications >>> Select “Business Data Connectivity Service” and click Manage

- Change the View to External Content type From the Ribbon

- Select the BCSSearchEntity >>> Set Permission

Add the Search Service Account, All Authenticated Users and check all the permissions in order to allow crawling of the content and Users can view the Contents.

Deploy BCS.Search.Connector.dll to GAC on All Servers

Open PowerShell 2010 Management Shell and execute below command:- Registering DLL to LOB System

$url = "<webapplication url>"

$assemblyPath = "<DLL location(BCS.Search.Connector.dll)>"

$lobSystem = "BCSSearch" Write-Host "Adding assembly to LOBSystem" $serviceContext = Get-SPServiceContext $url $lobSystem = Get-SPBusinessDataCatalogMetadataObject -ServiceContext $serviceContext -BdcObjectType lobsystem -Name $lobSystem Import-SPBusinessDataCatalogDotNetAssembly -LobSystem $lobSystem -Path $assemblyPath |

** **

After that do iisreset.

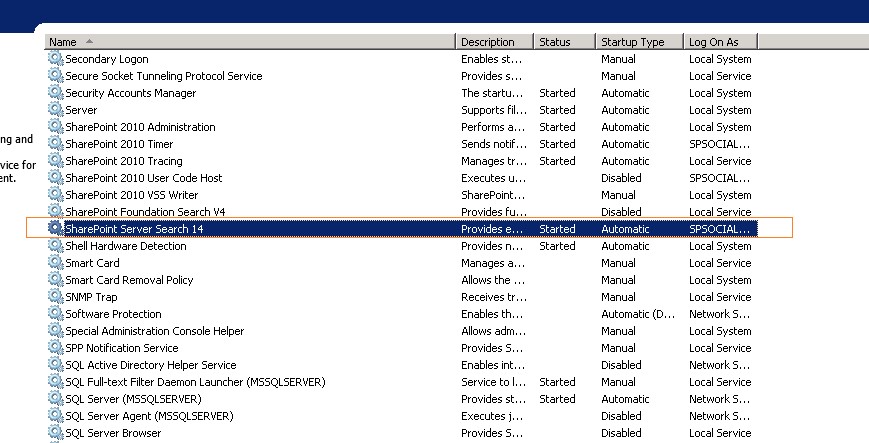

Restart SharePoint Server Search 14

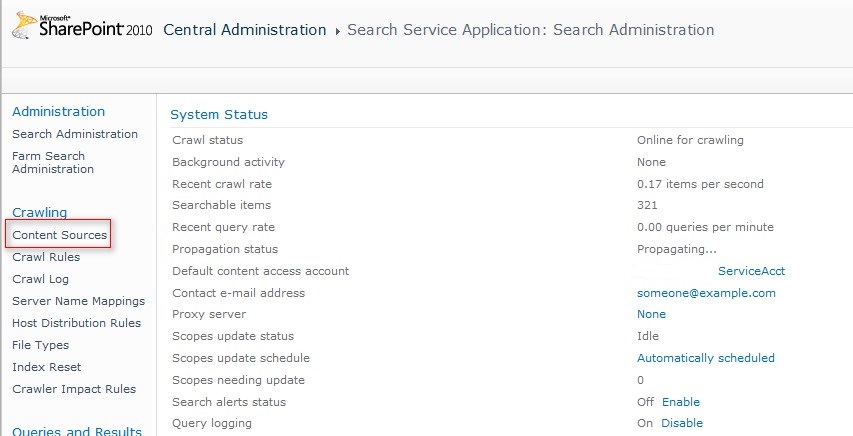

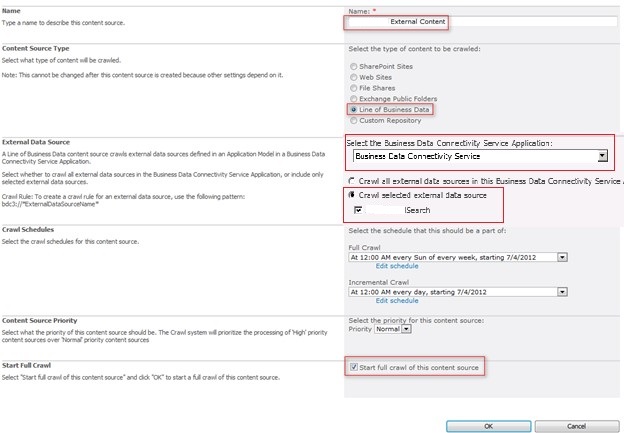

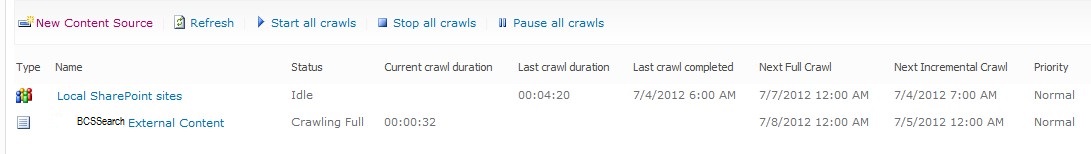

3. Create Line of Business Content source under Search Service

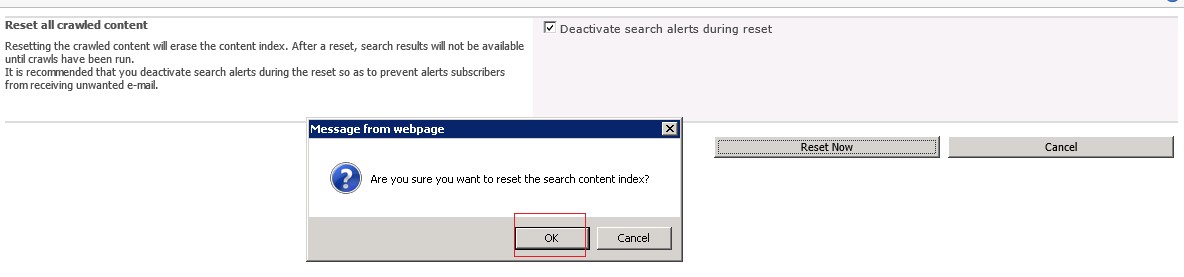

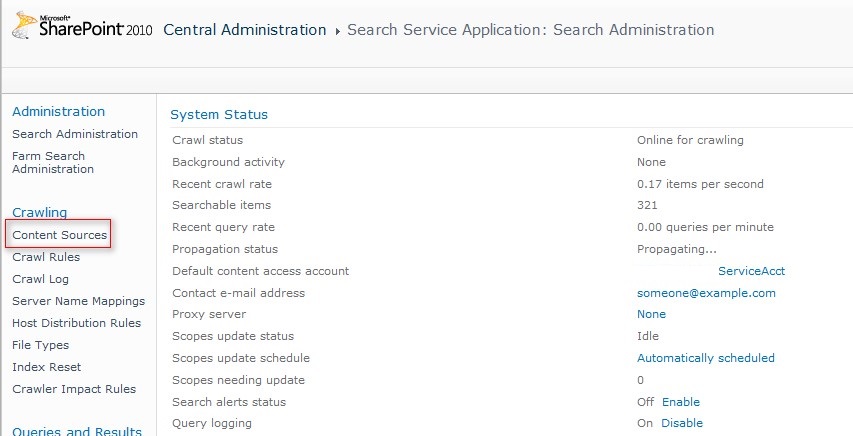

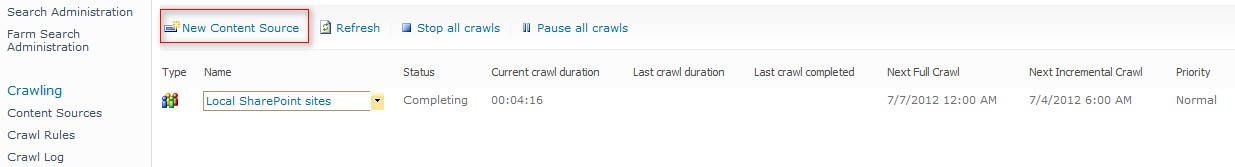

Go to Central Administration >>> Application Management >>> Service Applications >>> Manage Service Applications >>> Select “Search Service Application”

Go To Search Service Application >>> Content Sources

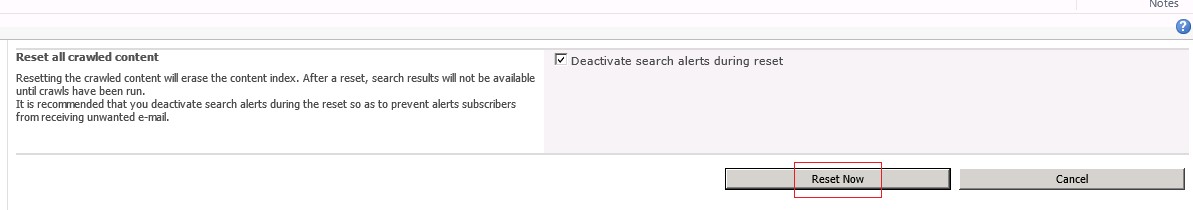

**Index Reset – (**note: - This action also reset the index of other Content sources)

- Go to Central Administration >>> Application Management >>> Service Applications >>> Manage Service Applications >>> Select “Search Service Application”

- Click on Index Reset option in the left menu.

Go To Search Service Application >>> Content Sources >>> New Content Source

- Enter Name “BCSSeach External Content”.

- Select Line of Business Data option in Content Source Type section.

- Select the BCSSearch data source.

- Provide the crawl schedules for Incremental and Full Crawl. This is optional and can be done later.

- Start the Full Crawl.

Note: You should add Search Service Account in your BCS External Content type Object permission and give appropriate permission before Crawl. Full Crawl of External Data Source should complete and Successful before proceeding to next step.

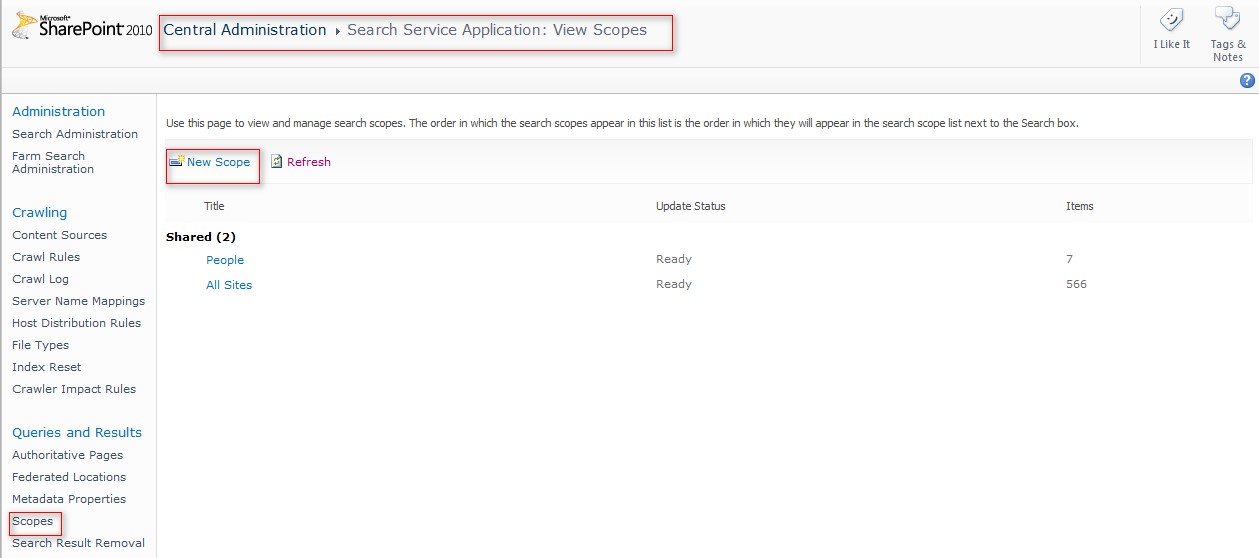

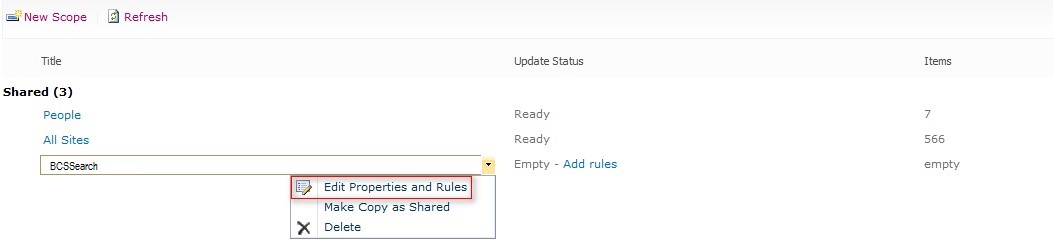

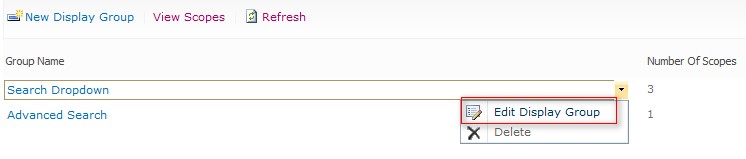

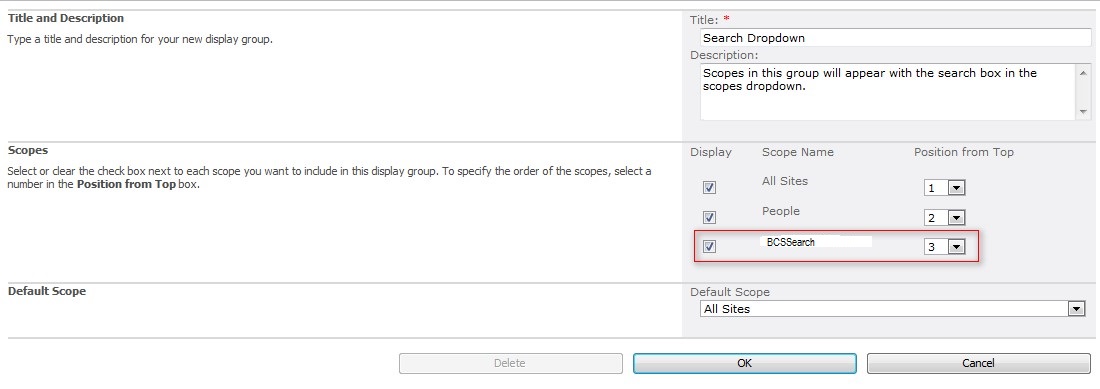

4.Configure Search Scope

- Go to Central Administration >>> Application Management >>> Service Applications >>> Manage Service Applications >>> Select “Search Service Application”

- Go To Search Service Application >>> Scopes.

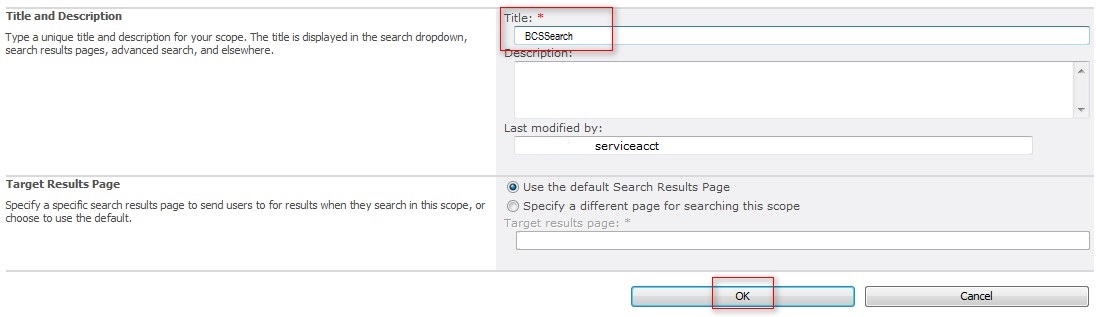

- Click on “New Scope” option.

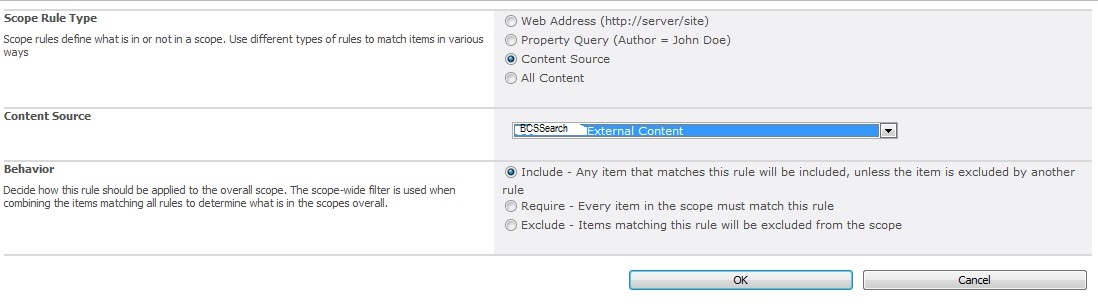

Add new scope with name “BCSSearch”.

Add New Rule using “BCSSearch External Content” Content Source.

Start Scope Update from Search Administration page.

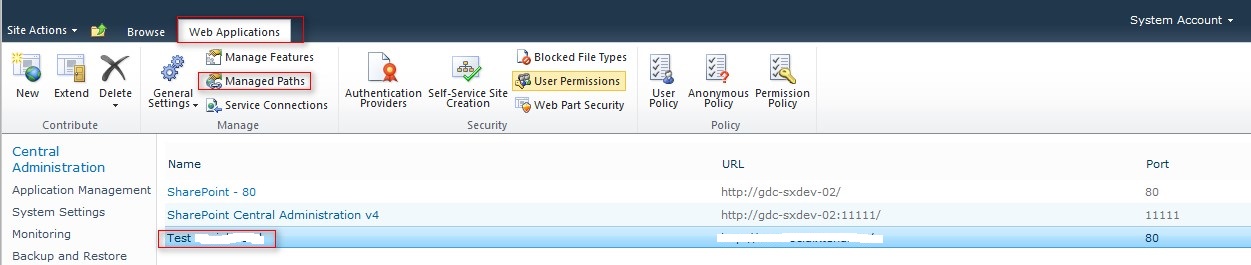

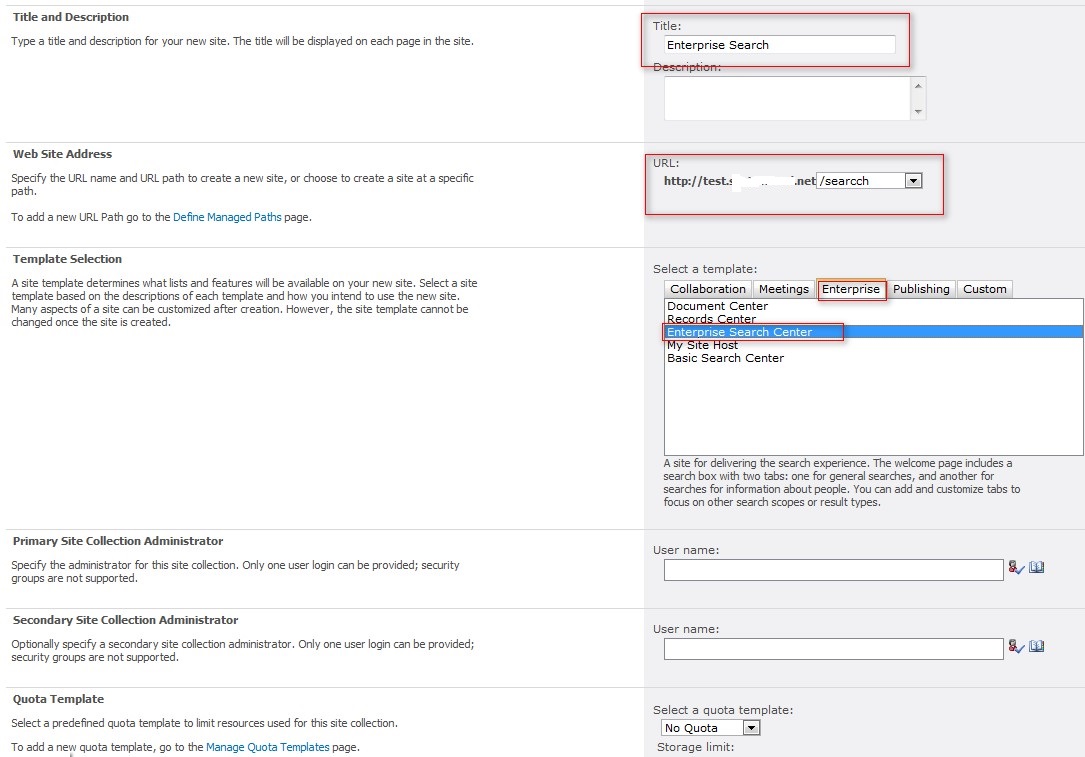

Create a Search Center Site (for Enterprise Search) (if not Exist) and Configure Search Settings

Create Search Center Site

- Go to Central Administration >>> Application Management >>> Manage Web Applications

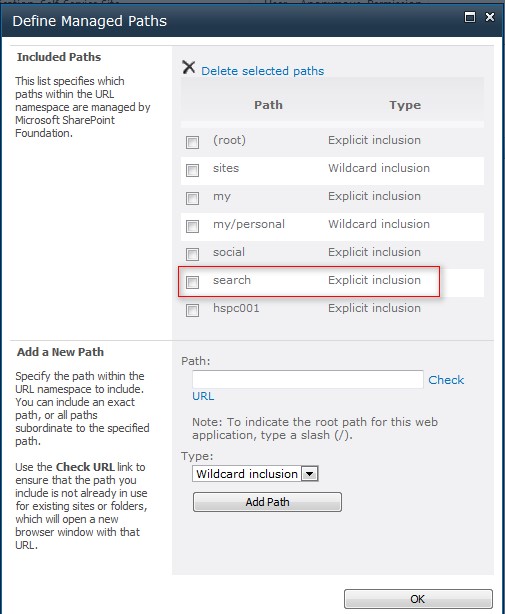

- Select Web Application >>> Click Managed Path

- Create Search (Explicit Inclusion) >>> click OK

- Go to Central Administration >>> Application Management >>> Create Site Collection

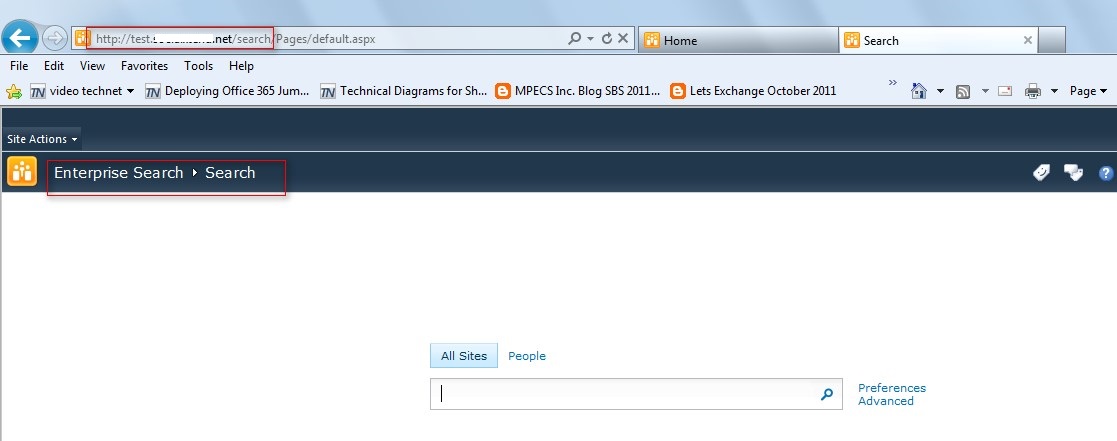

- Browse Search center site.

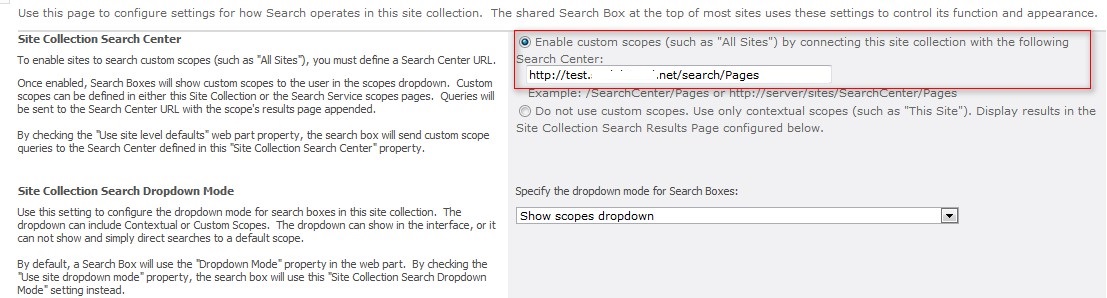

5.Configure Search Settings

Go to Site Actions >> Site Settings

Under Site Collection Administration, click Search Settings

Provide the URL of Enterprise Search Site collection we created earlier followed by “/pages”.

http://{Searchsiteurl}/pages

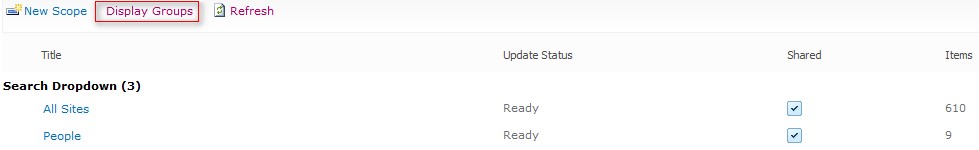

Go back to Site Settings >>> Site Collection Administration, click Search Scopes

Click Display Groups

Download complete package containing BDCM File / Source Code / Database Scripts from here.

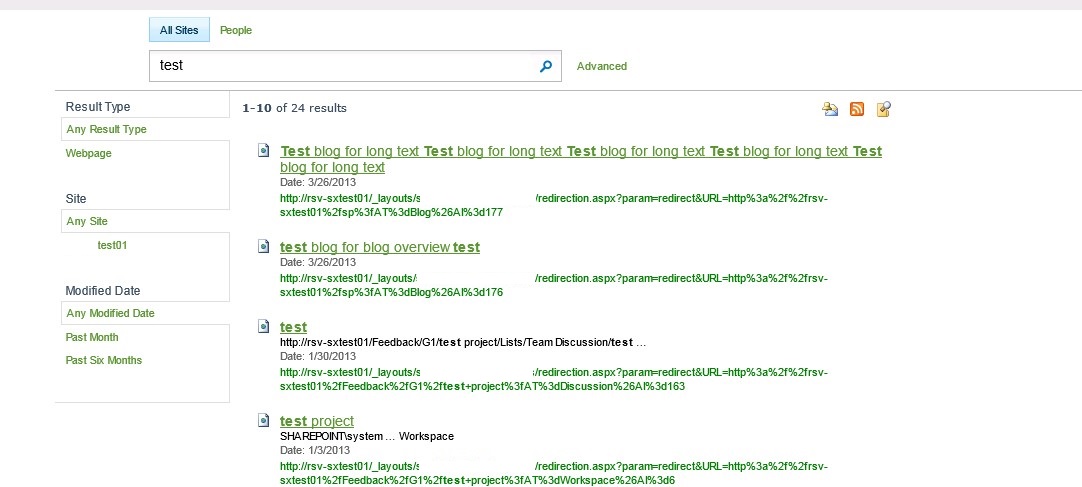

Conclusion

This solution tested and executed successfully for both SharePoint 2010 and SharePoint 2013 with Enterprise and FAST search on 1 million records with 10GB of files.

About Authors

Nitin K. Gupta - SharePoint Architect / Consultant since 2004, developed and designed several solutions in SharePoint 2003, MOSS 2007, SharePoint 2010 & SharePoint 2013.

Vaibhav Sharma - SharePoint Technical Lead, developed solutions in SharePoint MOSS 2007, SharePoint 2010, SharePoint 2013, SharePoint 2016, Office 365.