TensorFlow on Docker with Microsoft Azure Container Services

TensorFlow™ is an open source software library for numerical computation using data flow graphs. Nodes in the graph represent mathematical operations, while the graph edges represent the multidimensional data arrays (tensors) communicated between them. The flexible architecture allows you to deploy computation to one or more CPUs or GPUs in a desktop, server, or mobile device with a single API. TensorFlow was originally developed by researchers and engineers working on the Google Brain Team within Google's Machine Intelligence research organization for the purposes of conducting machine learning and deep neural networks research, but the system is general enough to be applicable in a wide variety of other domains as well.

Learning more about TensorFlow

Installing TensorFlow

First you need Docker installed; see my previous post: https://blogs.msdn.microsoft.com/uk_faculty_connection/2016/09/23/getting-started-with-docker-and-container-services/

One of the easiest ways to get started with TensorFlow is running TensorFlow in a Docker container.

Google has provided a number of tools with their release, but a number of academics I have engaged with would prefer to have TensorFlow running within a Jupyter notebook. Microsoft is investing heavily in Jupyter notebooks and recently launched https://notebooks.azure.com.

There is a great set of Docker images on Docker Hub, and one of them contains a Jupyter installation with TensorFlow.

Since Jupyter notebooks run a local server, we need to allow port-forwarding for the port we intend to run on.

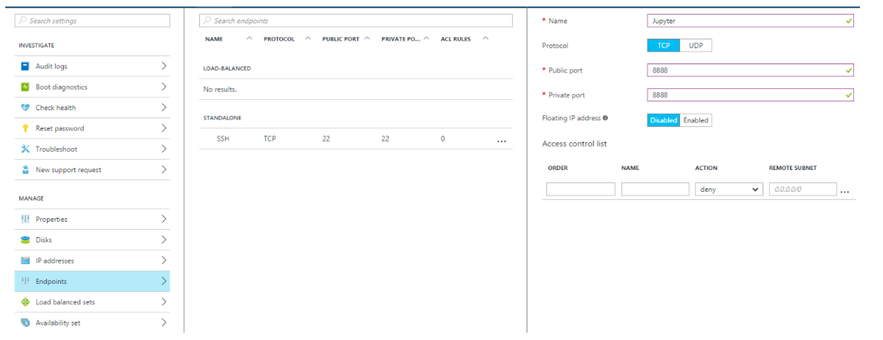

We need to port-forward not only from the container to the VM running the Docker engine, but also from the VM to the outside world. To do so, we simply expose that port via the Azure portal by adding a new endpoint that maps 8888 to whichever external port you choose.

Now that you've exposed the endpoint from Azure, you can fire up the Docker container by using the command:

docker run -d -p 8888:8888 -v /notebook:/notebook xblaster/tensorflow-jupyter

This will take some time while the image downloads, but once it's complete you should have a fully functional Docker container running TensorFlow inside a Jupyter notebook, with your notebooks persisted on the VM through the /notebook volume mapping.
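
Before opening a browser, it can help to confirm that something is actually listening on the forwarded port. The following is a minimal sketch using only the Python standard library; the helper name `port_is_open` is my own, and the host and port in the example call are assumptions that you should replace with your VM address and the external port you chose.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


if __name__ == "__main__":
    # "localhost" assumes you run this on the VM itself; substitute
    # <your-vm>.cloudapp.net and your external port to test from outside.
    print(port_is_open("localhost", 8888))
```

If this reports False on the VM, the container is probably not running yet; if it succeeds locally but fails from outside, the Azure endpoint mapping is the more likely culprit.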

Testing the Installation

Now that you have a running Jupyter notebook instance, you can hit your endpoint from your own machine at http://\<your-vm>.cloudapp.net:8888/ and see it in action. Create a new Jupyter notebook, then paste in the Python code below (taken from the TensorFlow documentation) and run it to verify that TensorFlow is installed and working:

    import tensorflow as tf
    import numpy as np

    # Create 100 phony x, y data points in NumPy, y = x * 0.1 + 0.3
    x_data = np.random.rand(100).astype("float32")
    y_data = x_data * 0.1 + 0.3

    # Try to find values for W and b that compute y_data = W * x_data + b
    # (We know that W should be 0.1 and b 0.3, but TensorFlow will
    # figure that out for us.)
    W = tf.Variable(tf.random_uniform([1], -1.0, 1.0))
    b = tf.Variable(tf.zeros([1]))
    y = W * x_data + b

    # Minimize the mean squared errors.
    loss = tf.reduce_mean(tf.square(y - y_data))
    optimizer = tf.train.GradientDescentOptimizer(0.5)
    train = optimizer.minimize(loss)

    # Before starting, initialize the variables. We will 'run' this first.
    init = tf.initialize_all_variables()

    # Launch the graph.
    sess = tf.Session()
    sess.run(init)

    # Fit the line.
    # range and print(...) are used so the loop runs on Python 2 and Python 3.
    for step in range(201):
        sess.run(train)
        if step % 20 == 0:
            print(step, sess.run(W), sess.run(b))

    # Learns best fit is W: [0.1], b: [0.3]
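
To make it clearer what the optimizer is doing in that test, here is a rough plain-Python sketch of the same fit, written without TensorFlow or NumPy. It is not how TensorFlow implements `GradientDescentOptimizer` internally; it simply applies the textbook gradient descent update to the same mean squared error, with the same learning rate (0.5) and step count. The fixed random seed is my own addition so that the run is repeatable.

```python
import random

random.seed(0)  # fixed seed (an assumption, for repeatability only)

# 100 synthetic points on the line y = 0.1 * x + 0.3
x_data = [random.random() for _ in range(100)]
y_data = [0.1 * x + 0.3 for x in x_data]

W = random.uniform(-1.0, 1.0)  # same starting range as the TensorFlow example
b = 0.0
learning_rate = 0.5
n = len(x_data)

for step in range(201):
    # Residuals of the current fit
    errors = [W * x + b - y for x, y in zip(x_data, y_data)]
    # Gradients of mean((W * x + b - y) ** 2) with respect to W and b
    grad_W = 2.0 * sum(e * x for e, x in zip(errors, x_data)) / n
    grad_b = 2.0 * sum(errors) / n
    # Step against the gradient
    W -= learning_rate * grad_W
    b -= learning_rate * grad_b
    if step % 20 == 0:
        print(step, W, b)
```

As with the TensorFlow version, the printed values should drift towards W = 0.1 and b = 0.3 as the steps progress.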

Now you know it works, but notice that you didn't need to hit an https endpoint or type in any credentials.

This is an insecure implementation, so please don't use it for anything you would mind losing.

For a production environment you will want to secure your notebook; the Jupyter documentation on this is easy to follow.
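
As a rough idea of what securing the server involves, the sketch below shows a `jupyter_notebook_config.py` fragment that turns on HTTPS and a password. The option names are those documented for the classic Jupyter Notebook server and may differ in newer releases; the certificate paths and the password hash are placeholders, and whether the Docker image used above picks up such a file is an assumption you would need to check.

```python
# jupyter_notebook_config.py (sketch for the classic Notebook server)
c = get_config()  # provided by Jupyter when it loads this file

# Serve over HTTPS; both paths are hypothetical and must point at your own files
c.NotebookApp.certfile = "/path/to/mycert.pem"
c.NotebookApp.keyfile = "/path/to/mykey.key"

# Require a password; generate the hash with notebook.auth.passwd()
c.NotebookApp.password = "sha1:<your-generated-hash>"

# Listen on all interfaces without trying to open a browser on the server
c.NotebookApp.ip = "*"
c.NotebookApp.open_browser = False
c.NotebookApp.port = 8888
```

With settings like these in place, the notebook would be reached over https and would prompt for the password before showing any content.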

Comments

- Anonymous

November 26, 2016

TensorFlow walkthrough Jupyter notebooks are available: 01_Tensorflow_installation.ipynb (Python 2.x), 02_Linear Regression.ipynb (Python 3.x), 03_autoencoder.ipynb (Python 2.x), 04_convolutional_net.ipynb (Python 2.x), 05_lstm.ipynb (Python 2.x) and 08_word2vec.ipynb (Python 2.x). They are available on Notebooks.azure.com at https://notebooks.azure.com/library/OEdO6ybBxM4/dashboard?page=1 and the source code can be downloaded from https://github.com/MSFTImagine/TensorFlow-Lab

- Anonymous

February 27, 2017

A selection of TensorFlow models: https://github.com/tensorflow/models

- Anonymous

April 18, 2017

Looks great, Lee! My group (currently 3 researchers) was looking at using JupyterHub on an Azure VM for our investigations with TensorFlow. I'm not so familiar with the architecture questions, but what would be the advantages of running it as you have laid out here vs. with JupyterHub? Full disclosure: I haven't been successful getting the JupyterHub solution working on Azure yet...

- Anonymous

April 19, 2017

Hi Christian, you have a few options. We have just launched a Data Science VM on Ubuntu which includes TensorFlow and GPU support; see https://blogs.msdn.microsoft.com/uk_faculty_connection/2017/04/19/now-available-on-azure-marketplace-ubuntu-data-science-virtual-machine/ As for JupyterHub directly on the VM, the Data Science VM includes a Jupyter server, or you can install JupyterHub separately; see https://github.com/jupyterhub/jupyterhub Alternatively, simply use http://notebooks.azure.com, our hosted Jupyter notebook service, which lets you have notebooks available 24x7 at no cost.

- Anonymous

April 19, 2017

Hi Lee, that Data Science VM looks very similar to what I was trying to set up on my own! Thanks for the heads-up!

- Anonymous

April 20, 2017

If you're interested in the implementation of autoscaling, check out the following tutorial for TensorFlow using containers: http://wbuchwalter.github.io/container/docker/machine/learning/kubernetes/gpu/training/2016/03/23/gpu-ml-training-cluster/

- Anonymous

April 23, 2017

Hi Lee, thanks for the great post! Once TensorFlow models have been trained, what service would you recommend for operationalising them and exposing them through API endpoints? Lack of control (and TF support) in Azure Machine Learning is a concern, so I would like to avoid that if possible.

- Anonymous

April 24, 2017

Hi Dan, you can simply host these on an Azure Web App or Service. Here is an example, https://github.com/sugyan/tensorflow-mnist, which shows a simple REST API using Python/Flask and loading pre-trained models. For more details on Azure Web Apps and Flask see https://azure.microsoft.com/en-us/develop/python/

- Anonymous

April 26, 2017

Hi Lee, that sounds great, thanks! I will look into those suggestions.
