Microsoft Azure Data Science Virtual Machine with Theano and Keras

Dependencies

Before getting started, make sure you have the following:

- Azure Data Science Virtual Machine Deep Learning Toolkit

- CUDA 7.5

- Python & Anaconda, already preinstalled on the DSVM

- Compilers for C/C++ already preinstalled on the DSVM

- GCC for code generated by Theano

- Visual Studio already preinstalled on the Windows DSVM

The data science virtual machine (DSVM) on Azure is based on Windows Server 2012. It contains popular tools for data science modelling and development, such as Microsoft R Server Developer Edition, Anaconda Python, Jupyter notebooks for Python and R, Visual Studio Community Edition with Python and R Tools, Power BI Desktop, SQL Server Developer Edition, and many other data science and ML tools.

The DSVM is well suited to academic educators and researchers, and can jump-start modelling and development for your data science project.

The DSVM Deep Learning Toolkit provides GPU builds of MXNet and CNTK for use on Azure GPU N-series instances.

The NVIDIA GPUs in these instances use discrete device assignment. This gives performance close to bare metal and suits deep learning problems that need large training sets and computationally expensive training. The toolkit also includes a set of sample deep learning solutions that use the GPU, including image recognition on the CIFAR-10 dataset and character recognition on the MNIST dataset.

Deploying this toolkit requires access to Azure GPU NC-series instances for GPU-based workloads.

Setting up CUDA and GCC

CUDA: go to NVIDIA's website and download the CUDA 7.5 toolkit. Select the right version for your computer.

GCC: there are many GCC compilers available for Windows. TDM-GCC has a 64-bit version.

To make sure that everything is working at this point, run the following commands in a command prompt (cmd.exe):

where gcc

where cl

where nvcc

where cudafe

where cudafe++

If `where` finds a path for every tool, you are good to go.
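You can also script the same check from Python. The sketch below looks up the tools from the `where` commands above on the PATH. It assumes Python 3.3 or later, where `shutil.which` is available, so run it from a Python 3 environment rather than the DSVM's default Python 2.7. `find_tools` is a hypothetical helper, not part of any toolkit.

```python
import shutil

# Tools checked with `where` above; "cl" is the Visual Studio C/C++ compiler.
REQUIRED_TOOLS = ("gcc", "cl", "nvcc", "cudafe", "cudafe++")


def find_tools(names=REQUIRED_TOOLS):
    """Return a dict mapping each tool name to its full path, or None if missing."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    found = find_tools()
    for name, path in found.items():
        print("%-10s %s" % (name, path or "NOT FOUND"))
    missing = [name for name, path in found.items() if path is None]
    print("All tools found." if not missing else "Missing: " + ", ".join(missing))
```

On Windows, `shutil.which` also tries the extensions listed in PATHEXT, so `cl` resolves to `cl.exe` just as it does with `where`.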

Getting Theano

The DSVM comes with Anaconda Python 2.7 installed. Anaconda already provides most of Theano's dependencies, and Step 1 adds the rest.

Step 1: Open an Anaconda prompt on Windows and run the following command:

conda install mingw libpython

Step 2: Installing Theano - Once the dependencies are installed, you can download and install Theano. To get the latest bleeding-edge version, go to the Theano repository on GitHub, download the latest zip and unzip it to a local folder.

Step 3: Configuring Theano - Once you have downloaded and unzipped Theano, cd into the folder and run:

python setup.py develop

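This installs Theano in development mode. setuptools adds the Theano source folder to Python's module search path through a .pth file in site-packages (it does not change the PYTHONPATH environment variable). Python then imports Theano directly from the folder you unzipped.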

Step 4: Getting Keras - Keras can run on top of Theano (the backend is selected in the Keras config file), so just install it via pip:

pip install keras
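A quick way to confirm both installs is to import them from Python. In the sketch below, `check_module` is a hypothetical helper that works on both Python 2.7 and 3. After `python setup.py develop`, the path printed for Theano should point into the folder you unzipped. The helper only catches `ImportError`, so a misconfigured Theano may still raise other errors on import.

```python
import importlib


def check_module(name):
    """Import a module by name; return (version, file path), or None if it is not importable."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    version = getattr(module, "__version__", "unknown")
    return version, getattr(module, "__file__", None)


if __name__ == "__main__":
    for name in ("theano", "keras"):
        info = check_module(name)
        if info is None:
            print("%s: not importable" % name)
        else:
            print("%s %s loaded from %s" % (name, info[0], info[1]))
```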

After installing the Python libraries, you need to tell Theano to use the GPU instead of the CPU. Many older posts set this through the THEANO_FLAGS system environment variable. Instead, you can create a config file named ".theanorc.txt" in your home directory, which also makes it easy to swap configurations. Put the following in the file:

[global]
device = gpu
floatX = float32

[nvcc]
compiler_bindir=C:\Program Files (x86)\Microsoft Visual Studio 12.0\VC\bin
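The file can also be generated from Python, which is handy if you keep several variants (for example a CPU and a GPU config) and switch between them. This sketch assumes Python 3's `configparser` module; on the DSVM's Python 2.7 the module is named `ConfigParser` and the code would need adapting. `write_theanorc` is a hypothetical helper. The Visual Studio 12.0 path is the one used above and may differ on your machine.

```python
import configparser
import os
import tempfile


def write_theanorc(path, device="gpu", floatx="float32", compiler_bindir=None):
    """Write a Theano config file with a [global] section and an optional [nvcc] section."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str  # keep the key spelled "floatX" instead of lowercasing it
    cfg["global"] = {"device": device, "floatX": floatx}
    if compiler_bindir:
        cfg["nvcc"] = {"compiler_bindir": compiler_bindir}
    with open(path, "w") as fh:
        cfg.write(fh)
    return path


if __name__ == "__main__":
    # Dry run into a temporary folder; use
    # os.path.join(os.path.expanduser("~"), ".theanorc.txt") to write the real file.
    target = os.path.join(tempfile.mkdtemp(), ".theanorc.txt")
    write_theanorc(
        target,
        compiler_bindir=r"C:\Program Files (x86)\Microsoft Visual Studio 12.0\VC\bin",
    )
    with open(target) as fh:
        print(fh.read())
```

`configparser` writes keys as `key = value`. That is the same INI syntax as the hand-written file above.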

Lastly, set up the Keras config file ~/.keras/keras.json. Give it the following contents, which select the Theano backend:

{
    "image_dim_ordering": "tf",
    "epsilon": 1e-07,
    "floatx": "float32",
    "backend": "theano"
}
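Because the Keras config is plain JSON, it can also be written with Python's `json` module. In this minimal sketch, `write_keras_config` is a hypothetical helper. It creates the `.keras` folder if needed, because Keras normally creates a default version of this file only the first time it is imported.

```python
import json
import os
import tempfile

# Settings from the article; "backend": "theano" makes Keras use Theano.
KERAS_CONFIG = {
    "image_dim_ordering": "tf",
    "epsilon": 1e-07,
    "floatx": "float32",
    "backend": "theano",
}


def write_keras_config(path, config=KERAS_CONFIG):
    """Write the Keras config as JSON, creating the parent folder if needed."""
    folder = os.path.dirname(path)
    if folder and not os.path.isdir(folder):
        os.makedirs(folder)
    with open(path, "w") as fh:
        json.dump(config, fh, indent=4)
    return path


if __name__ == "__main__":
    # Dry run into a temporary folder; use
    # os.path.join(os.path.expanduser("~"), ".keras", "keras.json") for the real file.
    target = os.path.join(tempfile.mkdtemp(), ".keras", "keras.json")
    write_keras_config(target)
    with open(target) as fh:
        print(fh.read())
```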

Testing Theano with GPU

To check whether your Theano installation is using the GPU, run the following code:

from theano import function, config, shared, sandbox
import theano.tensor as T
import numpy
import time

vlen = 10 * 30 * 768  # 10 x #cores x # threads per core
iters = 1000

# Build a shared vector and compile a function that takes its elementwise exp
rng = numpy.random.RandomState(22)
x = shared(numpy.asarray(rng.rand(vlen), config.floatX))
f = function([], T.exp(x))
print(f.maker.fgraph.toposort())  # list the ops in the compiled graph

# Time repeated calls of the compiled function
t0 = time.time()
for i in range(iters):
    r = f()
t1 = time.time()
print("Looping %d times took %f seconds" % (iters, t1 - t0))
print("Result is %s" % (r,))

# A plain (CPU) Elemwise op left in the compiled graph means the GPU was not used
if numpy.any([isinstance(node.op, T.Elemwise) for node in f.maker.fgraph.toposort()]):
    print('Used the cpu')
else:
    print('Used the gpu')
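The Theano test prints a wall-clock time, which is easier to interpret next to a CPU baseline. The sketch below repeats the same computation in plain NumPy: an elementwise exp over a float32 vector of 10 x 30 x 768 elements, looped 1000 times, with the same seed. `numpy_baseline` is a hypothetical helper. NumPy and Theano's CPU backend are not identical, so treat the comparison as a rough guide only.

```python
import time

import numpy as np


def numpy_baseline(vlen=10 * 30 * 768, iters=1000, seed=22):
    """Time the Theano test's exp loop in plain NumPy, which always runs on the CPU."""
    rng = np.random.RandomState(seed)
    x = np.asarray(rng.rand(vlen), dtype=np.float32)  # same size and dtype as the test
    r = None
    t0 = time.time()
    for _ in range(iters):
        r = np.exp(x)
    elapsed = time.time() - t0
    return elapsed, r


if __name__ == "__main__":
    iters = 1000
    elapsed, r = numpy_baseline(iters=iters)
    print("NumPy looping %d times took %f seconds" % (iters, elapsed))
    print("Result is %s" % (r,))
```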

Testing Keras with GPU

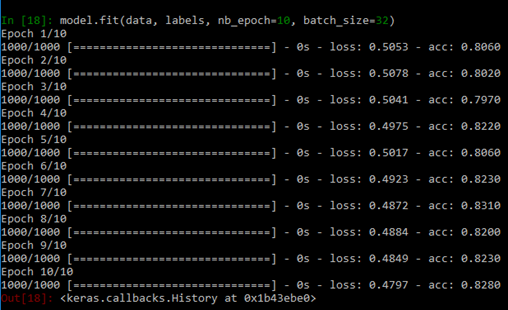

The following code checks that everything is working by training a model on some random data. Theano has to compile the model first, so expect a delay on the initial run.

from keras.models import Sequential
from keras.layers import Dense, Activation
import numpy as np

# For a single-input model with 2 classes (binary classification):
model = Sequential()
model.add(Dense(1, input_dim=784, activation='sigmoid'))
model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              metrics=['accuracy'])

# Generate dummy data
data = np.random.random((1000, 784))
labels = np.random.randint(2, size=(1000, 1))

# Train the model, iterating on the data in batches of 32 samples
# (nb_epoch is the Keras 1.x argument name; Keras 2 renamed it to epochs)
model.fit(data, labels, nb_epoch=10, batch_size=32)

If everything works, training runs to completion without any errors.
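The test model is a single Dense unit with a sigmoid activation trained with binary cross-entropy, which amounts to logistic regression. The NumPy sketch below spells out one forward pass and the loss. `sigmoid_dense_loss` is a hypothetical helper, and Keras's own implementation (for example its clipping and accuracy rounding) may differ in detail. With all-zero weights every prediction is 0.5, so the loss equals log 2 (about 0.693). Keras initializes weights randomly rather than to zero, so its first reported loss should be only roughly in that range.

```python
import numpy as np


def sigmoid_dense_loss(W, b, data, labels, eps=1e-7):
    """Forward pass of Dense(1, activation='sigmoid') plus binary cross-entropy and accuracy.

    eps mirrors the "epsilon" value in keras.json and keeps log() away from zero.
    """
    logits = data.dot(W) + b                     # shape (n_samples, 1)
    probs = 1.0 / (1.0 + np.exp(-logits))        # sigmoid activation
    probs = np.clip(probs, eps, 1.0 - eps)
    loss = -np.mean(labels * np.log(probs) + (1 - labels) * np.log(1 - probs))
    accuracy = np.mean((probs > 0.5) == labels)  # threshold predictions at 0.5
    return probs, loss, accuracy


if __name__ == "__main__":
    data = np.random.random((1000, 784))
    labels = np.random.randint(2, size=(1000, 1))
    W = np.zeros((784, 1))  # untrained weights: every prediction is 0.5
    probs, loss, acc = sigmoid_dense_loss(W, 0.0, data, labels)
    print("loss %.4f (log 2 = %.4f), accuracy %.3f" % (loss, np.log(2), acc))
```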

Resources

An improved version of this tutorial, which gets Theano and Keras working with Python 3.5 on Windows 10 if you have access to a local PC with an NVIDIA GPU: https://ankivil.com/installing-keras-theano-and-dependencies-on-windows-10/

This sample shows how to import Theano and Keras into Azure ML and use them in the Execute Python Script module: https://gallery.cortanaintelligence.com/Experiment/Theano-Keras-1

Keras Documentation https://keras.io/

Theano Documentation https://www.deeplearning.net/software/theano/

Using TensorFlow on Azure: https://blogs.msdn.microsoft.com/uk_faculty_connection/2016/09/26/tensorflow-on-docker-with-microsoft-azure/

Keras, Theano and TensorFlow Jupyter notebooks: https://github.com/MSFTImagine/deep-learning-keras-tensorflow (these can be imported directly into https://notebooks.azure.com)

Comments

- Anonymous

December 18, 2016

The comment has been removed

- Anonymous

January 10, 2017

Hi Alexander, sorry to hear about your issues. The DSVM team has made a number of improvements in the New Year, bringing more analytics tool goodies to users of the data science VM. Here are a few of the changes.

1. Microsoft R Server 9.0.1 Dev edition is now on the VM. MRS 9 brings a lot of exciting changes, including several new ML and deep learning algorithms from TLC (previously known as RML; the extended R library with a slew of fast new ML/AI algorithms is now called MicrosoftML). There is a brand-new architecture and interface for R deployment. It is the next generation of DeployR, now just called Microsoft R Operationalization, and it is closer to AzureML operationalization in terms of the R interface. The library is called mrsdeploy, and we have a basic deployment sample for both notebooks and RTVS/RStudio. SQL Server 2016 Dev edition and the associated in-database analytics are also updated to SP1.

2. RStudio Desktop is now bundled into the VM. This was one of the most popular requests; for many people RStudio was among the first things they installed after spinning up a DSVM.

3. RTVS is now updated to 0.5.

4. CNTK is now on CNTK 2 Beta 6, with several improvements and sample notebooks. (By the way, we are already out of date: the CNTK team iterates rapidly and released Beta 7 after our validation. Updating to the latest version is simple, especially if you only use the CNTK Python interface. Just install the Python wheel file from the latest CNTK release on GitHub. I also understand CNTK will be out of beta later this month, after which updates should hopefully be less frequent.)

5. Apache Drill, a SQL-based query tool that works with various data sources and formats (such as JSON and CSV), was part of our previous update. We now pre-package and configure drivers to access various Azure data services (such as Blobs, SQLDW/Azure SQL, HDI and DocDB). See the tutorial on the gallery for how to query data in various Azure data sources using Drill's SQL query language.

6. A partnership with Julia Computing (founded by the creators of the Julia language), which offers Julia Professional, a commercially supported and curated distribution of the open source Julia language with a set of popular packages for scientific computing, data science, AI and optimization. A developer edition of Julia Professional is now installed on the VM. Julia is supported through an IDE and Jupyter. Julia Computing has also provided about 10 sample notebooks bundled into the DSVM Jupyter instance (4 of which are related to data science and deep learning) to help you get started.

Updates on the Linux DSVM: it is now an authoring experience for AzureML-BD (BD for Big Data, the next generation of the AzureML platform, hitting private preview soon). In December, we soft-launched an update with a local one-node Apache Spark instance for development purposes, with PySpark and the PyCharm IDE. Paul Shealy will send a separate update on the Linux DSVM as we finish up a new release shortly.

This is just a heads-up so you can try these out now if you need them for your projects. Please check out the new Windows and Linux DSVM to take advantage of these tools in your analytics arsenal. If you have any questions, please use the DSVM forum, where the team wants your feedback: http://aka.ms/dsvm/forum
