First Look: Docker for Azure Beta

A lot of new and exciting stuff was announced at DockerCon 2016 a couple of months ago, including Docker for Azure.

I received my invitation a couple of days ago, and wanted to share my first impressions.

Setting up

The invitation email contains a "Deploy to Azure" button; clicking it sends us to the custom deployment interface on Azure.

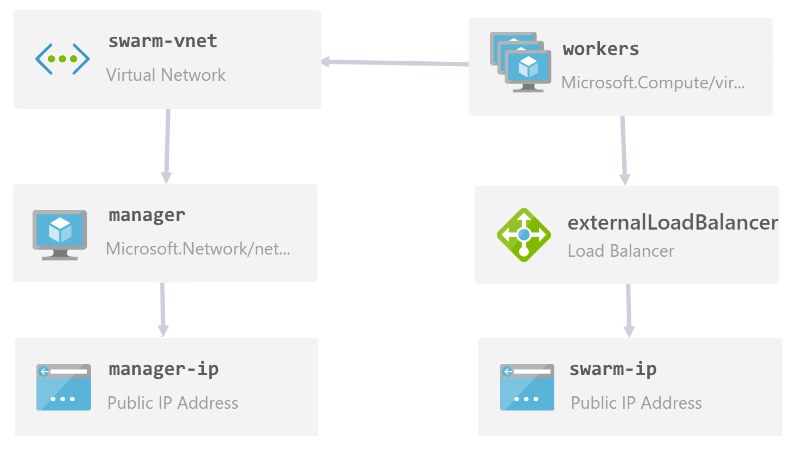

Indeed, Docker for Azure comes as an ARM template. Here is what the important bits of this template look like (in the case of a single manager):

Note: This diagram was generated with the new version of Armviz, which you should take a look at!


Of course, the template comes with some parameters, allowing you to choose the number of managers and workers, the size of the VMs and the name of the swarm.

The template will also ask you for an SSH public key for the manager, as well as a service principal ID and secret. You can refer to Docker's own documentation for details on how to set everything up.

Diving In

In my case, I simply went with one manager and one worker node.

To connect to the manager, simply SSH into it as the `docker` user:

```
> ssh docker@xxx.xxx.xxx.xxx
Welcome to Docker!
dockerswarm-manager0:~$
```

The obligatory Docker version check:

```
dockerswarm-manager0:~$ docker -v
Docker version 1.12.0, build 8eab29e, experimental
```

Checking the nodes shows that everything is running as expected:

```
dockerswarm-manager0:~$ docker node ls
ID                           HOSTNAME                    STATUS  AVAILABILITY  MANAGER STATUS
6p1xtdlrfwf3suzoqzws3fldh    _dockerswarm-worker-vmss_0  Ready   Active
bmzw0jiufycg23p3u3pfixsih *  _dockerswarm-manager0       Ready   Active        Leader
```
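If you want to script the check above, here is a minimal Python sketch that parses the `docker node ls` table. It assumes the whitespace-aligned output of Docker 1.12 shown above, with columns separated by at least two spaces; `parse_node_ls` and `all_ready` are hypothetical helper names, not part of any Docker tooling.

```python
import re

# Sample copied from the `docker node ls` output above (Docker 1.12).
SAMPLE = """\
ID                           HOSTNAME                    STATUS  AVAILABILITY  MANAGER STATUS
6p1xtdlrfwf3suzoqzws3fldh    _dockerswarm-worker-vmss_0  Ready   Active
bmzw0jiufycg23p3u3pfixsih *  _dockerswarm-manager0       Ready   Active        Leader
"""


def parse_node_ls(text):
    """Turn `docker node ls` table output into a list of dicts.

    Assumption: columns are separated by two or more spaces, as in the
    aligned output above.
    """
    rows = [line for line in text.splitlines() if line.strip()]
    nodes = []
    for row in rows[1:]:  # skip the header row
        fields = re.split(r"\s{2,}", row.strip())
        # The ID cell may carry a trailing " *" for the node we're logged into.
        node_id, _, marker = fields[0].partition(" ")
        nodes.append({
            "id": node_id,
            "current": marker == "*",
            "hostname": fields[1],
            "status": fields[2],
            "availability": fields[3],
            # Workers leave the MANAGER STATUS column empty.
            "manager_status": fields[4] if len(fields) > 4 else "",
        })
    return nodes


def all_ready(nodes):
    """True if every node is both Ready and Active."""
    return all(n["status"] == "Ready" and n["availability"] == "Active" for n in nodes)
```

On the manager itself you could feed it live output with `subprocess.run(["docker", "node", "ls"], stdout=subprocess.PIPE, universal_newlines=True).stdout`.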

Let's see which images are present right out of the box:

```
dockerswarm-manager0:~$ docker images
REPOSITORY             TAG                    IMAGE ID       CREATED        SIZE
docker4x/agent-azure   latest                 b2b22beefac8   4 days ago     94.13 MB
docker4x/init-azure    azure-v1.12.0-beta4    dd6652cf2f87   7 days ago     35.32 MB
docker4x/controller    azure-v1.12.0-beta4    d61704e07424   10 days ago    22.46 MB
docker4x/guide-azure   azure-v1.12.0-beta4    8dca840e0fc0   2 weeks ago    35.15 MB
```

And which ones are running:

```
dockerswarm-manager0:~$ docker ps
CONTAINER ID   IMAGE                                      COMMAND
eec4385cd9a4   docker4x/controller:azure-v1.12.0-beta4    "loadbalancer run --d"
334c9369735a   docker4x/guide-azure:azure-v1.12.0-beta4   "/entry.sh"
c50f292b7dc6   docker4x/agent-azure                       "supervisord --config"
```

Three containers are currently running on our manager. You won't find much information about them online, and their Docker Hub repositories currently have no documentation, but here is what they do:

- docker4x/agent-azure: this is the Azure Linux Agent, which "manages interaction between a virtual machine and the Azure Fabric Controller". It is basically responsible for communication between the VM and Azure for diagnostics, provisioning, etc.

- docker4x/guide-azure: I did not find much information on this one. It runs a cron task that calls `buoy`, a custom tool that seems to be used for logging important events in the swarm.

- docker4x/controller: this is the most interesting one. It runs `loadbalancer`, another custom tool from Docker (which doesn't seem to be open source at the time of writing). This container manages the rules of our external load balancer; more on that below.

We also saw that another image was present but not running: docker4x/init-azure. As the name implies, this image is responsible for initializing the different nodes that make up your swarm: if the VM's role is manager, it initializes the swarm, and if the VM's role is worker, it joins the existing swarm.
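The behaviour described for docker4x/init-azure boils down to a role switch. Since the image's source isn't published, the sketch below is hypothetical: it only shows which standard Docker 1.12 swarm commands each role would plausibly run. `swarm_bootstrap_command`, the manager IP and the join token are illustrative assumptions; on a real swarm, the worker token can be obtained on the manager with `docker swarm join-token -q worker`.

```python
from typing import List, Optional


def swarm_bootstrap_command(role: str,
                            manager_ip: Optional[str] = None,
                            join_token: Optional[str] = None) -> List[str]:
    """Return the docker CLI invocation a node would run at first boot.

    Hypothetical helper mirroring the described init-azure behaviour
    (managers init, workers join); it is not the image's actual code.
    """
    if role == "manager":
        # The first manager creates the swarm and becomes its leader.
        return ["docker", "swarm", "init"]
    if role == "worker":
        if not manager_ip or not join_token:
            raise ValueError("a worker needs the manager address and a join token")
        # 2377 is the default swarm management port.
        return ["docker", "swarm", "join", "--token", join_token, f"{manager_ip}:2377"]
    raise ValueError(f"unknown role: {role!r}")
```

Returning an argument list rather than running it keeps the decision logic testable; a boot script could pass the result to `subprocess.run`.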

Let's create a simple service to see everything in action:

```
dockerswarm-manager0:~$ docker service create --name nginx --publish 80:80 nginx
dockerswarm-manager0:~$ docker service ps nginx
ID                         NAME     IMAGE  NODE                        DESIRED STATE  CURRENT STATE           ERROR
6lnn6ucp4hg0f5l3dnm47fluq  nginx.1  nginx  _dockerswarm-worker-vmss_0  Running        Running 13 minutes ago
```

I created a service containing a single replica of nginx and published port 80. If I open my browser and navigate to the public IP of my swarm, sure enough I get "Welcome to nginx!" without having to do anything else.

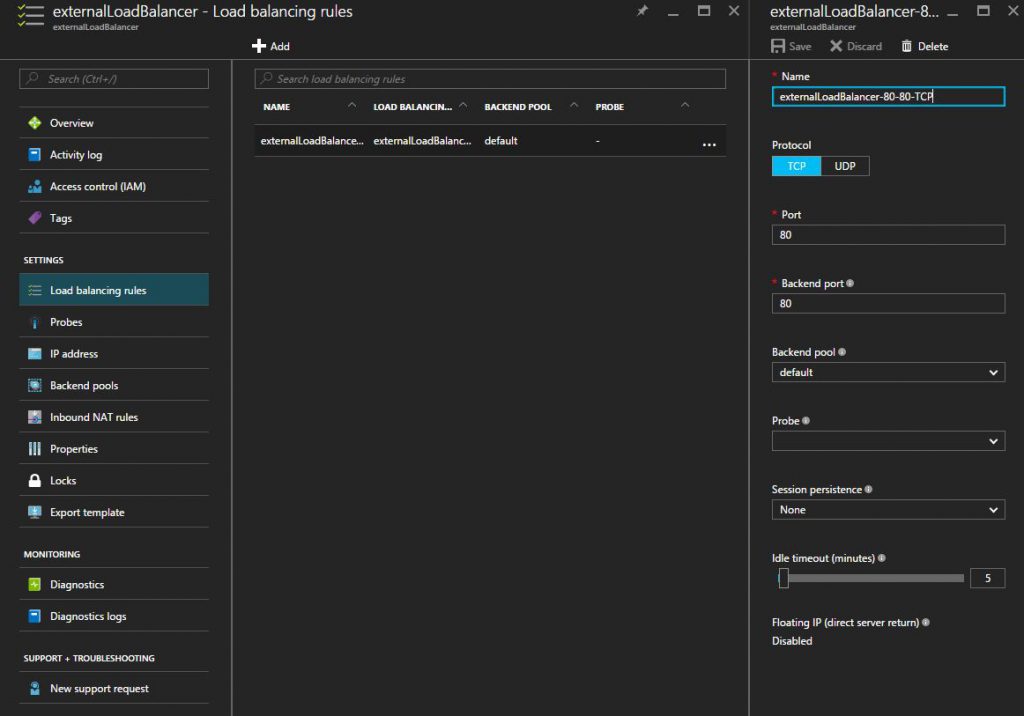
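To check which ports a service publishes (the information a controller needs in order to configure the load balancer), you can read the output of `docker service inspect`. Here is a minimal Python sketch, assuming the Docker 1.12 JSON layout in which published ports appear under `Endpoint.Ports`; the sample below is an abridged, hand-written approximation of that output, and `published_ports` is a hypothetical helper.

```python
import json

# Abridged, hand-written approximation of `docker service inspect nginx`
# (assumed Docker 1.12 layout: exposed ports live under Endpoint.Ports).
INSPECT_JSON = """
[{"Spec": {"Name": "nginx"},
  "Endpoint": {"Ports": [{"Protocol": "tcp", "TargetPort": 80, "PublishedPort": 80}]}}]
"""


def published_ports(inspect_output):
    """Map each service name to a sorted list of (protocol, published port)."""
    result = {}
    for service in json.loads(inspect_output):
        # Services without published ports may have no Ports key at all.
        ports = service.get("Endpoint", {}).get("Ports") or []
        result[service["Spec"]["Name"]] = sorted(
            (p["Protocol"], p["PublishedPort"]) for p in ports if "PublishedPort" in p
        )
    return result
```

On the manager, the real JSON would come from `subprocess.run(["docker", "service", "inspect", "nginx"], stdout=subprocess.PIPE, universal_newlines=True).stdout`.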

Let's take a look at our load balancer rules in Azure:

We can see that one rule was created for port 80 (the port I published when creating the nginx service), even though I never created anything myself!

Remember the docker4x/controller container that we saw running on the manager? It takes care of monitoring the services running on the swarm and updating the load balancer rules accordingly. When you create a new service in the swarm, loadbalancer creates a new load balancing rule for each published port, and deletes those rules when you remove the service from the swarm.
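Since `loadbalancer` isn't open source, we can only guess at its internals, but the behaviour described above is a classic reconcile loop. Here is a hypothetical Python sketch of its core diff step: given the ports each swarm service publishes and the rules already present on the load balancer, it computes which rules to create and which to delete. The actual Azure API calls are deliberately left out, and `reconcile` is an illustrative name.

```python
from typing import Dict, Set, Tuple

# A load balancer rule reduced to (protocol, frontend port), e.g. ("tcp", 80).
Rule = Tuple[str, int]


def reconcile(services: Dict[str, Set[Rule]], existing: Set[Rule]):
    """Return (rules_to_create, rules_to_delete).

    `services` maps each swarm service to the ports it publishes;
    `existing` is the set of rules currently on the load balancer.
    """
    desired: Set[Rule] = set()
    for ports in services.values():
        desired |= ports
    to_create = desired - existing  # published but not yet exposed
    to_delete = existing - desired  # exposed but no longer published
    return to_create, to_delete


# Creating the nginx service: port 80 needs a new rule.
print(reconcile({"nginx": {("tcp", 80)}}, set()))
# Removing it again: the rule should go away.
print(reconcile({}, {("tcp", 80)}))
```

One design caveat: this naive diff would also delete rules someone added by hand, so a real controller would presumably only touch rules it created itself.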

Conclusion

Setting up the swarm took less than 5 minutes, as all I needed to do was provide 8 parameters to the ARM template.

Once the resources have been created in Azure, you have a ready-to-use swarm!

Thanks to loadbalancer, Azure Load Balancer is nicely integrated into the workflow, making service creation a breeze.