Incubation Week: What Did You Say?

After the launch event this Monday, I deviated a bit from my normal schedule and spent the rest of the week participating in the Microsoft BizSpark Incubation Week for Windows 7 at the Microsoft Technology Center (MTC) in Waltham. Sanjay Jain did a great job orchestrating the event and capturing the day-by-day progress of the five start-ups involved, so I’d like to focus this post on the geekier parts of my week.

As one of the advisors, I ended up floating between two teams, Panopto and Answer Point Medical Systems. Their products are completely different applications, at different stages of development and serving distinct audiences, but the two teams turned out to share a need for speech-to-text capabilities. Of course, I knew Windows had some built-in speech recognition, but I had written it off as an accessibility feature where you speak stilted English to (hopefully) make applications do what you want. With a little digging, though, I found that it’s a pretty rich capability, with an unmanaged (and even a managed!) API for handling speech, rivaling some of the third-party speech recognition products.

The built-in speech recognition capabilities (look for Windows Speech Recognition in the Windows Vista or Windows 7 Start menu) actually go a long way toward enabling you to incorporate speech into your application. When you run Windows Speech Recognition the first time, you’re encouraged to complete the tutorial, which actually doubles as training the recognizer to your voice.

You can also do additional training, including spelling and recording individual words and phrases. That latter capability came in pretty handy when my colleague, Bob Familiar, was working with the Answer Point folks to capture a doctor’s verbal diagnosis in a medical record. As you might expect, words like hematoma don’t always come out the way you want: before I trained the recognizer on the word ‘hematoma’, I got ‘he told FEMA’ and ‘seem a Tacoma’, for example.

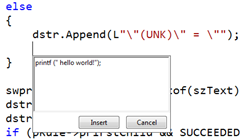

The built-in speech recognizer automatically outputs to whatever text-enabled application you happen to be in. In this case, Answer Point was building a WPF (and touch-enabled!) application, so the text was output to a FlowDocument element. And if your application isn’t text-enabled, as Visual Studio appears not to be, Windows 7 introduces the dictation scratchpad, which captures your text and provides a simple interface for copying it into the document.

The guys from Panopto, though, had a completely different requirement for speech-to-text capability. Their application captures live events, like training sessions and presentations, via multiple feeds (video, slides, audio, and transcript) and coalesces the content into a single viewing experience. But here’s the cool part: you can search the content, and the viewer will automatically bring you to the relevant portion of the recording, again keeping the slideware, video and audio all in sync. They’ve been relying on a third-party manual transcription service, so while at Incubation Week we took a shot at automating this. Here’s roughly what we did:

We began by instantiating a System.Speech.Recognition.SpeechRecognitionEngine. The System.Speech namespace is part of the .NET Framework 3.0, which of course made life quite easy for those of us writing managed code. The Speech API (SAPI 5.3) is also part of the Windows SDK now, making it straightforward for unmanaged developers to incorporate speech capabilities too. Check the /samples/winui/speech subdirectory of the Windows 7 SDK for a number of examples.

In this case, the input to the engine was not a live voice but a WAV file extracted from their recordings, so instead of using the default audio device, we called the SetInputToWaveFile method. We then invoked RecognizeAsync on the recognition engine instance, setting in motion a series of events as the engine processed the audio stream:

- SpeechDetected fires first, when the engine identifies speech in the input.
- SpeechHypothesized fires as the recognizer forms a hypothesis about a word or phrase; you typically don’t put code here unless you’re debugging.
- SpeechRecognized fires next, and it is where you access the speech-to-text result and apply it where needed. The Result property of the event arguments is an instance of RecognitionResult, which provides a wealth of information about the recognized speech, including alternate candidate transcriptions (each with a confidence score) as well as details on semantics and which grammar the text matched. [Grammars let you supply custom rules, written in XML or built dynamically in code, that map to common phrases you might detect, for example, “Press {number} for {department}”. The managed API also supports the W3C Speech Recognition Grammar Specification (SRGS).]
- Alternatively, SpeechRecognitionRejected fires instead of SpeechRecognized when there’s no suitable transcription of the speech within a reasonable confidence level.
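
The event order above can be sketched as a small simulation. This is not the System.Speech API, which is a .NET library; it is a hypothetical Python model in which `Alternate`, `Outcome`, `process_utterance`, the made-up confidence values, and the 0.6 threshold are all assumptions for illustration. It shows how one result carries ranked candidate transcriptions with confidences, and how a low top score leads to a rejection rather than a recognition.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Alternate:
    """Hypothetical candidate transcription with a confidence in [0, 1]."""
    text: str
    confidence: float


@dataclass
class Outcome:
    """Terminal event name plus the candidates ranked best-first."""
    event: str
    alternates: List[Alternate] = field(default_factory=list)

    @property
    def best(self) -> Optional[Alternate]:
        return self.alternates[0] if self.alternates else None


def process_utterance(candidates: List[Alternate],
                      on_event: Callable[[str, Optional[str]], None],
                      threshold: float = 0.6) -> Outcome:
    """Simplified model of the event order for one utterance.

    Order: SpeechDetected, SpeechHypothesized, then exactly one of
    SpeechRecognized or SpeechRecognitionRejected. The threshold is an
    assumed cut-off for this sketch, not a System.Speech setting.
    """
    on_event("SpeechDetected", None)
    # Rank candidates from most to least confident.
    ranked = sorted(candidates, key=lambda a: a.confidence, reverse=True)
    if ranked:
        # Interim guess; a real engine may raise this event several times.
        on_event("SpeechHypothesized", ranked[0].text)
    if ranked and ranked[0].confidence >= threshold:
        on_event("SpeechRecognized", ranked[0].text)
        return Outcome("SpeechRecognized", ranked)
    # Nothing is confident enough: reject instead of recognizing.
    on_event("SpeechRecognitionRejected", None)
    return Outcome("SpeechRecognitionRejected", ranked)


if __name__ == "__main__":
    log = []
    result = process_utterance(
        [Alternate("he told FEMA", 0.41), Alternate("hematoma", 0.87)],
        lambda name, text: log.append((name, text)))
    print(result.event, result.best.text)
    print(log)
```

With these invented candidates, the higher-confidence ‘hematoma’ wins and SpeechRecognized fires; a lone low-confidence candidate would trigger SpeechRecognitionRejected instead. A real handler would read the equivalent information from RecognitionResult (its Text, Confidence and Alternates properties).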

Armed with this, the guys from Panopto made a pretty good foray into speech indexing, especially given the timeframe. Looking forward, we’re going to take a closer look at what Microsoft Research has been doing on audio indexing with project MAVIS.
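
Speech indexing of the kind Panopto needs comes down to keeping each recognized phrase paired with its offset in the recording, so a search hit can seek the player. (In System.Speech, the offset of the recognized audio is available from RecognitionResult.Audio.AudioPosition.) The sketch below is a plain inverted index over timestamped segments, written in Python; the `Segment` type, the `tokenize`, `build_index` and `find` helpers, and the sample timestamps are hypothetical, and it makes no claim about how MAVIS or Panopto’s product implement search.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Segment:
    """A recognized phrase and its start offset in the recording, in seconds."""
    start: float
    text: str


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_index(segments: List[Segment]) -> Dict[str, Set[int]]:
    """Inverted index: word -> positions of the segments that contain it."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for i, seg in enumerate(segments):
        for word in tokenize(seg.text):
            index[word].add(i)
    return index


def find(segments: List[Segment], index: Dict[str, Set[int]], query: str) -> List[float]:
    """Return sorted start offsets of segments containing every query word."""
    words = tokenize(query)
    if not words:
        return []
    # Intersect the posting sets so only segments matching all words remain.
    hits = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(segments[i].start for i in hits)


if __name__ == "__main__":
    transcript = [
        Segment(12.0, "Welcome to the training session"),
        Segment(95.5, "Next we look at the speech recognition engine"),
        Segment(240.0, "The recognition engine raises events"),
    ]
    idx = build_index(transcript)
    # Each offset is where the viewer would seek the synced video and slides.
    print(find(transcript, idx, "recognition engine"))
```

Requiring every query word keeps results tight for short phrase searches; a production system would more likely rank partial matches and tolerate recognition errors than demand exact word hits.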

Anyway, it was a great week that let me go a little deeper with customers than I normally get to, and in a technology area I had been completely oblivious to!