Building a physical bot using the BotFramework #FreddyTheFishBot @FutureDecoded 2016

Yesterday, at the end of my talk on the BotFramework with Simon Michael at FutureDecoded, I unveiled my latest BotFramework creation. This was a fun project that shows how you can take a bot from the digital world into the physical world using the technologies covered in the Artificial Intelligence track with Martin Kearn and Amy Nicholson. During the day, Amy covered Machine Learning and demonstrated how we could use data from previous FutureDecoded events to predict the likely audiences for the different sessions. Martin covered the growing number of Cognitive Services currently available and did some great demos of their capabilities. Simon and I discussed the rise of bots and the positioning of the BotFramework within the Cortana Intelligence Suite, and demonstrated how to build smart bots. The source code for the intelligent bot I built during the session is available here.

However, I wanted to take it one stage further and demonstrate the new types of conversational experience that can be created by using all of this technology together. The DirectLine channel within the BotFramework allows us to integrate our bot into any application, so the only limit here is your imagination. Roll on FreddyTheFishBot!

First, I should point out that although this is a physical bot, you can also interact with it over any of the other channels, such as Skype. Here's an overview of the technology crammed into this thing:

In Visual Studio I created FreddyTheFishBot using the BotFramework Bot Builder SDK. Then I added some "smarts" to it from Microsoft Cognitive Services, namely LUIS for language understanding and Bing News Search for returning news stories. It also integrates with a Machine Learning model trained on data from previous FutureDecoded events. The bot is deployed to Azure App Service to make it publicly addressable within the BotFramework. Finally, on a Raspberry Pi 2 running Windows 10 IoT Core, I built a Universal Windows Platform (UWP) application that uses the DirectLine API to broker messages to and from my bot.
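The UWP client itself isn't shown here, so the following is a minimal Python sketch (not the C# code running on the Pi) of the DirectLine calls such a client makes, assuming the Direct Line 3.0 REST API: open a conversation, post the recognised speech as a `message` activity, then pick the bot's replies out of the activity set returned when polling. The secret, user id and conversation id are hypothetical placeholders, the requests are only built rather than sent, and filtering replies by sender id is one simple approach, not the only one.

```python
import json
import urllib.request

# Assumed base URL of the Direct Line 3.0 REST API.
DIRECTLINE_BASE = "https://directline.botframework.com/v3/directline"


def start_conversation_request(secret: str) -> urllib.request.Request:
    """Build the POST that opens a new Direct Line conversation."""
    return urllib.request.Request(
        f"{DIRECTLINE_BASE}/conversations",
        method="POST",
        headers={"Authorization": f"Bearer {secret}"},
    )


def send_message_request(secret: str, conversation_id: str,
                         user_id: str, text: str) -> urllib.request.Request:
    """Build the POST that sends speech-to-text output to the bot as a message activity."""
    activity = {"type": "message", "from": {"id": user_id}, "text": text}
    return urllib.request.Request(
        f"{DIRECTLINE_BASE}/conversations/{conversation_id}/activities",
        data=json.dumps(activity).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {secret}",
            "Content-Type": "application/json",
        },
    )


def bot_replies(activity_set: dict, user_id: str) -> tuple[list[str], str]:
    """Return the text of messages not sent by this user, plus the watermark for the next poll."""
    replies = [
        a.get("text", "")
        for a in activity_set.get("activities", [])
        if a.get("type") == "message" and a.get("from", {}).get("id") != user_id
    ]
    return replies, activity_set.get("watermark", "")


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    req = send_message_request("YOUR_DIRECTLINE_SECRET", "conv-123", "freddy-uwp", "what's new in AI")
    print(req.get_method(), req.full_url)
```

On the device, the replies returned by `bot_replies` would be handed to text to speech, and the watermark passed back on the next GET so only new activities are fetched.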

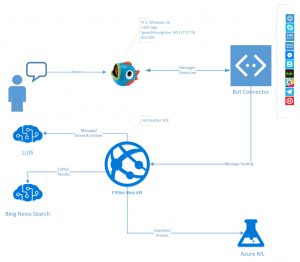

The overall architecture of FreddyTheFishBot looks like this:

The user speaks in natural language, and the UWP app performs voice recognition to convert the speech to text (STT). This text is then sent via the Bot Connector to FreddyTheFishBot, which applies LUIS to decipher the intent of the command or message and extract any entities (parameters/variables) from it. If it's a news search request, the Bing News Search API is invoked with the entities extracted by LUIS. If the request relates to the FutureDecoded Machine Learning model, it is routed to that model's published API for a response. Once the response has been constructed, it is routed back through the Bot Connector and down the DirectLine API to my UWP app, which then performs text to speech (TTS) so that Freddy can talk.
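The routing step in the bot can be pictured as a dispatch on the top-scoring intent. This is a minimal Python sketch, not the bot's actual code: the intent names (`SearchNews`, `PredictAudience`), entity types (`Topic`, `Session`), the 0.5 confidence threshold and both handler functions are hypothetical stand-ins for the real LUIS model, the Bing News Search call and the published ML endpoint. The input dict is assumed to be shaped loosely like a LUIS v2 result (`topScoringIntent` plus an `entities` list).

```python
from typing import Callable


# Hypothetical handler standing in for a Bing News Search call.
def search_news(entities: dict[str, str]) -> str:
    topic = entities.get("Topic", "technology")
    return f"Here are the latest headlines about {topic}."


# Hypothetical handler standing in for the published ML model API.
def predict_audience(entities: dict[str, str]) -> str:
    session = entities.get("Session", "that session")
    return f"Let me check the predicted audience for {session}."


# Hypothetical intent names mapped to their handlers.
HANDLERS: dict[str, Callable[[dict[str, str]], str]] = {
    "SearchNews": search_news,
    "PredictAudience": predict_audience,
}

MIN_SCORE = 0.5  # assumed confidence threshold


def route(luis_result: dict) -> str:
    """Pick a handler from the top-scoring intent and pass it the extracted entities."""
    top = luis_result.get("topScoringIntent", {})
    handler = HANDLERS.get(top.get("intent", ""))
    if handler is None or top.get("score", 0.0) < MIN_SCORE:
        return "Sorry, I didn't catch that. Could you say it again?"
    # Flatten the entity list into {type: text}; a later entity of the same type wins.
    entities = {e["type"]: e["entity"] for e in luis_result.get("entities", [])}
    return handler(entities)


if __name__ == "__main__":
    result = {
        "query": "what's the latest news on bots",
        "topScoringIntent": {"intent": "SearchNews", "score": 0.92},
        "entities": [{"entity": "bots", "type": "Topic"}],
    }
    print(route(result))  # Here are the latest headlines about bots.
```

Keeping the mapping in a dictionary means a new skill is one handler plus one entry, and the threshold stops a low-confidence guess (for example, from a noisy microphone) from triggering the wrong service.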

Given the size of the conference room at FutureDecoded, I was concerned that FreddyTheFishBot wouldn't cope with the STT, as I was using a cheap microphone component. So I recorded an interview with him, just in case.

[video width="854" height="480" mp4="https://msdnshared.blob.core.windows.net/media/2016/11/freddythefishbot.mp4"][/video]