Multitouch Event System for Unity 5.5 (and 5.3, 5.4)

I mentioned in my first post about Unity Multitouch processing that the 5.5 beta is making some private items protected to enable better derivation. This makes it possible to integrate the Multitouch Event System without having to build it into UnityEngine.UI.

There is another way of doing such integration--via reflection. This gets rather cumbersome, and the compiler cannot help you get things right. As it turns out, the changes to allow integration without reflection did not get made until Beta 11 (and even at this point there's still one that isn't available yet). So the new repository, https://bitbucket.org/johnseghersmsft/unity-multitouch, may change if this need for reflection changes. The remaining piece is a kind of sneaky back door to get the multi-selection working, and Unity may choose to do that differently :)

This repository has five branches: 5.3, 5.4, 5.5, 5.3.testonly, and 5.3.testonly. The first three branches provide a Multitouch test application along with the Multitouch Event System in the Plugins directory. The *.testonly branches only provide the test application for working with the UnityEngine.UI fork referenced in my prior blog entry. Branch 5.5 has two tags on it for Beta 10 and Beta 11. The Beta 10 version has to use the same reflection methods as 5.3 and 5.4.

At this point, the new repository should be considered the most up-to-date project and the custom UnityEngine.UI version will not be updated going forward.

The Test App

Figure: Screenshot of the multitouch test app.

The test app has a single scene, "main". It has a Scroll View on the left with a bunch of simple items in it. There is a normal button. And there is a target panel with a script which handles multi-touch events.

On the right side of the screen is also a text control showing debugging information about the touch points and how they are being processed. Each of the controls has a script which collects information about received events (TouchStatusCollector) so they can be displayed by this debugging tool.

Since the editor does not support multitouch, it is best to build the app and run it outside of the editor to get the full sense of how it works. You can get some sense of it in the editor (or on non-touch screens) by using a keyboard cheat: hold down the Control key when clicking with the mouse. This causes a "fake touch" to be held as though it were still active, which lets you simulate pinch and rotation gestures. It cannot simulate the two-fingered Pan gesture, since that requires both points to be moving in the same direction.

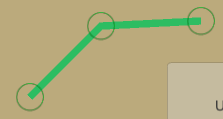

Along with these controls, there is an object called TouchTrackerOverlay. This contains a script that displays the touch points and the clusters with which they are associated. For example, this image shows how three touches in a cluster might look, with the green lines illustrating the spanning tree which connects the points.

Figure: Screengrab showing a three-touch cluster as rendered by the ShowTouchSpanningTree debugging component.

Play with this a little. Use both hands and interact with multiple controls at once. Try starting with your hands close together and then pull them apart, and you will see one cluster split into two. Move them back together and the clusters will stay separated. Each time you add a new touch, it is added to the cluster closest to the touch--if it's close enough. The maximum distance is set on the Multiselect Event System component of the EventSystem game object. I've made it short (3 inches) for this demo. In normal usage, on a large display, something in the 5-6 inch range may be more appropriate.

Unity Mouse Events

The normal Unity EventSystem is designed much more around mouse clicks than around touch. When the user presses the left mouse button while pointing at a button on the screen, for example, the Unity Event System performs a raycast from that point. The raycast identifies all of the objects which are shown (or would be shown) at that pixel on the screen. In the case of Button1, there are three such objects: the Canvas, the Button1 game object, and the Text game object. Canvas rendering places child objects on top of parent objects, so the Text object is the top-most game object at that pixel.

The EventSystem then executes the OnPointerDown event on the hierarchy, starting with the topmost object. So it examines the Text object of Button1 to see if it has a handler for the event (i.e. if there is a component that implements the IPointerDownHandler interface). The Text object does not, so it walks up the tree to the parent: Button1. The Button component does implement IPointerDownHandler--so it becomes the target object for handling the further mouse events until the mouse button is released. This can be seen by examining the PointerEventData object for future mouse events, where the eventData.pointerPress member is set to this Button's game object.
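The walk up the hierarchy can be sketched in plain C#. This is only an illustration and not Unity's code: UiNode, Handles, and FindHandler are hypothetical names standing in for game objects and their components. (In Unity itself, the lookup is done for you by the event system, for example through ExecuteEvents.GetEventHandler.)

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a Unity GameObject in a UI hierarchy.
public sealed class UiNode
{
    public string Name { get; private set; }
    public UiNode Parent { get; private set; }

    // Names of the handler interfaces implemented by components on this node.
    private readonly HashSet<string> _handlers = new HashSet<string>();

    public UiNode(string name, UiNode parent, params string[] handlers)
    {
        Name = name;
        Parent = parent;
        foreach (var h in handlers) _handlers.Add(h);
    }

    public bool Handles(string handlerInterface)
    {
        return _handlers.Contains(handlerInterface);
    }
}

public static class EventBubbling
{
    // Walks from the raycast hit up through its ancestors and returns the
    // first node that handles the event, or null if no node does.
    public static UiNode FindHandler(UiNode hit, string handlerInterface)
    {
        for (var node = hit; node != null; node = node.Parent)
        {
            if (node.Handles(handlerInterface)) return node;
        }
        return null;
    }
}
```

With a Canvas > Button1 > Text hierarchy where only Button1 handles IPointerDownHandler, calling FindHandler on the Text node returns Button1, which matches the walk described above.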

Two Touches, One Event

When you touch the screen with one finger, Unity essentially does the same thing as above. However, when you touch with two fingers, Unity's normal processing doesn't handle things the way you might want. It still generates a single OnPointerDown event, and other pointer events such as drag and release. However, it uses the centroid of the touches as the screen point for the raycast used to determine the event target. So if you touch the screen with two fingers on either side of a button, the centroid (the common center point of all of the touches) will be halfway between your fingers, thus sending the events to the button.

Similarly, if you're running on an 84" Surface Hub, and two people press the screen on opposite sides, something in the middle will be pressed.
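To make the centroid problem concrete, here is a minimal sketch of the calculation. It uses System.Numerics.Vector2 instead of UnityEngine.Vector2 so it can run outside Unity, and TouchMath.Centroid is a hypothetical helper, not part of Unity or of the Multitouch Event System.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class TouchMath
{
    // Returns the arithmetic mean of the touch positions (the centroid).
    public static Vector2 Centroid(IList<Vector2> touches)
    {
        if (touches == null || touches.Count == 0)
            throw new ArgumentException("At least one touch is required.", "touches");

        var sum = Vector2.Zero;
        foreach (var t in touches) sum += t;
        return sum / touches.Count;
    }
}
```

Two touches at (100, 300) and (300, 300) give a centroid of (200, 300), so a button sitting between the two fingers would receive the press even though neither finger is on it.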

The object handling the events could examine the Input.touches array to look at all of the individual touch points.

Touch Clusters

To solve this I group touches into clusters, and each cluster is processed similarly to the way a single mouse click is handled. In each cluster, the earliest touch (or the first one processed in the case of simultaneous touches) is considered the Active Touch and is used for defining the raycast position. In the Surface Hub case above, there would be two events, one for each cluster on either side of the display. Each event contains information about the points in the associated cluster. (Note that a cluster may only have a single touch.)
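The grouping rule can be sketched roughly as follows. This is not the code from the repository. It assumes that "closest cluster" means the cluster containing the touch nearest to the new one, and that the maximum distance in inches is converted to pixels using the screen DPI. TouchCluster, TouchClusterer, and their members are hypothetical names, and System.Numerics.Vector2 stands in for UnityEngine.Vector2.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public sealed class TouchCluster
{
    // Touches in arrival order; the first entry plays the role of the Active Touch.
    public readonly List<Vector2> Touches = new List<Vector2>();

    public Vector2 ActiveTouch { get { return Touches[0]; } }
}

public sealed class TouchClusterer
{
    private readonly float _maxDistancePixels;

    public readonly List<TouchCluster> Clusters = new List<TouchCluster>();

    // maxDistanceInches and dpi are illustrative parameters (assumptions).
    public TouchClusterer(float maxDistanceInches, float dpi)
    {
        _maxDistancePixels = maxDistanceInches * dpi;
    }

    // Adds a new touch to the nearest cluster if it is within range;
    // otherwise starts a new cluster with this touch as its first member.
    public TouchCluster AddTouch(Vector2 position)
    {
        TouchCluster best = null;
        float bestDistance = float.MaxValue;

        foreach (var cluster in Clusters)
        {
            foreach (var touch in cluster.Touches)
            {
                float d = Vector2.Distance(touch, position);
                if (d < bestDistance)
                {
                    bestDistance = d;
                    best = cluster;
                }
            }
        }

        if (best == null || bestDistance > _maxDistancePixels)
        {
            best = new TouchCluster();
            Clusters.Add(best);
        }

        best.Touches.Add(position);
        return best;
    }
}
```

Because membership is only decided when a touch is added, a sketch like this also shows why clusters that have split apart do not merge again when the hands move back together.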

Handling Multitouch Events

Let's take a look at the script attached to the multitouch target panel: Assets/Samples/TouchSizePosition.cs.

    [RequireComponent(typeof(RectTransform))]
    public class TouchSizePosition : UIBehaviour,
        IPanHandler, IPinchHandler, IRotateHandler,
        IPointerDownHandler, IPointerClickHandler

The RequireComponent attribute tells us that the Transform for the game object on which the component is placed must be a RectTransform. The interfaces provide the ability to receive Pan (the multifinger equivalent of the Unity-defined Drag), Pinch, and Rotate gestures as well as the normal pointer click handlers.

    public void OnPan(SimpleGestures sender, MultiTouchPointerEventData eventData, Vector2 delta)
    {
        var rcTransform = GetTransformAndSetLastSibling();
        TranslateByScreenDelta(rcTransform, eventData.delta, eventData.pressEventCamera);
        // A pan should not also be treated as a click.
        eventData.eligibleForClick = false;
    }

    // Brings this panel to the front of its siblings and returns its RectTransform.
    private RectTransform GetTransformAndSetLastSibling()
    {
        var rcTransform = (RectTransform)transform;
        rcTransform.SetAsLastSibling();
        return rcTransform;
    }

    // Converts the anchored position to screen space, applies the screen-space
    // delta, then converts the result back into the parent's local space.
    public static void TranslateByScreenDelta(RectTransform rc, Vector2 delta, Camera cam)
    {
        var pos = rc.anchoredPosition;
        var parent = (RectTransform)rc.parent;
        var worldPos = parent.TransformPoint(pos);
        var screenPoint = RectTransformUtility.WorldToScreenPoint(cam, worldPos);
        screenPoint += delta;
        RectTransformUtility.ScreenPointToLocalPointInRectangle(parent, screenPoint, cam, out pos);
        rc.anchoredPosition = pos;
    }

IPanHandler defines a single method, OnPan. The event is sent by the SimpleGestures Multitouch Gesture Module. (In a future blog post I'll dive more into Gesture Modules--the code that interprets finger motions.) The eventData parameter provides all the information normally available in a PointerEventData (MultiTouchPointerEventData derives from it) as well as information about the touch cluster and about how the cluster has changed--such as the position of the cluster's centroid. In the case of multiple such targets, I want the one currently being moved to also be the top-most visual object, so the handlers call the helper function GetTransformAndSetLastSibling. TranslateByScreenDelta uses the event parameter delta to compute how much to move the RectTransform's anchoredPosition. (Note that the delta parameter is the same as the eventData.centroidDelta value.)

    public void OnPinch(SimpleGestures sender, MultiTouchPointerEventData eventData,
        Vector2 pinchDelta)
    {
        var rcTransform = GetTransformAndSetLastSibling();
        var size = rcTransform.rect.size;
        // Scale relative to the current size; the sign of pinchDelta.x gives the direction.
        float ratio = Mathf.Sign(pinchDelta.x) * (pinchDelta.magnitude / size.magnitude);
        size *= 1.0f + ratio * PinchFactor;
        // Clamp to the configured limits.
        size = Vector2.Max(size, MinimumSize);
        size = Vector2.Min(size, MaximumSize);
        rcTransform.SetSizeWithCurrentAnchors(RectTransform.Axis.Horizontal, size.x);
        rcTransform.SetSizeWithCurrentAnchors(RectTransform.Axis.Vertical, size.y);
    }

The IPinchHandler also defines a single method, OnPinch. Here the pinchDelta is the change in distance between the points forming the pinch. This is pretty straightforward for two fingers, but it works for more fingers as well. If you're curious, this is an aggregate of how much each touch moves toward or away from the centroid.
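As a rough illustration of the "aggregate" idea, the sketch below measures how far, on average, the touches sit from their centroid and reports the change between two frames. This is an assumption about one way to do it, not the SimpleGestures implementation: the real pinchDelta is a Vector2, whereas this sketch returns a single float, and PinchSketch and its members are hypothetical names. System.Numerics.Vector2 stands in for UnityEngine.Vector2.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class PinchSketch
{
    private static Vector2 Centroid(IList<Vector2> points)
    {
        var sum = Vector2.Zero;
        foreach (var p in points) sum += p;
        return sum / points.Count;
    }

    // Mean distance of the points from their own centroid.
    private static float MeanSpread(IList<Vector2> points)
    {
        var c = Centroid(points);
        float total = 0f;
        foreach (var p in points) total += Vector2.Distance(p, c);
        return total / points.Count;
    }

    // Positive when the touches spread apart, negative when they move together.
    // Both lists must hold the same touches in the same order.
    public static float PinchAmount(IList<Vector2> previous, IList<Vector2> current)
    {
        if (previous.Count != current.Count || previous.Count < 2)
            throw new ArgumentException("Need the same two or more touches in both frames.");

        return MeanSpread(current) - MeanSpread(previous);
    }
}
```

Because the measurement is relative to the centroid, the same calculation works for two, three, or more fingers, and a pure pan (all touches moving together) yields no pinch.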

    public void OnRotate(SimpleGestures sender, MultiTouchPointerEventData eventData, float delta)
    {
        var rcTransform = GetTransformAndSetLastSibling();
        rcTransform.Rotate(new Vector3(0, 0, -delta));
    }

Similarly, the OnRotate method is the implementation of the IRotateHandler interface. The delta passed here is an angle (in degrees) based on the change in angle of each point from the centroid.
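One plausible way to derive such an angle is to average the signed change in each touch's angle about the centroid. The sketch below does this under stated assumptions: it is not the SimpleGestures code, the sign convention (positive is counterclockwise with the y axis pointing up) is my choice and may differ from the module's, and RotateSketch and its members are hypothetical names. System.Numerics.Vector2 stands in for UnityEngine.Vector2.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class RotateSketch
{
    private static Vector2 Centroid(IList<Vector2> points)
    {
        var sum = Vector2.Zero;
        foreach (var p in points) sum += p;
        return sum / points.Count;
    }

    // Average signed change in angle (degrees) of each touch about the centroid.
    // Both lists must hold the same touches in the same order.
    public static float RotationDegrees(IList<Vector2> previous, IList<Vector2> current)
    {
        if (previous.Count != current.Count || previous.Count < 2)
            throw new ArgumentException("Need the same two or more touches in both frames.");

        var c0 = Centroid(previous);
        var c1 = Centroid(current);
        double total = 0.0;

        for (int i = 0; i < previous.Count; i++)
        {
            var a = previous[i] - c0;
            var b = current[i] - c1;
            double before = Math.Atan2(a.Y, a.X);
            double after = Math.Atan2(b.Y, b.X);
            double delta = (after - before) * 180.0 / Math.PI;

            // Wrap into (-180, 180] so crossing the +/-180 boundary does not cause a jump.
            while (delta > 180.0) delta -= 360.0;
            while (delta <= -180.0) delta += 360.0;

            total += delta;
        }

        return (float)(total / previous.Count);
    }
}
```

Two touches at (1, 0) and (-1, 0) that move to (0, 1) and (0, -1) have each turned a quarter circle about the centroid, so the sketch reports roughly 90 degrees.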

Normal Unity Events

The normal Unity pointer events work as expected--but there are a few things to note. As I mentioned earlier, many multitouch gestures begin as a single touch since we humans are not necessarily going to touch the screen at exactly the same moment with each finger. Therefore, the IPointerDownHandler will be invoked prior to many multitouch events. OnPointerDown is often not used to perform an action, but may change the visual state of a control. The Button, for example, will change color to show it is pressed (purple in the sample application). The other reason to implement IPointerDownHandler is to inform the Unity Event system that this control wants to receive the OnPointerUp and OnPointerClick events.

If some control ControlA only implements IPointerClickHandler, and no other component in the parent hierarchy implements IPointerDownHandler, then ControlA will receive the OnPointerClick event. However, if ControlP, the parent (or a further ancestor in the hierarchy) of ControlA, implements IPointerDownHandler, then ControlP will be considered the target for all of the future such events, and ControlA will not receive the OnPointerClick event.

    // Handling pointer-down makes this object the press target,
    // so it also receives the later OnPointerClick.
    public void OnPointerDown(PointerEventData eventData)
    {
        eventData.Use();
    }

    // A click brings the panel to the front and resets its size and rotation.
    public void OnPointerClick(PointerEventData eventData)
    {
        var rcTransform = GetTransformAndSetLastSibling();
        rcTransform.SetSizeWithCurrentAnchors(RectTransform.Axis.Horizontal, _originalSize.x);
        rcTransform.SetSizeWithCurrentAnchors(RectTransform.Axis.Vertical, _originalSize.y);
        rcTransform.rotation = _originalRotation;
    }

Disambiguation

Try this with the built test application: scroll the list up and down with one finger, then add a second finger so you're dragging with two fingers. See how the list stops scrolling as soon as the second finger is added? And it continues to ignore the drag if you remove the second finger.

When the SimpleGestures module identifies a cluster for which it needs to send an event, it also clears any single-press state for that cluster. Therefore, the ScrollRect sees an OnEndDrag event and an OnPointerUp event (but no OnPointerClick). At this point, single-touch processing is disabled for this cluster.

There are also two selections on the SimpleGestures module which affect how other Gesture Modules are treated, based on enough touch points being seen in a cluster and/or on events being sent. These control whether other Gesture Modules are allowed to process the current input. More on this in a later post.

Displaying Events for Debugging

You will note that the debugging information shows the Scroll Rect receiving OnDrag events when only one finger is used, and OnPan events after a second finger is added. This debugging information is being collected by adding the TouchStatusCollector script to any game object for which you want this information captured. This component automatically registers itself with the ShowTouchStatus component to contribute debugging information to that display.

One thing to note about Unity Event processing: When a game object receives an event, ALL components on that game object will receive the event if they have implemented the interfaces. So adding TouchStatusCollector to a control that is already receiving those events will not affect them. However, since TouchStatusCollector implements most of the interfaces, it may affect the handling of those events by parents of the object.

Using the Multitouch Event System

Figure: EventSystem game object showing components for Multitouch processing.

Copy the Assets/Plugins/Multitouch directory from this test app into the same location in your project. Then add an EventSystem object (or edit the one you already have--Unity will automatically add one when you add a Canvas object if there is no EventSystem in your scene) with the components shown here (MultiselectEventSystem, Win10TouchInputModule, SimpleGestures, and MouseTouches).

Then implement the interfaces for the Multitouch events you wish to handle on the components that should handle them.