SharePoint 2013: Crawl status live monitoring

Whenever I set up a new content source in SharePoint 2013 search, or change the processing in the Content Enrichment Web Service, I need to monitor the progress of the crawl. Typically I need to:

- Check if I get any warnings or errors

- Check if the Documents Per Second (DPS) / throughput was affected

- Abort the crawl if it results in errors
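Throughput here means documents per second: the number of items processed successfully divided by the seconds elapsed since the crawl started. The monitoring loop in the script below derives its DPS figure the same way. The helper below is a minimal sketch of that calculation; the function name `Get-CrawlDps` and its parameters are hypothetical and not part of SharePoint. It takes the current time as a parameter so that the result is reproducible:

```powershell
# Hypothetical helper (not part of SharePoint or the original script):
# average documents per second since the crawl started, rounded to 2 decimals.
function Get-CrawlDps {
    param(
        [long]$Successes,                                      # items processed successfully so far
        [datetime]$CrawlStartUtc,                              # crawl start time, in UTC
        [datetime]$NowUtc = (Get-Date).ToUniversalTime()       # "now", in UTC
    )
    $seconds = ($NowUtc - $CrawlStartUtc).TotalSeconds
    # Avoid dividing by zero (or a negative span) right after the crawl starts
    if ($seconds -le 0) { return 0 }
    return [Math]::Round($Successes / $seconds, 2)
}

# Example: 1,500 items processed 10 minutes (600 seconds) after the start
$start = [datetime]'2013-06-01T12:00:00'
Get-CrawlDps -Successes 1500 -CrawlStartUtc $start -NowUtc $start.AddMinutes(10)   # → 2.5
```

Note that this is an average over the whole crawl so far rather than an instantaneous rate, so a sudden slowdown shows up gradually.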

In the old days of FAST ESP I would typically start feeding some content to the index and then monitor it with the doclog tool. There is no equivalent tool in SharePoint 2013. The closest thing I could find was the CrawlStatus and CrawlState properties of a content source. However, these did not seem to be live: in my experience they usually showed the status of a previous crawl.

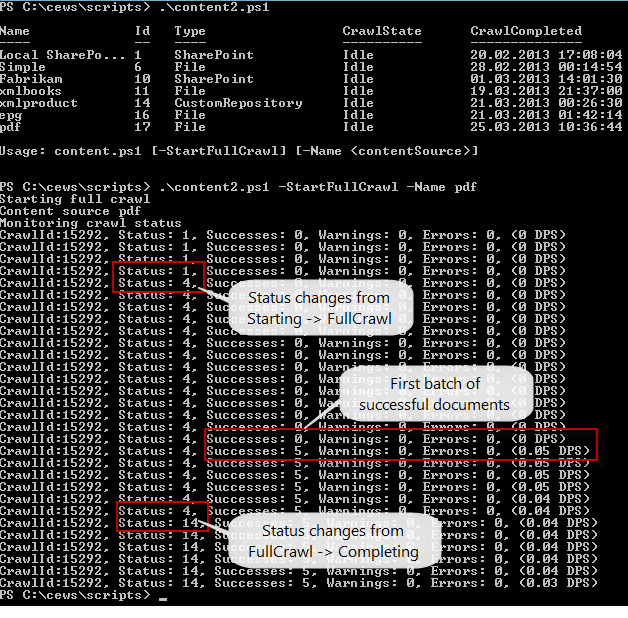
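If you want to check these properties yourself, one way is to list them for every content source from the SharePoint 2013 Management Shell. This is a sketch that assumes a farm with a single Search service application; CrawlStarted and CrawlCompleted are included so you can compare the reported state and status against the timestamps SharePoint has recorded for the content source:

```powershell
# Assumes the SharePoint 2013 Management Shell (Microsoft.SharePoint.PowerShell snap-in loaded)
# and a farm with a single Search service application.
$ssa = Get-SPEnterpriseSearchServiceApplication

# Show the crawl state and status SharePoint reports for each content source,
# together with the recorded crawl start and completion times.
Get-SPEnterpriseSearchCrawlContentSource -SearchApplication $ssa |
    Select-Object Name, CrawlState, CrawlStatus, CrawlStarted, CrawlCompleted |
    Format-Table -AutoSize
```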

My colleague Brian Pendergrass gave me an example of how I could query the Search Administration database directly to get the live crawl status. After some fiddling I managed to get a script working that starts my crawl and then monitors its progress with live statistics until it's done. Here's an example of the script in action:

Here's the full script (content2.ps1). It is also available for download at the end of this article.

```powershell
# content2.ps1: start a full crawl of a content source and/or monitor active crawls.
param([string]$Name, [switch]$StartFullCrawl, [switch]$MonitorActiveCrawls)

# Load the SharePoint cmdlets when running from a plain PowerShell console
if ($null -eq (Get-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue))
{
    Add-PSSnapin Microsoft.SharePoint.PowerShell
}

# Query the Search Administration database for crawls that are still active
# and aggregate the per-component statistics for each of them.
function getCrawlStatus($ssa)
{
    $ret = $null
    if ($ssa)
    {
        $db = $ssa.SearchAdminDatabase.Name
        $queryString  = "SELECT "
        $queryString += "MSSCrawlHistory.CrawlId, "
        $queryString += "MAX (MSSCrawlHistory.Status) AS Status, "
        $queryString += "MAX (MSSCrawlHistory.SubStatus) AS SubStatus, "
        $queryString += "MAX (MSSCrawlHistory.StartTime) AS CrawlStart, "
        $queryString += "SUM (MSSCrawlComponentsStatistics.SuccessCount) AS Successes, "
        $queryString += "SUM (MSSCrawlComponentsStatistics.ErrorCount) AS Errors, "
        $queryString += "SUM (MSSCrawlComponentsStatistics.WarningCount) AS Warnings, "
        $queryString += "SUM (MSSCrawlComponentsStatistics.RetryCount) AS Retries "
        $queryString += "FROM [$db].[dbo].[MSSCrawlHistory] WITH (nolock) "
        $queryString += "INNER JOIN [$db].[dbo].[MSSCrawlComponentsStatistics] "
        $queryString += "ON MSSCrawlHistory.CrawlId = MSSCrawlComponentsStatistics.CrawlId "
        # Crawls with Status 5, 11 or 12 are treated as no longer active.
        # Note the trailing space: without it the WHERE and GROUP BY clauses run together.
        $queryString += "WHERE MSSCrawlHistory.Status NOT IN (5, 11, 12) "
        $queryString += "GROUP BY MSSCrawlHistory.CrawlId"

        $dataSet = New-Object System.Data.DataSet "ActiveCrawlReport"
        $adapter = New-Object System.Data.SqlClient.SqlDataAdapter($queryString, $ssa.SearchAdminDatabase.DatabaseConnectionString)
        # Fill() returns the number of rows read; 0 means there are no active crawls
        if ($adapter.Fill($dataSet) -gt 0)
        {
            $ret = $dataSet.Tables[0] | Select-Object CrawlId, CrawlStart, Status, SubStatus, Successes, Errors, Warnings
        }
    }
    return $ret
}

# Poll the active crawls every 5 seconds and print live statistics until none are left
function monitorCrawlStatus($ssa)
{
    if ($null -eq (getCrawlStatus $ssa))
    {
        Write-Host "No active crawls"
        return
    }

    Write-Host "Monitoring crawl status"
    while ($cs = getCrawlStatus $ssa)
    {
        # StartTime is assumed to be stored in UTC, so compare it with the current UTC time
        $tnow = (Get-Date).ToUniversalTime()

        # Several crawls can be active at once; report each one separately
        foreach ($crawl in @($cs))
        {
            $seconds = ($tnow - $crawl.CrawlStart).TotalSeconds
            $dps = 0
            if ($seconds -gt 0)
            {
                $dps = [Math]::Round($crawl.Successes / $seconds, 2)
            }
            Write-Host ("CrawlId: " + $crawl.CrawlId + ", Status: " + $crawl.Status + ", Successes: " + $crawl.Successes + ", Warnings: " + $crawl.Warnings + ", Errors: " + $crawl.Errors + " (" + $dps + " DPS)")
        }
        Start-Sleep -Seconds 5
    }
}

$ssa = Get-SPEnterpriseSearchServiceApplication
if ($ssa)
{
    if ($Name)
    {
        $contentSource = Get-SPEnterpriseSearchCrawlContentSource -SearchApplication $ssa | Where-Object { $_.Name -eq $Name }
        if ($contentSource)
        {
            if ($StartFullCrawl)
            {
                Write-Host "Starting full crawl"
                Write-Host "Content source $Name"
                $contentSource.StartFullCrawl()
                # Give the crawl some time to start before we monitor it
                Start-Sleep -Seconds 10
                monitorCrawlStatus $ssa
            }
            else
            {
                # No action requested: just show the content source settings
                $contentSource
            }
        }
        else
        {
            Write-Error "Can't find content source with name $Name"
        }
    }
    else
    {
        Get-SPEnterpriseSearchCrawlContentSource -SearchApplication $ssa
        Write-Host ""
        Write-Host ("Usage: " + $MyInvocation.MyCommand.Name + " [-StartFullCrawl] [-Name <contentSource>] [-MonitorActiveCrawls]")
        if ($MonitorActiveCrawls)
        {
            monitorCrawlStatus $ssa
        }
    }
}
else
{
    Write-Error "Can't find SSA"
}
```
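For reference, here is how the script might be invoked from the SharePoint 2013 Management Shell, based on the parameters it declares. The content source name "Local SharePoint sites" is only an example (it is the default content source in a new Search service application); substitute your own:

```powershell
# No parameters: list all content sources and print the usage line
.\content2.ps1

# -Name only: show the settings of that content source
.\content2.ps1 -Name "Local SharePoint sites"

# -Name with -StartFullCrawl: start a full crawl, then print live statistics
# every 5 seconds until no active crawl remains
.\content2.ps1 -Name "Local SharePoint sites" -StartFullCrawl
```

Pressing Ctrl+C ends the monitoring loop but leaves the crawl running in SharePoint. To actually abort a crawl that is producing errors, call `StopCrawl()` on the content source object.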

Comments

Anonymous

June 24, 2013

Hi, Excellent post!!! Could you please elaborate on how we can modify the scripts to work for monitoring the continuous crawl? Thanks, Rucha

Anonymous

June 15, 2014

love your work ... :) ... thanks