Some things to consider for your Spark on HDInsight workload

When it comes time to provision a Spark cluster on HDInsight, we all want our workloads to run fast. The Spark community has made strong claims about better performance compared to MapReduce jobs. In this post I want to discuss two topics to consider when deploying your Spark application on an HDInsight cluster: the Azure VM types and the data formats.

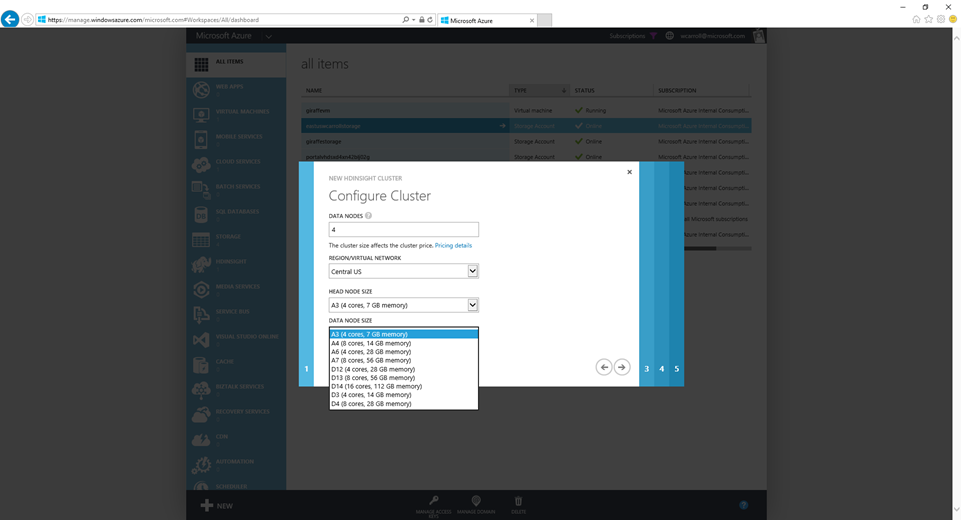

Azure VM Types

One of the first decisions you will make when provisioning a Spark cluster is which Azure VM types to choose. This page describes the available sizes and specifications for VMs: https://azure.microsoft.com/en-us/documentation/articles/cloud-services-sizes-specs/. The VM types are also priced differently, so review the pricing information as well.

Recent research from the University of California at Berkeley indicates that CPU and stragglers are bigger bottlenecks than disk I/O or network: https://www.eecs.berkeley.edu/~keo/publications/nsdi15-final147.pdf. Internal testing at Microsoft also shows the importance of CPU: Spark speed improves as core count increases. Spark jobs are broken into stages, and stages are broken into tasks. Each task runs in its own thread within the executors on the worker nodes, so more cores mean more tasks can execute in parallel.
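To make the core-count argument concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a simplified model in which every task takes the same time and each core runs one task at a time; it ignores stragglers, skew, and scheduling overhead. The function names and the example numbers are made up for illustration and are not part of Spark.

```python
import math


def task_slots(executors, cores_per_executor):
    """Number of tasks that can run at the same time (one task per core)."""
    return executors * cores_per_executor


def task_waves(num_tasks, executors, cores_per_executor):
    """How many rounds ("waves") of tasks a stage needs under this simple model."""
    return math.ceil(num_tasks / task_slots(executors, cores_per_executor))


# Hypothetical stage with 200 tasks on 4 executors.
waves_4_cores = task_waves(200, executors=4, cores_per_executor=4)  # 16 slots
waves_8_cores = task_waves(200, executors=4, cores_per_executor=8)  # 32 slots

print("waves with 4 cores per executor:", waves_4_cores)
print("waves with 8 cores per executor:", waves_8_cores)
```

In this toy model, doubling the cores per executor roughly halves the number of waves a stage needs. Real stages will not scale this cleanly, which is why testing with your own workload matters.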

D-series VMs provide faster processors, a higher memory-to-core ratio, and a solid-state drive (SSD) for the temporary disk. Currently, Spark shuffle operations write intermediate data to the local disk on the VMs, not to Windows Azure Blob Storage, so the faster SSDs can help improve shuffle performance.

Spark's executors can cache data, and this cached data is compressed. Some tests have shown a 4.5x compression ratio, and even better if the files are in the Parquet format. The compressed data is distributed across the worker nodes. Your Spark cluster might not need as much memory as you think if your code caches tables and uses a columnar storage format like ORC or Parquet. You can review the Storage tab of the Spark UI to see information on your cached RDDs. You might get better performance from increasing your core count than from increasing the memory on the worker nodes.
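As a rough illustration of that memory argument, the sketch below estimates how much cache space a dataset might need per worker node. It uses the 4.5x ratio mentioned above as a default and assumes the cached data is spread evenly across the nodes. The function names and the 90 GB example are hypothetical, and your actual ratio will depend on your data, so treat the Storage tab of the Spark UI as the real source of truth.

```python
def cached_size_gb(raw_gb, compression_ratio=4.5):
    """Estimated in-memory size of a cached dataset, given its raw size."""
    return raw_gb / compression_ratio


def per_node_cache_gb(raw_gb, worker_nodes, compression_ratio=4.5):
    """Estimated cache footprint per worker node, assuming an even spread."""
    return cached_size_gb(raw_gb, compression_ratio) / worker_nodes


# Hypothetical example: 90 GB of raw data cached on a 4-node cluster.
total_gb = cached_size_gb(90)
per_node_gb = per_node_cache_gb(90, worker_nodes=4)

print("estimated total cached size (GB):", total_gb)
print("estimated cached size per node (GB):", per_node_gb)
```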

Data Formats

The most common data formats for Spark are text (JSON, CSV, TSV), ORC, and Parquet. ORC and Parquet are columnar formats and compress very well.

When choosing a file format, Parquet has the best overall performance compared to text or ORC. Tests have shown a 3x improvement on average over the other file formats. However, once data is cached in Spark, it is converted to Spark's own in-memory columnar format, and from then on all of the source formats perform the same. This is why caching and partitioning are important to Spark applications.

If you have not worked with the Parquet file format before, it is worth a look for your project. Spark has parquetFile() and saveAsParquetFile() functions (in Spark 1.4 and later these are superseded by read.parquet() and write.parquet()). Parquet is a self-describing file format, meaning the schema is stored with the data. More details can be found at https://parquet.apache.org/documentation/latest/ and at https://spark.apache.org/
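Here is a minimal PySpark sketch of writing a DataFrame to Parquet, reading it back, and caching it. It assumes Spark 2.x or later, where a SparkSession is available, and uses the DataFrameReader and DataFrameWriter API rather than the older parquetFile() and saveAsParquetFile() calls. The function name, column names, and path are made up for illustration.

```python
def parquet_round_trip(spark, rows, columns, path):
    """Write rows to Parquet at `path`, read them back, and mark them cached.

    `spark` is expected to be a pyspark.sql.SparkSession (an assumption of
    this sketch); `rows` is a list of tuples and `columns` a list of names.
    """
    df = spark.createDataFrame(rows, columns)

    # Parquet stores the schema with the data, so no schema is needed on read.
    df.write.mode("overwrite").parquet(path)

    loaded = spark.read.parquet(path)

    # cache() is lazy: the data is materialized in Spark's in-memory
    # columnar format the first time an action runs on it.
    return loaded.cache()
```

On a cluster you might call it like this, with a path that suits your storage:

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("parquet-example").getOrCreate()
events = parquet_round_trip(
    spark, [("a", 1), ("b", 2)], ["name", "value"], "/tmp/example_parquet"
)
events.show()
```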

Conclusion

Spark has made a lot of promises about performance improvements for big data. However, every workload is different, and there are a lot of variables to consider to get optimal performance from big data projects. Testing with your own code and your own data is your best friend. When you test, don't overlook the Azure VM types and the data file formats. They just might deliver big performance improvements for your project.

Bill