HDInsight: - backup and restore hive table

Introduction

My name is Sudhir Rawat and I work on the Microsoft HDInsight support team. In this blog I am going to explain the options for backing up and restoring a Hive table on HDInsight. The general recommendation is to store hive metadata on SQL Azure during provisioning the cluster. Sometimes, we may have many Hive tables in an HDInsight cluster and over time may need to increase the number of nodes on the cluster for providing more computation power. Currently, to change the number of compute nodes in an HDInsight cluster, we have to provision a fresh cluster. So the question is how to move metastore and what are the options available. There are various options to achieve a reusable

Saving metadata using “Enter the Hive/Oozie Metastore”

As mentioned earlier this is the recommended approach. In this scenario you must specify an existing Azure SQL Database that exists in the same data center as the HDInsight cluster. The provisioning process will automatically create the necessary Hive tables from scratch in the database.

This option will save the Hive metadata on SQL Azure and will save good amount of time.

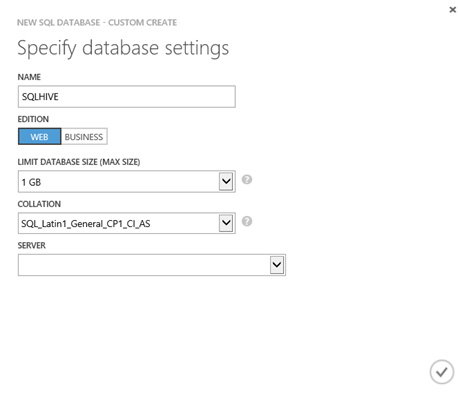

Below is the screenshot of the option which will be available during provisioning cluster in the Azure Portal.

Go to the Azure portal and click “SQL DATABASES” and create a new one. Make sure the creation of Azure SQL Database must be on the same region as where we provision the cluster.

Now that we have an SQL Database ready, go ahead to provision the HDInsight cluster. When choosing the configuration options, at second step select the checkmark “Enter the Hive/Oozie Metastore”. In the “METASTORE DATABASE” field dropdown, choose the database which we created earlier.

Once the cluster is provisioned successfully, upload your data files to Windows Azure blob storage. One of the ways to do it is through PowerShell which is described here.

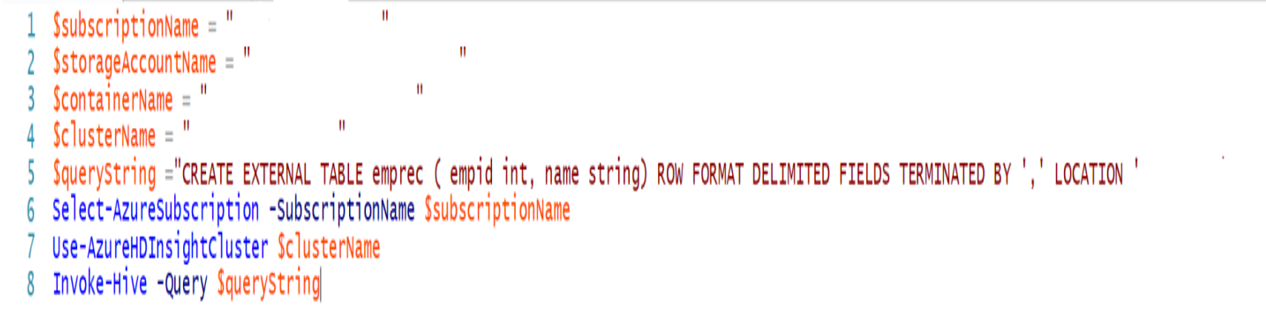

The Next step is to create a Hive table manually. Below is the PowerShell script for creating the Hive table.

Now, let’s query the table to verify it exists and the command works as expected.

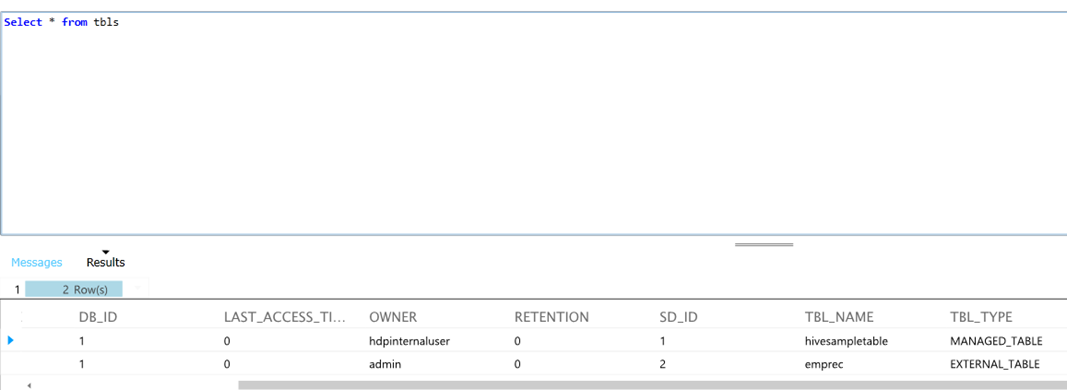

If I look onto the SQL Azure Database and query “TBLS” table, it shows there is one external table created.

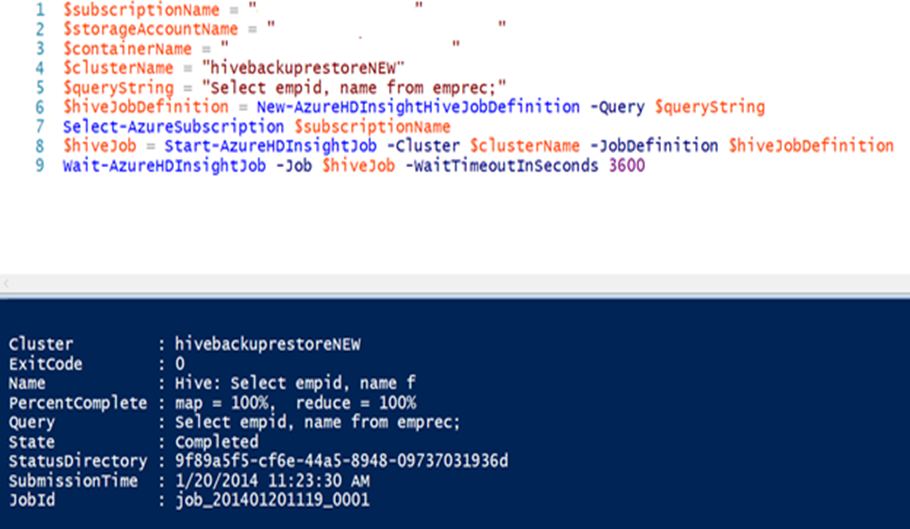

Next, we will delete the HDInsight cluster and provision a new one with more nodes. During this cluster creation, make sure to select existing storage and “Enter the Hive/Oozie Metastore”. Once the new cluster is ready, query the table again and you will be able to retrieve results. Notice below the cluster name is different.

Hive Import/Export

The export/import feature in Apache Hive is introduced in version 0.8.0. The Hive export command allows us to take backup of table or partition data along with metadata. The Hive import command allows us to restore the metadata and data in new cluster. More information about this addition can be found here.

Hive Import/Export before HDI 3.0 Cluster

If we are in stage of provision cluster, we can add the hive configuration via the PowerShell script. To do that, please look at the PowerShell script here. The script will create Azure BLOB storage and cluster along with the changes in hive configuration. These changes are not required in case provisioning HDI 3.0 cluster.

Let us look at a slightly different scenario. This is the case of an operational cluster that already exists and was originally created without specifying an explicit Hive/Oozie metastore (that is, unchecking the checkmark specifying that Hive/Oozie Metastore enabled). If there is a need to recreate this cluster with more nodes, then Hive export/import command can be configured and used.

Please note that this is not a recommended and supported approach. The reason is, that when the HDInsight cluster gets reimaged, then such configuration changes will not be retained. This scenario is described in more detail in our other blog post here.

Now follow below step to get export/import working on existing HDInsight cluster.

1. Do remote access (RDP) to headnode and add following property in hive-site.xml under “C:\apps\dist\hive-0.11.0.1.3.2.0-05\conf\”.

<property>

<name>hive.exim.uri.scheme.whitelist</name>

<value>wasb,hdfs,pfile</value>

</property>

2. Restart hivemetastore service. To do that you need remote (RDP) to cluster, open “Hadoop Command Line” and run below command.

It will stop all services related to hive. Below is the screenshot of it

To start all the services related to hive run below command.

It will start all the services related to hive. Below is the screenshot of it.

Once done, run export command in Hive CLI “export table table_name to ‘wasb://<Container_Name>@<Storage_Name>.blob.core.windows.net/<Folder_Name>’;”

Here is the outcome when I did export on external table.

Once the Hive export is done, I see several files in the blob storage. You will notice two files: the first one for metadata (_metadata is the file name), and the second is the data file itself. I used a tool Azure Blob Manager for navigation because I like the functionality of searching blobs. However, you can use whatever tool you are comfortable with.

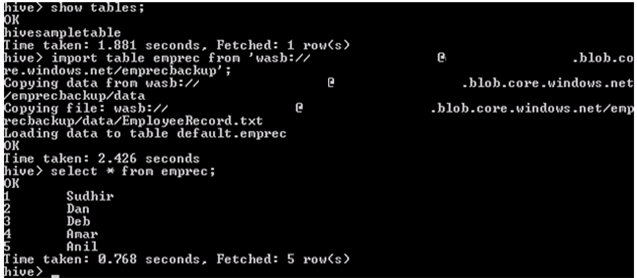

Now let’s say, I create a new cluster and add it with existing storage (where I export the metadata and data). Below is the screenshot of tables in newly created cluster

Below is the screenshot when I run the Hive import command followed by select command to check all the records.

One thing you will notice is there are three copies of the data on blob storage –

1. The original location

2. Location to which data is exported

3. Location used for import.

You may want to delete other data files to reduce the space on blob storage.

Hive Import/Export in/after HDI 3.0 Cluster

The configuration changes are not required in case of HDI 3.0 cluster. We can directly run the export/import command directly from PowerShell. Below are the commands for the reference.

export table TABLE_NAME to 'wasb://CONTAINER_NAME@STORAGE_NAME.blob.core.windows.net/DIRECTORY_NAME';

import table TABLE_NAME from 'wasb://CONTAINER_NAME@STORAGE_NAME.blob.core.windows.net/DIRECTORY_NAME';

Thanks Shuaishuai Nei for his input and thanks Dharshana, Jason and Dan for reviewing.

Thanks Much and Happy Learning

Sudhir Rawat

Comments

- Anonymous

February 07, 2015

hi,i use remote hbase tables in hive.possible to migrate hbase tables from old cluster to the new one?thanks