AppFabric Cache - Peeking into client & server WCF communication

The goal of this blog is to dig deeper into cache client and server communication, explain a set of configuration knobs, and conclude with some best-practice recommendations.

Introduction

If you are reading this blog, chances are that you are reasonably deep into AppFabric Cache, previously known as Velocity. If not, here is a quick 200 level summary with some useful links:

AppFabric Cache is Microsoft's distributed caching solution. It is part of Windows Server AppFabric and is available as an out-of-band release for Windows Server. You can get more information from https://msdn.microsoft.com/en-us/windowsserver/ee695849.aspx

Here are a set of scenarios for using a distributed cache:

- Improving performance of your application

- Reducing load on and scaling out the database tier

- ASP.NET session store repository

- Centralized in-memory repository for data shared by a set of services

Data suitable for caching can be broadly classified as:

- Reference data: read-only or infrequently changing data shared by all users, e.g. catalog data

- Activity data: read and write data belonging to a single user, e.g. a shopping cart

- Resource data: read and write data shared by all users, e.g. airline tickets

Background

AppFabric Cache has three installation modes: cache client, caching services, and admin. When you install the cache service on a machine, a configuration phase sets up a configuration store (XML file based, SQL Server, or a custom provider). A set of cache servers configured to point to the same configuration store constitutes a cache cluster. AppFabric transparently manages the entire cluster: load balancing of data, the data partitioning map, replication of data, availability of cache servers, and so on. Any .NET-based cache client can reference the installed client DLLs and start using the cluster through configuration, code, or both. The cache client needs access to at least one cache server, from which it gets the list of all the servers in the cluster. Objects can be stored in and retrieved from the cache cluster using simple APIs. The term 'client' may be misleading in this context, because it describes the cache usage perspective only. The client can actually be a .NET service that hosts business logic or an ASP.NET web application. The usage is analogous to calling the same service a 'SQL client'.

Cache Client - Server communication

Cache servers and cache clients communicate using the WCF channel model and the net.tcp binding. By default, cache servers use TCP port 22233 to communicate with cache clients. Some additional ports support other cache cluster functionality. In this context, several configuration knobs are useful to understand:

- ChannelOpenTimeout: how long a cache client waits to establish a network connection with the cache server

- RequestTimeout: how long a cache client waits for a cache operation to complete and for the response to be processed

- MaxConnectionsToServer: the number of channels that each DataCacheFactory opens from the cache client to each cache server

Test scenario

We will have a simple .NET application that will instantiate the DataCacheFactory object, get a reference to the default cache, store a <key, value> and then retrieve the value. The application is running on CACHECLIENT-MACHINE and the cache server is running on CACHESERVER-MACHINE. In this case, we have a 1 node cache cluster.

Tools setup

We will use tracelog to capture the ETW events on CACHECLIENT-MACHINE as part of this communication. You can download the tracelog utility which is part of the Windows Software Development Kit.

Note: The Tracelog.exe file is not included with Windows SDK for Windows Server 2008 and the Microsoft .NET Framework 3.5. Therefore, install the Windows Vista SDK on Windows Server 2008.

Test Execution and Capturing Results

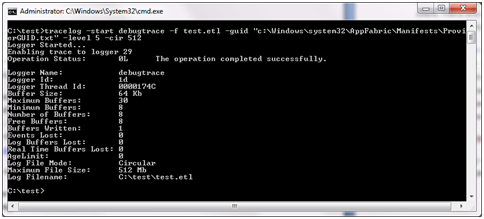

Now, let us run tracelog to start a session named 'debugtrace'. The output will be stored in test.etl.

C:\test>tracelog -start debugtrace -f test.etl -guid "c:\Windows\system32\AppFabric\Manifests\ProviderGUID.txt" -level 5 -cir 512

Note: If you have issues in accessing the ProviderGUID.txt, copy it to another folder and retry the command.

Then let us execute the following piece of code:

    using System;
    using System.Collections.Generic;
    using Microsoft.ApplicationServer.Caching;

    class Program
    {
        public static DataCacheFactory myCacheFactory;

        static void Main()
        {
            DataCache cache;

            // Seed the client with the single cache server in this test cluster
            List<DataCacheServerEndpoint> servers = new List<DataCacheServerEndpoint>(1);
            string srvName = "CACHESERVER-MACHINE";
            servers.Add(new DataCacheServerEndpoint(srvName, 22233));

            DataCacheSecurity security = new DataCacheSecurity(DataCacheSecurityMode.None, DataCacheProtectionLevel.None);
            DataCacheClientLogManager.ChangeLogLevel(System.Diagnostics.TraceLevel.Off);

            // Create cache configuration
            DataCacheFactoryConfiguration configuration = new DataCacheFactoryConfiguration()
            {
                Servers = servers,
                LocalCacheProperties = new DataCacheLocalCacheProperties(),
                SecurityProperties = security,
                MaxConnectionsToServer = 1
            };

            // Creating the factory opens the channel to the cache server
            myCacheFactory = new DataCacheFactory(configuration);
            Console.WriteLine("Connected to {0}", srvName);
            Console.ReadLine();

            // Store one object in the default cache and read it back
            cache = myCacheFactory.GetDefaultCache();
            Console.WriteLine("Simple PUT GET starts");
            cache.Put("test", new object());
            object foo = cache.Get("test");
            Console.WriteLine("Simple PUT GET ends");
            Console.ReadLine();
        }
    }

Here is the output from the application:

Now let us stop the trace collection.

C:\test>tracelog -stop debugtrace

NOTE: ETW tracing data is not localized in the V1 release. This analysis is based on the format and event data relevant to the V1 release.

Analyzing the output

Now, let us open test.etl from Event Viewer and analyze some of the entries. Let us split the entries into the following areas:

DataCacheFactory creation

- DRM instance created.

- Creating channel for [net.tcp://CACHESERVER-MACHINE:22233]

- channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 36610825.

- Channel open succeeded - [Endpoint=[net.tcp://CACHESERVER-MACHINE:22233]; Lockstatus=12; ChannelState=Opened; Status=ChannelOpening]

Accessing the cache

- GetCache: Creating the Named Cache 'default'

- GenericCache: Constructing Cache: default

- Initialize: Completed

- '1:-1' GET_NAMED_CACHE_CONFIGURATION;Routed;default;;;;Version = 0:0 - Request saved for correlation.

- '1:-1' GET_NAMED_CACHE_CONFIGURATION;Routed;default;;;;Version = 0:0 - Sending request to [net.tcp://CACHESERVER-MACHINE:22233].

- Message sent to [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 36610825.

- '1:-1' GET_NAMED_CACHE_CONFIGURATION;Routed;default;;;;Version = 0:0 - Send result - Status=Success.

- '1:-1';Ack;UNINITIALIZED_ERROR - Response got from remote DOM.

PUT Operation

- SendReceive: Begin: '2:-1' PUT;Routable;default;;;test;Version = 0:0

- SendMsgAndWait: Serializing Object: msgId = 2

- '2:-1' PUT;Routed;default;Default_Region_0760;1043303394;test;Version = 0:0 - Starting to process.

- '2:-1' PUT;Routed;default;Default_Region_0760;1043303394;test;Version = 0:0 - Destination - [net.tcp://CACHESERVER-MACHINE:22233].

- Message sent to [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 36610825.

- '2:-1';Ack;UNINITIALIZED_ERROR - Response got from remote DOM.

- SendReceive: Request's 2 response's status is Ack : ErrorCode=UNINITIALIZED_ERROR

GET Operation

- SendReceive: Begin: '3:-1' GET;Routable;default;;;test;Version = 0:0

- SendMsgAndWait: Begin: msgId = 3

- '3:-1' GET;Routed;default;Default_Region_0760;1043303394;test;Version = 0:0 - Starting to process.

- '3:-1' GET;Routed;default;Default_Region_0760;1043303394;test;Version = 0:0 - Destination - [net.tcp://CACHESERVER-MACHINE:22233].

- '3:-1' GET;Routed;default;Default_Region_0760;1043303394;test;Version = 0:0 - Sending request to [net.tcp://CACHESERVER-MACHINE:22233].

- SendMsgAndWait: Request is Pending, msgId = 3

- '3:-1';Ack;UNINITIALIZED_ERROR - Response got from remote DOM.

- '3:-1' GET;Routed;default;Default_Region_0760;1043303394;test;Version = 0:0 - Request completed on response - '3:-1';Ack;UNINITIALIZED_ERROR.

- MyCallback: Begin Processing Response

- '3:-1';Ack;UNINITIALIZED_ERROR - MyCallback: End Processing Response

- SendMsgAndWait: DeSerializing Object, msgId = 3

Summary

- In a PUT operation, the object is serialized on the cache client (the second entry under PUT Operation). In a GET operation, it is deserialized on the cache client (the eleventh entry under GET Operation).

- Responses are processed asynchronously using I/O threads.

- Instantiating a DataCacheFactory object applies a set of policy settings that control local cache, ChannelOpenTimeout, RequestTimeout, security, and so on, as seen in the code snippet above. The instantiation creates a set of internal data structures (DRM, ThickClient).

- One DataCacheFactory was used in this sample. The event trace confirms this: it shows only one 'DistributedCache.ClientChannel.Client1' instance. Here is an extract from our trace collection.

| Source | Param |
| --- | --- |
| DistributedCache.ClientChannel.Client1 | Client channel opened. |

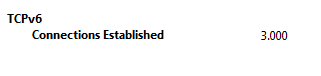

- maxConnectionsToServer was set to 1. The event trace confirms this: only one ChannelID is used (ChannelID = 36610825). This can also be validated with the perfmon counter 'TCPv6:Connections Established', which shows the connections established from this machine.

| Source | Param |
| --- | --- |
| DistributedCache.ClientChannel.Client1 | Message sent to [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 36610825 |

- After changing maxConnectionsToServer to 3 in the code snippet above and collecting the trace again, the trace shows the additional ChannelIDs, all belonging to the same DataCacheFactory instance.

| Source | Param |
| --- | --- |
| DistributedCache.ClientChannel.Client1 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 36610825 |
| DistributedCache.ClientChannel.Client1 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 62130720 |
| DistributedCache.ClientChannel.Client1 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 4593845 |

- Next, let us increase the number of DataCacheFactories to 2, keep maxConnectionsToServer=3, and collect the trace once again. Now we can see two clients, one per DataCacheFactory instance (each with its internal DRM and ThickClient objects), and each of them has 3 channels, so 6 in total.

| Source | Param |
| --- | --- |
| DistributedCache.ClientChannel.Client2 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 61325552 |
| DistributedCache.ClientChannel.Client2 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 29028787. |
| DistributedCache.ClientChannel.Client2 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 36610825. |
| DistributedCache.ClientChannel.Client1 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 35885827. |
| DistributedCache.ClientChannel.Client1 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 37710717. |
| DistributedCache.ClientChannel.Client1 | channel for [net.tcp://CACHESERVER-MACHINE:22233] ChannelID = 7995840. |

This can also be validated in perfmon by looking at the number of established connections, which increases by 6. Here are the before and after snapshots.

Before

After

Best Practice Recommendation

Although a single cache server in the client config would suffice, it is recommended to list as many cache server hostnames as possible in the config file. When a cache client connects to one of the cache servers, it gets the routing table, which has the partitioning logic needed to access the other cache servers. Listing more cache servers makes the initial connection more resilient. If the server list is built in code, a host lookup service will work better.
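As a rough illustration of building the seed list in code, here is a minimal sketch that turns a comma-separated host string into host and port pairs. The `SeedHosts` class and its `Parse` method are hypothetical names, not part of AppFabric. The sketch assumes plain hostnames (no IPv6 literals) and falls back to the default cache port 22233 when no port is given.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not an AppFabric API: parses a seed host list.
public static class SeedHosts
{
    public const int DefaultCachePort = 22233;

    // Parses "host1,host2:22240" into host/port pairs,
    // skipping empty entries and case-insensitive duplicates.
    public static List<KeyValuePair<string, int>> Parse(string hostList)
    {
        var result = new List<KeyValuePair<string, int>>();
        var seen = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
        if (string.IsNullOrEmpty(hostList)) return result;

        foreach (string raw in hostList.Split(','))
        {
            string entry = raw.Trim();
            if (entry.Length == 0) continue;

            string host = entry;
            int port = DefaultCachePort;
            int colon = entry.LastIndexOf(':');
            if (colon > 0)
            {
                host = entry.Substring(0, colon);
                if (!int.TryParse(entry.Substring(colon + 1), out port))
                    throw new FormatException("Invalid port in '" + entry + "'");
            }

            // Keep only the first occurrence of each host:port pair
            if (seen.Add(host + ":" + port))
                result.Add(new KeyValuePair<string, int>(host, port));
        }
        return result;
    }
}
```

Each resulting pair could then be passed to `new DataCacheServerEndpoint(pair.Key, pair.Value)` and added to the `servers` list used in the test code above. Where the host string comes from (a lookup service, an environment setting) is left open here.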

Instantiating a DataCacheFactory object creates several internal data structures (DRM, ThickClient), so it is recommended to minimize the number of DataCacheFactories and to create them in advance (on a separate thread). A singleton pattern should work for most scenarios; do not create one DataCacheFactory object per cache operation. When you need different policy settings, for example when local cache is required only for a subset of named caches, separate DataCacheFactory objects are appropriate.
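One possible way to get a single, pre-created factory is a small wrapper around `Lazy<T>` (available from .NET Framework 4). The sketch below is generic so that it compiles without the AppFabric assemblies; `SharedInstance<T>` and `WarmUp` are hypothetical names chosen for illustration.

```csharp
using System;
using System.Threading;

// Hypothetical holder, not an AppFabric API: creates one shared instance on demand.
public sealed class SharedInstance<T> where T : class
{
    private readonly Lazy<T> _lazy;

    public SharedInstance(Func<T> create)
    {
        if (create == null) throw new ArgumentNullException("create");
        // ExecutionAndPublication: the delegate runs only once, even when
        // several threads ask for the value at the same time.
        _lazy = new Lazy<T>(create, LazyThreadSafetyMode.ExecutionAndPublication);
    }

    public T Value
    {
        get { return _lazy.Value; }
    }

    // Creates the instance on a thread-pool thread so that the first
    // real caller does not pay the construction cost.
    public void WarmUp()
    {
        ThreadPool.QueueUserWorkItem(delegate
        {
            try { T ignored = _lazy.Value; }
            catch (Exception) { /* Lazy<T> caches the failure; Value rethrows it later */ }
        });
    }
}
```

In an application this might be held in a static field such as `new SharedInstance<DataCacheFactory>(() => new DataCacheFactory(configuration))`, with `WarmUp()` called at startup and `Value.GetDefaultCache()` used on each request.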

The default setting for ChannelOpenTimeout is 15 seconds. You can set it to a much lower value if you want the application to fail fast while opening the channel.

The default setting for RequestTimeout is 10 seconds. Do not set it to 0; if you do, your application will see a timeout on every cache call. Changes to the default value need to take into account the workload and physical resources (client machine configuration, cache server configuration, network bandwidth, object size, ratio of GETs to PUTs, number of concurrent operations, usage of regions, etc.).

Setting maxConnectionsToServer=1 (the default) will work in most situations. When a single shared DataCacheFactory is used and many threads are posting on that connection, there may be a need to increase it. So for a high-throughput scenario, increasing this value beyond 1 is recommended. Also be aware of the multiplication involved: with 5 cache servers in the cluster, 3 DataCacheFactories in the application, and maxConnectionsToServer=3, each client machine would open 9 outbound TCP connections to each cache server, 45 in total across all cache servers.
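The arithmetic above can be written down as a small estimate. This is a sketch only: `ConnectionEstimate` is a hypothetical helper, and it assumes every DataCacheFactory opens its full maxConnectionsToServer allotment to every cache server, as in the example.

```csharp
using System;

// Hypothetical helper, not an AppFabric API: estimates outbound connections per client machine.
public static class ConnectionEstimate
{
    // Connections from one client machine to a single cache server
    public static int PerServer(int factories, int maxConnectionsToServer)
    {
        if (factories < 0 || maxConnectionsToServer < 0)
            throw new ArgumentOutOfRangeException();
        return checked(factories * maxConnectionsToServer);
    }

    // Connections from one client machine to the whole cluster
    public static int Total(int cacheServers, int factories, int maxConnectionsToServer)
    {
        if (cacheServers < 0)
            throw new ArgumentOutOfRangeException();
        return checked(cacheServers * PerServer(factories, maxConnectionsToServer));
    }
}
```

For the example above, `PerServer(3, 3)` gives 9 and `Total(5, 3, 3)` gives 45. For the traced test with one server, two factories, and maxConnectionsToServer=3, `Total(1, 2, 3)` gives the 6 channels seen in the trace.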

Note: There will be a set of subsequent blogs for explaining capacity planning and debugging timeouts which will provide more details on the usage of these configuration knobs.
