Introducing Table SAS (Shared Access Signature), Queue SAS and update to Blob SAS

For an overview of Shared Access Signatures, please refer to the following documentation.

We’re excited to announce that, as part of version 2012-02-12, we have introduced Table Shared Access Signatures (SAS), Queue SAS and updates to Blob SAS. In this blog, we will highlight usage scenarios for these new features along with sample code using the Windows Azure Storage Client Library v1.7.1, which is available on GitHub.

Shared Access Signatures allow granular access to tables, queues, blob containers, and blobs. A SAS token can be configured to provide specific access rights, such as read, write, update, or delete, to a specific table, key range within a table, queue, blob, or blob container, either for a specified time period or without any limit. The SAS token appears as part of the resource’s URI as a series of query parameters. Prior to version 2012-02-12, Shared Access Signatures could only grant access to blobs and blob containers.

SAS Update to Blob in version 2012-02-12

In version 2012-02-12, Blob SAS has been extended to allow unbounded access time to a blob resource, instead of the previous one-hour limit on the validity of non-revocable SAS tokens. To make use of this feature, the sv (signed version) query parameter must be set to "2012-02-12", which allows the difference between se (signed expiry, which is mandatory) and st (signed start, which is optional) to be larger than one hour. For more details, refer to the MSDN documentation.
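The duration rule above can be expressed as a small check. This is a hypothetical helper, not part of the Storage Client Library; it assumes that, for pre-2012-02-12 tokens without st, the one-hour window is measured from the time the request is processed, and that revocable tokens (those using a stored access policy) were never subject to the limit.

```csharp
using System;

// Hypothetical helper (not a Storage Client Library API) mirroring the rule above:
// without sv=2012-02-12, a non-revocable SAS may be valid for at most one hour.
public static class BlobSasDurationRule
{
    public const string UnboundedVersion = "2012-02-12";

    // signedVersion: the sv value, or null when sv is absent.
    // start: the st value, or null when omitted (assumed to mean "request time").
    // usesStoredPolicy: true when the token carries a signed identifier (si).
    public static bool IsDurationAllowed(
        string signedVersion, DateTime? start, DateTime expiry,
        bool usesStoredPolicy, DateTime requestTimeUtc)
    {
        // Version strings are ISO dates, so ordinal comparison orders them correctly.
        bool unbounded = signedVersion != null &&
            string.CompareOrdinal(signedVersion, UnboundedVersion) >= 0;

        if (unbounded || usesStoredPolicy)
        {
            return true;
        }

        DateTime effectiveStart = start ?? requestTimeUtc;
        return expiry - effectiveStart <= TimeSpan.FromHours(1);
    }
}
```

For example, a 30-day token passes only when sv is "2012-02-12" or the token is backed by a stored access policy.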

Best Practices When Using SAS

The following are best practices to follow when using Shared Access Signatures.

- Always use HTTPS when making SAS requests. SAS tokens are sent over the wire as part of a URL, and can potentially be leaked if HTTP is used. A leaked SAS token grants access until it either expires or is revoked.

- Use server stored access policies for revocable SAS. Each container, table, and queue can now have up to five server stored access policies at once. Revoking one of these policies invalidates all SAS tokens issued using that policy. Consider grouping SAS tokens such that logically related tokens share the same server stored access policy. Avoid inadvertently reusing revoked access policy identifiers by including a unique string in them, such as the date and time the policy was created.

- Either omit the start time or allow at least five minutes for clock skew. Due to clock skew, a SAS token might start or expire earlier or later than expected. If you do not specify a start time, the start time is considered to be now, and you do not have to worry about clock skew for the start time.

- Limit the lifetime of SAS tokens and treat them as leases. Clients that need more time can request an updated SAS token.

- Be aware of the version: starting with version 2012-02-12, SAS tokens contain a new version parameter (sv). sv defines how the various parameters in the SAS token must be interpreted and which version of the REST API to use to execute the operation. This implies that services responsible for providing SAS tokens to client applications must issue tokens for a version of the REST protocol that those clients understand. Make sure clients understand the REST protocol version specified by sv when they are given a SAS to use.
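Three of these practices (HTTPS only, no start time or a back-dated one, short lease-like lifetimes) can be captured in a few lines. The class and method names below are hypothetical illustrations, not library APIs; the five-minute allowance comes from the guidance above.

```csharp
using System;

// Hypothetical helper illustrating the best practices above.
public static class SasTimeWindow
{
    // Five minutes of clock-skew allowance, per the guidance above.
    public static readonly TimeSpan ClockSkewAllowance = TimeSpan.FromMinutes(5);

    // Returns the start time to put in the policy, or null to omit it
    // (the token is then valid from the moment the service receives the request).
    public static DateTime? ChooseStart(DateTime utcNow, bool specifyStart)
    {
        return specifyStart ? utcNow - ClockSkewAllowance : (DateTime?)null;
    }

    // Treats the token as a lease: a short expiry that the client renews as needed.
    public static DateTime ChooseExpiry(DateTime utcNow, TimeSpan leaseDuration)
    {
        if (leaseDuration <= TimeSpan.Zero)
        {
            throw new ArgumentOutOfRangeException("leaseDuration");
        }
        return utcNow + leaseDuration;
    }

    // SAS tokens travel in the URL, so refuse to use them over plain HTTP.
    public static bool IsSafeEndpoint(Uri endpoint)
    {
        return endpoint.Scheme == Uri.UriSchemeHttps;
    }
}
```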

Table SAS

SAS for tables allows account owners to grant SAS token access by defining the following restrictions on the SAS policy:

1. Table granularity: users can grant access to an entire table (tn) or to a table range defined by a table (tn) along with a partition key range (startpk/endpk) and row key range (startrk/endrk).

To better understand the range to which access is granted, let us take an example data set:

| Row Number | PartitionKey | RowKey |
|---|---|---|
| 1 | PK001 | RK001 |
| 2 | PK001 | RK002 |
| 3 | PK001 | RK003 |
| … | … | … |
| 300 | PK001 | RK300 |
| 301 | PK002 | RK001 |
| 302 | PK002 | RK002 |
| 303 | PK002 | RK003 |
| … | … | … |
| 600 | PK002 | RK300 |
| 601 | PK003 | RK001 |
| 602 | PK003 | RK002 |
| 603 | PK003 | RK003 |
| … | … | … |
| 900 | PK003 | RK300 |

The permission is specified as a range of rows from (startpk, startrk) until (endpk, endrk).

Example 1: (startpk, startrk) = (,) (endpk, endrk) = (,)

Allowed Range = All rows in table

Example 2: (startpk, startrk) = (PK002,) (endpk, endrk) = (,)

Allowed Range = All rows starting from row # 301

Example 3: (startpk, startrk) = (PK002,) (endpk, endrk) = (PK002,)

Allowed Range = All rows starting from row # 301 and ending at row # 600

Example 4: (startpk, startrk) = (PK001, RK002) (endpk, endrk) = (PK003, RK003)

Allowed Range = All rows starting from row # 2 and ending at row # 603.

NOTE: In Example 4, the row (PK002, RK100) is accessible even though RK100 lies outside RK002 to RK003. The row key limits are hierarchical rather than absolute: they apply only within the start and end partitions, not as startrk <= rowkey <= endrk across the whole range.

2. Access permissions (sp): users can grant access rights to the specified table or table range, such as Query (r), Add (a), Update (u), Delete (d), or a combination of them.

3. Time range (st/se): users can limit the SAS token access time. Start time (st) is optional but expiry time (se) is mandatory, and no limits are enforced on these parameters. Therefore a SAS token may be valid for an arbitrarily long period.

4. Server stored access policy (si): users can either generate offline SAS tokens, where the policy permissions described above are part of the SAS token, or they can choose to store specific policy settings associated with a table. These policy settings are limited to the time range (start time and end time) and the access permissions. A stored access policy provides additional control over generated SAS tokens, because its settings can be changed at any time without reissuing the token. In addition, it makes revoking SAS access possible without changing the account’s key.
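The hierarchical range rule from the first item above is easiest to see as a comparison of (PartitionKey, RowKey) pairs. The following is a conceptual model only, not the service's implementation. It assumes keys compare as ordinal strings, that a missing startpk/endpk means "unbounded", and that a missing startrk/endrk means "the whole start/end partition", which is consistent with Examples 1 to 4.

```csharp
using System;

// Conceptual model of the Table SAS key-range check: PartitionKey is compared
// first, and RowKey only breaks ties within the start or end partition.
public static class TableSasRange
{
    // Lexicographic comparison of (pk, rk) pairs using ordinal string order.
    private static int ComparePairs(string pk1, string rk1, string pk2, string rk2)
    {
        int byPartition = string.CompareOrdinal(pk1, pk2);
        return byPartition != 0 ? byPartition : string.CompareOrdinal(rk1, rk2);
    }

    public static bool IsInRange(string pk, string rk,
        string startPk, string startRk, string endPk, string endRk)
    {
        // Lower bound: with no start row key, the whole start partition is included.
        if (!string.IsNullOrEmpty(startPk))
        {
            int cmp = string.IsNullOrEmpty(startRk)
                ? string.CompareOrdinal(pk, startPk)
                : ComparePairs(pk, rk, startPk, startRk);
            if (cmp < 0) return false;
        }

        // Upper bound: with no end row key, the whole end partition is included.
        if (!string.IsNullOrEmpty(endPk))
        {
            int cmp = string.IsNullOrEmpty(endRk)
                ? string.CompareOrdinal(pk, endPk)
                : ComparePairs(pk, rk, endPk, endRk);
            if (cmp > 0) return false;
        }

        return true;
    }
}
```

With Example 4's bounds, `IsInRange("PK002", "RK100", "PK001", "RK002", "PK003", "RK003")` is true, while (PK001, RK001) and (PK003, RK004) fall outside the range.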

For more information on the different policy settings for Table SAS and the REST interface, please refer to the SAS MSDN documentation.
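To show how the policy settings map onto the token's query parameters, here is a hypothetical, unsigned field builder using the parameter names mentioned above (sv, tn, sp, st, se, si). It deliberately omits the range parameters and the signature (sig), which the library's GetSharedAccessSignature computes for you. We assume the ISO 8601 UTC time format and emit permissions in the r, a, u, d order listed above; check the MSDN page for the exact requirements.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical permission flags mirroring Query (r), Add (a), Update (u), Delete (d).
[Flags]
public enum TablePermissionFlags { None = 0, Query = 1, Add = 2, Update = 4, Delete = 8 }

public static class TableSasFields
{
    // Builds the sp value in r, a, u, d order.
    public static string ToPermissionString(TablePermissionFlags p)
    {
        string s = "";
        if ((p & TablePermissionFlags.Query) != 0) s += "r";
        if ((p & TablePermissionFlags.Add) != 0) s += "a";
        if ((p & TablePermissionFlags.Update) != 0) s += "u";
        if ((p & TablePermissionFlags.Delete) != 0) s += "d";
        return s;
    }

    // Collects the (unsigned) SAS fields; omitted values are simply left out,
    // e.g. sp/st/se when they live in the stored policy referenced by si.
    public static IDictionary<string, string> BuildFields(
        string tableName, TablePermissionFlags permissions,
        DateTime? startUtc, DateTime? expiryUtc, string signedIdentifier)
    {
        var fields = new SortedDictionary<string, string>(StringComparer.Ordinal);
        fields["sv"] = "2012-02-12";
        fields["tn"] = tableName;
        if (permissions != TablePermissionFlags.None) fields["sp"] = ToPermissionString(permissions);
        if (startUtc.HasValue) fields["st"] = FormatTime(startUtc.Value);
        if (expiryUtc.HasValue) fields["se"] = FormatTime(expiryUtc.Value);
        if (signedIdentifier != null) fields["si"] = signedIdentifier;
        return fields;
    }

    // ISO 8601 UTC, e.g. 2012-06-01T12:30:00Z.
    private static string FormatTime(DateTime utc)
    {
        return utc.ToUniversalTime().ToString(
            "yyyy-MM-dd'T'HH:mm:ss'Z'", CultureInfo.InvariantCulture);
    }
}
```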

Although a non-revocable Table SAS can provide access to a resource for a long period, we highly recommend that you always limit its validity to the minimum amount of time required, in case the SAS token is leaked or the holder of the token is no longer trusted. In that case, the only way to revoke access is to rotate the account key that was used to generate the SAS, which would also revoke any other SAS tokens that were already issued and are currently in use. In cases where long-term access is needed, we recommend that you use a server stored access policy as described above.

Most Shared Access Signature usage falls into two different scenarios:

- A service granting access to clients, so those clients can access their parts of the storage account or access the storage account with restricted permissions. Example: a Windows Phone app for a service running on Windows Azure. A SAS token would be distributed to clients (the Windows Phone app) so it can have direct access to storage.

- A service owner who needs to keep the production storage account credentials confined within a limited set of machines or Windows Azure roles which act as a key management system. In this case, a SAS token will be issued on an as-needed basis to worker or web roles that require access to specific storage resources. This reduces the risk of the service's keys being compromised.

Along with these usage scenarios, SAS token generation usually follows one of the models below:

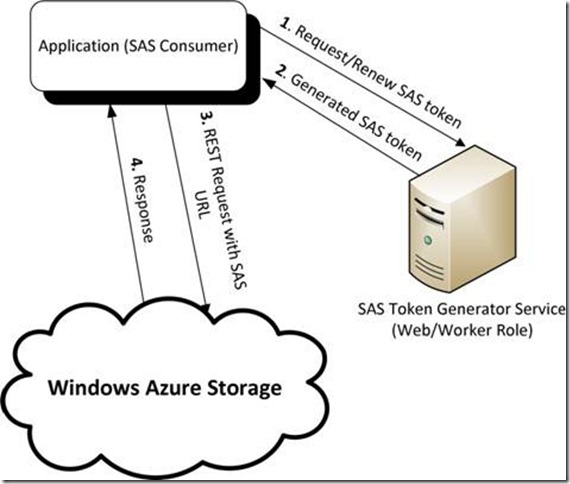

- A SAS Token Generator or producer service responsible for issuing SAS tokens to applications, referred to as SAS consumers. The SAS tokens generated are usually valid for a limited amount of time in order to control access. This model usually works best with the first scenario described earlier, where a phone app (SAS consumer) would request access to a certain resource by contacting a SAS generator service running in the cloud. Before the SAS token expires, the consumer would again contact the service for a renewed SAS. The service can refuse to produce any further tokens for certain applications or users, for example when a user’s subscription to the service has expired. Diagram 1 illustrates this model.

Diagram 1: SAS Consumer/Producer Request Flow

- The communication channel between the application (SAS consumer) and the SAS Token Generator could be service specific, where the service would authenticate the application/user (for example, using an OAuth authentication mechanism) before issuing or renewing the SAS token. We highly recommend that the communication channel be secure in order to avoid leaking SAS tokens. Note that steps 1 and 2 are only needed when the SAS token approaches its expiry time or the application requests access to a different resource. A SAS token can be used as long as it is valid, which means multiple requests could be issued (steps 3 and 4) before consulting the SAS Token Generator service again.

- A one-time generated SAS token tied to a signed identifier controlled as part of a stored access policy. This model works best in the second scenario described earlier, where the SAS token could either be part of a worker role configuration file, or be issued once, with the maximum access time, by a SAS token generator/producer service. If access needs to be revoked, or the permissions and/or duration changed, the account owner can use the Set Table ACL API to modify the stored policy associated with the issued SAS token.
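The difference between the two models comes down to where the expiry lives. The class below is a conceptual, in-memory model of that idea and sends no requests; it is not how the service is implemented. It tracks only each policy's expiry (not its permissions), and its Set and Revoke methods stand in for updating the policy list and calling Set Table ACL.

```csharp
using System;
using System.Collections.Generic;

// Conceptual model: a token with a signed identifier (si) is honored only while
// the matching stored policy exists and is unexpired; a token without si carries
// its own expiry and cannot be revoked short of rotating the account key.
public sealed class StoredPolicySet
{
    // Up to five stored access policies per container, table, or queue.
    public const int MaxPolicies = 5;

    private readonly Dictionary<string, DateTime> expiryById =
        new Dictionary<string, DateTime>(StringComparer.Ordinal);

    // Stands in for adding/changing a policy and calling Set Table ACL.
    public void Set(string signedIdentifier, DateTime expiryUtc)
    {
        if (!expiryById.ContainsKey(signedIdentifier) && expiryById.Count >= MaxPolicies)
        {
            throw new InvalidOperationException("At most five stored access policies are allowed.");
        }
        expiryById[signedIdentifier] = expiryUtc;
    }

    // Stands in for removing a policy and calling Set Table ACL.
    public bool Revoke(string signedIdentifier)
    {
        return expiryById.Remove(signedIdentifier);
    }

    public bool IsTokenValid(string signedIdentifier, DateTime? tokenExpiryUtc, DateTime utcNow)
    {
        if (signedIdentifier == null)
        {
            // Non-revocable token: only its embedded expiry matters.
            return tokenExpiryUtc.HasValue && utcNow <= tokenExpiryUtc.Value;
        }

        DateTime policyExpiry;
        return expiryById.TryGetValue(signedIdentifier, out policyExpiry) && utcNow <= policyExpiry;
    }
}
```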

Table SAS - Sample Scenario Code

In this section we will provide a usage scenario for Table SAS along with sample code using the Storage Client Library 1.7.1.

Consider an address book service implementation that needs to scale to a large number of users. The service allows its customers to store their address book in the cloud and access it anywhere using a wide range of clients, such as a phone app, a desktop app, or a website, which we will refer to as the client app. Once users subscribe to the service, they can add, edit, and query their address book entries. One way to build such a system is to run a service in Windows Azure Compute consisting of web and worker roles. The service would act as a middle tier between the client app and the Windows Azure storage system. After the service authenticates the client app, the client app would be able to access its own address book through a web interface defined by the service. The service would then handle all of the client requests by accessing a Windows Azure Table where the address book entries for each customer reside. Since the service is involved in processing every request issued by the client, it would need to scale out its number of Windows Azure Compute instances linearly with the growth of its customer base.

With Table SAS, this scenario becomes simpler to implement. Table SAS can be used to allow the client app to directly access the customer’s address book data that is stored in a Windows Azure Table. This approach greatly improves the scalability of the system and reduces cost by taking the service out of the data path whenever the client app accesses the address book data. The service’s role in this case is restricted to processing users’ subscriptions to the service and generating the SAS tokens that the client app uses to access the stored data directly. Since the token can be granted for any selected time period, the application needs to contact the token-generating service only once per selected time period for a given type of access per table. This way, Table SAS improves performance and makes it easy to scale out the system while decreasing operating cost, since fewer servers are needed.

The design of the system using Table SAS would be as follows: a Windows Azure Table called “AddressBook” will be used to store the address book entries for all customers. The PartitionKey will be the customer’s username or customerID, and the RowKey will represent the address book entry key, defined as the contact’s LastName,FirstName. This means that all the entries for a certain customer share the same PartitionKey, the customerID, so a customer's whole address book is contained within a single partition. The following C# class describes the address book entity.

```csharp
using System;
using System.Data.Services.Common;

[DataServiceKey("PartitionKey", "RowKey")]
public class AddressBookEntry
{
    public AddressBookEntry(string partitionKey, string rowKey)
    {
        this.PartitionKey = partitionKey;
        this.RowKey = rowKey;
    }

    public AddressBookEntry() { }

    /// <summary>
    /// Account CustomerID
    /// </summary>
    public string PartitionKey { get; set; }

    /// <summary>
    /// Contact Identifier LastName,FirstName
    /// </summary>
    public string RowKey { get; set; }

    /// <summary>
    /// The last modified time of the entity, set by
    /// Windows Azure Storage
    /// </summary>
    public DateTime Timestamp { get; set; }

    public string Address { get; set; }

    public string Email { get; set; }

    public string PhoneNumber { get; set; }
}
```
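Because the RowKey is built from user-supplied names, it is worth validating it before writing. The helper below is a hypothetical addition, not part of the sample or the library. It relies on the Table service rule that PartitionKey and RowKey values may not contain '/', '\', '#', '?', or control characters (U+0000 to U+001F and U+007F to U+009F).

```csharp
using System;

// Hypothetical helpers for the key design above:
// PartitionKey = customer ID, RowKey = "LastName,FirstName".
public static class AddressBookKeys
{
    public static bool IsValidKey(string key)
    {
        if (key == null) return false;
        foreach (char c in key)
        {
            // char.IsControl covers exactly U+0000-U+001F and U+007F-U+009F.
            if (c == '/' || c == '\\' || c == '#' || c == '?' || char.IsControl(c))
            {
                return false;
            }
        }
        return true;
    }

    public static string MakeRowKey(string lastName, string firstName)
    {
        string rowKey = lastName + "," + firstName;
        if (!IsValidKey(rowKey))
        {
            throw new ArgumentException("Contact name contains a character not allowed in a RowKey.");
        }
        return rowKey;
    }
}
```

Since entities within a partition are sorted by RowKey, putting the last name first also keeps each customer's contacts ordered by last name.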

The address book service consists of the following two components:

- A SAS token producer, which runs as part of a service on Windows Azure Compute, accepts requests from the client app asking for a SAS token that gives it access to a particular customer’s address book data. This service would first authenticate the client app through its preferred authentication scheme, and then generate a SAS token that grants access to the “AddressBook” table while restricting the view to the PartitionKey that is equal to the customerID. Full permissions are given in order to allow the client app to query (r), update (u), add (a), and delete (d) address book entries. Access time is restricted to 30 minutes so that the service can deny further access to certain customers, for example when a customer's address book subscription has expired; in that case, no further renewal of the SAS token would be permitted. The 30-minute period greatly reduces the load on the SAS token producer compared to a service that acts as a proxy for every request.

- The client app is responsible for interacting with the customer, through which the customer can query, update, insert, and delete address book entries. The client app would first contact the SAS producer service to retrieve a SAS token and cache it locally while the token is still valid. The SAS token would be used with any Table REST request against Windows Azure Storage. The client app would request a new SAS token whenever the current one approaches its expiry time. A standard approach is to renew the SAS every N minutes, where N is half of the time the allocated SAS tokens are valid. For this example, the SAS tokens are valid for 30 minutes, so the client renews the SAS once every 15 minutes. This gives the client time to alert and retry if there is any issue obtaining a SAS renewal. It also helps in cases where application and network latencies cause requests to be delayed in reaching the Windows Azure Storage system.
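The "renew at half the lifetime" rule can be isolated in a small value type. SasLease is a hypothetical name used only for this sketch; it is equivalent to the Client sample later in this post, which refreshes when the token is within 15 minutes of a 30-minute expiry.

```csharp
using System;

// Hypothetical helper: a SAS token treated as a lease that should be renewed
// after half of its lifetime (N = lifetime / 2).
public sealed class SasLease
{
    public DateTime IssuedUtc { get; private set; }
    public TimeSpan Lifetime { get; private set; }

    public SasLease(DateTime issuedUtc, TimeSpan lifetime)
    {
        IssuedUtc = issuedUtc;
        Lifetime = lifetime;
    }

    public DateTime ExpiresUtc { get { return IssuedUtc + Lifetime; } }

    // For a 30-minute token, renewal is due 15 minutes after issue.
    public DateTime RenewAtUtc { get { return IssuedUtc + TimeSpan.FromTicks(Lifetime.Ticks / 2); } }

    public bool NeedsRenewal(DateTime utcNow) { return utcNow >= RenewAtUtc; }

    public bool IsExpired(DateTime utcNow) { return utcNow >= ExpiresUtc; }
}
```

Renewing at the halfway point leaves the other half of the lifetime as a buffer for retries and for network latency.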

The SAS producer code can be found below. It is represented by the SasProducer class, whose RequestSasToken method is responsible for issuing a SAS token to the client app. In this example, the communication between the client app and the SAS producer is assumed to be a method call for illustration purposes: the client app simply invokes the RequestSasToken method whenever it requires a new token to be generated.

```csharp
/// <summary>
/// The producer class that controls access to the address book
/// by generating SAS tokens for clients requesting access to their
/// own address book data
/// </summary>
public class SasProducer
{
    /* ... */

    /// <summary>
    /// Issues a SAS token authorizing access to the address book for a given customer ID.
    /// </summary>
    /// <param name="customerId">The customer ID requesting access.</param>
    /// <returns>A SAS token authorizing access to the customer's address book entries.</returns>
    public string RequestSasToken(string customerId)
    {
        // Omitting any authentication code since this is beyond the scope of
        // this sample code

        // Create a shared access policy that expires in 30 minutes.
        // No start time is specified, which means that the token is valid immediately.
        // The policy specifies full permissions.
        SharedAccessTablePolicy policy = new SharedAccessTablePolicy()
        {
            SharedAccessExpiryTime = DateTime.UtcNow.AddMinutes(
                SasProducer.AccessPolicyDurationInMinutes),
            Permissions = SharedAccessTablePermissions.Add
                | SharedAccessTablePermissions.Query
                | SharedAccessTablePermissions.Update
                | SharedAccessTablePermissions.Delete
        };

        // Generate the SAS token. No access policy identifier is used, which
        // makes it a non-revocable token.
        // The partition key range limits the table SAS access to the
        // requesting customer's ID only.
        string sasToken = this.addressBookTable.GetSharedAccessSignature(
            policy /* access policy */,
            null /* access policy identifier */,
            customerId /* start partition key */,
            null /* start row key */,
            customerId /* end partition key */,
            null /* end row key */);

        return sasToken;
    }
}
```

Note that by not setting the SharedAccessStartTime, Windows Azure Storage would assume that the SAS is valid upon the receipt of the request.

The client app code can be found below. It is represented by the Client class, which exposes public methods for manipulating the customer’s address book, such as UpsertEntry and LookupByName. Internally, these methods request a SAS token from the service front end, represented by the SasProducer, when needed.

```csharp
/// <summary>
/// The address book client class.
/// </summary>
public class Client
{
    /// <summary>
    /// When to refresh the credentials, measured as a number of minutes before expiration.
    /// </summary>
    private const int SasRefreshThresholdInMinutes = 15;

    /// <summary>
    /// The cached copy of the SAS credentials for the customer's address book
    /// </summary>
    private StorageCredentialsSharedAccessSignature addressBookSasCredentials;

    /// <summary>
    /// SAS expiration time, used to determine when a refresh is needed
    /// </summary>
    private DateTime addressBookSasExpiryTime;

    /* ... */

    /// <summary>
    /// Gets the Table SAS storage credentials for accessing the address book
    /// of this particular customer.
    /// The method automatically refreshes the credentials as needed
    /// and caches them locally.
    /// </summary>
    public StorageCredentials GetAddressBookSasCredentials()
    {
        // Refresh the credentials if needed.
        if (this.addressBookSasCredentials == null ||
            DateTime.UtcNow.AddMinutes(SasRefreshThresholdInMinutes) >= this.addressBookSasExpiryTime)
        {
            this.RefreshAccessCredentials();
        }

        return this.addressBookSasCredentials;
    }

    /// <summary>
    /// Requests a new SAS token from the producer, and updates the cached credentials
    /// and the expiration time.
    /// </summary>
    public void RefreshAccessCredentials()
    {
        // Request the SAS token.
        string sasToken = this.addressBookService.RequestSasToken(this.customerId);

        // Create credentials using the new token.
        this.addressBookSasCredentials = new StorageCredentialsSharedAccessSignature(sasToken);

        // This local estimate is slightly later than the producer's actual expiry
        // (it is taken after the round trip); the 15-minute threshold absorbs that.
        this.addressBookSasExpiryTime = DateTime.UtcNow.AddMinutes(
            SasProducer.AccessPolicyDurationInMinutes);
    }

    /// <summary>
    /// Retrieves the address book entry for the given contact name.
    /// </summary>
    /// <param name="contactname">
    /// The LastName,FirstName for the requested address book entry.</param>
    /// <returns>An address book entry with a certain contact card, or null if none exists</returns>
    public AddressBookEntry LookupByName(string contactname)
    {
        StorageCredentials credentials = GetAddressBookSasCredentials();
        CloudTableClient tableClient = new CloudTableClient(this.tableEndpoint, credentials);
        TableServiceContext context = tableClient.GetDataServiceContext();

        // Return null rather than throwing when the contact does not exist.
        context.IgnoreResourceNotFoundException = true;

        CloudTableQuery<AddressBookEntry> query =
            (from entry in context.CreateQuery<AddressBookEntry>(Client.AddressBookTableName)
             where entry.PartitionKey == this.customerId && entry.RowKey == contactname
             select entry).AsTableServiceQuery();

        return query.Execute().SingleOrDefault();
    }

    /// <summary>
    /// Inserts a new address book entry or updates an existing entry.
    /// </summary>
    /// <param name="entry">The address book entry to insert or merge.</param>
    public void UpsertEntry(AddressBookEntry entry)
    {
        StorageCredentials credentials = GetAddressBookSasCredentials();
        CloudTableClient tableClient = new CloudTableClient(this.tableEndpoint, credentials);
        TableServiceContext context = tableClient.GetDataServiceContext();

        // Set the correct customer ID.
        entry.PartitionKey = this.customerId;

        // Upsert the entry (Insert or Merge). Attaching without an ETag sends
        // no If-Match header, so the Merge request acts as Insert Or Merge.
        context.AttachTo(Client.AddressBookTableName, entry);
        context.UpdateObject(entry);
        context.SaveChangesWithRetries();
    }
}
```

Stored Access Policy Sample Code

As an extension to the previous example, consider that the address book service implements a garbage collector (GC) that deletes the address book data of users who are no longer consumers of the service. In this case, to reduce the chance of the storage account credentials being compromised, the GC worker role would use a Table SAS token with maximum access time that is backed by a stored access policy associated with a signed identifier. The Table SAS token would grant access to the “AddressBook” table without any range restrictions on the PartitionKey and RowKey, but with only query and delete permissions (the worker must query a customer's entries in order to delete them). If the SAS token gets leaked, the service owner would be able to revoke the SAS access by deleting the signed identifier associated with the “AddressBook” table, as shown later in the code. To be sure that the SAS access does not get inadvertently reinstated after revocation, the policy identifier includes the policy’s date and time of creation as part of its name. (See the section on Best Practices When Using SAS above.)
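Embedding the creation time is only robust if the format is fixed: a culture-dependent DateTime.ToString() can produce spaces, slashes, or colons. The helper below is a hypothetical sketch that uses a culture-invariant, sortable timestamp; the 64-character maximum is our reading of the signed identifier limit in the REST documentation and should be verified there.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: builds a signed identifier that embeds its creation time,
// so a revoked identifier is never accidentally reused.
public static class PolicyIdentifiers
{
    // Assumed maximum signed identifier length (see the REST documentation).
    public const int MaxLength = 64;

    public static string Create(string prefix, DateTime utcNow)
    {
        // Fixed, culture-invariant format: no spaces, slashes, or colons.
        string id = prefix + utcNow.ToUniversalTime()
            .ToString("yyyyMMdd'T'HHmmss'Z'", CultureInfo.InvariantCulture);

        if (id.Length > MaxLength)
        {
            throw new ArgumentException("Signed identifier would exceed " + MaxLength + " characters.");
        }
        return id;
    }
}
```

Note that two policies created with the same prefix within the same second would collide, so a caller issuing policies rapidly would need an extra distinguishing suffix.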

In addition, assume that the GC worker role learns which customerIDs it needs to garbage collect through a queue called “gcqueue”. Whenever a customer subscription expires, a message is enqueued into the “gcqueue” queue. The GC worker role keeps polling that queue at a regular interval. Once a customerID is dequeued, the worker role deletes that customer’s data and, on completion, deletes the queue message associated with that customer. For the same reasons a SAS token is used to access the “AddressBook” table, the GC worker role also uses a Queue SAS token associated with the “gcqueue” queue, backed by a stored access policy as well. The only permission needed in this case is Process. More details on Queue SAS are available in the subsequent sections of this post.
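The dequeue, process, then delete pattern is what makes the GC safe to interrupt. The in-memory class below is a simplified model of that behavior, not the Queue service or its client API: a retrieved message is hidden for a visibility timeout and comes back unless it is deleted, and a delete succeeds only with the receipt from the most recent retrieval (analogous to the service's pop receipt).

```csharp
using System;
using System.Collections.Generic;

// Simplified in-memory model of queue visibility timeouts and pop receipts.
public sealed class VisibilityQueue
{
    private sealed class Entry
    {
        public string Body;
        public DateTime VisibleAtUtc;
        public Guid PopReceipt; // Guid.Empty until the message is first retrieved
    }

    private readonly List<Entry> entries = new List<Entry>();

    public void Add(string body, DateTime utcNow)
    {
        entries.Add(new Entry { Body = body, VisibleAtUtc = utcNow });
    }

    // Returns (body, receipt) for the first visible message and hides it for
    // visibilityTimeout, or returns null when no message is visible.
    public Tuple<string, Guid> Get(DateTime utcNow, TimeSpan visibilityTimeout)
    {
        foreach (Entry e in entries)
        {
            if (e.VisibleAtUtc <= utcNow)
            {
                e.VisibleAtUtc = utcNow + visibilityTimeout;
                e.PopReceipt = Guid.NewGuid();
                return Tuple.Create(e.Body, e.PopReceipt);
            }
        }
        return null;
    }

    // Deletes only if the receipt is still current (not re-retrieved since).
    public bool Delete(Guid popReceipt)
    {
        if (popReceipt == Guid.Empty) return false;
        return entries.RemoveAll(e => e.PopReceipt == popReceipt) > 0;
    }
}
```

If a worker crashes after retrieving "davidhamilton" but before deleting the message, the message reappears once the timeout elapses and another pass repeats the deletion, which is harmless because deleting already-deleted entries is idempotent from the GC's point of view.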

To build this additional GC feature, the SAS token producer is extended to generate a one-time Table SAS token against the “AddressBook” table and a one-time Queue SAS token against the “gcqueue” queue, by associating each of them with a stored access policy signed identifier on its respective table or queue, as explained earlier. Upon initialization, the GC role contacts the SAS token producer to retrieve these two SAS tokens.

The additional code needed as part of the SAS producer is as follows.

```csharp
public const string GCQueueName = "gcqueue";

/// <summary>
/// The garbage collection queue.
/// </summary>
private CloudQueue gcQueue;

/// <summary>
/// Generates revocable SAS tokens for the address book table and the GC queue,
/// to be used by the GC worker role
/// </summary>
/// <param name="tableSasToken">
/// An out parameter which returns a revocable SAS token to
/// access the AddressBook table with query and delete permissions</param>
/// <param name="queueSasToken">
/// An out parameter which returns a revocable SAS token to
/// access the gcqueue with process permissions</param>
public void GetGCSasTokens(out string tableSasToken, out string queueSasToken)
{
    // Embed the creation time in a fixed, culture-invariant format so that a
    // revoked identifier is never inadvertently reused.
    string gcPolicySignedIdentifier = "GCAccessPolicy" + DateTime.UtcNow.ToString(
        "yyyyMMdd'T'HHmmss'Z'", System.Globalization.CultureInfo.InvariantCulture);

    // Create the GC worker's address book SAS policy
    // that will be associated with a signed identifier
    TablePermissions addressBookPermissions = new TablePermissions();
    SharedAccessTablePolicy gcTablePolicy = new SharedAccessTablePolicy()
    {
        // Providing the max duration
        SharedAccessExpiryTime = DateTime.MaxValue,

        // Permission is granted to query and delete entries.
        Permissions = SharedAccessTablePermissions.Query | SharedAccessTablePermissions.Delete
    };

    // Associate the above policy with a signed identifier
    addressBookPermissions.SharedAccessPolicies.Add(
        gcPolicySignedIdentifier,
        gcTablePolicy);

    // The below call results in a Set Table ACL request being sent to
    // Windows Azure Storage in order to store the policy and associate it with
    // the gcPolicySignedIdentifier signed identifier that will be referred to
    // by the generated SAS token. It replaces any policies previously stored on the table.
    this.addressBookTable.SetPermissions(addressBookPermissions);

    // Create the SAS token using the above policy.
    // There are no restrictions on partition key and row key.
    // The policy settings live in the stored policy, so an empty policy is passed
    // and only the signed identifier is embedded in the token.
    // No requests will be sent to Windows Azure Storage when the below call is made.
    tableSasToken = this.addressBookTable.GetSharedAccessSignature(
        new SharedAccessTablePolicy(),
        gcPolicySignedIdentifier,
        null /* start partition key */,
        null /* start row key */,
        null /* end partition key */,
        null /* end row key */);

    // Initialize the garbage collection queue and create a Queue SAS token
    // by following steps similar to the table SAS
    CloudQueueClient queueClient =
        this.serviceStorageAccount.CreateCloudQueueClient();
    this.gcQueue = queueClient.GetQueueReference(GCQueueName);
    this.gcQueue.CreateIfNotExist();

    // Create the GC queue SAS policy.
    QueuePermissions gcQueuePermissions = new QueuePermissions();
    SharedAccessQueuePolicy gcQueuePolicy = new SharedAccessQueuePolicy()
    {
        // Providing the max duration
        SharedAccessExpiryTime = DateTime.MaxValue,

        // Permission is granted to process queue messages.
        Permissions = SharedAccessQueuePermissions.ProcessMessages
    };

    // Associate the above policy with a signed identifier
    gcQueuePermissions.SharedAccessPolicies.Add(
        gcPolicySignedIdentifier,
        gcQueuePolicy);

    // The below call results in a Set Queue ACL request being sent to
    // Windows Azure Storage in order to store the policy and associate it with
    // the gcPolicySignedIdentifier signed identifier that will be referred to
    // by the generated SAS token
    this.gcQueue.SetPermissions(gcQueuePermissions);

    // Create the SAS token using the above policy, which
    // uses the signed identifier as part of the token.
    // No requests will be sent to Windows Azure Storage when the below call is made.
    queueSasToken = this.gcQueue.GetSharedAccessSignature(
        new SharedAccessQueuePolicy(),
        gcPolicySignedIdentifier);
}
```

Whenever a customer’s data needs to be deleted, the following method is called; for simplicity, it is assumed to be part of the SasProducer class.

```csharp
/// <summary>
/// Flags the given customer ID for garbage collection.
/// </summary>
/// <param name="customerId">The customer ID to delete.</param>
public void DeleteCustomer(string customerId)
{
    // Add the customer ID to the GC queue.
    CloudQueueMessage message = new CloudQueueMessage(customerId);
    this.gcQueue.AddMessage(message);
}
```

If a SAS token needs to be revoked, the following method would be invoked. Once it is called, any malicious user who might have gained access to these SAS tokens will be denied access. The garbage collector could then request new tokens from the SAS producer.

```csharp
/// <summary>
/// Revokes revocable SAS access to a table that is associated
/// with a policy referred to by the signedIdentifier
/// </summary>
/// <param name="table">
/// Reference to the CloudTable in question.
/// The table reference must be created with the account's shared key
/// credentials, since otherwise Get/Set Table ACL would fail</param>
/// <param name="signedIdentifier">The SAS signedIdentifier to revoke</param>
public void RevokeAccessToTable(CloudTable table, string signedIdentifier)
{
    // Retrieve the current policies and SAS signedIdentifiers
    // associated with the table by invoking Get Table ACL
    TablePermissions tablePermissions = table.GetPermissions();

    // Attempt to remove the signedIdentifier to revoke from the list
    bool success = tablePermissions.SharedAccessPolicies.Remove(signedIdentifier);

    if (success)
    {
        // Commit the changes by invoking Set Table ACL on the same table,
        // without the signedIdentifier that needs revoking
        table.SetPermissions(tablePermissions);
    }

    // else the signedIdentifier does not exist, therefore no need to
    // call Set Table ACL
}
```

The garbage collection code that uses the generated SAS tokens is as follows.

```csharp
using System;
using System.Data.Services.Client;
using System.Linq;
using System.Threading;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

/// <summary>
/// The garbage collection worker class.
/// </summary>
public class GCWorker
{
    /// <summary>
    /// The address book table.
    /// </summary>
    private CloudTable addressBook;

    /// <summary>
    /// The garbage collection queue.
    /// </summary>
    private CloudQueue gcQueue;

    /// <summary>
    /// Initializes a new instance of the GCWorker class
    /// by passing in the required SAS credentials to access the
    /// AddressBook table and the gcqueue queue
    /// </summary>
    public GCWorker(
        string tableEndpoint,
        string sasTokenForAddressBook,
        string queueEndpoint,
        string sasTokenForQueue)
    {
        StorageCredentials credentialsForAddressBook =
            new StorageCredentialsSharedAccessSignature(sasTokenForAddressBook);
        CloudTableClient tableClient =
            new CloudTableClient(tableEndpoint, credentialsForAddressBook);
        this.addressBook =
            tableClient.GetTableReference(SasProducer.AddressBookTableName);

        StorageCredentials credentialsForQueue =
            new StorageCredentialsSharedAccessSignature(sasTokenForQueue);
        CloudQueueClient queueClient =
            new CloudQueueClient(queueEndpoint, credentialsForQueue);
        this.gcQueue =
            queueClient.GetQueueReference(SasProducer.GCQueueName);
    }

    /// <summary>
    /// Starts the GC worker, which polls the GC queue for messages
    /// containing customerIDs to be garbage collected.
    /// </summary>
    public void Start()
    {
        while (true)
        {
            // Get a message from the queue, setting its visibility timeout to 2 minutes
            CloudQueueMessage message = this.gcQueue.GetMessage(TimeSpan.FromMinutes(2));

            // If there are no messages, sleep and retry.
            if (message == null)
            {
                Thread.Sleep(TimeSpan.FromMinutes(1));
                continue;
            }

            // The customer ID is the message body.
            string customerIDToGC = message.AsString;

            // Create a context for querying and modifying the address book.
            TableServiceContext context = this.addressBook.ServiceClient.GetDataServiceContext();

            // Find all entries for the given customer.
            CloudTableQuery<AddressBookEntry> query =
                (from entry in context.CreateQuery<AddressBookEntry>(this.addressBook.Name)
                 where entry.PartitionKey == customerIDToGC
                 select entry).AsTableServiceQuery();

            int numberOfEntriesInBatch = 0;

            // Delete entries in batches, since all of the contact entries share
            // the same PartitionKey
            foreach (AddressBookEntry r in query.Execute())
            {
                context.DeleteObject(r);
                numberOfEntriesInBatch++;

                if (numberOfEntriesInBatch == 100)
                {
                    // Commit the batch of 100 deletions to the service.
                    context.SaveChangesWithRetries(SaveChangesOptions.Batch);
                    numberOfEntriesInBatch = 0;
                }
            }

            if (numberOfEntriesInBatch > 0)
            {
                // Commit the remaining deletions (if any) to the service.
                context.SaveChangesWithRetries(SaveChangesOptions.Batch);
            }

            // Delete the message from the queue.
            this.gcQueue.DeleteMessage(message);
        }
    }
}
```

For completeness, we are providing the following Main method to illustrate the above classes and allow you to test the sample code.

public static void Main()

{

string accountName = "someaccountname";

string accountKey = "someaccountkey";

string tableEndpoint = string.Format(

"https://{0}.table.core.windows.net", accountName);

string queueEndpoint = string.Format(

"https://{0}.queue.core.windows.net", accountName);

CloudStorageAccount storageAccount = CloudStorageAccount.Parse(

string.Format(

"DefaultEndpointsProtocol=https;AccountName={0};AccountKey={1}",

accountName, accountKey));

SasProducer sasProducer = new SasProducer(storageAccount);

string sasTokenForAddressBook, sasTokenForQueue;

// Get the revocable GC SAS tokens

sasProducer.GetGCSasTokens(out sasTokenForAddressBook, out sasTokenForQueue);

// Initialize and start the GC Worker

GCWorker gcWorker = new GCWorker(

tableEndpoint,

sasTokenForAddressBook,

queueEndpoint,

sasTokenForQueue);

ThreadPool.QueueUserWorkItem((state) => gcWorker.Start());

string customerId = "davidhamilton";

// Create a client object

Client client = new Client(sasProducer, tableEndpoint, customerId);

// Add some address book entries

AddressBookEntry contactEntry = new AddressBookEntry

{

RowKey = "Harp,Walter",

Address = "1345 Fictitious St, St Buffalo, NY 98052",

PhoneNumber = "425-555-0101"

};

client.UpsertEntry(contactEntry);

contactEntry = new AddressBookEntry

{

RowKey = "Foster,Jonathan",

Email = "Jonathan@fourthcoffee.com"

};

client.UpsertEntry(contactEntry);

contactEntry = new AddressBookEntry

{

RowKey = "Miller,Lisa",

PhoneNumber = "425-555-2141"

};

client.UpsertEntry(contactEntry);

// Update Walter's Contact entry with an email address

contactEntry = new AddressBookEntry

{

RowKey = "Harp,Walter",

Email = "Walter@contoso.com"

};

client.UpsertEntry(contactEntry);

// Look up an entry

contactEntry = client.LookupByName("Foster,Jonathan");

// Delete the customer

sasProducer.DeleteCustomer(customerId);

// Wait for GC

Thread.Sleep(TimeSpan.FromSeconds(120));

}

Queue SAS

Queue SAS allows account owners to grant access to a queue by defining the following restrictions on the SAS policy:

- Access permissions (sp): users can grant access rights to the specified queue, such as Read or Peek at messages (r), Add message (a), Update message (u), and Process message (p), which allows the Get Messages and Delete Message REST APIs to be invoked, or any combination of these permissions. Note that the Process message (p) permission potentially allows a client to get and delete every message in the queue, so clients receiving it must be sufficiently trusted for the queue being accessed.

- Time range (st/se): users can limit the period during which the SAS token grants access, or choose to provide access for the maximum duration.

- Server stored access policy (si): users can either generate offline SAS tokens, where the policy permissions described above are part of the SAS token, or store specific policy settings associated with the queue. These stored policy settings are limited to the time range (start time and expiry time) and the access permissions. Stored access policies provide additional control over generated SAS tokens, since the policy settings can be changed at any time without the need to issue a new token. In addition, they make it possible to revoke SAS access without changing the account's key.
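Whether a token is revocable can be read from its query string: a token carrying an si parameter refers to a server stored access policy, while a token without si embeds its permissions and time range directly. The sketch below is a hypothetical helper (not part of the client library) that splits a SAS token into its query parameters, for example to log or sanity-check a token loaded from configuration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for inspecting SAS tokens; not part of the client library.
public static class SasTokenParser
{
    // Splits a token such as "?sv=...&se=...&sp=a&sig=..." into decoded name/value pairs.
    public static Dictionary<string, string> Parse(string sasToken)
    {
        Dictionary<string, string> parameters =
            new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

        string[] pairs = sasToken.TrimStart('?').Split(
            new[] { '&' }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string pair in pairs)
        {
            int separator = pair.IndexOf('=');
            string name = separator < 0 ? pair : pair.Substring(0, separator);
            string value = separator < 0 ? string.Empty : pair.Substring(separator + 1);

            // Values such as se and sig are percent-encoded in the token.
            parameters[Uri.UnescapeDataString(name)] = Uri.UnescapeDataString(value);
        }

        return parameters;
    }

    // True if the token references a server stored access policy (si) and can therefore be revoked.
    public static bool IsRevocable(string sasToken)
    {
        return Parse(sasToken).ContainsKey("si");
    }
}
```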

For more information on the different policy settings for Queue SAS and the REST interface, please refer to the SAS MSDN documentation.

A typical scenario for Queue SAS is a notification system, where the notification producer needs add-only access to the queue and the consumer needs read and process access to it.
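The sp value is a compact string of permission letters. As a sketch, the hypothetical `QueueSasPermissions` flags enum below (distinct from the client library's own SharedAccessQueuePermissions) maps permission combinations to the letters described above, emitted in the r, a, u, p order in which they are listed. Check the MSDN documentation for the exact ordering rules the service enforces.

```csharp
using System;
using System.Text;

// Hypothetical flags enum mirroring the four queue SAS permissions.
[Flags]
public enum QueueSasPermissions
{
    None = 0,
    Read = 1,            // r: read or peek at messages
    Add = 2,             // a: add messages
    Update = 4,          // u: update messages
    ProcessMessages = 8  // p: get and delete messages
}

public static class QueueSasPermissionFormatter
{
    // Builds the sp query parameter value, emitting letters in r, a, u, p order.
    public static string ToSignedPermissions(QueueSasPermissions permissions)
    {
        StringBuilder sp = new StringBuilder();
        if ((permissions & QueueSasPermissions.Read) != 0) sp.Append('r');
        if ((permissions & QueueSasPermissions.Add) != 0) sp.Append('a');
        if ((permissions & QueueSasPermissions.Update) != 0) sp.Append('u');
        if ((permissions & QueueSasPermissions.ProcessMessages) != 0) sp.Append('p');
        return sp.ToString();
    }
}
```

In the notification scenario, the producer would receive a token with sp "a" (Add), and the consumer one with sp "rp" (Read | ProcessMessages).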

As an example, consider a video processing service that works on videos provided by its customers. The source videos are stored in the customer's Windows Azure Storage account. Once a video is processed by the service, the resulting video is stored back in the customer's account. The service provides transcoding to different video qualities, such as 240p, 480p and 720p. Whenever there are new videos to be processed, the customer's client app sends a request to the service that includes the source video blob, the destination video blob and the requested transcoding quality. The service then transcodes the source video and stores the result in the customer's account, at the location denoted by the destination blob. Without Queue SAS, such a service would consist of three components:

- Client: creates a SAS token with read permission for the source video blob and a SAS token with write permission for the destination blob, then sends a request to the processing service front-end along with the requested video transcoding quality.

- Video processing service front-end: accepts requests by first authenticating the sender using its own preferred authentication scheme. Once the sender is authenticated, the front-end enqueues a work item into a Windows Azure Queue called “videoprocessingqueue”, which is processed by a number of video processor worker role instances.

- Video processor worker role: dequeues work items from “videoprocessingqueue” and processes each request by transcoding the video. The worker role can also extend the visibility timeout of a work item if more processing time is needed.

This design requires the number of front-ends to scale up with the number of requests and customers in order to keep up with demand. In addition, client applications are not isolated from unavailability of the front-ends. Having the client application interface directly with the scalable, highly available and durable queue through SAS greatly reduces this requirement, lets the service run more efficiently with fewer computational resources, and decouples client applications from the availability of the front-ends. In this design, the front-end role instead issues SAS tokens granting add-message permission on “videoprocessingqueue” for, say, 2 hours, and the client uses the SAS token to enqueue requests. Because the enqueue requests go directly from the client to storage instead of through the front-end, the load on the front-end decreases greatly. The system design then looks like this:

- Client: creates a SAS token with read permission for the source video blob and a SAS token with write permission for the destination blob. It then contacts the front-end to retrieve a SAS token for the “videoprocessingqueue” queue and enqueues a video processing work item. The client caches the queue SAS token for up to 2 hours and renews it well before it expires.

- Video processing service front-end: accepts requests by first authenticating the sender. Once the sender is authenticated, it issues SAS tokens for the “videoprocessingqueue” queue with add-message permission and a duration limited to 2 hours.

- Video processor worker role: The responsibility of this worker role would remain unchanged from the previous design.
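The client-side caching described above comes down to one rule: reuse the cached token until its remaining lifetime drops below a refresh threshold, then ask the front-end for a new one. The sketch below is a hypothetical, library-independent `SasTokenCache`; the token request is passed in as a delegate (standing in for the call to the front-end) and the clock is injectable so that the refresh rule can be tested.

```csharp
using System;

// Hypothetical token cache; the requestToken delegate stands in for a call to the front-end.
public class SasTokenCache
{
    private readonly Func<string> requestToken;
    private readonly TimeSpan tokenLifetime;     // lifetime granted by the front-end
    private readonly TimeSpan refreshThreshold;  // renew this long before expiry
    private readonly Func<DateTime> utcNow;      // injectable clock

    private string cachedToken;
    private DateTime expiryTime;

    public SasTokenCache(Func<string> requestToken, TimeSpan tokenLifetime,
        TimeSpan refreshThreshold, Func<DateTime> utcNow)
    {
        this.requestToken = requestToken;
        this.tokenLifetime = tokenLifetime;
        this.refreshThreshold = refreshThreshold;
        this.utcNow = utcNow;
    }

    public string GetToken()
    {
        DateTime now = this.utcNow();
        if (this.cachedToken == null || now + this.refreshThreshold >= this.expiryTime)
        {
            // 'now' is captured before the request, so the cached expiry time is
            // never later than the expiry the front-end puts into the token.
            this.cachedToken = this.requestToken();
            this.expiryTime = now + this.tokenLifetime;
        }

        return this.cachedToken;
    }
}
```

With a 2-hour token and a 60-minute threshold, the client renews about once an hour, so it never presents a token during the last hour of its validity.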

We will now highlight the use of Queue SAS through code for the video processing service. Authentication and the actual video transcoding code are omitted for simplicity.

First, we define the video processing work item, TranscodingWorkItem, as follows.

/// <summary>

/// Enum representing the target video quality requested by the client

/// </summary>

public enum VideoQuality

{

quality240p,

quality480p,

quality720p

}

/// <summary>

/// Class representing the queue message enqueued by the client

/// and processed by the video processing worker role

/// </summary>

public class TranscodingWorkItem

{

/// <summary>

/// Blob URL for the source Video that needs to be transcoded

/// </summary>

public string SourceVideoUri { get; set; }

/// <summary>

/// Blob URL for the resulting video that will be produced

/// </summary>

public string DestinationVideoUri { get; set; }

/// <summary>

/// SAS token for the source video with read-only access

/// </summary>

public string SourceSasToken { get; set; }

/// <summary>

/// SAS token for destination video with write-only access

/// </summary>

public string DestinationSasToken { get; set; }

/// <summary>

/// The requested video quality

/// </summary>

public VideoQuality TargetVideoQuality { get; set; }

/// <summary>

/// Converts the xml representation of the queue message into a TranscodingWorkItem object

/// This API is used by the Video Processing Worker role

/// </summary>

/// <param name="messageContents">XML snippet representing the TranscodingWorkItem</param>

/// <returns>The deserialized TranscodingWorkItem.</returns>

public static TranscodingWorkItem FromMessage(string messageContents)

{

XmlSerializer mySerializer = new XmlSerializer(typeof(TranscodingWorkItem));

StringReader reader = new StringReader(messageContents);

return (TranscodingWorkItem)mySerializer.Deserialize(reader);

}

/// <summary>

/// Serializes this TranscodingWorkItem object to an XML string that is

/// used as a queue message.

/// This API is used by the client

/// </summary>

/// <returns>The XML representation of this work item.</returns>

public string ToMessage()

{

XmlSerializer mySerializer = new XmlSerializer(typeof(TranscodingWorkItem));

StringWriter writer = new StringWriter();

mySerializer.Serialize(writer, this);

writer.Close();

return writer.ToString();

}

}
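Because the work item travels as a queue message, its serialized form has to fit within the service's message size limit, which is 64 KB for the 2011-08-18 and later REST versions. The sketch below additionally assumes that the client library Base64-encodes message content by default, which leaves room for about 48 KB of UTF-8 text. `QueueMessageSize.Fits` is a hypothetical check a client could apply to the string returned by ToMessage() before enqueuing it.

```csharp
using System;
using System.Text;

// Hypothetical size check; assumes a 64 KB limit and Base64-encoded message content.
public static class QueueMessageSize
{
    // Assumed maximum queue message size in bytes.
    public const int MaxMessageBytes = 64 * 1024;

    // Size of the message body after UTF-8 encoding followed by Base64 encoding.
    public static int EncodedSize(string content)
    {
        int utf8Bytes = Encoding.UTF8.GetByteCount(content);

        // Base64 emits 4 characters for every (possibly partial) group of 3 bytes.
        return 4 * ((utf8Bytes + 2) / 3);
    }

    public static bool Fits(string content)
    {
        return EncodedSize(content) <= MaxMessageBytes;
    }
}
```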

Below, we highlight the code needed by the front-end part of the service, which acts as a SAS generator. This component generates two types of SAS tokens: a non-revocable token, limited to 2 hours, that is consumed by clients; and a one-time, revocable token with the maximum duration, which is used by the video processing worker role.

/// <summary>

/// SAS Generator component that is running as part of the service front-end

/// </summary>

public class SasProducer

{

/* ... */

/// <summary>

/// API invoked by Clients in order to get a SAS token

/// that allows them to add messages to the queue.

/// The token will have add-message permission with a 2 hour limit.

/// </summary>

/// <returns>A SAS token authorizing access to the video processing queue.</returns>

public string GetClientSasToken()

{

// The shared access policy should expire in two hours.

// No start time is specified, which means that the token is valid immediately.

// The policy specifies add-message permissions.

SharedAccessQueuePolicy policy = new SharedAccessQueuePolicy()

{

SharedAccessExpiryTime = DateTime.UtcNow.Add(SasProducer.SasTokenDuration),

Permissions = SharedAccessQueuePermissions.Add

};

// Generate the SAS token. No access policy identifier is used,

// which makes the token non-revocable.

// The token is generated locally, without issuing any calls

// against Windows Azure Storage.

string sasToken = this.videoProcessingQueue.GetSharedAccessSignature(

policy /* access policy */,

null /* access policy identifier */);

return sasToken;

}

/// <summary>

/// Generates a revocable SAS token that is used by the video processing

/// worker roles. The token grants process and update message

/// permissions.

/// </summary>

/// <returns>A revocable SAS token for the video processing queue.</returns>

public string GetSasTokenForProcessingMessages()

{

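// A signed identifier is needed to associate a SAS with a server stored policy.

// A culture-invariant UTC timestamp gives the identifier a predictable format, free of

// the spaces and culture-specific separators that DateTime.ToString() can produce.

string workerPolicySignedIdentifier =

"VideoProcessingWorkerAccessPolicy" +

DateTime.UtcNow.ToString("yyyyMMddHHmmss", System.Globalization.CultureInfo.InvariantCulture);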

// Create the video processing worker's queue SAS policy.

// Permission is granted to process and update queue messages.

QueuePermissions workerQueuePermissions = new QueuePermissions();

SharedAccessQueuePolicy workerQueuePolicy = new SharedAccessQueuePolicy()

{

// Making the duration max

SharedAccessExpiryTime = DateTime.MaxValue,

Permissions = SharedAccessQueuePermissions.ProcessMessages | SharedAccessQueuePermissions.Update

};

// Associate the above policy with a signed identifier

workerQueuePermissions.SharedAccessPolicies.Add(

workerPolicySignedIdentifier,

workerQueuePolicy);

// The call below sends a Set Queue ACL request to Windows Azure Storage,

// which stores the policy under the signed identifier that the SAS token

// refers to. Set Queue ACL replaces any stored policies already on the queue.

this.videoProcessingQueue.SetPermissions(workerQueuePermissions);

// Use the signed identifier in order to generate a SAS token. No requests will be

// sent to Windows Azure Storage when the below call is made.

string revocableSasTokenQueue = this.videoProcessingQueue.GetSharedAccessSignature(

new SharedAccessQueuePolicy(),

workerPolicySignedIdentifier);

return revocableSasTokenQueue;

}

}
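GetSasTokenForProcessingMessages stores a single policy. Because Set Queue ACL replaces the queue's entire list of stored policies, calling it again revokes every token issued under the previous identifier, which suits a one-time token. If several worker tokens must stay valid at once, the front-end could keep a rolling set of identifiers within the five-policy limit mentioned in the best practices. The sketch below is a hypothetical, library-independent helper for that bookkeeping; it also builds identifiers from a culture-invariant timestamp and assumes a 64-character limit on signed identifiers.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical bookkeeping for the stored access policy identifiers kept on one queue.
public class StoredPolicyRotation
{
    // A queue can hold at most five stored access policies at once.
    public const int MaxPolicies = 5;

    // Assumed maximum length of a signed identifier.
    public const int MaxIdentifierLength = 64;

    private readonly LinkedList<string> identifiers = new LinkedList<string>();

    // Builds an identifier from a prefix and a culture-invariant UTC timestamp.
    public static string CreateIdentifier(string prefix, DateTime utcNow)
    {
        string id = prefix + utcNow.ToString("yyyyMMddHHmmssfff", CultureInfo.InvariantCulture);
        if (id.Length > MaxIdentifierLength)
        {
            throw new ArgumentException("Signed identifier is too long.", "prefix");
        }

        return id;
    }

    // Adds an identifier. If the limit is exceeded, the oldest identifier is dropped
    // and returned; removing it from the stored ACL revokes all tokens issued with it.
    public string Add(string identifier)
    {
        this.identifiers.AddLast(identifier);
        if (this.identifiers.Count <= MaxPolicies)
        {
            return null;
        }

        string oldest = this.identifiers.First.Value;
        this.identifiers.RemoveFirst();
        return oldest;
    }

    // The identifiers (oldest first) that should be sent in the next Set Queue ACL call.
    public IEnumerable<string> Current
    {
        get { return this.identifiers; }
    }
}
```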

We will now look at the client library code that runs as part of the customer's application. We assume that communication between the client and the service front-end is a simple method call on the SasProducer object. In reality, this could be an HTTPS web request that is processed by the front-end, with the SAS token returned in the HTTPS response. The client library uses the customer's storage credentials to create SAS tokens for the source and destination video blobs. It also retrieves the video processing queue SAS token from the service and enqueues a transcoding work item.

/// <summary>

/// A class representing the client using the video processing service.

/// </summary>

public class Client

{

/// <summary>

/// When to refresh the credentials, measured as a number of minutes before expiration.

/// </summary>

private const int CredsRefreshThresholdInMinutes = 60;

/// <summary>

/// The handle to the video processing service, for requesting SAS tokens

/// </summary>

private SasProducer videoProcessingService;

/// <summary>

/// A cached copy of the SAS credentials.

/// </summary>

private StorageCredentialsSharedAccessSignature serviceQueueSasCredentials;

/// <summary>

/// Expiration time for the service SAS token.

/// </summary>

private DateTime serviceQueueSasExpiryTime;

/// <summary>

/// The video processing service's storage queue endpoint that is used to

/// enqueue work items.

/// </summary>

private string serviceQueueEndpoint;

/// <summary>

/// Initializes a new instance of the Client class.

/// </summary>

/// <param name="service">

/// A handle to the video processing service object.</param>

/// <param name="serviceQueueEndpoint">

/// The video processing service storage queue endpoint that is used to

/// enqueue workitems to</param>

public Client(SasProducer service, string serviceQueueEndpoint)

{

this.videoProcessingService = service;

this.serviceQueueEndpoint = serviceQueueEndpoint;

}

/// <summary>

/// Called by the application in order to request a video to

/// be transcoded.

/// </summary>

/// <param name="clientStorageAccountName">

/// The customer's storage account name; Not to be confused

/// with the service account info</param>

/// <param name="clientStorageKey">the customer's storage account key.

/// It is used to generate the SAS access to the customer's videos</param>

/// <param name="sourceVideoBlobUri">The raw source blob uri</param>

/// <param name="destinationVideoBlobUri">The raw destination blob uri</param>

/// <param name="videoQuality">the video quality requested</param>

public void SubmitTranscodeVideoRequest(

string clientStorageAccountName,

string clientStorageKey,

string sourceVideoBlobUri,

string destinationVideoBlobUri,

VideoQuality videoQuality)

{

// Create a reference to the customer's storage account

// that will be used to generate SAS tokens to the source and destination

// videos

CloudStorageAccount clientStorageAccount = CloudStorageAccount.Parse(

string.Format("DefaultEndpointsProtocol=https;AccountName={0};AccountKey={1}",

clientStorageAccountName, clientStorageKey));

CloudBlobClient blobClient = clientStorageAccount.CreateCloudBlobClient();

CloudBlob sourceVideo = new CloudBlob(

sourceVideoBlobUri /*blobUri*/,

blobClient /*serviceClient*/);

CloudBlob destinationVideo = new CloudBlob(

destinationVideoBlobUri /*blobUri*/,

blobClient /*serviceClient*/);

// Create the SAS policies for the videos

// The permissions are restricted to read-only for the source

// and write-only for the destination.

SharedAccessBlobPolicy sourcePolicy = new SharedAccessBlobPolicy

{

// Allow 24 hours for reading and transcoding the video

SharedAccessExpiryTime = DateTime.UtcNow.AddHours(24),

Permissions = SharedAccessBlobPermissions.Read

};

SharedAccessBlobPolicy destinationPolicy = new SharedAccessBlobPolicy

{

// Allow 24 hours for transcoding and writing the resulting video

SharedAccessExpiryTime = DateTime.UtcNow.AddHours(24),

Permissions = SharedAccessBlobPermissions.Write

};

// Generate SAS tokens for the source and destination

string sourceSasToken = sourceVideo.GetSharedAccessSignature(

sourcePolicy,

null /* access policy identifier */);

string destinationSasToken = destinationVideo.GetSharedAccessSignature(

destinationPolicy,

null /* access policy identifier */);

// Create a workitem for transcoding the video

TranscodingWorkItem workItem = new TranscodingWorkItem

{

SourceVideoUri = sourceVideo.Uri.AbsoluteUri,

DestinationVideoUri = destinationVideo.Uri.AbsoluteUri,

SourceSasToken = sourceSasToken,

DestinationSasToken = destinationSasToken,

TargetVideoQuality = videoQuality

};

// Get the credentials for the service queue. This would use the cached

// credentials in case they did not expire, otherwise it would contact the

// video processing service

StorageCredentials queueSasCredentials = GetServiceQueueSasCredentials();

CloudQueueClient queueClient = new CloudQueueClient(

this.serviceQueueEndpoint /*baseAddress*/,

queueSasCredentials /*credentials*/);

CloudQueue serviceQueue = queueClient.GetQueueReference(SasProducer.WorkerQueueName);

// Add the work item to the queue, which results in a Put Message

// request being sent to the SAS URL.

CloudQueueMessage message = new CloudQueueMessage(

workItem.ToMessage() /*content*/);

serviceQueue.AddMessage(message);

}

/// <summary>

/// Gets the SAS storage credentials object for accessing

/// the video processing queue.

/// This method will automatically refresh the credentials as needed.

/// </summary>

public StorageCredentials GetServiceQueueSasCredentials()

{

// Refresh the credentials if needed.

if (this.serviceQueueSasCredentials == null ||

DateTime.UtcNow.AddMinutes(CredsRefreshThresholdInMinutes)

>= this.serviceQueueSasExpiryTime)

{

this.RefreshAccessCredentials();

}

return this.serviceQueueSasCredentials;

}

/// <summary>

/// Requests a new SAS token from the service and updates the

/// cached credentials and the expiration time.

/// </summary>

public void RefreshAccessCredentials()

{

// Record the request time first, so the cached expiry time is never later

// than the expiry time the front-end puts into the token.

DateTime requestTime = DateTime.UtcNow;

// Request the SAS token. This is currently emulated as a

// method call against the SasProducer object.

string sasToken = this.videoProcessingService.GetClientSasToken();

// Create credentials using the new token.

this.serviceQueueSasCredentials = new StorageCredentialsSharedAccessSignature(sasToken);

this.serviceQueueSasExpiryTime = requestTime.Add(SasProducer.SasTokenDuration);

}

}
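The work item carries each blob URI and its SAS token separately, and the worker role rebuilds CloudBlob objects from them. When a single SAS URL is needed instead (for example, to hand to a tool that does not use the client library), the token can be appended as the URI's query string. A minimal sketch, assuming the blob URI has no query string of its own and that a token may arrive with or without a leading '?'; `SasUri.Combine` is a hypothetical helper.

```csharp
using System;

// Hypothetical helper for building a SAS URL from a resource URI and a SAS token.
public static class SasUri
{
    public static string Combine(string resourceUri, string sasToken)
    {
        if (resourceUri.Contains("?"))
        {
            throw new ArgumentException("The resource URI already has a query string.", "resourceUri");
        }

        // Normalize to exactly one '?' between the URI and the token's parameters.
        return resourceUri + "?" + sasToken.TrimStart('?');
    }
}
```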

Next, we look at the video processing worker role code. The worker uses a SAS token that can either be passed in through a configuration file or obtained by contacting the SAS producer in the front-end.

/// <summary>

/// A class representing a video processing worker role

/// </summary>

public class VideoProcessingWorker

{

public const string WorkerQueueName = "videoprocessingqueue";

/// <summary>

/// A reference to the video processing queue

/// </summary>

private CloudQueue videoProcessingQueue;

/// <summary>

/// Initializes a new instance of the VideoProcessingWorker class.

/// </summary>

/// <param name="sasTokenForWorkQueue">

/// The SAS token for accessing the work queue.</param>

/// <param name="storageAccountName">

/// The storage account name used by this service</param>

public VideoProcessingWorker(string sasTokenForWorkQueue, string storageAccountName)

{

string queueEndpoint =

string.Format("https://{0}.queue.core.windows.net", storageAccountName);

StorageCredentials queueCredentials =

new StorageCredentialsSharedAccessSignature(sasTokenForWorkQueue);

CloudQueueClient queueClient =

new CloudQueueClient(queueEndpoint, queueCredentials);

this.videoProcessingQueue =

queueClient.GetQueueReference(VideoProcessingWorker.WorkerQueueName);

}

/// <summary>

/// Starts the worker, which polls the queue for messages containing videos to be transcoded.

/// </summary>

public void Start()

{

while (true)

{

// Get a message from the queue by setting an initial visibility timeout to 5 minutes

CloudQueueMessage message = this.videoProcessingQueue.GetMessage(

TimeSpan.FromMinutes(5) /*visibilityTimeout*/);

// If there are no messages, sleep and retry.

if (message == null)

{

Thread.Sleep(TimeSpan.FromSeconds(5));

continue;

}

TranscodingWorkItem workItem;

try

{

// Deserialize the work item

workItem = TranscodingWorkItem.FromMessage(message.AsString);

}

catch (InvalidOperationException)

{

// The message is malformed

// Log an error (or an alert) and delete it from the queue

this.videoProcessingQueue.DeleteMessage(message);

continue;

}

// Create the source and destination CloudBlob objects

// from the work item's blob URIs and SAS tokens.

StorageCredentials sourceCredentials =

new StorageCredentialsSharedAccessSignature(workItem.SourceSasToken);

CloudBlob sourceVideo = new CloudBlob(workItem.SourceVideoUri, sourceCredentials);

StorageCredentials destinationCredentials =

new StorageCredentialsSharedAccessSignature(workItem.DestinationSasToken);

CloudBlob destinationVideo =

new CloudBlob(workItem.DestinationVideoUri, destinationCredentials);

// Process the video

this.ProcessVideo(sourceVideo, destinationVideo, workItem.TargetVideoQuality);

// Delete the message from the queue.

this.videoProcessingQueue.DeleteMessage(message);

}

}

/// <summary>

/// Transcodes the video.

/// This does not do any actual video processing.

/// </summary>

private void ProcessVideo(

CloudBlob sourceVideo,

CloudBlob destinationVideo,

VideoQuality targetVideoQuality)

{

// Open the source for reading (its SAS token is read-only) and the

// destination for writing (its SAS token is write-only).

using (Stream inStream = sourceVideo.OpenRead())

{

Stream outStream = destinationVideo.OpenWrite();

// This is where the real work is done.

// In this example, we just copy inStream to outStream and append some extra text.

byte[] buffer = new byte[1024];

int count;

while ((count = inStream.Read(buffer, 0, buffer.Length)) > 0)

{

outStream.Write(buffer, 0, count);

}

// Write the extra text. Disposing the writer also closes outStream,

// which completes the upload of the destination blob.

using (TextWriter writer = new StreamWriter(outStream))

{

writer.WriteLine(" (transcoded to {0})", targetVideoQuality);

}

}

}

}
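The worker dequeues each message with a 5-minute visibility timeout. If transcoding takes longer, the message becomes visible again and another worker may pick it up. The worker policy grants the Update (u) permission, which is what extending the timeout through the Update Message REST operation requires. The sketch below is hypothetical, library-independent bookkeeping for deciding when such an extension is due; the actual update call is left out.

```csharp
using System;

// Hypothetical bookkeeping for the visibility timeout of one dequeued message.
public class VisibilityLease
{
    // How long before the message becomes visible again an extension should be requested.
    private readonly TimeSpan renewalMargin;

    // The time at which the message becomes visible to other workers again.
    public DateTime VisibleUntilUtc { get; private set; }

    public VisibilityLease(DateTime dequeuedAtUtc, TimeSpan visibilityTimeout, TimeSpan renewalMargin)
    {
        this.VisibleUntilUtc = dequeuedAtUtc + visibilityTimeout;
        this.renewalMargin = renewalMargin;
    }

    // True once the remaining invisibility period has shrunk to the renewal margin or less.
    public bool NeedsExtension(DateTime nowUtc)
    {
        return nowUtc + this.renewalMargin >= this.VisibleUntilUtc;
    }

    // Records a successful extension; the new timeout is measured from nowUtc.
    public void RecordExtension(DateTime nowUtc, TimeSpan visibilityTimeout)
    {
        this.VisibleUntilUtc = nowUtc + visibilityTimeout;
    }
}
```

A worker would check NeedsExtension between processing steps, issue an Update Message request with a new visibility timeout when it returns true, and then call RecordExtension.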

For completeness, we provide the following Main method, which lets you test the sample code above.

public static void Main()

{

string serviceAccountName = "someserviceaccountname";

string serviceAccountKey = "someserviceAccountKey";

string serviceQueueEndpoint =

string.Format("https://{0}.queue.core.windows.net", serviceAccountName);

// Set up the SAS producer as part of the front-end

SasProducer sasProducer = new SasProducer(serviceAccountName, serviceAccountKey);

// Get the maximum-duration SAS token used by the service's worker role

string sasTokenForQueue = sasProducer.GetSasTokenForProcessingMessages();

// Start the video processing worker

VideoProcessingWorker transcodingWorker =

new VideoProcessingWorker(sasTokenForQueue, serviceAccountName);

ThreadPool.QueueUserWorkItem((state) => transcodingWorker.Start());

// Set up the client library

Client client = new Client(sasProducer, serviceQueueEndpoint);

// Use the client library to submit transcoding work items

string customerAccountName = "clientaccountname";

string customerAccountKey = "CLIENTACCOUNTKEY";

CloudStorageAccount storageAccount = CloudStorageAccount.Parse(

string.Format("DefaultEndpointsProtocol=https;AccountName={0};AccountKey={1}",

customerAccountName,

customerAccountKey));

CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();

// Create a source container

CloudBlobContainer sourceContainer =

blobClient.GetContainerReference("sourcevideos");

sourceContainer.CreateIfNotExist();

// Create destination container

CloudBlobContainer destinationContainer =

blobClient.GetContainerReference("transcodedvideos");

destinationContainer.CreateIfNotExist();

List<CloudBlob> sourceVideoList = new List<CloudBlob>();

// Upload 10 source videos

for (int i = 0; i < 10; i++)

{

CloudBlob sourceVideo = sourceContainer.GetBlobReference("Video" + i);

// Upload the video

// This example uses a placeholder string

sourceVideo.UploadText("Content of video" + i);

sourceVideoList.Add(sourceVideo);

}

// Submit Video Processing Requests to the service using Queue SAS

for (int i = 0; i < 10; i++)

{

CloudBlob sourceVideo = sourceVideoList[i];

CloudBlob destinationVideo =

destinationContainer.GetBlobReference("Video" + i);

client.SubmitTranscodeVideoRequest(

customerAccountName,

customerAccountKey,

sourceVideo.Uri.AbsoluteUri,

destinationVideo.Uri.AbsoluteUri,

VideoQuality.quality480p);

}

// Let the worker finish processing

Thread.Sleep(TimeSpan.FromMinutes(5));

}

Jean Ghanem, Michael Roberson, Weiping Zhang, Jai Haridas, Brad Calder

Comments

Anonymous, July 17, 2012: Git doesn't contain the sample code.

Anonymous, July 26, 2012: @Vin - we are still working on an appropriate place to publish samples associated with blogs. But until then, we have the entire source code in the blog.

Anonymous, August 04, 2012: Is it possible to use Table SAS directly from javascript in a browser ie. addressing cross domain issues?

Anonymous, August 08, 2012: when do you expect to release the changes to the storage client library? i'm assuming the changes for shared access signatures are not reflected in the recent 1.7 update.

Anonymous, August 18, 2012: @jws, 1.7 still uses the 2011-08-18 REST version. We have released source code as mentioned in this post and we will make available our 2.0 library in the next month or so. The 2.0 library will support 2012-02-12 version of REST.

Anonymous, August 18, 2012: @Andy, it will not be possible yet since Azure Storage service does not support CORS headers. However, it is already documented in the list of feature requests.

Anonymous, December 01, 2012: Good Article.

Anonymous, December 04, 2012: I dont see a link for the sample download. Where can I find it?

Anonymous, December 05, 2012: @CloudDv, the sample code is provided inline as part of the blog. The Github link is for downloading the SDK. The latest SDK can now be found at github.com/.../azure-sdk-for-net , www.windowsazure.com/.../net or nuget.org/.../WindowsAzure.Storage Thanks, Jean