Introduction to Record and Playback Engine in VSTT 2010

In an earlier blog entry, we talked about the Test Runner activity in Microsoft Test and Lab Manager (MTLM) and how it lets you record the UI actions (such as mouse clicks and keyboard input) that a user performs on an Application under Test (AuT). Test Runner also lets a tester create an action recording while performing a manual test. The next time the tester runs the same test case, she can play back the action recording and “fast-forward” through the UI actions instead of manually repeating each step.

The CodedUI Test feature also allows you to record UI actions and generate test code from the recording.

Both Test Runner and the CodedUI Test feature use a common action recording and playback (RnP) engine to enable their scenarios. In this post we will take a look at the inner workings of this engine.

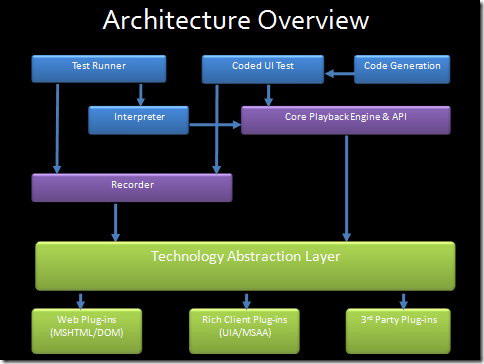

Record and Playback Engine Architecture

The following figure shows the high-level architecture of the RnP engine and how it is consumed by Test Runner and CodedUI Test.

The Recorder component is responsible for listening for and capturing the UI actions. The Playback engine is responsible for interpreting the recording and playing back the equivalent actions. The Technology abstraction layer hides the details of each UI technology and provides a consistent interface to call into. All the logic for handling technology-specific details is implemented in technology-specific plug-ins that implement a standard public interface. Since the interface is public, third-party vendors can implement their own plug-ins to handle technologies that we do not support out of the box.
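To make the role of the abstraction layer concrete, here is a minimal Python sketch of how a common interface could dispatch to technology-specific plug-ins. It is not the engine's actual API: `TechnologyPlugin`, `AbstractionLayer`, `owns_window` and the window class names are hypothetical names chosen for illustration.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class Control:
    """Technology-neutral description of a UI control (hypothetical type)."""
    technology: str
    control_type: str
    properties: dict = field(default_factory=dict)


class TechnologyPlugin(ABC):
    """Hypothetical stand-in for the public plug-in interface."""
    name = "Unknown"

    @abstractmethod
    def owns_window(self, window_class):
        """Return True if this plug-in understands the given window."""

    @abstractmethod
    def control_at(self, x, y):
        """Return the control located at screen coordinates (x, y)."""


class WebPlugin(TechnologyPlugin):
    name = "Web"

    def owns_window(self, window_class):
        return window_class == "IEFrame"  # illustrative check only

    def control_at(self, x, y):
        return Control(self.name, "Button", {"Id": "btnOK"})


class MsaaPlugin(TechnologyPlugin):
    name = "MSAA"

    def owns_window(self, window_class):
        return True  # generic fallback for any window

    def control_at(self, x, y):
        return Control(self.name, "Window", {})


class AbstractionLayer:
    """Gives the recorder and playback one interface to call into."""

    def __init__(self):
        self._plugins = []

    def register(self, plugin):
        # Assumption: plug-ins registered later are consulted first,
        # so a specialised plug-in can take over from a generic one.
        self._plugins.insert(0, plugin)

    def control_at(self, window_class, x, y):
        for plugin in self._plugins:
            if plugin.owns_window(window_class):
                return plugin.control_at(x, y)
        raise LookupError(f"no plug-in handles {window_class!r}")


layer = AbstractionLayer()
layer.register(MsaaPlugin())
layer.register(WebPlugin())
print(layer.control_at("IEFrame", 10, 10).technology)
print(layer.control_at("Notepad", 10, 10).technology)
```

The recorder and the playback engine only ever talk to the layer, which is why a third-party plug-in can be added without changing either of them.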

Recorder Logic

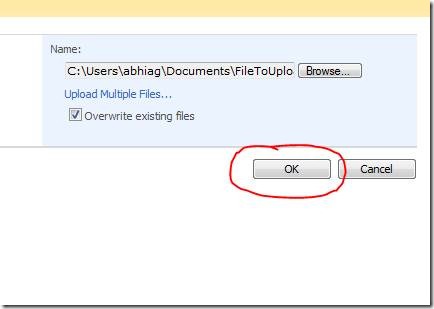

So how do we record a UI action? For example, say a tester wants to record a click on the OK button of a FileUpload control.

At a high level, the recorder will do the following:

- Listen for Mouse Events

- On a mouse button down event, get the X, Y coordinates

- Call technology-specific APIs to get the control (e.g. a button) at X, Y (in this example, this is a call into the Web plug-in)

- Fetch the properties to be used for searching the button

- Fetch the control hierarchy of the button

- Construct an internal query string that includes the technology type, control hierarchy and control properties, which will be used to search for the control during playback

- Capture the Actions (Mouse click in this case)

- Aggregate actions if needed

- Generate an XML representation of the recording (this can be used for interpreted playback in Test Runner or Code generation in CodedUI Test)

The quality of the recording depends on the technology-specific plug-in being able to provide good information about the control, its properties and the UI hierarchy.
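The steps above can be sketched in a few lines of Python. The post does not document the format of the internal query string, so the `Technology://Type[prop='value']/...` layout below is invented purely for illustration, as are the `ControlInfo`, `build_query` and `record_click` names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ControlInfo:
    """What a plug-in might report about a control (hypothetical type)."""
    control_type: str
    properties: dict
    parent: Optional["ControlInfo"] = None


def hierarchy(control):
    """Return the chain of controls from the top-level ancestor down."""
    chain = []
    node = control
    while node is not None:
        chain.append(node)
        node = node.parent
    return list(reversed(chain))


def build_query(technology, control):
    """Combine technology, hierarchy and properties into one string.

    The string format is an assumption made for this sketch.
    """
    parts = []
    for node in hierarchy(control):
        props = ";".join(
            f"{key}='{value}'" for key, value in sorted(node.properties.items())
        )
        parts.append(f"{node.control_type}[{props}]")
    return f"{technology}://" + "/".join(parts)


def record_click(technology, control_at, x, y):
    """Resolve the control under the mouse and capture the action."""
    control = control_at(x, y)  # call into the technology plug-in
    return {"action": "Click", "query": build_query(technology, control)}


window = ControlInfo("Window", {"Name": "Upload Document"})
document = ControlInfo("Document", {"Title": "Upload Document"}, parent=window)
button = ControlInfo("Button", {"Id": "btnOK", "Value": "OK"}, parent=document)

step = record_click("Web", lambda x, y: button, 100, 200)
print(step["query"])
```

Because the query is built from the hierarchy and properties rather than from screen coordinates, the recorded step can still locate the button if the window moves or is resized.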

The “aggregate actions” step above deserves a closer look. One of the goals and differentiating features of the Recorder is to provide an “Intent-Aware” recording. Instead of just capturing raw user actions, the engine has been designed to capture the intent of the user. For example, a user recording a file-upload operation in Internet Explorer performs five UI actions. The RnP recorder is intelligent enough to aggregate a series of related actions into an equivalent action that captures the intent of the user. In this example, only two actions are recorded instead of five. Reducing the number of recorded raw actions also makes the recording more robust and resilient to playback failures.
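As a rough illustration of aggregation, the Python sketch below collapses a file-upload sequence into two intent-level actions. The post does not list the five raw actions, so the sequence used here (click Browse, click the file name box, type the path, click Open, click OK) is an assumption, and the control names and the `aggregate` rule are hypothetical rather than the recorder's real logic.

```python
from dataclasses import dataclass

# Hypothetical control names for the file dialog and the upload page.
BROWSE_BUTTON = "BrowseButton"
OPEN_BUTTON = "OpenButton"
FILE_INPUT = "NameFileInput"


@dataclass
class RawAction:
    kind: str        # "Click" or "SendKeys"
    target: str      # name of the control that received the action
    text: str = ""


@dataclass
class RecordedAction:
    kind: str
    target: str
    value: str = ""


def aggregate(raw_actions):
    """Replace everything done inside the file dialog with one SetValue."""
    recorded = []
    typed = None  # None means we are not inside the file dialog
    for action in raw_actions:
        if action.kind == "Click" and action.target == BROWSE_BUTTON:
            typed = ""  # dialog opened: start collecting typed text
        elif typed is not None and action.kind == "SendKeys":
            typed += action.text
        elif typed is not None and action.target == OPEN_BUTTON:
            # Dialog confirmed: the user's intent was "set the file path".
            recorded.append(RecordedAction("SetValue", FILE_INPUT, typed))
            typed = None
        elif typed is not None:
            continue  # other clicks inside the dialog carry no intent
        else:
            recorded.append(RecordedAction(action.kind, action.target, action.text))
    return recorded


raw = [
    RawAction("Click", "BrowseButton"),
    RawAction("Click", "FileNameEdit"),
    RawAction("SendKeys", "FileNameEdit", r"C:\temp\FileToUpload.txt"),
    RawAction("Click", "OpenButton"),
    RawAction("Click", "OKButton"),
]
for step in aggregate(raw):
    print(step)
```

The aggregated recording no longer depends on the file dialog at all, which is one reason fewer raw actions tend to mean fewer playback failures.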

The following XML snippet shows the recorded actions for the above example. Only two actions are captured: a SetValue on the file input control and a click on the OK button.

<SetValueAction UIObjectName="CurrentUIMap.UploadDocumentWindowWindow.HttpstsindiasitesPriClient.UploadDocumentDocument.NameFileInput">

<ParameterName />

<Value Encoded="false">C:\Users\abhiag\Documents\FileToUpload.txt</Value>

<Type>String</Type>

</SetValueAction>

<MouseAction UIObjectName="CurrentUIMap.UploadDocumentWindowWindow.HttpstsindiasitesPriClient.UploadDocumentDocument.OKButton">

<ParameterName />

<ModifierKeys>None</ModifierKeys>

<IsGlobalHotkey>false</IsGlobalHotkey>

<Location X="20" Y="16" />

<WheelDirection>0</WheelDirection>

<ActionType>Click</ActionType>

<MouseButton>Left</MouseButton>

</MouseAction>
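If you want to inspect such a recording programmatically, a short Python sketch along these lines can list the recorded steps. The `<Actions>` wrapper element is an assumption added here so that the two actions form a well-formed document; the post does not show the enclosing element of the real recording file, and `summarize` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# The two recorded actions from the snippet above, wrapped in a
# hypothetical <Actions> root so that the text parses as one document.
RECORDING = r"""
<Actions>
  <SetValueAction UIObjectName="CurrentUIMap.UploadDocumentWindowWindow.HttpstsindiasitesPriClient.UploadDocumentDocument.NameFileInput">
    <ParameterName />
    <Value Encoded="false">C:\Users\abhiag\Documents\FileToUpload.txt</Value>
    <Type>String</Type>
  </SetValueAction>
  <MouseAction UIObjectName="CurrentUIMap.UploadDocumentWindowWindow.HttpstsindiasitesPriClient.UploadDocumentDocument.OKButton">
    <ParameterName />
    <ModifierKeys>None</ModifierKeys>
    <IsGlobalHotkey>false</IsGlobalHotkey>
    <Location X="20" Y="16" />
    <WheelDirection>0</WheelDirection>
    <ActionType>Click</ActionType>
    <MouseButton>Left</MouseButton>
  </MouseAction>
</Actions>
"""


def summarize(xml_text):
    """Return (action, target control, detail) for each recorded step."""
    steps = []
    for action in ET.fromstring(xml_text):
        # The last segment of UIObjectName identifies the control itself.
        target = action.get("UIObjectName").rsplit(".", 1)[-1]
        if action.tag == "SetValueAction":
            steps.append(("SetValue", target, action.findtext("Value")))
        elif action.tag == "MouseAction":
            steps.append(
                (action.findtext("ActionType"), target, action.findtext("MouseButton"))
            )
    return steps


for step in summarize(RECORDING):
    print(step)
```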

The following XML snippet shows the properties of the OK button that are captured to enable search during playback. If you look closely, we collect two sets of properties, primary and secondary. Primary properties are required to complete a successful search; secondary properties are used optionally to refine the results of a search.

<UIObject ControlType="Button" Id="OKButton" FriendlyName="OK" SpecialControlType="None">

<TechnologyName>Web</TechnologyName>

<AndCondition Id="SearchCondition">

<AndCondition Id="Primary">

<PropertyCondition Name="ControlType">Button</PropertyCondition>

<PropertyCondition Name="Id">ctl00_PlaceHolderMain_ctl00_RptControls_btnOK</PropertyCondition>

<PropertyCondition Name="Name">ctl00$PlaceHolderMain$ctl00$RptControls$btnOK</PropertyCondition>

<PropertyCondition Name="TagName">INPUT</PropertyCondition>

<PropertyCondition Name="Value">OK</PropertyCondition>

</AndCondition>

<FilterCondition Id="Secondary">

<PropertyCondition Name="Type">button</PropertyCondition>

<PropertyCondition Name="Title" />

<PropertyCondition Name="Class">ms-ButtonHeightWidth</PropertyCondition>

<PropertyCondition Name="ControlDefinition">accessKey=O id=ctl00_PlaceHolderMain_ctl</PropertyCondition>

<PropertyCondition Name="TagInstance">8</PropertyCondition>

<PropertyCondition Name="RelativePosition">{X=1225,Y=274}</PropertyCondition>

</FilterCondition>

</AndCondition>

<Descendants />

</UIObject>
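One way to picture the difference between the two property sets is the Python sketch below: every primary property must match, and secondary properties are only consulted to choose between several remaining candidates. Ranking candidates by the number of matching secondary properties is an assumption made for this sketch, not a description of the engine's actual heuristics, and `find_control` is a hypothetical helper.

```python
def find_control(candidates, primary, secondary):
    """Pick the candidate that satisfies the recorded search conditions.

    candidates: list of dicts mapping property names to values.
    primary:    properties that must all match.
    secondary:  properties used only to refine multiple matches.
    """
    matches = [
        control
        for control in candidates
        if all(control.get(name) == value for name, value in primary.items())
    ]
    if not matches:
        return None
    if len(matches) == 1:
        return matches[0]

    def secondary_score(control):
        # Count how many secondary properties agree with the recording.
        return sum(
            1 for name, value in secondary.items() if control.get(name) == value
        )

    return max(matches, key=secondary_score)


# Property values taken from the snippet above; the page itself is made up.
primary = {"ControlType": "Button", "TagName": "INPUT", "Value": "OK"}
secondary = {"Class": "ms-ButtonHeightWidth", "TagInstance": "8"}

page = [
    {"ControlType": "Button", "TagName": "INPUT", "Value": "Cancel",
     "Class": "ms-ButtonHeightWidth", "TagInstance": "9"},
    {"ControlType": "Button", "TagName": "INPUT", "Value": "OK",
     "Class": "other", "TagInstance": "3"},
    {"ControlType": "Button", "TagName": "INPUT", "Value": "OK",
     "Class": "ms-ButtonHeightWidth", "TagInstance": "8"},
]
print(find_control(page, primary, secondary))
```

In this made-up page two buttons satisfy the primary conditions, and the secondary properties select the one whose class and tag instance match the recording.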

If you are using the Test Runner, you can view the attached recorded XML files to get an idea of what information is being recorded.

Playback Logic

The playback engine can be invoked either through the interpreter in Test Runner, which reads the XML and plays back the actions, or through automation APIs in a CodedUI test. At a high level, the playback engine performs the following steps:

- Search for the Control: The playback engine uses the control information captured during recording to search for the control. For search purposes, the UI is treated as a tree of UI elements rooted at the Desktop. The engine uses heuristics to keep the search resilient and fast.

- Ensure Control is Visible: To interact with the control, the control must be visible. The playback engine performs the necessary actions, such as scrolling, to bring the control into view.

- Wait for Control to be Ready (WFR): The playback engine needs to ensure that the control is ready to be acted upon before performing the action. The engine has built-in algorithms for this. Plug-ins can override this behavior by providing a technology-specific implementation.

- UI Synchronization: Playback tries to ensure that the control that was supposed to receive an action has actually received it.

- Perform Action: The playback engine finally performs the UI action on the control. Currently, playback supports the following primitive actions:

- Check, Uncheck, DoubleClick, MouseHover, MouseMove, MouseButtonClick, MouseWheel, SendKeys, SetValueasComboBox, Drag, DragDrop, Scroll, SetFocus
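The playback steps can be sketched as a small Python pipeline over a simulated UI tree. Everything here is a simplification under stated assumptions: `UIElement` is a stand-in for a live control, the search is a plain breadth-first walk by name rather than the engine's heuristics, readiness is simulated with a poll counter, and the synchronization check is placed after the action because it verifies delivery.

```python
import time
from collections import deque
from dataclasses import dataclass, field


@dataclass
class UIElement:
    """Simulated UI element (hypothetical type)."""
    name: str
    children: list = field(default_factory=list)
    visible: bool = True
    polls_until_ready: int = 0  # simulates a control that is busy at first
    received: list = field(default_factory=list)

    def is_ready(self):
        if self.polls_until_ready > 0:
            self.polls_until_ready -= 1
            return False
        return True


def search(root, name):
    """Breadth-first search of the UI tree rooted at the desktop."""
    queue = deque([root])
    while queue:
        node = queue.popleft()
        if node.name == name:
            return node
        queue.extend(node.children)
    return None


def wait_until(predicate, timeout, interval=0.01):
    """Poll predicate until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout
    while not predicate():
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
    return True


def play(desktop, target_name, action, timeout=1.0):
    control = search(desktop, target_name)            # 1. search
    if control is None:
        raise LookupError(f"control {target_name!r} not found")
    if not control.visible:                           # 2. ensure visible
        control.visible = True  # stands in for scrolling into view
    if not wait_until(control.is_ready, timeout):     # 3. wait for ready
        raise TimeoutError(f"control {target_name!r} never became ready")
    control.received.append(action)                   # 4. perform action
    if control.received[-1] != action:                # 5. check delivery
        raise RuntimeError("action did not reach the control")
    return control


ok = UIElement("OKButton", visible=False, polls_until_ready=2)
desktop = UIElement("Desktop", [UIElement("Browser", [UIElement("Document", [ok])])])
play(desktop, "OKButton", "Click")
print(ok.received)
```

Polling with a deadline, rather than sleeping for a fixed time, lets playback proceed as soon as the control is ready while still failing cleanly if it never is.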

Technology Support

The engine supports a wide range of platforms out of the box, from thick-client applications built with .NET technologies to the latest web applications.

- Support for Windows Internet Explorer 7.0 and Windows Internet Explorer 8.0

- Applications written in ASP.NET, PHP, JSP, ASP, Ajax, SharePoint and Web 2.0 applications are supported. The Web plug-in uses the IE DOM to identify control properties and play back actions.

- Support for Windows Forms controls for Microsoft .NET Framework 2.0, 3.5 SP1 and 4.0

- For Windows Forms applications, the plug-in uses the Windows accessibility technology Microsoft Active Accessibility (MSAA) to identify control properties and play back actions.

- Windows Presentation Foundation (WPF) for .NET Framework 3.5 SP1 and 4.0

- For WPF applications, the plug-in uses the UI Automation (UIA) accessibility framework in Windows to identify control properties and play back actions.

- Custom Controls

- Since we rely on Windows accessibility technologies, controls that follow accessibility guidelines are also supported. We are also working with partners to enable support for some popular custom controls shipped by third-party vendors. Additionally, vendors can use our extensibility APIs to add improved support for their custom controls.

- Firefox 3.0 Support

- We are currently working on adding Firefox support. It will not be available in the box at RTM, but we are actively working on making it available as an add-on shortly afterwards.

Engine Extensibility

The engine has been designed with extensibility in mind. We realize that there are scenarios where our default engine will not meet the needs of our customers. These include testing applications built on a different or new UI technology (e.g. Flash) and application domains that require customized action recording support. Using our extensibility APIs, customers and third-party vendors/ISVs can build plug-ins for these specific scenarios. Users can then install and register the plug-ins with the engine to enable their scenarios.

We hope this article has given you some insight into the inner workings of the RnP engine and how it enables the Test Runner and CodedUI Test features. In a future post, we will go into more detail about the extensibility of the RnP engine and show how customers and third parties can extend it.

Abhishek Agrawal, Program Manager, Visual Studio Team Test


![clip_image002[7] clip_image002[7]](https://msdntnarchive.z22.web.core.windows.net/media/TNBlogsFS/BlogFileStorage/blogs_msdn/vstsqualitytools/WindowsLiveWriter/edf7471a3cb1_A205/clip_image002%5B7%5D_thumb.gif)