Debugging Native memory leaks with Debug Diag 1.1

I often get questions about debugging native memory leaks. In other words, cases where you have high memory usage in your application but you can see that .net memory usage is not all that high.

Recently I was helping out on such a case and this post details both generally how you go about troubleshooting these issues as well as what troubleshooting steps we went through in this particular case.

Essentially you would go through these steps to troubleshoot a native memory leak:

1. Find out if your memory usage / leak is mostly .net memory or native memory

2. If it is native memory that is “leaking”, use a tool like debug diag 1.1. to track allocations and deallocations

3. Look at the main allocators and the stacks associated with the biggest chunks of allocations to determine who is making the allocations and why the memory is not released.

1. Find out if your memory usage / leak is mostly .net memory or native memory

There are various ways to find out if your memory usage is mostly .net or native.

Using perfmon to determine where your memory is going

The best way is probably to use performance monitor and collect a log over the lifetime of the process that is leaking with at least the following counters for the specific process (note that _total or _global counters are not very helpful here)

- .NET CLR Memory/#Bytes in all heaps

- Process/Private Bytes

- Process/Virtual Bytes

Simplified, Virtual Bytes is the memory that the process reserves, Private Bytes is how much of that the memory that the process actually uses and #Bytes in all Heaps is how much of the Private Bytes goes to memory stored on the .NET GC heap.

This is not entirely accurate if you want to be a nitpicker about what Private Bytes really means, but it is accurate enough for the purposes of troubleshooting a memory issue.

You can create such a logfile by following these steps

1. Open perfmon (Performance under Administrative Tools)

2. Under Performance Logs and Alerts, right-click Counter Logs and choose New Log Settings, give it a name and click ok.

3. Click “Add Counters” and choose the counters above. The best way to make sure you get the instance counters, rather than _Total and _Global is to choose All instances

4. Once you have added the counters, fill in the name of an administrator in the Run As: box and fill out the password. (This is important if you are logging for w3wp.exe for example as some counters are only available when you run the log as an admin)

5. The counter log will now be running, collecting data (shows up as green in the counter logs view) but you can start and stop it at any time.

Once you have this performance log you can now compare these values and specifically compare the trend and amounts for Private Bytes and #Bytes in all heaps to see if your memory leak is native (non .net) or .net related.

If your memory growth/usage is mostly .net, have a look through some of the other posts on debugging memory issues to check into how to troubleshoot them.

If however # Bytes in all heaps is pretty moderate you should continue reading from step 2 and on.

Getting memory usage info from a dump file

If you got a memory dump of the process but “forgot” to create a perfmon log with the counters described above, you can find out from the memory dump where the memory is used.

Open the memory dump in Windbg (File/Open Crash Dump) and run the command !address –summary

Note: The output might differ with different versions of windbg

0:000> !address -summary

-------------------- Usage SUMMARY --------------------------

TotSize ( KB) Pct(Tots) Pct(Busy) Usage

1bf2f000 ( 457916) : 21.84% 23.18% : RegionUsageIsVAD

772b000 ( 122028) : 05.82% 00.00% : RegionUsageFree

5881000 ( 90628) : 04.32% 04.59% : RegionUsageImage

22bc000 ( 35568) : 01.70% 01.80% : RegionUsageStack

8b000 ( 556) : 00.03% 00.03% : RegionUsageTeb

54dcb000 ( 1390380) : 66.30% 70.40% : RegionUsageHeap

0 ( 0) : 00.00% 00.00% : RegionUsagePageHeap

1000 ( 4) : 00.00% 00.00% : RegionUsagePeb

1000 ( 4) : 00.00% 00.00% : RegionUsageProcessParametrs

1000 ( 4) : 00.00% 00.00% : RegionUsageEnvironmentBlock

Tot: 7fff0000 (2097088 KB) Busy: 788c5000 (1975060 KB)

-------------------- Type SUMMARY --------------------------

TotSize ( KB) Pct(Tots) Usage

772b000 ( 122028) : 05.82% : <free>

5ee5000 ( 97172) : 04.63% : MEM_IMAGE

5fb000 ( 6124) : 00.29% : MEM_MAPPED

723e5000 ( 1871764) : 89.26% : MEM_PRIVATE -------------------- State SUMMARY --------------------------

TotSize ( KB) Pct(Tots) Usage

5a1e4000 ( 1476496) : 70.41% : MEM_COMMIT

772b000 ( 122028) : 05.82% : MEM_FREE

1e6e1000 ( 498564) : 23.77% : MEM_RESERVE

Largest free region: Base 06b10000 - Size 02170000 (34240 KB)

From this we can deduce a couple of things:

MEM_COMMIT is the committed memory, this should be fairly close to Private Bytes. In this particular case this process was using almost 1.4 GB of memory

MEM_RESERVE + MEM_COMMIT is the total reserved bytes, so this is Virtual Bytes in Perfmon. Here we can see that the process has reserved about 500 MB more than it committed, i.e. 500 MB more than it actually used. This is fairly normal for a big process as for example it will reserve chunks of memory for the .NET GC heap that it might not use all of, and there is some overhead reservations for dlls etc. as well as other types of reservations.

From the Usage SUMMARY section we can see where the reservations were made. RegionUsageIsVAD for example is allocations made with VirtualAlloc (.NET GC Heap for example goes here). RegionUsageIsImage is memory used for dlls and executables. RegionUsageStack is stack space for the threads, and RegionUsageHeap is for allocations on the NT Heaps. In this particular case we can see that most of the reservations (66.3%) and the commits (70.4%) are done on the NT heaps. This is typical for a native leak. If it was high .NET memory usage we would see a lot of the memory used for RegionUsageIsVAD.

If you want to learn more about the !address –summary output, check out the help in windbg for the command or check the lab 3 review where I have described some of this in more detail.

We can also check out exactly how much memory is used for .net objects by loading up sos in windbg (.loadby sos mscorwks) and then run !eeheap –gc. I have truncated the output below to just show the important parts

0:000> !eeheap -gc

Number of GC Heaps: 8

------------------------------

…

------------------------------

GC Heap Size 0x84680(542,336)

In this process we were using an incredibly small amount of .NET object, ~540k, so we can say for sure here that the leak is native.

2. If it is native memory that is “leaking”, use a tool like debug diag 1.1. to track allocations and deallocations

The next step in the process is to collect data about the leak and for this we can use Debug Diag 1.1.

The download page gives the instructions needed, in order to start monitoring for leaks

To debug memory and handle usage, use one of the following:

1. Create a leak rule against the process in question. The leak monitoring feature will track memory allocations inside the process. Tracking is implemented by injecting a DLL (leaktrack.dll) into the specified process and monitoring memory allocations over time. When configuring a memory and handle leak rule, you can specify memory dump generation based on time or memory usage.

2. Using the “processes” view, right-click the process in question and select the “monitor for leaks” option. When the process has grown to the suspected problem size, manually dump the process by right-clicking on the same process in the processes view and choosing the “Create Full Userdump” option.

A few notes about this: Leak tracking will only record leaks that occur while leak tracking is turned on. I know this may sound obvious but I am mentioning this because we often get leak track dumps that span only over a small period of time and does not include the allocations, because people have started leaktracking when memory was already high.

The other thing that is important to note is that by default, leak tracking will start recording stacks 15 minutes after leak tracking begins. This is to allow for an app to do the initial allocations like build up the cache, start up the form etc. before starting to monitor, so that the tracking only contains the relevant leak info.

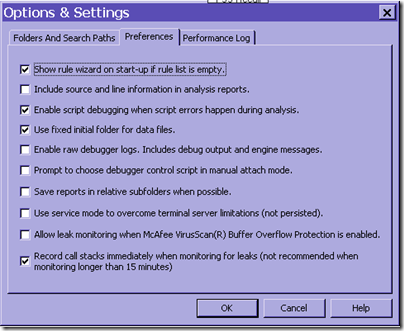

I’ve found that the best way to get all the info i need is to start up the application and let it initialize things, and then run leak tracking with the checkbox to record call stacks immediately checked. (This is under tools/options in debug diag)

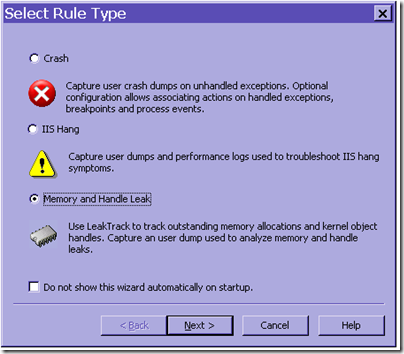

When you start Debug Diag you will be greeted with the Select Rule Type and here you choose Memory and Handle Leak and follow the steps above.

You can choose to have debug diag automatically generate dumps when the process reaches a certain size, but the easiest is probably to just choose the Memory and Handle Leak rule, and click next next finish, and then right-click on the process in the processes tab once the leak has manifested itself to get a memory dump (as per the original instructions.

3. Look at the main allocators and the stacks associated with the biggest chunks of allocations to determine who is making the allocations and why the memory is not released.

Ok, so now we have the memory dump containing the information about the leak. The cool thing here is that Debug Diag has an analysis feature that analyzes the dump for you.

Under the Advanced Analysis tab in Debug Diag, select the MemoryAnalysis.asp script and click add data files to add your memory dump, then click on “Start Analysis”.

This will generate a report that will pop up in your browser showing you information about all the allocations etc.

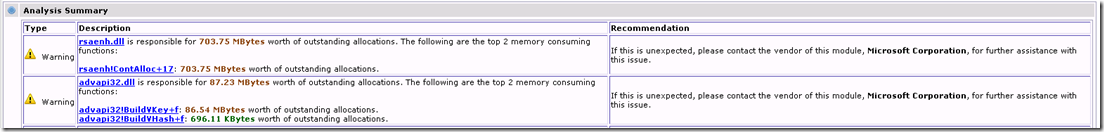

The first part of the report is an analysis summary showing a list of the components that allocated the most memory (and didn’t release them). In this case rsaenh.dll allocated 703 MB so we probably don’t even have to worry about the advapi32.dll allocations.

If you happen to debug a dump where most of the memory is .net you will probably see mscorwks.dll as one of the top allocators. This doesn’t mean that mscorwks.dll is leaking, it just means that the GC allocated a lot of segments that it didn’t release because the .net objects are still in use. If that is the case you need to follow some of the other articles on how to debug .net memory leaks instead.

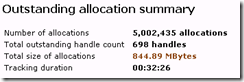

If you don’t see any big allocators in this list, you probably didn’t leak track for long enough. You can see how long tracking has been turned on, and how much has been allocated during that time by following the Leak Analysis Report a bit further down in the report output.

Going back to the issue at hand, we saw that most allocations were made by rsaenh!ContAlloc+17. Does that mean that this method leaks? Not neccessarily… we will have to look at the call stacks leading up to the allocations to see why it doesn’t release the memory.

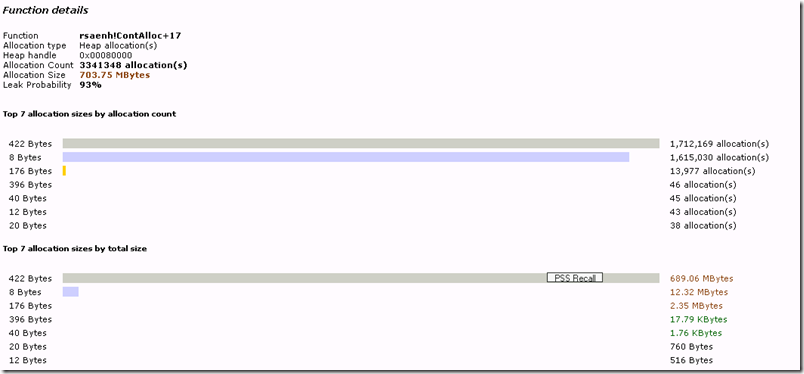

If we follow the link for rsaenh!ContAlloc+17 we will see some statistics about the allocations

So from here we find that most of the problematic allocations are the 422 byte allocations since a total of 689 MB was allocated in 422 byte chunks.

From here we can then drop down and look at the call stacks for the 422 byte allocations and find

Call stack sample 4

Address 0x0e309518

Allocation Time 00:05:00 since tracking started

Allocation Size 422 Bytes

Function Source Destination

rsaenh!ContAlloc+17 ntdll!RtlAllocateHeap

rsaenh!MakeNewKey+6c rsaenh!ContAlloc

rsaenh!CPDeriveKey+3b1 rsaenh!MakeNewKey

advapi32!CryptDeriveKey+af

SomeCryptoComponent+8328 advapi32!CryptDeriveKey

SomeCryptoComponent+4ef5

oleaut32!DispCallFunc+16a

oleaut32!CTypeInfo2::Invoke+234 oleaut32!DispCallFunc

. . .

Note: I have obfuscated the name of the 3rd party component here.

This call stack tells us that a method in the component “SomeCryptoComponent.dll” is calling advapi32!CryptDeriveKey and this is what eventually leads to the allocations we are seeing. We can’t see the method in SomeCryptoComponent.dll because we don’t have the symbols (pdb files) for it, but if we did we could add them to the symbol path under tools/options and rerun the analysis to get a better stack.

Either way, whenever you call CryptDeriveKey, you need to pair it up with a CryptDestroyKey when you are done with the Key in order to release memory. For some reason this is not happening here and thus the memory used for the keys is left allocated and this is what is causing the memory leak here.

In this particular case, the vendor for the SomeCryptComponent.dll already had an update with a fix ready to go, but I wanted to write this case study to show how you can track down a native memory leak like this with debug diag.

Have a good one,

Tess

Comments

Anonymous

January 14, 2010

Great article. Is this functionality also available in the 64-bit version of debugdiag?Anonymous

January 14, 2010

Yepp, that should work in the 64 bit version as wellAnonymous

January 17, 2010

Tess, thanks for a great and useful post. A small problem though - while creating analysis for my process I get an overflow error. The report is incomplete, with some of the numbers garbled. I'm using Windows 7. Maybe some of the memory figures are too large for the tool to handle. Any suggestions? Thanks!Anonymous

January 18, 2010

I think the problem might be using it on Win7, debug diag is not supported on win7 as of now so there might be some incompatibility issues there causing the issueAnonymous

January 20, 2010

Isn't that if "Process/Private Bytes" is increasing at much faster rate than ".NET CLR Memory/#Bytes in all heaps", we can guess/say that it is native leaks.Anonymous

January 20, 2010

nativecpp, yep, i think that sentence pretty much nails itAnonymous

January 25, 2010

Hi Tess, Thank you for this great article. I tried using the 64 bit version of Debug Diag Tool, but it seems that the download link for 64 bit installs an "analysis only" version, so I couldn't use the leak tracking capability... Do you know of such a limitation?Anonymous

January 31, 2010

Guy - if you use Windows 7 then you won't be able to use 64bit version of DebugDiag in "normal mode". The 32bit version works quite well, but the "Heap Analysis" shows some weird results like the one below:

Heap Name mscoree!EEDllGetClassObjectFromClass+124315 Heap Description This heap is used by mscoree Reserved memory 5 243 936,03 TBytes Committed memory 2 096 368,00 TBytes (39,98% of reserved) Uncommitted memory 3 147 568,02 TBytes (60,02% of reserved) Number of heap segments 1 segments Number of uncommitted ranges 1,46369135405302E+16 range(s) Size of largest uncommitted range 29 440,47 TBytes Calculated heap fragmentation 99,06%

The rest of the reports looks ok but let's hope that DebugDiag 1.2 will be released soon ;) BTW. Great blog, great articles, thank You Tess :)

Anonymous

February 03, 2010

The comment has been removedAnonymous

February 03, 2010

The comment has been removedAnonymous

February 17, 2010

The comment has been removedAnonymous

February 17, 2010

Robert, The GC uses Virtual Allocs for allocations of .NET GC heaps, so if you have a lot of .net objects that will show up as RegionUsageIsVAD here. Keep in mind also that the GC preallocates segments so if you have many processors there will be a lot of vmem reserved for the GC heaps. If you run 64bit the segments are very large so you may see a lot of overhead here, but this is just memory that is reserved, it is not committed.Anonymous

February 18, 2010

Hmm... So this reserved space doesn't get reported as part of the GC heap size? I know the reported size is part of RegionUsageIsVAD (say, 500 MB), but it's the extra 800+ MB that puzzles me. We're still under 32 bit for now and we're getting process crashes when we run out of free memory (fragmentation issues, too), so something isn't right. I'll just have to keep digging. :)Anonymous

February 18, 2010

The GC heap size is only what is actually committed, not what is reserved for it. RegionUsageIsVAD reports the reserved mem. Without looking at the details of your dump, i can't say unfortunately what the extra 800MB areAnonymous

February 22, 2010

I see, something else I didn't know. I didn't find any previous posts, so do you have any insight on the impact of excessive .dlls and .pdb files? Obviously the more that gets loaded, the more memory gets consumed but from some testing, loading a .dll seems to incur some RegionUsageIsVAD overhead beyond the space allocated in RegionUsageImage. Am I correct in saying this? If so, that may be part of my problem, since we have a lot of assemblies. Each load also seems to fragment memory more. Are there any techniques for "lining up" .dlls in memory? Should we even deploy .pdb files? Error reporting will suffer if we don't, I believe.Anonymous

February 24, 2010

The comment has been removedAnonymous

February 26, 2010

The comment has been removedAnonymous

February 26, 2010

Nevermind, I just read the following: http://blogs.msdn.com/tess/archive/2009/10/29/wohoo-debug-diag-64bit-is-finally-released.aspx Looks like Windows 7 is not supported.Anonymous

March 02, 2010

(Me one last time! Sorry for all the questions and thanks for the responses.)Anonymous

June 09, 2010

I am facing some problem after installing debug diag tool....After selecting a particular process for memory leak we get error...."Error while injecting leaktrack ino process via the dbgsvc service. Getlasterror returns 0x000000057. Could not open process handle to process. Can you please help me in this.."Anonymous

June 09, 2010

The comment has been removedAnonymous

November 09, 2010

Hi Tess, Does Debug Diag have a large footprint? It seems that as soon as I turn leak tracking on, my application slows to a crawl and eventually hangs before I can get anything useful out of it. (It is a very large and complex application) Are there any other options for me? Thanks, PaulAnonymous

November 09, 2010

HI Paul, Debug diag leaktracking can be pretty invasive since it records stacks and detours all allocations. Unfortunately that is the nature of pretty much any such tools because of what it needs to do to track native leaks, and to my knowledge there aren't a lot other tools like it out there. If possible, can you track only for a shorter time, with the option (under tools/options) to track stacks immediately? If you are not certain if your leak is managed or native i would also suggest looking at that first since managed "leaks" dont require leaktracking to troubleshoot.Anonymous

December 01, 2010

Hi I'm Using Windows server 2008 x64 bit. I find that i cannot use DebugDiag. Is there any other way i can troubleshoot my native memory leak?. Any help is really appreciated. Thanks.Anonymous

December 02, 2010

Paul, if DebugDiag is too invasive, you might want to give UMDH a try - msdn.microsoft.com/.../ff560206%28VS.85%29.aspx It works with my large and complex application, while DebugDiag does not.Anonymous

December 08, 2010

Hi Tess, We are having memory issues with our web application. Pvt bytes of w3wp is 1.09GB, Virtual Mem is ~900MB and #Bytes in all heaps is ~750MB. So where is my memory going - is it native leak or managed memory leak? Can I debug native memory leaks with WinDbg? Thanks.Anonymous

June 22, 2011

The comment has been removedAnonymous

May 02, 2013

Hi Tess: I ran DebugDiag against an application and it found a leak... but I'm looking at the committed bytes in the heap dump. Most of the memory belongs to the heap used by leakdiag.... is the heap used by leakdiag mixed up with the application's heap? Is the heap used by Leakdiag ignored when calcuating the total allocation bytes to the Memory leak calculation loss? Thanks a bunch. C.Anonymous

November 18, 2015

Nice article Tess, Very helpfull