MOSS 2007: Long Running Search Crawls - What's Going On?

Introduction

Crawls are taking unusually long to complete! My crawls are running very slowly; maybe they are stuck! If you have run into either of these situations and don't have an answer yet, this post is meant to help you find one. It assumes that you are familiar with basic troubleshooting tools such as Performance Monitor, log files, and Task Manager.

How Crawls Work - Some Background

Following are the components that are involved in a crawl:

- Content Host - This is the server that hosts/stores the content that your indexer is crawling. For example, if you have a content source that crawls a SharePoint site, the content host would be the web front end server that hosts the site. If you are crawling a file share, the content host would be the server where the file share is physically located.

- MSDMN.EXE - When a crawl is running, you can see this process at work in Task Manager on the indexer. This process is called the "Search Daemon". When a crawl starts, this process is responsible for connecting to the content host (using the protocol handler and IFilter), requesting content from the content host and crawling that content. The Search Daemon has the biggest impact on the indexer's resource utilization because it does most of the work.

- MSSearch.exe - Once MSDMN.EXE is done crawling the content, it passes the crawled content to MSSearch.exe (this process also runs on the indexer; you should see it in Task Manager during a crawl). MSSearch.exe does two things: it writes the crawled content to the indexer's disk, and it passes the metadata properties of documents discovered during the crawl to the back-end database. Crawling metadata properties (document title, author, etc.) enables Advanced Search in MOSS 2007. Unlike the content index, which is stored on the indexer's physical disk, crawled metadata properties are stored in the database.

- SQL Server (Search Database) - The search database stores information such as the current status of crawls and the metadata properties of documents and list items discovered during crawls.

Slow Crawls: Possible Reasons

There are a number of reasons why your crawls could take longer than you would expect. The most common are:

- There is simply too much content to crawl.

- The content host is responding slowly to the indexer's requests for content.

- Your database server is too busy and is slow to respond.

- You are hitting a hardware bottleneck.

Let's talk about each of these reasons, and how to address them, in more detail.

Too Much Content to Crawl

This one may sound like common sense: the more content the crawler has to process, the longer the crawl takes. However, it can be misleading at times. For example, say you run an incremental crawl of your MOSS content source every night. One day you notice that the crawl has been running longer than usual. That could be because, the day before, a large team started a project and many people uploaded, edited, and deleted far more documents than usual. Naturally, the crawl duration increases.

The Solution: Schedule incremental crawls to run more frequently. If the crawl is currently scheduled to run once a day, consider changing the schedule so that it runs every two hours. Remember that incremental crawls only process the changes made in the content source since the last crawl, so more frequent crawls mean that each incremental crawl has fewer changes to process and less work to do. If you wish, you can find out how many changes an incremental crawl will process before it starts. This requires running a set of SQL queries against the search database, which tell you the exact number of changes the incremental crawl will process and can also give you an idea of how long the crawl will take. This blog post shows how you can calculate the number of changes that will be processed by the incremental crawl.
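As a rough back-of-the-envelope sketch of why more frequent incremental crawls help: if a day's changes were spread evenly and the indexer processed changed items at a constant rate, each crawl's workload would shrink in proportion to its interval. The even distribution, the constant throughput, the function name, and the example numbers are all assumptions for illustration, not measurements; real change volume is bursty, and the actual change count should come from the search database as described above.

```python
from dataclasses import dataclass


@dataclass
class CrawlEstimate:
    changes_per_crawl: float
    minutes_per_crawl: float


def estimate_incremental_crawl(changes_per_day: int,
                               crawls_per_day: int,
                               docs_per_minute: float) -> CrawlEstimate:
    """Rough, hypothetical estimate of one incremental crawl's workload.

    Assumes changes are spread evenly across the day and that the indexer
    processes a constant number of changed items per minute. Both are
    simplifications for illustration only.
    """
    if crawls_per_day <= 0 or docs_per_minute <= 0:
        raise ValueError("crawls_per_day and docs_per_minute must be positive")
    changes = changes_per_day / crawls_per_day
    return CrawlEstimate(changes_per_crawl=changes,
                         minutes_per_crawl=changes / docs_per_minute)


# Hypothetical numbers: 60,000 changes on a busy day, 500 changed items/minute.
once_a_day = estimate_incremental_crawl(60_000, crawls_per_day=1, docs_per_minute=500)
every_two_hours = estimate_incremental_crawl(60_000, crawls_per_day=12, docs_per_minute=500)
print(once_a_day)       # one long crawl
print(every_two_hours)  # twelve short crawls
```

In this simple model the total work per day is unchanged; what changes is that each individual crawl has far fewer items to process and finishes much sooner.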

Slow Content Host

The second reason your crawls may be running slower than usual is that the content host is under heavy load. The indexer has to request content from the content host in order to crawl it. If the host is responding slowly, the crawl will run slowly no matter how powerful your indexer is. A slow host is also referred to as a "hungry host".

To confirm that your crawl is starved by a hungry host, you will need to collect performance counter data on the indexer. Collect performance data for at least 2 to 12 hours, depending on how slow the crawl is (for example, if the crawl runs for 80 hours, look at performance data for at least 8 hours). The performance counters you are interested in are:

- \\Office Server Search Gatherer\Threads Accessing Network

- \\Office Server Search Gatherer\Idle Threads

The "Threads Accessing Network" counter shows the number of threads on the indexer that are waiting for the content host to return the requested content. A consistently high value of this counter indicates that the crawl is starved by a hungry host. The indexer can create a maximum of 64 threads. The following performance data was collected from an indexer that was starved by a hungry host. This indexer was crawling only SharePoint sites, all hosted on the same web front-end server. Performance data collected over 7 hours showed the following:

The black line shows Threads Accessing Network, and the other line shows Idle Threads. In this case, Threads Accessing Network is at 64 (the maximum) from 1 PM to 4 PM. It starts to drop after that, probably because the number of users on the web server decreased around 4 PM, freeing capacity to serve the indexer's requests. At 7:30 PM the web server again became "hungry": Threads Accessing Network maxed out at 64 again and, at the same time, Idle Threads dropped to 0. So our crawl is clearly starved by a slow, hungry host. Because the indexer can only create up to 64 threads, if all 64 threads are waiting on the same host and another crawl is started at the same time against a different, more responsive host, that second crawl will make no progress, since every thread is tied up waiting on the hungry host.
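If you export the two Gatherer counters from Performance Monitor, a few lines of code can flag the pattern described above: long stretches where Threads Accessing Network sits at the 64-thread ceiling while Idle Threads is at 0. This is a minimal sketch; the function name, the aligned sample lists, the 15-second sampling interval, and the 30-minute "sustained" threshold are hypothetical choices, not values defined by MOSS.

```python
from typing import List, Optional, Tuple

MAX_CRAWL_THREADS = 64  # thread ceiling stated in this post


def starved_windows(network: List[float], idle: List[float],
                    interval_s: int = 15,
                    min_duration_s: int = 30 * 60) -> List[Tuple[int, int]]:
    """Return (start_index, end_index) runs where every crawl thread waits on the network.

    network and idle are aligned samples of the Gatherer counters
    "Threads Accessing Network" and "Idle Threads". A run is reported only
    if it lasts at least min_duration_s (a hypothetical threshold).
    """
    if len(network) != len(idle):
        raise ValueError("counter series must be aligned sample-for-sample")
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, (net, idl) in enumerate(zip(network, idle)):
        starved = net >= MAX_CRAWL_THREADS and idl == 0
        if starved and start is None:
            start = i
        elif not starved and start is not None:
            runs.append((start, i - 1))
            start = None
    if start is not None:
        runs.append((start, len(network) - 1))
    # Keep only runs long enough to count as "consistently" starved.
    min_samples = min_duration_s // interval_s
    return [(s, e) for s, e in runs if e - s + 1 >= min_samples]


# Hypothetical 15-second samples: 2 hours maxed out, then 1 hour recovering.
network = [64] * 480 + [20] * 240
idle = [0] * 480 + [44] * 240
print(starved_windows(network, idle))  # [(0, 479)]
```

Requiring both conditions at once (network threads at the ceiling and no idle threads) mirrors the reasoning above; a high network count alone, with idle threads still available, is less conclusive.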

The Solution: The first thing you should do is configure crawler impact rules on the indexer. Crawler impact rules let you cap the number of threads that can request content from a particular host simultaneously. With a cap in place, the indexer's threads will not all end up waiting on the same host, so overall indexer performance improves. The host also gets relief from serving a large number of threads at once, which improves its performance as well. The appropriate thread counts depend on the number of processors on the indexer and the configured indexer performance level. Set your crawler impact rules using the following information:

- Indexer Performance - Reduced
  - Total number of threads: number of processors
  - Max threads/host: number of processors

- Indexer Performance - Partially reduced
  - Total number of threads: 4 times the number of processors
  - Max threads/host: number of processors plus 4

- Indexer Performance - Maximum
  - Total number of threads: 16 times the number of processors
  - Max threads/host: number of processors plus 4
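The list above reduces to a small lookup. This sketch only encodes those three rules; the function and dictionary names are hypothetical. It deliberately does not apply the 64-thread ceiling mentioned earlier, because the post does not say how the two interact (with 8 processors at Maximum, the formula yields 128 total threads, which is the question raised in the first comment below). The `processors` argument follows the post's wording; whether that means sockets or logical cores is not specified here.

```python
from typing import Callable, Dict, Tuple

Rule = Callable[[int], int]

# (total-threads rule, max-threads-per-host rule), taken from the list above.
THREAD_RULES: Dict[str, Tuple[Rule, Rule]] = {
    "Reduced": (lambda p: p, lambda p: p),
    "Partially reduced": (lambda p: 4 * p, lambda p: p + 4),
    "Maximum": (lambda p: 16 * p, lambda p: p + 4),
}


def crawler_thread_settings(processors: int, level: str) -> Tuple[int, int]:
    """Return (total threads, max threads per host) for an indexer performance level."""
    if processors < 1:
        raise ValueError("processors must be at least 1")
    try:
        total_rule, per_host_rule = THREAD_RULES[level]
    except KeyError:
        raise ValueError(f"unknown performance level: {level!r}") from None
    return total_rule(processors), per_host_rule(processors)


for level in THREAD_RULES:
    print(level, crawler_thread_settings(4, level))
# Reduced (4, 4)
# Partially reduced (16, 8)
# Maximum (64, 8)
```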

In addition to setting the crawler impact rules, you can also investigate why the host is performing poorly, and if possible, add more resources on the host.

Database Server Too Busy

The indexer also uses the database during the indexing process. If the database server becomes too slow to respond, crawl durations will suffer. To see if the crawl is starved by a slow or busy database server, we will need to collect performance data on the indexer during the crawl. The performance counters that we are interested in to verify this are:

- \\Office Server Search Archival Plugin\Active Docs in First Queue

- \\Office Server Search Archival Plugin\Active Docs in Second Queue

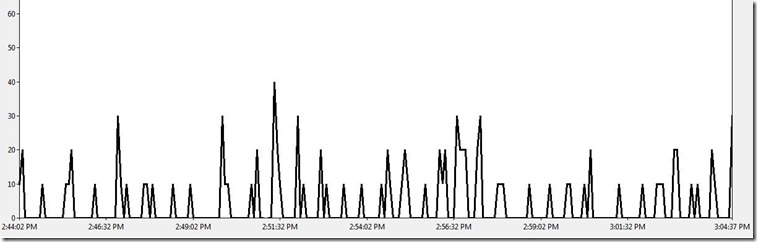

As mentioned earlier, once MSDMN.EXE is done crawling content, it passes the content to MSSearch.exe, which writes the index to disk and inserts the metadata properties into the database. MSSearch.exe queues all the documents whose metadata properties need to be inserted into the database. Consistently high values of these two counters indicate that MSSearch.exe has requested that metadata properties be inserted into the database, but the requests are sitting in the queue because the database is too busy. Ideally, you should see the number of documents in the first queue increase and then drop to 0 at regular intervals (every few minutes). The following performance data shows a database server that is serving the requests at an acceptable rate:

This chart covers twenty minutes of the "Active Docs in First Queue" counter. The database is clearly doing a good job of clearing the queue, as the counter drops to 0 at regular intervals. As long as you see this sawtooth (triangle) pattern in the performance data, your database server is keeping up.
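One way to check for the sawtooth pattern in exported counter data is to measure the longest stretch during which the queue never reached 0. A minimal sketch, assuming evenly spaced samples of "Active Docs in First Queue"; the function name and the 15-second default interval are hypothetical. A result of a few minutes matches the healthy pattern described above, while `None` (the queue never drained) corresponds to the "consistently high" case.

```python
from typing import List, Optional


def longest_gap_between_drains(queue_depth: List[float],
                               interval_s: int = 15) -> Optional[int]:
    """Return the longest stretch, in seconds, during which the queue never hit 0.

    queue_depth holds evenly spaced samples of "Active Docs in First Queue".
    Returns None if the queue never drained during the sample window.
    """
    zero_positions = [i for i, depth in enumerate(queue_depth) if depth == 0]
    if not zero_positions:
        return None
    # Sentinels cover the stretch before the first drain and after the last one.
    boundaries = [-1] + zero_positions + [len(queue_depth)]
    longest = max(b - a - 1 for a, b in zip(boundaries, boundaries[1:]))
    return longest * interval_s


# Hypothetical samples: a healthy sawtooth versus a queue that never drains.
print(longest_gap_between_drains([0, 50, 120, 200, 0, 60, 150, 0]))  # 45
print(longest_gap_between_drains([300] * 80))                        # None
```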

The Solution: If you see consistently high values of the two performance counters mentioned above, engage your DBAs and investigate ways to lift some of the load off the database servers. If your database server is dedicated to MOSS, consider optimizations at the application level, such as the blob cache and object cache. I will talk about performance optimizations for MOSS in a later post.

Hardware Bottleneck

If you have ruled out everything else, you are probably hitting a hardware bottleneck (physical memory, disk, network, processor, etc.). The following performance data, collected over one hour on the indexer, shows that the msdmn.exe process is consistently at 100%. The counter shown below is \\Process\% Processor Time (with msdmn.exe as the selected instance).

This indicates that msdmn.exe is working the indexer very hard and that the server is bottlenecked on processing power.
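When checking this counter yourself, keep in mind that the Process object's % Processor Time is summed across all processors, so on a multi-processor indexer a single process can report more than 100%. The sketch below normalizes each sample by the processor count and reports the fraction of samples at or above a threshold. The function name, the 90% threshold, and the sample values are hypothetical choices for illustration.

```python
from typing import List


def saturated_fraction(process_cpu: List[float], processors: int,
                       threshold_pct: float = 90.0) -> float:
    """Fraction of samples in which a process used at least threshold_pct of total CPU.

    process_cpu holds raw "% Processor Time" samples for one process instance.
    These are summed across processors (up to 100 * processors), so each
    sample is divided by the processor count before comparing it.
    """
    if processors < 1:
        raise ValueError("processors must be at least 1")
    if not process_cpu:
        return 0.0
    busy = sum(1 for raw in process_cpu if raw / processors >= threshold_pct)
    return busy / len(process_cpu)


# Hypothetical msdmn.exe samples on a 4-processor indexer.
print(saturated_fraction([400, 390, 380, 200], processors=4))  # 0.75
```

A fraction close to 1.0 over a long collection window corresponds to the "consistently at 100%" situation described above.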

The Solution: If you face the problem described above (msdmn.exe consistently at high CPU utilization), you can lower the indexer performance level from "Maximum" to "Partially reduced" (in Central Administration, go to "Services on Server" and click "Office Server Search"). If you face other bottlenecks (memory, disk, etc.), analyze the performance data to reach a conclusion. This article explains how you can determine potential bottlenecks on your server.

Conclusion

Crawl durations can vary depending on a number of factors. You should establish a baseline for how many crawls can run at the same time while still performing at acceptable levels, and schedule your crawls so that the number running concurrently does not exceed that "healthy baseline". If you maintain an enterprise environment in which SharePoint is a business-critical application, do not settle for anything less than scalable 64-bit hardware, which is the correct choice for any large-scale SharePoint implementation.
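To check a crawl schedule against such a baseline, you can compute the peak number of overlapping crawls from their start and end times, for example taken from crawl history. A minimal sweep-line sketch; the function name, the baseline of 2, and the sample times are hypothetical.

```python
from datetime import datetime
from typing import List, Tuple


def peak_concurrent_crawls(schedule: List[Tuple[datetime, datetime]]) -> int:
    """Return the largest number of crawls running at the same moment.

    Each entry is a (start, end) pair. A crawl that ends exactly when
    another starts is not counted as overlapping it.
    """
    events = []
    for start, end in schedule:
        if end < start:
            raise ValueError("crawl end must not precede its start")
        events.append((start, 1))
        events.append((end, -1))
    # Sort by time; at equal times, ends (-1) are processed before starts (+1).
    events.sort(key=lambda e: (e[0], e[1]))
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak


baseline = 2  # hypothetical "healthy baseline"
crawls = [
    (datetime(2010, 3, 1, 18, 0), datetime(2010, 3, 1, 20, 0)),
    (datetime(2010, 3, 1, 19, 0), datetime(2010, 3, 1, 23, 0)),
    (datetime(2010, 3, 1, 19, 30), datetime(2010, 3, 1, 21, 0)),
]
peak = peak_concurrent_crawls(crawls)
print(peak, "exceeds baseline" if peak > baseline else "within baseline")  # 3 exceeds baseline
```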

Comments

Anonymous

March 12, 2010

Great article! Thanks! I have a question regarding the threads used by the indexer. In your post you mentioned that the indexer can create a maximum of 64 threads. Later, while discussing indexer performance, you mentioned that indexer performance depends on the number of processors on the machine. So if I have an 8-processor machine with maximum indexer performance set, then I should have:

- Total number of threads: 16 times the number of processors (16 x 8 = 128 threads)
- Max threads/host: number of processors plus 4 (8 + 4 = 12)

So where does the maximum apply? I notice that the maximum value is 64 in the crawler impact rules configuration, but I do not understand the cap on threads vs. indexer performance. Can you please explain?

Anonymous


September 14, 2010

Hi Tehnoon, First of all, Eid Mubarak to you and your family. I was wondering how I can increase the crawl threads in WSS 3.0?

Regards,

Anonymous

October 14, 2010

Hi Safia, Thanks a lot! Sorry it took me so long to answer your question; I was swamped with other things. The WSS 3.0 crawl engine is not as sophisticated as the MOSS crawl engine, so it's not possible to configure crawler impact rules or threads per host for WSS 3.0. Hope this helps!

Anonymous

January 24, 2011

Great article! What does it mean if, during a full crawl, most of your threads are idle and you have negligible threads accessing network or threads in plug-ins? That's what I'm seeing, and I have no hardware or SQL bottleneck. I just wish I could speed things up. I have a single web app with a few dozen document libraries and over a million items in the index. Sometimes my idle threads and threads accessing network are the inverse of each other, like on your performance chart. Other times, idle threads just drop from 64 down to 10-14.

Anonymous

February 07, 2011

Hi AJ, It is very strange to see the Threads Accessing Network counter at 0 for several minutes during a full crawl. The only thing I can think of is that a "delete crawl" could be running. When you remove a start address from a content source, an automatic "delete crawl" is triggered, which deletes all items that were previously crawled from that start address. If you're using a 32-bit machine for your indexer, I would strongly recommend upgrading to 64-bit, which would surely improve things.

Anonymous

February 22, 2011

Salam Raza, Great article, as expected from an escalation engineer at MS. Just a query: can I change the indexer performance to Reduced in WSS? I think we can from the stsadm command. But can I change it while crawling is in progress? And will it do the full crawl again? I have 900 GB of data!! ALLAH HAFIZ

Anonymous

February 23, 2011

WS Ayesha! Wow, 900 GB of data! You should get MOSS :). By default, the performance level in WSS is Reduced. You can still change it using "stsadm -o spsearch -farmperformancelevel Reduced". I am not 100% sure, but I would expect it to take effect immediately, even if a crawl is running. You can also restart the search service, which should pick up the new setting without impacting the in-progress crawl.

Anonymous


February 23, 2011

Looks like it is much better now. But could you please let me know how to check which documents are crawled in WSS? There seems to be no logging for it! Regards

Anonymous

April 17, 2011

Great article! With regard to max threads, are you referring to processors or cores? The post by sudeepg regarding 8 processors confused me. Regards

Anonymous

December 18, 2012

Do you have an updated version of this article covering new things learned in the past 2 years? Also, is there a location where the images can be found? Right now, none of the charts show when I read the article. We are seeing index crawls ranging from 2 hrs to 20+ hrs on the same basic set of content, and we are trying to get a handle on it. We were running more frequent crawls, but that made the system so slow during the normal work shift that people were unable to get work done, so we have dropped to one index crawl a day. The crawl was already running at the reduced level when it was severely impacting users, so there isn't any way to throttle things back further from Central Admin.

Anonymous

December 20, 2012

Hi Iwvidren, I don't have an updated version of the post yet. Sorry about the images not showing; I have just fixed them. It sounds like you may have general database bottlenecks causing the slowdown. I would suggest reviewing the database maintenance white paper for SharePoint to ensure that you are following best practices for maintaining indexes and statistics. The white paper is located here: technet.microsoft.com/.../cc262731(v=office.12).aspx

Anonymous

January 03, 2013

MOSS 2007 Service Pack 3, April 2012 cumulative update. This is a small farm. It has its databases on a shared SQL server. The web front end, Central Admin, and search crawling all run on a single machine. We have 300,000-500,000 documents. We do not crawl any external sources of information.

Let's say the machine name is mysrv, but the farm itself (what users should be using in their URLs) is myfarm. Some people do, however, have links using http://mysrv instead of http://myfarm.

When this machine was set up, years before I was involved, the content sources were set up as:

- http://myfarm
- sps3://myfarm

However, the crawl rules are set up as:

- http://myfarm as the URL, and include
- http://mysrv as the URL, and include

I don't know for certain, but I suspect that they were concerned about making certain they got all the links regardless of which way they were specified. We have the system configured to do 1 incremental crawl Sunday-Friday night at 6pm and 1 full crawl on Saturday morning. The problem we are having is that the crawls take wildly varying amounts of time. For instance, in the past 2 weeks, the incremental has varied from 1.5 hrs to 20 hrs and the full has run 30 hrs or more. Could the crawl rules mentioned above be playing some factor in the long run times?