SQL Server R Services - Why we built it

This is the first post in a series of blog posts about SQL Server R Services. We want to take you behind the scenes and explain why we have built this feature and deep dive into how it works.

Future posts will include details about the architecture, highlighting advanced use cases with code examples to explain what can be done with this feature.

Before we get into details, we want to start off by giving you some background on why we built SQL Server R Services and how the architecture looks.

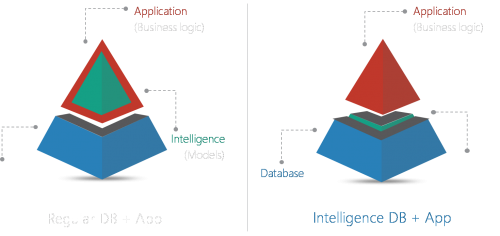

Making SQL Server a data intelligence platform

SQL Server R Services is an in-database analytics feature that tightly integrates R with SQL Server. With this feature, we want to provide a data intelligence platform that moves intelligence capabilities provided with R closer to the data . So why is that a good thing? The short answer is that a platform like this makes it much easier to consume and manage R securely and at scale in applications in production.

Challenges with traditional R language

There are three major challenges with traditional R. This is how we address those challenges by moving intelligence closer to the data in SQL Server:

Challenge 1 – Data Movement

Moving data from the database to the R Runtime becomes painful as data volumes grow and carries security risks

Our solution: Reduce or eliminate data movement with In-Database analytics

o There is no need to move data when you can execute R scripts securely on SQL Server. You can still use your favorite R development tool and simply just push the compute to execute on SQL Server using the compute context.

Challenge 2 - Operationalize R scripts and models

It is not trivial how to call R from your application in production. Often, you must recode the R script in another language. This can be time consuming and inefficient.

Our solution:

o Use familiar T-SQL stored procedures to invoke R scripts from your application

o Embed the returned predictions and plots in your application

o Use the resource governance functionality to monitor and manage R executions on the server

Challenge 3 - Enterprise Performance and scale

R runs single threaded and only accommodates datasets that fit into available memory

Our solution:

o Use SQL Server’s in-memory querying and Columnstore indexes

o Leverage RevoScaleR support for large datasets and parallel algorithms

SQL Server Extensibility Architecture

The foundation of this data intelligence platform is the new extensibility architecture in SQL Server 2016.

Extensibility Framework - Why?

The way we make SQL Server and R work together is by using a framework we call the extensibility architecture. Previously, CLR or extended stored procedures would enable you to run code outside the constructs of SQL Server, but in those cases, the code still runs inside the SQL Server process space. Having external code running inside the SQL Server process space can cause disruption and it is also not possible to legally embed runtimes that are not owned by Microsoft.

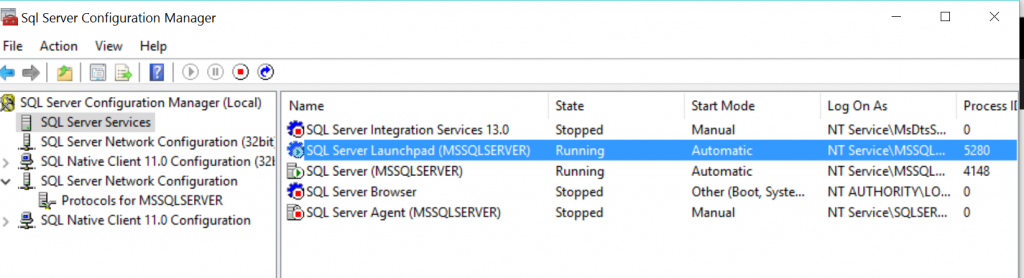

Instead, we have built a new generic extensibility architecture that enables external code, in this case R programs, to run, not inside the SQL Server process, but as external processes that launch external runtimes. If you install SQL Server with R Services, you will be able to see the new Launchpad service in SQL Server configuration manager:

T-SQL interface: sp_execute_external_script

So how is an external script, like an R script, executed using the extensibility architecture? Well, we have created a new special stored procedure called sp_execute_external_script for that. This stored procedure has all the benefits of any other stored procedure. It has parameters, can return results and is executable from any TSQL client that can run queries. It also enables you to execute external scripts inside SQL Server.

When you execute the stored procedure sp_execute_external_script, we connect to the Launchpad service using a named pipe and send a message to that service telling it what we want to run and how. We currently only support R as language.

Launchpad has a registration mechanism for launchers specific to a runtime/language. Based on the script type, it will invoke the corresponding launcher which handles the duties for invoking and managing the external runtime execution. This launcher creates a Satellite process to execute our R Scripts.

The Satellite process has a special dll that knows how to exchange data with SQL Server to retrieve input rows/parameters and send back results and output parameters. Multiple of these processes can be launched to isolate users from each other and achieve better scalability.

One major advantage with Launchpad is that it uses proven SQL Server technologies such as SQLOS and XEvent to enable XEvent tracing of the Launchpad service. You can read more about how to collect XEvents for R Services here.

Looking ahead

Without going into too much detail, this is how the extensibility architecture works. We hope that you found this interesting and that you will stay tuned for our coming blog posts on this topic.

Use this tutorial if you are new to SQL R Services and want to get started.

Author: Nellie Gustafsson

Comments

- Anonymous

January 10, 2017

The comment has been removed- Anonymous

January 10, 2017

The comment has been removed

- Anonymous

- Anonymous

January 11, 2017

Are there plans to have Python as a supported language?- Anonymous

January 11, 2017

Yes, we have plans to support other languages like Python in the future. The extensiblity framework in engine was designed with this goal in mind & it is not specific to R integration only.

- Anonymous

- Anonymous

April 09, 2017

The comment has been removed- Anonymous

May 01, 2017

The comment has been removed

- Anonymous

- Anonymous

May 15, 2017

The comment has been removed- Anonymous

May 15, 2017

Hi Sukesh, You have a typo in the name of the data.frame. It should "OutputDataSet" not "OutputDataset". Since R is case-sensitive, the name has to match strictly so that the correct data.frame can be returned to SQL Server. Here are 3 ways to return the plot:EXEC sp_execute_external_script @language = N'R' ,@script = N'library(ggplot2);image_file = tempfile();jpeg(filename = image_file, width=600, height=800);ggplot(mtcars, aes(wt, mpg)) + geom_point();dev.off();OutputDataSet <- data.frame(data=readBin(file(image_file,"rb"),what=raw(),n=1e6));'WITH RESULT SETS ((plot varbinary(max)));EXEC sp_execute_external_script @language = N'R' , @script = N'library(ggplot2);image_file = tempfile();jpeg(filename = image_file, width=600, height=800);ggplot(mtcars, aes(wt, mpg)) + geom_point();dev.off();plot <- data.frame(data=readBin(file(image_file,"rb"),what=raw(),n=1e6));' , @output_data_1_name = N'plot'WITH RESULT SETS ((plot varbinary(max)));DECLARE @plot varbinary(max);EXEC sp_execute_external_script @language = N'R' , @script = N'library(ggplot2);image_file = tempfile();jpeg(filename = image_file, width=600, height=800);ggplot(mtcars, aes(wt, mpg)) + geom_point();dev.off();plot <- readBin(file(image_file,"rb"),what=raw(),n=1e6);' , @params = N'@plot varbinary(max) OUTPUT' , @plot = @plot OUTPUT;SELECT @plot as plot;Hope this helps.

- Anonymous

- Anonymous

October 18, 2017

The comment has been removed- Anonymous

October 24, 2017

Can you send me the script that demonstrates the problem?

- Anonymous