Introducing Batch Mode Adaptive Memory Grant Feedback

SQL Server uses memory to store in-transit rows for hash join and sort operations. When a query execution plan is compiled for a statement, SQL Server estimates both the minimum required memory needed for execution and the ideal memory grant size needed to have all rows in memory. This memory grant size is based on the estimated number of rows for the operator and the associated average row size. If the cardinality estimates are inaccurate, performance can suffer:

- For cardinality under-estimates, the memory grant can end up being too small and the rows then spill to disk, causing significant performance degradation compared to a fully memory-resident equivalent.

- For cardinality over-estimates, the memory grant can be too large and the memory goes to waste. Concurrency can be impacted because the query may wait in a queue until enough memory becomes available, even though the query only ends up using a small portion of the granted memory.

You can sometimes address the cardinality misestimates through a variety of methods, such as statistics management (update frequency, increasing sample size), providing multi-column statistics, using intermediate result sets instead of one single complex query, or avoiding constructs such as table variables that have fixed cardinality estimates. But for some scenarios, addressing poor estimates that impact memory grant sizing can be difficult to address directly without a significant refactoring of the statement or the use of hints such as MIN_GRANT_PERCENT and MAX_GRANT_PERCENT.

When it comes to improving cardinality estimation techniques, there is no one single approach that works for all possible statements. With that in mind, the Query Processing team has been working on a new wave of adaptive query processing improvements to handle the more intractable cardinality estimation issues that often result in poor query performance.

Batch mode adaptive memory grant feedback is the first improvement under the adaptive query processing family of features to be surfaced in the public preview of the next release of SQL Server on Linux and Windows. You can now test this feature for non-production workloads and this feature will also be surfaced in Azure SQL DB in a future update.

Addressing repeating-workloads, this improvement recalculates the actual memory required for a query and then updates the grant value for the cached plan. When an identical query statement is executed, we will be able to use the revised memory grant size, reducing excessive memory grants that impact concurrency and fixing under-estimated memory grants that cause expensive spills to disk.

What kind of results can we expect to see?

For one internal Microsoft customer, they run a recurring process that generates summarized results from a very large telemetry data set. They query this large data set fifteen times, each time pulling different metrics. Out of the fifteen separate queries, fourteen of them encounter spills to disk due to memory grant misestimates.

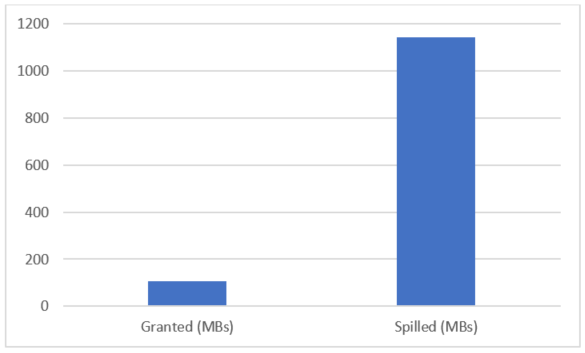

The following graph shows one example of using batch mode adaptive memory grant feedback. For the first execution of the customer’s query, duration was 88 seconds due to high spills:

DECLARE @EndTime datetime = '2016-09-22 00:00:00.000';

DECLARE @StartTime datetime = '2016-09-15 00:00:00.000';

SELECT TOP 10 hash_unique_bigint_id

FROM dbo.TelemetryDS

WHERE Timestamp BETWEEN @StartTime and @EndTime

GROUP BY hash_unique_bigint_id

ORDER BY MAX(max_elapsed_time_microsec) DESC

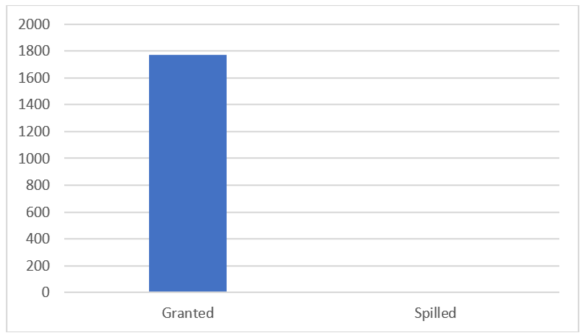

With memory grant feedback enabled, for the second execution, duration is 1 second (down from 88 seconds) and we see spills are removed entirely and the grant is higher:

How does batch mode adaptive memory grant feedback work? For excessive grants, if the granted memory is more than two times the size of the actual used memory, memory grant feedback will recalculate the memory grant and update the cached plan. Plans with memory grants under 1MB will not be recalculated for overages.

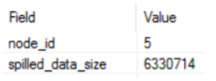

For insufficiently sized memory grants that result in a spill to disk for batch mode operators, memory grant feedback will trigger a recalculation of the memory grant. Spill events are reported to memory grant feedback and can be surfaced via the spilling_report_to_memory_grant_feedback XEvent event. This event returns the node id from the plan and spilled data size of that node.

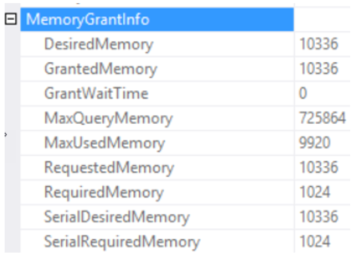

Can I see the adjusted memory grant in my execution plan?

Yes. The adjusted memory grant will show up in the actual (post-execution) plan via the “GrantedMemory” property. You can see this property in the root operator of the graphical showplan or in the showplan XML output:

<MemoryGrantInfo SerialRequiredMemory="1024" SerialDesiredMemory="10336" RequiredMemory="1024" DesiredMemory="10336" RequestedMemory="10336" GrantWaitTime="0" GrantedMemory="10336" MaxUsedMemory="9920" MaxQueryMemory="725864" />

How do I enable batch mode adaptive memory grant feedback?

To have your workloads automatically eligible for this improvement, enable compatibility level 140 for the database. For CTP1, you can set this using Transact-SQL. For example:

ALTER DATABASE [WideWorldImportersDW] SET COMPATIBILITY_LEVEL = 140;

What if my memory grant requirements differ significantly based on parameter values of consecutive executions?

Different parameter values may also require different query plans in order to remain optimal. This type of query is defined as “parameter-sensitive.” For parameter-sensitive plans, memory grant feedback will disable itself on a query if it has unstable memory requirements. The plan is disabled after several repeated runs of the query and this can be observed by monitoring the memory_grant_feedback_loop_disabled XEvent.

Does this feature help singleton executions?

Feedback can be stored in the cached plan for a single execution, however it is the consecutive executions of that statement that will benefit from the memory grant feedback adjustments. This improvement applies to repeated execution of statements.

How can I track when batch mode adaptive memory grant feedback is used?

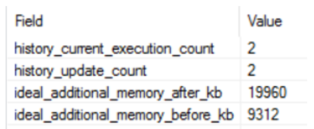

You can track memory grant feedback events using the memory_grant_updated_by_feedback XEvent event. This event tracks the current execution count history, the number of times the plan has been updated by memory grant feedback, the ideal additional memory grant before modification and the ideal additional memory grant after memory grant feedback has modified the cached plan.

Why batch mode and not row mode for this improvement?

We are starting with batch mode, however we are looking to expand this to row mode as well for a future update.

Does this improvement work with Resource Governor and memory grant query hints?

The actual memory granted honors the query memory limit determined by resource governor or query hint.

What if the plan is evicted from cache?

Feedback is not persisted if the plan is evicted from cache.

Will this improvement work if I use OPTION (RECOMPILE)?

A statement using OPTION(RECOMPILE) will create a new plan and not cache it. Since it is not cached, no memory grant feedback is produced and it is not stored for that compilation and execution. However, if an equivalent statement (i.e. with the same query hash) that did not use OPTION(RECOMPILE) was cached and then re-executed, the consecutive statement can benefit from memory grant feedback.

Will Query Store capture changes to the memory grant?

Memory grant feedback will only change the cached plan. Changes are not captured in Query Store for this version.

I’m interested in the other adaptive query processing features. How can I stay informed, provide feedback and learn about how (and when) I can test my own workloads?

Please sign up for the adaptive query processing preview here: https://aka.ms/AdaptiveQPPreview

We’ll keep in touch with customers who fill out the survey and we will contact you regarding testing and feedback opportunities that will surface in early 2017.

Comments

- Anonymous

December 04, 2016

How one can determine if a particular query is suffering from under/over estimates?- Anonymous

December 05, 2016

The comment has been removed- Anonymous

December 05, 2016

Thanks Joseph. Really nice article!! So, this feature will be available for only SQL 2016 or lower versions of SQL also?- Anonymous

December 07, 2016

Thanks Raman!This improvement applies to SQL vNext and Azure SQL DB.

- Anonymous

- Anonymous

- Anonymous

- Anonymous

January 20, 2017

Joseph,Thanks for the good article on this topic. - Anonymous

November 15, 2017

Hello, i have a question. While this feature seems nice, is there a way of diabling it (other than switching database to level 130)? We have queries that have very big differencies in memory grant depending on parameters and unfortunalelty this is killing us. Apparently SQL is not disabling grant feedback automaticly for some reason (or the treshold is big enought to notice the issue).Another question is - do you know how to enable dynamic memory grants for batch operators (TF 9389) on Azure? It could be better for us than grant feedback.Regards,Andrzej- Anonymous

November 15, 2017

Hi Andrzej,Could you email us the details at joe .sack@microsoft.com? Would like to learn more. Thanks,Joe

- Anonymous