Clustered Columnstore Index: Massively Parallel Trickle Insert

A traditional scenario of loading data into CCI is a nightly load from one or more data files containing millions of rows. Recommended technique is to load the data with batchsize >= 102400 as explained https://blogs.msdn.microsoft.com/sqlserverstorageengine/2014/07/27/clustered-column-store-index-bulk-loading-the-data/. However, we are seeing many scenarios where data source is parallel data stream (i.e. trickle insert) to be loaded to CCI for analytics, a typical IOT scenario. CCI allows concurrent data streams into the same delta rowgroup. However, you will see higher page latch contention as you increase the concurrency. You may wonder why this is so? Well, each delta RG is organized internally as a clustered btree index and the dataload follows the pattern of monotonically increasing clustered index key causing latch contention on the last page. Here is a simple way to test the ‘hidden’ key values inserted into a CCI

create table foo (c1 int)

create clustered columnstore index foocci on foo

go

insert into foo values (1)

insert into foo values (2)

-- check the hidden clustering key index

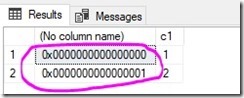

select %%physloc%% , * from foo

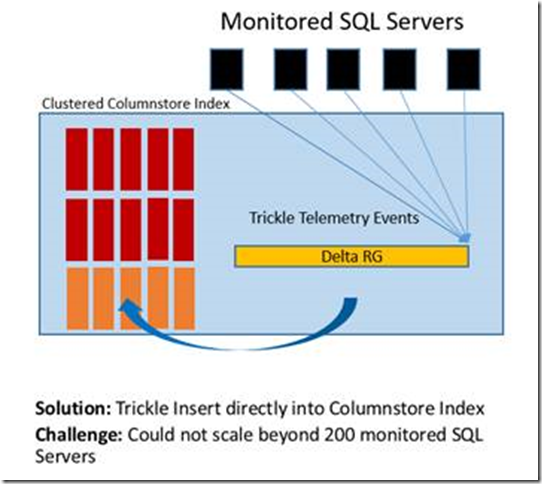

Note, the values encircled above represents a hidden rowstore clustered index key column representing (<rowgroup-id>, <tuple-id>). <tuple-id> is internally generated in a monotonically increasing number resulting in page latch contention on concurrent insert. In fact, we have seen this with many of our customers as shown in the picture below. The scenario here is collect telemetry data from applications (in this case SQL Servers) and load it into a table with CCI for analytics. In the example, below, the delta RG could not scale beyond a certain number of data sources, in this example 200. The actual number will vary with your workload.

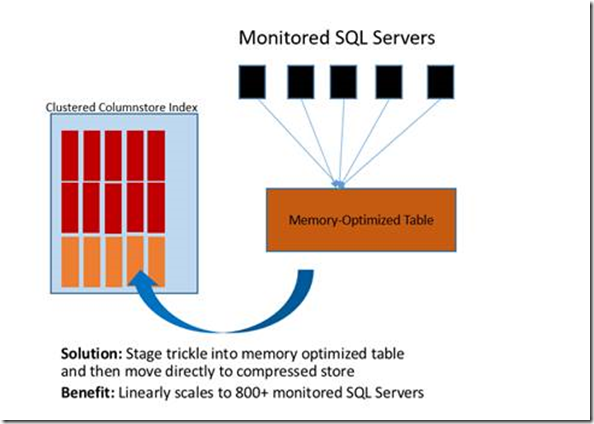

You can remove this data loading by staging the data into a non-durable/durable memory optimized table as shown below. Note, the memory optimized table, native to SQL Server Engine, are implemented to keep data in memory without the PAGE structure thereby eliminating last page contention.

With this change, the customer was able to completely by pass the delta rowgroup using a background thread that migrates the rows from memory-optimized table in chunks larger than 102400.

Thanks

Sunil Agarwal

Comments

- Anonymous

December 17, 2018

Sunil Ji, Thank you so much for such wonderful articles on column store technology. I have reviewed almost all of your presentations in Channel9 and in your blogs. We are extensively using column store in our PaaS environment. I have a question on tuple mover. Does the tuple mover release space to the operation system after compression or at the table level.The reason i ask is, we are having large transaction column store tables which have unused space. I am wondering if we have to do full index rebuild to claim the unused space in the table.In PaaS, we are in 1TB database limit, Standard S9.Thanks you- Anonymous

December 21, 2018

I don't fully understand the question. When you say 'operation system', I assume you are referring to transactional table where data is being imported from. Transactional table or the 'source' table lives outside the scope of Tuple Mover. If you are referring to Memory-Optimized table above, it is the user's responsibility to clean up the rows once they have been migrated to columnstore.Now, if you have lot of unused space in columnstore table, are you referring to rows that have been deleted? If yes, then REORGANIZE command can be run that uses a heurtistic to reclaim space used by deleted rowsthanksSunil- Anonymous

December 21, 2018

Thanks for the information, Sunil ji. I was looking for the REORGANIZE command to reclaim the unused space in the table.Sunil Kumar Godiyal

- Anonymous

- Anonymous