How bwin is using SQL Server 2016 In-Memory OLTP to achieve unprecedented performance and scale

Written by: Biljana Lazic (bwin – Senior DBA) and Rick Kutschera (bwin – Engineering Manager). Reviewed by: Mike Weiner (SQLCAT) bwin (part of GVC Holdings PLC) is one of Europe's leading online betting brands, and is synonymous with sports. Having offices situated in various locations across Europe, India, and the US, bwin is a leader in several markets including Germany, Belgium, France, Italy and Spain.

To be able to achieve our goals in these increasingly competitive markets, bwin’s infrastructure is constantly being pushed to stay on top of today’s - and sometimes even tomorrow’s – technology demands. With around 19 million bets and over 250 000 active users per day, our performance and scale requirements are extraordinary. In this blog, we will discuss how we have adopted In-Memory OLTP with SQL Server 2016 to meet these demands.

Our Caching Systems:

For years we’ve depended on Microsoft AppFabric and other distributed cache systems such as Cassandra or Memcached, as one of the central pieces in our architecture, in order to meet the demanding requirements on our systems. All major components, including sports betting, poker, and casino gaming rely on this cache, which makes it a critical component for our current and future business needs. In fact, a failure of this caching system would directly translate to a total blackout of our business, making it one of the most mission critical systems in the overall architecture.

With this configuration, we faced scalability issues, and worse, we saw that with higher transaction volumes, the stability of the whole distributed cache system was not able to keep up with the workload. Even in scaling out the amount of caching nodes we still faced stability issues, leading to high availability degradation.

Additionally, there were setup and maintenance pains, which lead to a high workload overhead for various departments to keep the system operational. Even with all this reoccurring work, the results we achieved were never satisfactory.

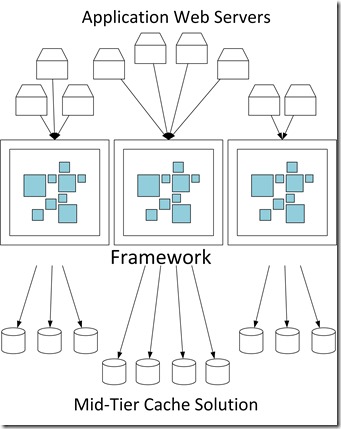

Below is a simplified architecture of our distributed caching system:

For these reasons, to become more stable and keep up with the business requirements, we were forced to consider better alternatives and overall solutions for our caching layer.

That was the moment when we realized that we already had the solution - codenamed “Hekaton”, the In-Memory OLTP engine, built within SQL Server. In fact, this was the technology we were already using for a similar solution for a while, our ASP.NET SessionState.

Our History with ASP.Net SessionState:

ASP.NET SessionState itself is a caching system that we have been using for a long time now and thanks to the performance we achieved, it became a building block for our new global caching database. But before we get further into the global caching database, let’s take a look into our history with ASP.NET SessionState.

bwin was the very first customer to use the In-Memory OLTP engine for an ASP.Net SessionState database in production, with SQL Server 2014 (long before it was called SQL Server 2014). For reference, before SQL Server 2014, our ASP.NET SessionState database was able to handle around 12,000 batch requests/sec before suffering from latch contention. We were forced to implement 18 SessionState databases and partition our workload to handle the required user load. With SQL Server 2014 In-Memory OLTP we could consolidate back to one SQL Server box and database to handle ~300,000 batch requests/sec, with the bottleneck moving to the splitting-up of data as there was no support of LOB datatypes with memory-optimized tables.

Over the years, we gathered a good deal of experience working with In-Memory OLTP for our ASP.NET SessionState solution, and the performance of the solution received positive feedback both from within and outside of our company.

With In-Memory OLTP in SQL Server 2016 extending its functionality, for example memory-optimized table support for LOB datatypes, we were able to move more code into native compiled stored procedures, and even reach a new record for our In-Memory OLTP based ASP.NET SessionState database during our research LAB engagement with Microsoft Enterprise Engineering Center (EEC) in October 2015.

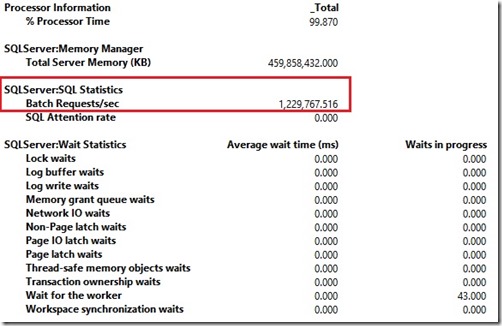

With all the scalability improvements in SQL Server 2016, and migration of the all the Transact-SQL to natively compiled stored procedures and a memory-optimized table, we were able to achieve and sustain over 1.2 million batch requests/second – an improvement of 4x over our previous high watermark!

Below is the summary of performance improvements we’ve achieved by using In-Memory OLTP for our ASP.NET SessionState. We have also included the batch requests/sec from performance monitor and the measurements of waits within SQL Server

| Version | SessionState Performance | Technology | Bottleneck |

| SQL Server 2012 | 12 000 batch requests/sec | SQL Server Interpreted T-SQL | Latch contentions |

| SQL Server 2014 | 300 000 batch requests/sec | Memory-Optimized Table, Interpreted T-SQL, Handling of Split LOBs | CPU |

| SQL Server 2016 | 1 200 000 batch requests/sec | Memory-Optimized Table with LOB support, Natively Compiled stored procedures | CPU |

**NOTE: In Testing we did hit spinlock issues at around 800,000 batch requests/sec, this is already resolved within SQL Server 2016 CU2 (Blog : https://blogs.msdn.microsoft.com/sqlcat/2016/09/29/sqlsweet16-episode-8-how-sql-server-2016-cumulative-update-2-cu2-can-improve-performance-of-highly-concurrent-workloads/)

With this level of performance and stability achieved, together with all the experience we gathered during years of working with the ASP.NET SessionState solution, we felt confident we could utilize this as our building block for a new global caching system based on the In-Memory OLTP engine.

Our New Global Caching System

The global caching system, based on our ASP.NET Session State implementation and In-Memory OLTP is now the replacement for all our distributed caching systems.

Below is a simplified architecture diagram of our new global caching system with SQL Server 2016 In-Memory OLTP: ![clip_image005[4] clip_image005[4]](https://msdntnarchive.z22.web.core.windows.net/media/2016/10/clip_image0054_thumb2.jpg)

Having changed the architecture, we can now not just keep up with performance we had with our distributed cache system, but far-exceed it, using only one single database node, compared to the 19 mid-tier cache nodes previously used.

| Version | Cache System Performance | Hardware nodes/distribution |

| Mid-Tier Cache solution without SQL Server | 150 000 batch requests/sec | 19 |

| Solution using SQL Server 2016 | 1 200 000 batch requests/sec | 1 |

In addition to reducing the number of servers needed to obtain the performance we needed, we also achieved several performance gains, as displayed in the diagram below. The graphic, which comes from our application monitoring solution, shows three things:

Usage: First, the green circular rings represent all the different products (and corresponding server counts which are represented by the numbers in the circles) dependent on the global cache. This includes numerous products from sports betting, casino gaming, bingo, all the way to our portal and sales API, just to name a few. With all these components accessing the cache, it has become even more central in our architecture than the ASP.NET SessionState.

Performance Throughput: Next, you can see our average load, that’s around 1.6 million requests per minute. With our user load scaling up, we expect to have twice the number of batch requests/sec and we expect to be able to handle even up to 20 times this load.

Performance Latency: Finally, you can see the consistent latency measured from the client around round-trip time at 1ms. This number is even more important if we directly compare it to the previous distributed cache system, where the latency varied all the time, having response times from 2ms up to 200ms.

Also of note, as the global cache is such a central piece of our business we also have it as part of a high availability solution. In this case, if for some reason the server hosting the SQL Server database does fail we can easily move the workload to another SQL Server database.

Implementation of the Global Caching Database

While the performance gains with In-Memory OLTP have been amazing, the implementation at its core was quite simple. From the database perspective, the memory-optimized table, simply put, is just a key/value store with its primary makeup containing a key (Primary Key), a value (BLOB) and an expiration date. The application has three possible ways of interacting with this table, in the form of three natively compiled stored procedures:

- One stored procedure to insert a value with a key into the table

- One stored procedure to retrieve the value, by providing the key

- One stored production to delete the value from the table.

In the background, there is a scheduled T-SQL job which deletes all expired entries from the table, on a regular basis.

From the development perspective, the impact was quite minimal. Previously, all the code necessary to access the distributed cache solutions was contained in an “abstraction layer” DLL. Hence the changes needed to use SQL Server as the caching solution were quite localized to that specific DLL, which meant no impact to the actual applications using the caching tier.

As our senior software engineer on the project noted “ All in all the migration of the code in [our] framework was not so difficult, we spent much more time by testing and tuning it than by the implementation itself.” – Marius Zuzcak

In the appendix below we provide code from the database, of the memory-optimized table using the LOB datatype, as well as code from the data access layer with calls to the In-Memory OLTP Global Cache.

Appendix: Code Examples

Below is the Transact-SQL code for the memory-optimized table, now with LOB datatype support:

CREATE TABLE [dbo].[CacheItems]

(

[Key] [nvarchar](256) COLLATE Latin1_General_100_BIN2 NOT NULL,

[Value] [varbinary](max) NOT NULL,

[Expiration] [datetime2](2) NOT NULL,

[IsSlidingExpiration] [bit] NOT NULL,

[SlidingIntervalInSeconds] [int] NULL,

CONSTRAINT [pk_CacheItems] PRIMARY KEY NONCLUSTERED HASH

( [Key]) WITH ( BUCKET_COUNT = 10000000)

)WITH ( MEMORY_OPTIMIZED = ON , DURABILITY = SCHEMA_ONLY )

Here we are providing pseudo-code from the data-access layer code (Hekaton.Dal).

using System;

using System.Data.SqlClient;

using System.Diagnostics.Contracts;

namespace Hekaton.Dal

{

internal interface IHekatonDal

{

object GetCacheItem(string key);

void SetCacheItem(string key, object value, CacheItemExpiration cacheItemExpiration);

object RemoveCacheItem(string key);

}

internal sealed class HekatonDal : IHekatonDal

{

private readonly IHekatonConfiguration configuration;

public HekatonDal(IHekatonConfiguration configuration)

{

Contract.Requires(configuration != null);

this.configuration = configuration;

}

public object GetCacheItem(string key)

{

byte[] buffer;

long length;

ExecuteReadOperation(key, out buffer, out length, SqlCommands.GetCacheItemCommand);

return Serialization.DeserializeBuffer(buffer, length);

}

public void SetCacheItem(string key, object value, CacheItemExpiration cacheItemExpiration)

{

ExecuteSetCacheItem(key, value, cacheItemExpiration);

}

public object RemoveCacheItem(string key)

{

byte[] buffer;

long length;

ExecuteReadOperation(key, out buffer, out length, SqlCommands.RemoveCacheItemCommand);

return Serialization.DeserializeBuffer(buffer, length);

}

private void ExecuteReadOperation(string key, out byte[] valueBuffer, out long valueLength,

Func<SqlConnection, SqlCommand> readCommand)

{

using (var connection = new SqlConnection(configuration.HekatonConnectionString))

using (var command = readCommand(connection))

{

command.Parameters[0].Value = key;

connection.Open();

using (command.ExecuteReader())

{

// Item is returned from the output parameter.

var value = command.Parameters[1].Value;

if (Convert.IsDBNull(value))

{

valueBuffer = null;

valueLength = 0;

}

else

{

valueBuffer = (byte[])value;

valueLength = valueBuffer.LongLength;

}

}

}

}

private void ExecuteSetCacheItem(string key, object value, CacheItemExpiration cacheItemExpiration)

{

using (var connection = new SqlConnection(configuration.HekatonConnectionString))

using (var command = SqlCommands.SetCacheItemCommand(connection))

{

var serializedValue = Serialization.SerializeObject(value);

command.Parameters[0].Value = key;

command.Parameters[1].Value = serializedValue;

command.Parameters[2].Value = cacheItemExpiration.AbsoluteExpiration;

command.Parameters[3].Value = cacheItemExpiration.IsSlidingExpiration;

command.Parameters[4].Value = cacheItemExpiration.SlidingIntervalInSeconds;

connection.Open();

command.ExecuteNonQuery();

}

}

}

}

Below are the SQL Server calls to get, set, and remove items from the memory-optimized table, these calls can be utilized from the data access layer

//GetCacheItem

public override CacheItem GetCacheItem(string key, string regionName = null)

{

var compositeKey = BuildCompositeCacheKey(key);

try

{

var result = hekatonDal.GetCacheItem(compositeKey);

return result != null ? new CacheItem(key, result, regionName) : null;

}

catch (SqlException ex)

{

Log(CreateLogMessage(key, compositeKey, ex, "Failed to get"), ex);

return null;

}

catch (SerializationException ex)

{

log.Error(CreateLogMessage(key, compositeKey, ex, "Failed to get"));

return null;

}

}

//SetCacheItem

public override void SetCacheItem(string key, object value, CacheItemPolicy policy, string regionName = null)

{

var compositeKey = BuildCompositeCacheKey(key);

try

{

hekatonDal.SetCacheItem(compositeKey, value, new CacheItemExpiration(policy, configuration));

}

catch (SqlException ex)

{

Log(CreateLogMessage(key, compositeKey, ex, "Failed to set"), ex);

}

}

//RemoveCacheItem

public override object RemoveCacheItem(string key, string regionName = null)

{

var compositeKey = BuildCompositeCacheKey(key);

try

{

var result = hekatonDal.RemoveCacheItem(compositeKey);

return result;

}

catch (SqlException ex)

{

Log(CreateLogMessage(key, compositeKey, ex, "Failed to remove"), ex);

return null;

}

catch (SerializationException ex)

{

log.Error(CreateLogMessage(key, compositeKey, ex, "Failed to deserialize (while removing)"));

return null;

}

}

Comments

- Anonymous

November 16, 2016

Why not use Redis? Wouldn't that be faster or as fast?- Anonymous

December 22, 2016

Afais, seeing redis benchmarks here : https://redis.io/topics/benchmarks (peaking at 120.000 TPS)hekaton is even going over 1mio TPS / Batches/sec in the given usecase. So I guess the answer to your question is, no, it wouldn't be faster or as fast, thats at least my understanding. - Anonymous

January 04, 2017

The "Our Caching Systems" section specifies that they used Memcached (similar to Redis) and Cassandra but switched to MS SQL 2016 due to scalability/stability issues.This was a surprise to me as I have never heard of someone switching from a dedicated caching system to a relational database for these reasons.- Anonymous

February 09, 2017

Hi Charles, thanks for your comment and the read! Yes, while this is not the “typical pattern” for a caching solution, as the article describes it was the right solution for bwin to get over their performance and management bottlenecks with the dedicated caching solutions. As well, this is why we wanted to share it here for everyone’s consideration!

- Anonymous

- Anonymous

May 25, 2017

The comment has been removed

- Anonymous

- Anonymous

July 30, 2017

Would you please provide stored procedures? because their correct implementations are critical for handling concurrency- Anonymous

August 16, 2017

Hi Mahi, please find the scripts (sps and tables) here: http://www.mrc.at/Files/CacheDB.zip Additional info: We've added a second table to the solution in order to avoid write/write conflicts, during peak times. Greetings, Billy

- Anonymous

![clip_image007[4] clip_image007[4]](https://msdntnarchive.z22.web.core.windows.net/media/2016/10/clip_image0074_thumb2.jpg)