Setting Up Hana System Replication on Azure Hana Large Instances

This blog details a recent customer deployment by the Cognizant SAP Cloud team on HANA Large Instances. From an architecture perspective, HANA System Replication cannot be used as a disaster recovery mechanism between two HANA Large Instance units in two different Azure regions. The reason is that the network architecture does not support transit routing between two HANA Large Instance stamps located in different Azure regions. Instead, the HANA Large Instance architecture offers storage replication between the two regions in a geopolitical area that offers HANA Large Instances. For details, see this article.

However, some customers already have experience with SAP HANA System Replication as a disaster recovery mechanism and would like to keep using it on Azure HANA Large Instance units as well. Hence the Cognizant team needed to solve the transit routing problem between two HANA Large Instance units located in two different Azure regions. The solution applied by Cognizant is based on Linux IPTables: a Linux Network Address Translation (NAT) based solution allows SAP HANA System Replication between Azure HANA Large Instances in different Azure datacenters. Without it, HANA Large Instances in different Azure datacenters have no direct network connectivity, because Azure ExpressRoute and VPN do not allow transit routing.

To enable HANA System Replication (HSR), a small VM is created that forwards traffic between the two HANA Large Instances (HLI).

Another benefit of this solution is that it is possible to enable access to SMT and NTP servers for patching and time synchronization.

The solution detailed below is not required for HANA systems running on native Azure VMs. It is only needed for the Azure HANA Large Instance offering, which provides bare-metal Tailored Data Centre Integration (TDI) infrastructure of up to 20 TB.

Be aware that the solution described below is not part of the HANA Large Instance architecture. Hence support for configuring, deploying, administering and operating the solution must be provided by the Linux vendor and by the parties that deploy and operate the IPTables-based disaster recovery solution.

High Level Overview

SAP HANA Database offers three HA/DR technologies: HANA System Replication, Host Auto-Failover and storage-based replication.

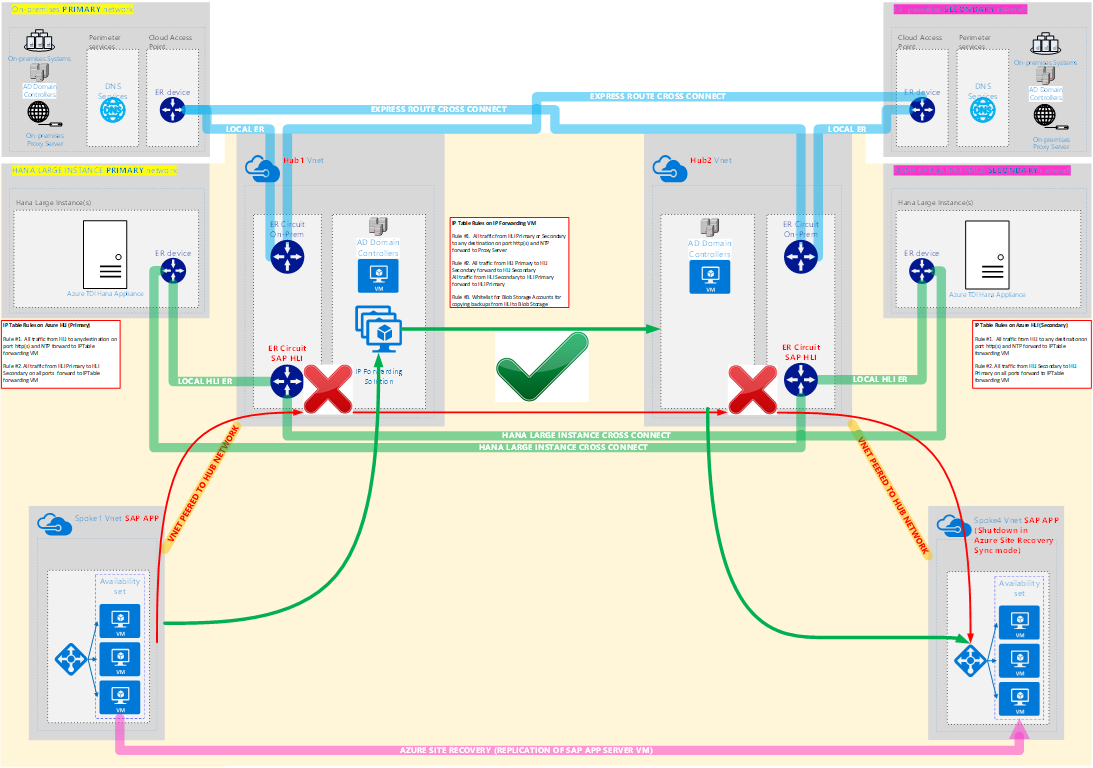

The diagram below illustrates a typical HLI scenario with a geographically dispersed disaster recovery solution. The Azure HLI solution offers storage-based DR replication as a built-in solution; however, some customers prefer to use HSR, which is a DBMS software-based HA/DR solution.

HSR can already be configured within the same Azure datacenter by following the standard HSR documentation.

HSR cannot be configured between the primary Azure datacenter and the DR Azure datacenter without an IPTables solution, because there is no network route from primary to DR. "Transit routing" refers to network traffic that passes through two ExpressRoute connections, which ExpressRoute does not allow. To enable HSR between two different datacenters, a solution such as the one illustrated below can be implemented by an SAP System Integrator or an Azure IaaS consulting company.

Key components and concepts in the solution are:

1. A small VM running any Linux distribution is placed in Azure on a VNET that has network connectivity to the HLI units in both datacenters.

2. The ExpressRoute circuits are cross-connected from the HLI to a VNET in each datacenter.

3. Standard Linux IPTables functionality is used to forward traffic from the primary HLI -> IP forwarding VM in Azure -> secondary HLI (and vice versa).

4. Each customer deployment has individual differences, for example:

a. Some customers deploy two HLI in the primary datacenter, set up synchronous HSR between these two local HLI and then (optionally) configure SUSE Pacemaker for faster and transparent failover. The third (DR) HSR node is typically not configured with SUSE Pacemaker.

b. Some customers have a proxy server running in Azure and allow outbound traffic from Azure directly to the Internet. Other customers force all HTTP(S) traffic back to an on-premises firewall and proxy infrastructure.

c. Some customers use Azure Automation or scripts on a VM in Azure to "pull" backups from the HLI and store them in Azure Blob storage. This removes the need for the HLI to use a proxy to "push" a backup into blob storage.

All of the above differences change the IPTables rule configuration, so it is not possible to provide a single configuration that works for every scenario.

https://blogs.sap.com/2017/02/01/enterprise-readiness-with-sap-hana-host-auto-failover-system-replication-storage-replication/ https://blogs.sap.com/2017/04/12/revealing-the-differences-between-hana-host-auto-failover-and-system-replication/

The diagram shows a hub-and-spoke network topology and Azure ExpressRoute cross-connect, which is a generally recommended deployment model.

/en-us/azure/architecture/reference-architectures/hybrid-networking/hub-spoke /en-us/azure/expressroute/expressroute-faqs

Diagram 1. Illustration showing no direct network route from HLI Primary to HLI Secondary. With the addition of an IP forwarding solution, the HLIs can establish TCP connectivity.

Note: ExpressRoute cross-connect can be replaced by cross-region (global) VNet peering once that feature is GA /en-us/azure/virtual-network/virtual-network-peering-overview

Sample IP Table Rules Solution – Technique 1

The following solution comes from a recent deployment by the Cognizant SAP Cloud team on SUSE 12.x:

HLI Primary 10.16.0.4

IPTables VM 10.1.0.5

HLI DR 10.17.0.4

On HLI Primary

iptables -N input_ext

iptables -t nat -A OUTPUT -d 10.17.0.0/24 -j DNAT --to-destination 10.1.0.5

On the IPTables VM

## this is HLI primary -> DR

echo 1 > /proc/sys/net/ipv4/ip_forward

iptables -t nat -A PREROUTING -s 10.16.0.0/24 -d 10.1.0.0/24 -j DNAT --to-destination 10.17.0.4

iptables -t nat -A POSTROUTING -s 10.16.0.0/24 -d 10.17.0.0/24 -j SNAT --to-source 10.1.0.5

## this is HLI DR -> Primary

iptables -t nat -A PREROUTING -s 10.17.0.0/24 -d 10.1.0.0/24 -j DNAT --to-destination 10.16.0.4

iptables -t nat -A POSTROUTING -s 10.17.0.0/24 -d 10.16.0.0/24 -j SNAT --to-source 10.1.0.5

On HLI DR

iptables -N input_ext

iptables -t nat -A OUTPUT -d 10.16.0.0/24 -j DNAT --to-destination 10.1.0.5
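Because `-A` always appends, running the commands above twice leaves duplicate rules in the chains. The sketch below wraps the same Technique 1 rules so a script can be re-run safely. It is an illustration, not the configuration Cognizant deployed: the function names (`ensure_rule`, `setup_hli_primary`, `setup_hli_dr`, `setup_forwarder`) are hypothetical, the addresses are the sample values above, and it assumes an iptables version that supports the `-C` (check) option. It covers the NAT rules only, not the `iptables -N input_ext` line.

```shell
#!/bin/sh
# Sketch: apply the Technique 1 NAT rules idempotently.
# Sample addresses from this post; adjust them to your landscape.
PRIMARY_NET=10.16.0.0/24   # HLI primary subnet
DR_NET=10.17.0.0/24        # HLI DR subnet
FWD_NET=10.1.0.0/24        # subnet of the IPTables VM
FWD_IP=10.1.0.5            # IPTables VM
PRIMARY_HLI=10.16.0.4
DR_HLI=10.17.0.4

# Hypothetical helper: append a rule only if an identical rule is not
# already in the chain. "iptables -C" exits non-zero when it is missing.
ensure_rule() {
  table=$1 chain=$2
  shift 2
  if ! iptables -t "$table" -C "$chain" "$@" 2>/dev/null; then
    iptables -t "$table" -A "$chain" "$@"
  fi
}

# On HLI primary: redirect traffic for the DR subnet to the IPTables VM.
setup_hli_primary() {
  ensure_rule nat OUTPUT -d "$DR_NET" -j DNAT --to-destination "$FWD_IP"
}

# On HLI DR: redirect traffic for the primary subnet to the IPTables VM.
setup_hli_dr() {
  ensure_rule nat OUTPUT -d "$PRIMARY_NET" -j DNAT --to-destination "$FWD_IP"
}

# On the IPTables VM: enable forwarding, then DNAT to the partner HLI
# and SNAT to the VM's own address, in both directions.
setup_forwarder() {
  sysctl -w net.ipv4.ip_forward=1
  # HLI primary -> DR
  ensure_rule nat PREROUTING -s "$PRIMARY_NET" -d "$FWD_NET" -j DNAT --to-destination "$DR_HLI"
  ensure_rule nat POSTROUTING -s "$PRIMARY_NET" -d "$DR_NET" -j SNAT --to-source "$FWD_IP"
  # HLI DR -> primary
  ensure_rule nat PREROUTING -s "$DR_NET" -d "$FWD_NET" -j DNAT --to-destination "$PRIMARY_HLI"
  ensure_rule nat POSTROUTING -s "$DR_NET" -d "$PRIMARY_NET" -j SNAT --to-source "$FWD_IP"
}
```

Source the file as root on each node and call the function for that node's role, for example `setup_forwarder` on the IPTables VM and `setup_hli_primary` on the primary HLI.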

The configuration above is not permanent and will be lost if the Linux servers are restarted.

Once the configuration is set up and verified, run the following on all nodes:

iptables-save > /etc/iptables.local

Then add "iptables-restore -c < /etc/iptables.local" to /etc/init.d/boot.local so that the rules are restored at boot.

On the IPTables VM, make IP forwarding a permanent setting by editing /etc/sysctl.conf:

vi /etc/sysctl.conf

and adding the line net.ipv4.ip_forward = 1
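Editing these files by hand works once, but a setup script that is run repeatedly would keep appending the same lines. A minimal sketch of the persistence steps above, assuming the SUSE file locations used in this post; `append_once`, `persist_rules` and `persist_ip_forward` are hypothetical helper names:

```shell
#!/bin/sh
# Sketch: persist the iptables rules and IP forwarding without adding
# duplicate lines when the script is run more than once.

# Hypothetical helper: append a line to a file only if that exact
# line is not already present (creates the file if it is missing).
append_once() {
  file=$1 line=$2
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Run as root on every node (HLI primary, HLI DR and the IPTables VM).
# Assumes /etc/init.d/boot.local already exists and is executable.
persist_rules() {
  iptables-save > /etc/iptables.local
  append_once /etc/init.d/boot.local 'iptables-restore -c < /etc/iptables.local'
}

# Run as root on the IPTables VM only.
persist_ip_forward() {
  append_once /etc/sysctl.conf 'net.ipv4.ip_forward = 1'
  sysctl -p   # reload /etc/sysctl.conf now instead of waiting for a reboot
}
```

After `persist_ip_forward`, `cat /proc/sys/net/ipv4/ip_forward` should print 1 without a reboot.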

The above example uses CIDR networks such as 10.16.0.0/24. It is also possible to specify an individual host such as 10.16.0.4.

Sample IP Table Rules Solution – Technique 2

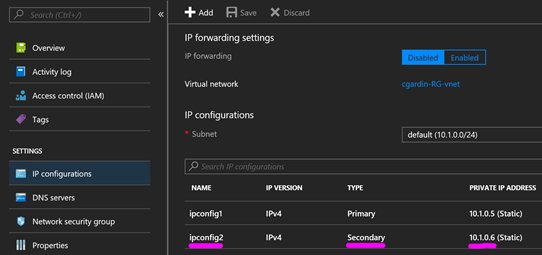

Another possible solution uses an additional IP address on the IPTables VM to forward to the target HLI IP address.

This approach has a number of advantages:

1. Inbound connections are supported, such as running Hana Studio on-premises connecting to HLI

2. IPTables configuration is only on the IPTables VM, no configuration is required on the HLI

To implement technique 2 follow this procedure:

1. Identify the target HLI IP address. In this case the DR HLI is 10.17.0.4

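2. Add an additional static IP address to the IPTables VM (in Azure, as an additional IP configuration on the VM's network interface). In this case 10.1.0.6

Note: after performing this configuration it is not necessary to add the IP address inside the operating system (for example in YaST), and the address will not show in ifconfig. This works because the PREROUTING DNAT rule rewrites the destination address before the kernel makes its routing decision, so the VM never needs to own 10.1.0.6 itself.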

3. Enter the following commands on the IPTables VM

iptables -t nat -A PREROUTING -d 10.1.0.6 -j DNAT --to-destination 10.17.0.4

iptables -t nat -A PREROUTING -d << a unique new IP assigned on the IP Tables VM>> -j DNAT --to-destination <<any other HLI IP or MDC tenant IP>>

iptables -t nat -A POSTROUTING -o eth0 -j MASQUERADE

4. Ensure /proc/sys/net/ipv4/ip_forward = 1 on the IP Tables VM and save the configuration in the same way as Technique 1

5. Maintain DNS entries and/or hosts file to point to the new IP address on the IPTables VM – in this case all references to the DR HLI will be 10.1.0.6 (not the "real" IP 10.17.0.4)

6. Repeat this procedure for the reverse route (HLI DR -> HLI Primary) and for any other HLI (or MDC tenants)

7. Optionally, high availability can be added to this solution by adding the additional static IP addresses on the IPTables VM to an Azure Internal Load Balancer (ILB)

8. To test the configuration execute the following command:

ssh 10.1.0.6 -l <username> (address 10.1.0.6 will be translated by DNAT to 10.17.0.4)

After logging on, confirm that the ssh session has connected to the HLI DR (10.17.0.4)
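ssh only proves reachability for ports that run an SSH server. For any TCP port, including the HANA ports that HSR uses, a generic reachability check can help. This is a sketch that assumes bash (for its /dev/tcp redirection) and the coreutils `timeout` command are installed on the host; `check_tcp_port` is a hypothetical name:

```shell
#!/bin/sh
# Sketch: report whether a TCP connection to host:port can be opened
# within a timeout (default 5 seconds). Relies on bash's /dev/tcp
# pseudo-device and coreutils "timeout" (assumptions about the host).
check_tcp_port() {
  host=$1 port=$2 secs=${3:-5}
  # $1/$2 inside the single quotes are the inner bash's arguments.
  if timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null; then
    echo "open: $host:$port"
    return 0
  fi
  echo "closed, filtered or unreachable: $host:$port"
  return 1
}
```

For example, `check_tcp_port 10.1.0.6 22` from the primary HLI should report the port as open if the Technique 2 rules and the NSGs are correct. A timeout usually points to a missing NAT rule or a blocked NSG/firewall port.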

IMPORTANT NOTE: The SAP Application servers must use the "real" HLI IP (10.17.0.4) and should not be configured to connect via the IPTables VM.
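Because every additional frontend IP on the IPTables VM needs its own DNAT rule (steps 3 and 6), the rules can be generated from a list of mappings and reviewed before they are applied. A sketch under these assumptions: `technique2_rules` is a hypothetical name, each argument has the form frontend_ip=target_ip, and the outbound interface is eth0 as in step 3.

```shell
#!/bin/sh
# Sketch: print (not apply) the Technique 2 NAT rules.
# $1 = outbound interface, remaining args = frontend_ip=target_ip pairs,
# where frontend_ip is an extra static IP of the IPTables VM and
# target_ip is the HLI (or MDC tenant) address it forwards to.
technique2_rules() {
  iface=$1
  shift
  for pair in "$@"; do
    case $pair in
      *=*) ;;
      *) echo "bad mapping (expected frontend=target): $pair" >&2; return 1 ;;
    esac
    frontend=${pair%%=*}
    target=${pair#*=}
    echo "iptables -t nat -A PREROUTING -d $frontend -j DNAT --to-destination $target"
  done
  # One MASQUERADE rule covers every mapping leaving this interface.
  echo "iptables -t nat -A POSTROUTING -o $iface -j MASQUERADE"
}
```

For example, `technique2_rules eth0 10.1.0.6=10.17.0.4 10.1.0.7=10.16.0.4` prints the DR mapping from this section plus a reverse mapping on a hypothetical second frontend IP 10.1.0.7. After reviewing the output, pipe it to `sh` as root to apply it.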

Notes:

To purge iptables rules:

iptables -t nat -F

iptables -F

To list iptables rules:

iptables -t nat -L

iptables -L

To list or delete routes:

ip route list

ip route del <destination prefix>
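When troubleshooting, the plain `-L` listing does not show whether a rule is actually matching traffic. Adding `-n` (numeric addresses), `-v` (packet and byte counters) and `--line-numbers` shows this, and a single rule can then be deleted by its number instead of flushing a whole table. A minimal sketch; `show_nat_rules` and `delete_rule` are hypothetical names:

```shell
#!/bin/sh
# Sketch: list the nat and mangle tables (the ones used in this post)
# with numeric addresses, packet/byte counters and rule numbers.
show_nat_rules() {
  for table in nat mangle; do
    echo "### table: $table"
    iptables -t "$table" -L -n -v --line-numbers
  done
}

# Delete one rule by number, as shown by show_nat_rules.
# Example: delete_rule nat PREROUTING 2
delete_rule() {
  iptables -t "$1" -D "$2" "$3"
}
```

A DNAT rule whose packet counter stays at 0 while HSR is trying to connect is a good sign that the traffic never reaches that rule.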

https://www.netfilter.org/documentation/index.html

https://www.systutorials.com/816/port-forwarding-using-iptables/

https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/4/html/Security_Guide/s1-firewall-ipt-fwd.html

https://www.howtogeek.com/177621/the-beginners-guide-to-iptables-the-linux-firewall/

https://www.tecmint.com/linux-iptables-firewall-rules-examples-commands/

https://www.tecmint.com/basic-guide-on-iptables-linux-firewall-tips-commands/

What Size VM is Required for the IP Forwarder?

Testing has shown that network traffic during the initial synchronization of a HANA DB peaks in the range of 15-25 megabytes/sec. It is therefore recommended to start with a VM that has at least 2 vCPUs. Larger customers should consider a VM such as a D12 v2 and enable Accelerated Networking to reduce network processing overhead.

It is recommended to monitor:

1. CPU consumption on the IP forwarding VM during the initial HSR sync and afterwards

2. Network utilization on the IP Forwarding VM

3. HSR delay between Primary and Secondary node(s)
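For item 2, the kernel's per-interface byte counters are enough for a quick check without extra tooling. This sketch assumes the forwarder's interface is eth0 and defines 1 MB as 1,000,000 bytes; `mb_per_sec` and `sample_throughput` are hypothetical names:

```shell
#!/bin/sh
# Sketch: measure receive/transmit throughput from the counters in
# /sys/class/net/<iface>/statistics.

# Convert a byte-counter delta over N seconds to MB/s (1 MB = 10^6 bytes),
# printed with one decimal place. Args: before after seconds
mb_per_sec() {
  awk -v b="$1" -v a="$2" -v s="$3" 'BEGIN { printf "%.1f\n", (a - b) / s / 1000000 }'
}

# Sample an interface (default eth0) over an interval (default 10 s).
sample_throughput() {
  iface=${1:-eth0} secs=${2:-10}
  stats=/sys/class/net/$iface/statistics
  rx0=$(cat "$stats/rx_bytes") tx0=$(cat "$stats/tx_bytes")
  sleep "$secs"
  rx1=$(cat "$stats/rx_bytes") tx1=$(cat "$stats/tx_bytes")
  echo "$iface rx $(mb_per_sec "$rx0" "$rx1" "$secs") MB/s, tx $(mb_per_sec "$tx0" "$tx1" "$secs") MB/s"
}
```

Running `sample_throughput eth0 10` during the initial HSR synchronization gives a figure to compare with the 15-25 megabytes/sec peak mentioned above.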

The SQL statement "HANA_Replication_SystemReplication_KeyFigures" displays, among other key figures, the log replay backlog (REPLAY_BACKLOG_MB).

As a fallback option you can use the contents of M_SERVICE_REPLICATION to determine the log replay delay on the secondary site:

SELECT SHIPPED_LOG_POSITION, REPLAYED_LOG_POSITION FROM M_SERVICE_REPLICATION

Now calculate the difference and multiply it by the log position size of 64 bytes:

(SHIPPED_LOG_POSITION - REPLAYED_LOG_POSITION) * 64 = <replay_backlog_byte>
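The same arithmetic as a small helper, for example to turn the two positions returned by the M_SERVICE_REPLICATION query into a monitoring value. This is a sketch: the function names are hypothetical, and the MB conversion assumes 1 MB = 1,048,576 bytes, which may differ from the convention used by the SAP script.

```shell
#!/bin/sh
# Sketch: replay backlog = (shipped - replayed) * 64 bytes.
# Args: SHIPPED_LOG_POSITION REPLAYED_LOG_POSITION
replay_backlog_bytes() {
  shipped=$1 replayed=$2
  echo $(( (shipped - replayed) * 64 ))
}

# Same figure in MB (assumed 1 MB = 1,048,576 bytes), two decimals.
replay_backlog_mb() {
  awk -v b="$(replay_backlog_bytes "$1" "$2")" 'BEGIN { printf "%.2f\n", b / 1048576 }'
}
```

For example, a shipped position of 1969700 and a replayed position of 1969600 give a backlog of 100 * 64 = 6400 bytes.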

SAP Note 1969700 - SQL Statement Collection for SAP HANA

This SAP Note contains a reference to this script that is very useful for monitoring HSR key figures. HANA_Replication_SystemReplication_KeyFigures_1.00.120+_MDC

If high CPU or network utilization is observed, it is recommended to upgrade to a VM type that supports Accelerated Networking.

Required Reading, Documentation and Tips

Below are some recommendations for those setting up this solution based on test deployments:

1. It is strongly recommended that an experienced Linux engineer and an SAP Basis consultant jointly set up this solution. Test deployments have shown that considerable testing of the iptables rules is required to get HSR to connect reliably. Troubleshooting has sometimes been delayed because the Linux engineer was unfamiliar with HANA System Replication and the Basis consultant may have had only very limited knowledge of Linux networking.

2. A simple way to test whether ports are open and correctly configured is to ssh to the HLI on the specific port. For example, from the primary HLI run: ssh -p 3<sys nr.>15 <secondary hli>. If this command times out, there is likely an error in the configuration. If the configuration is correct, the ssh session should connect briefly.

3. The NSG and/or Firewall for the IP forwarder VM must be opened to allow the HSR ports to/from the HLIs

4. For more information, see Network Configuration for SAP HANA System Replication

5. See also: How To Perform System Replication for SAP HANA

6. If the IPTables VM fails, HANA treats this the same way as any other network disconnection or interruption. When the IPTables VM is available again (or networking is restored), there is considerable traffic while HSR replicates the queued transactions to the DR site.

7. The IPTables solution documented here is intended exclusively for asynchronous DR replication. We do not recommend using such a solution for the other replication modes available with HSR, such as synchronous in-memory and synchronous. Asynchronous replication across geographically dispersed locations with truly diverse infrastructure (such as power supply) always carries the possibility of some data loss, because the RPO is not zero. This is true for any DR solution on any DBMS, with or without an IP forwarder such as IPTables. IPTables is expected to have a negligible impact on RPO, provided the CPU and network of the IPTables VM are not saturated. https://help.sap.com/viewer/6b94445c94ae495c83a19646e7c3fd56/2.0.02/en-US/c039a1a5b8824ecfa754b55e0caffc01.html

8. Some recommended SAP OSS Notes:

2142892 - SAP HANA System Replication sr_register hangs at "collecting information" (Important note)

An alternative to this SAP Note is to run this command on the IPTables VM: iptables -t mangle -A POSTROUTING -p tcp --tcp-flags SYN,RST SYN -o eth0 -j TCPMSS --set-mss 1500

2222200 - FAQ: SAP HANA Network

2484128 - hdbnsutil hangs while registering secondary site

2382421 - Optimizing the Network Configuration on HANA- and OS-Level

2081065 - Troubleshooting SAP HANA Network

2407186 - How-To Guides & Whitepapers For SAP HANA High Availability

9. HSR allows a multi-tier HSR scenario even on small, low-cost VMs. As per SAP Note 1999880 - FAQ: SAP HANA System Replication, the buffer for the replication target has a minimum size of 64 GB or row store + 20 GB, whichever is higher (with no preload of data).

10. Refer to existing SAP Notes and documentation for HSR limitations and for restrictions on storage-based snapshot backups and MDC.

11. The IPTables rules above forward entire CIDR networks. It is also possible to specify individual hosts.
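The sizing rule quoted in tip 9 is a simple maximum. A sketch, assuming whole-GB inputs; `min_replication_target_gb` is a hypothetical name, and SAP Note 1999880 remains the authoritative source for the actual requirement:

```shell
#!/bin/sh
# Sketch of the tip 9 rule (SAP Note 1999880, no data preload):
# minimum size = max(64 GB, row store size + 20 GB).
# Arg: row store size in whole GB; prints the minimum in GB.
min_replication_target_gb() {
  need=$(( $1 + 20 ))
  if [ "$need" -lt 64 ]; then
    need=64
  fi
  echo "$need"
}
```

For a 60 GB row store this gives 80 GB; any row store smaller than 44 GB falls back to the 64 GB floor.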

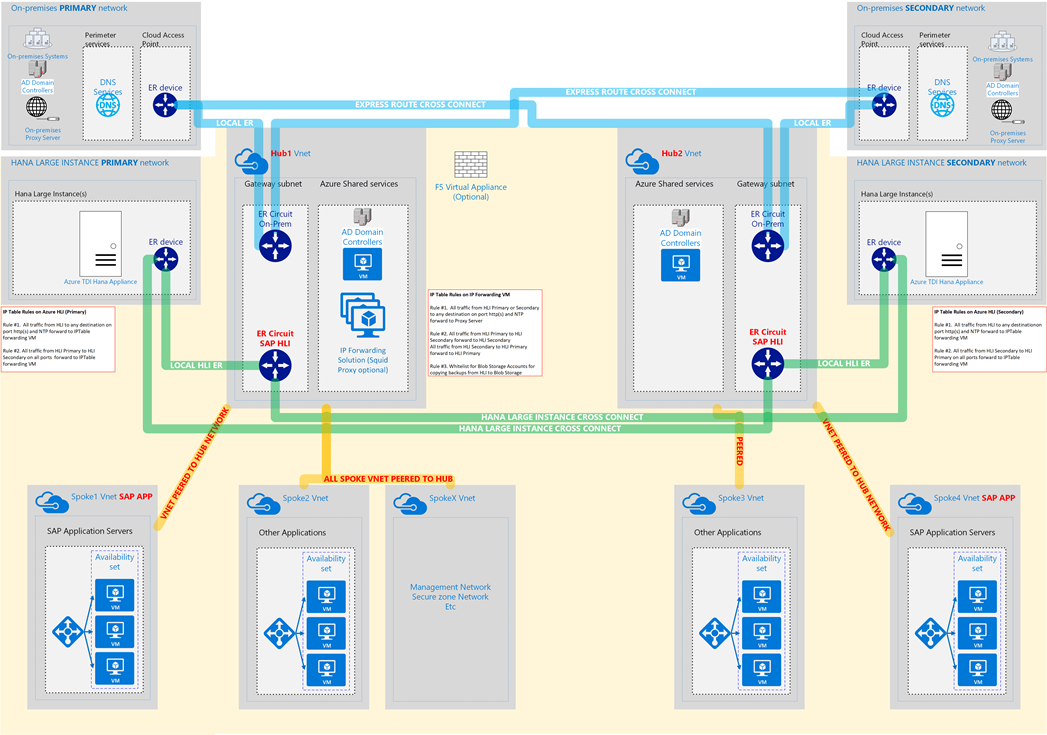

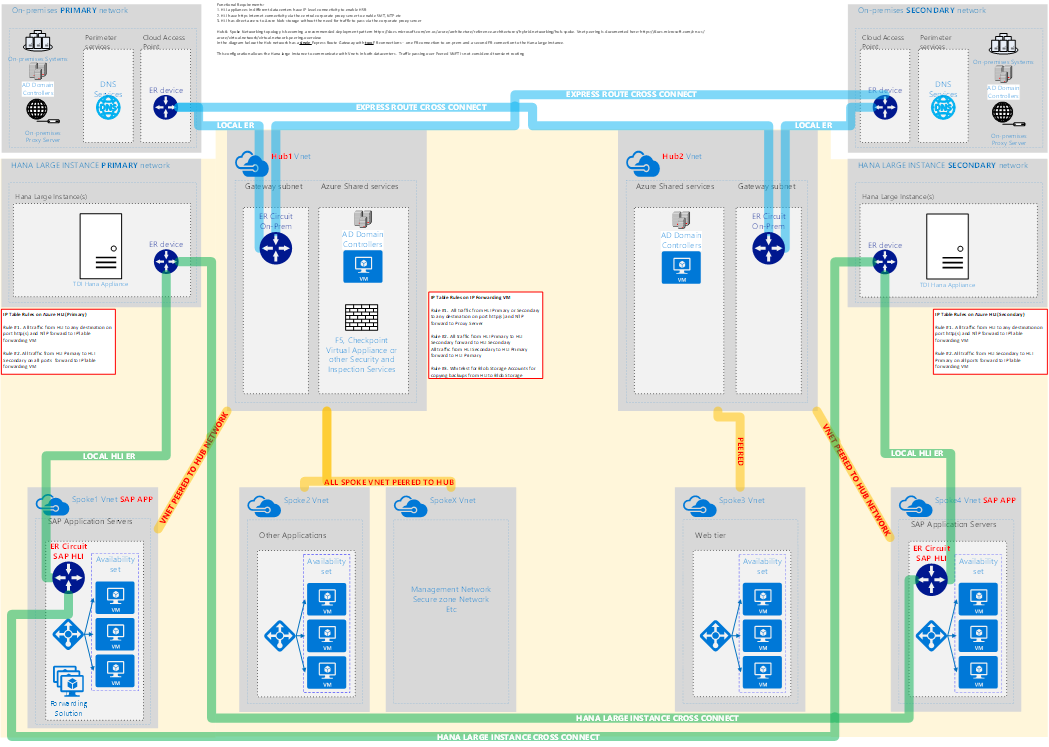

Sample Network Diagrams

The following two diagrams show two possible Hub & Spoke Network topologies for connecting HLI.

The second diagram differs from the first in that the HLI is connected to a spoke VNET. Connecting the HLI to a spoke of a Hub & Spoke topology might be useful if IDS/IPS inspection and monitoring virtual appliances or other mechanisms are used to secure the hub network and the traffic passing from the hub network to on-premises.

Note: In a typical hub-and-spoke deployment that uses network appliances for routing, a user-defined route (UDR) is required on the ExpressRoute gateway subnet to force all traffic through the network routing appliances. Such a UDR also applies to traffic arriving over the HLI ExpressRoute circuit, which may cause significant latency between the DBMS server and the SAP application servers. Ensure that an additional user-defined route allows the HLIs to route directly to the application servers without passing through the network appliances.

Thanks to:

Rakesh Patil – Azure CAT Team Linux Expert for his invaluable help.

Peter Lopez – Microsoft CSA

For more information on the solution deployed:

Sivakumar Varadananjayan - Cognizant Global SAP Cloud and Technology Consulting Head https://www.linkedin.com/in/sivakumarvaradananjayan/detail/recent-activity/posts/

Content from third party websites, SAP and other sources reproduced in accordance with Fair Use criticism, comment, news reporting, teaching, scholarship, and research

Comments

- Anonymous

March 01, 2018

Many thanks to all who instigated and contributed to this blog. Many customers in the field like the fast failover capability of HSR. This setup enables that feature between large instances in different datacenters. With some modification, this same setup also enables another scenario for migrating large SAP HANA on-premises systems to Azure Large Instances with minimal down time. Great work, thanks all!