Using PCP - How to accomplish Reduction

A common operation in parallel code design involves accumulating, or “reducing”, results from a number of concurrent operations into a single “all-up” result. With the Parallel Computing Platform (PCP), this operation is closely associated with the new C++ Concurrency Runtime’s Concurrency::combinable template class.

For comparison, the reduction operation is also commonly used with other parallel programming libraries, including OpenMP and MPI (both also supported on Windows and in Visual Studio).

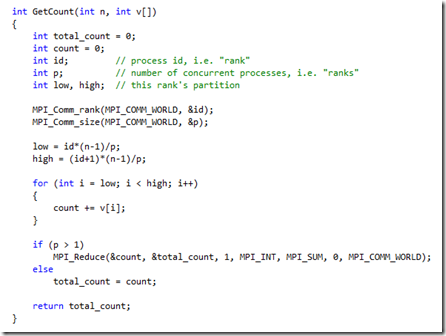

MPI remains a good implementation choice for distributed-memory computing, scaling effectively to thousands of concurrent processes. With an SPMD (single program, multiple data) application model, each process (i.e. rank) executes the same program, with rank zero accumulating the global reduction. Note the explicit use of the MPI_Reduce API in the following simplified MPI example:

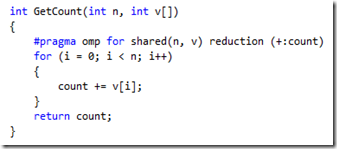

The OpenMP parallel programming model is well suited to iterating over data arrays and efficiently accomplishes concurrent thread scheduling using a static runtime resource scheduler. Note the use of #pragma in the following simplified OpenMP example, which lets a developer incrementally introduce parallelization into a legacy single-threaded codebase without changing the original logic.

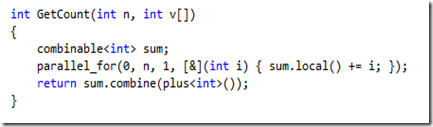
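As a rough text sketch of this approach, the loop below is an ordinary serial summation with a single OpenMP directive added. It assumes a compiler with OpenMP enabled (for example /openmp in Visual C++); the function name sum_with_openmp is hypothetical.

```cpp
#include <vector>

// Hypothetical helper: sums an array with an OpenMP reduction.
// If OpenMP is not enabled, the pragma is ignored and the loop runs serially
// with the same result.
long long sum_with_openmp(const std::vector<int>& data) {
    long long total = 0;
    const int n = static_cast<int>(data.size());  // signed loop index for OpenMP

    // Each thread accumulates a private copy of total; the copies are
    // added together when the loop finishes.
    #pragma omp parallel for reduction(+ : total)
    for (int i = 0; i < n; ++i) {
        total += data[i];
    }

    return total;
}
```

Removing the #pragma line leaves the original single-threaded loop unchanged, which is what makes this style of parallelization incremental.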

The C++ Concurrency Runtime integrates the STL model of generic C++ containers and algorithms. Template containers, including combinable, enable lock-free, concurrency-safe access. A dynamic runtime scheduler adapts to available resources in response to system workload changes. A significant concept, incorporated into both the Parallel Patterns Library (PPL) and the Asynchronous Agents Library, is that concurrency is specified in terms of “tasks” rather than “threads”. Note the use of the new C++0x inline “lambda” syntax within the following parallel_for statement.