Exploring Windows Azure Storage APIs By Building a Storage Explorer Application

Introduction

The Windows Azure platform provides storage services for different kinds of persistent and transient data:

- Unstructured binary and text data

- Binary and text messages

- Structured data

To support these categories of data, Windows Azure offers three different types of storage services: Blobs, Queues, and Tables.

The following table illustrates the main characteristics and differences among the storage types supplied by the Windows Azure platform.

| Storage Type | URL schema | Maximum Size | Recommended Usage |
|---|---|---|---|
| Blob | https://[StorageAccount].blob.core.windows.net/[ContainerName]/[BlobName] | 200 GB for a Block Blob. 1 TB for a Page Blob. | Storing large binary and text files. |
| Queue | https://[StorageAccount].queue.core.windows.net/[QueueName] | 8 KB | Reliable, persistent messaging between on-premises and cloud applications. Small binary and text messages. |
| Table | https://[StorageAccount].table.core.windows.net/[TableName]?$filter=[Query] | No limit on the size of tables. Entity size cannot exceed 1 MB. | Queryable structured entities composed of multiple properties. |
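As a rough illustration of the URL schema listed in the table, the following Python sketch composes the three address forms. It is not part of any SDK: the function names and the `myaccount` example account are assumptions made purely for this example.

```python
from urllib.parse import quote

# Hypothetical helpers (not SDK functions); the host patterns follow the table.
SERVICES = ("blob", "queue", "table")


def service_host(account: str, service: str) -> str:
    """Return the base address of one storage service for an account."""
    if service not in SERVICES:
        raise ValueError(f"unknown storage service: {service}")
    return f"https://{account}.{service}.core.windows.net"


def blob_url(account: str, container: str, blob_name: str) -> str:
    # quote() leaves '/' untouched, so names such as MyGroup/MyBlob1 stay readable.
    return f"{service_host(account, 'blob')}/{quote(container)}/{quote(blob_name)}"


def queue_url(account: str, queue_name: str) -> str:
    return f"{service_host(account, 'queue')}/{quote(queue_name)}"


def table_url(account: str, table_name: str) -> str:
    return f"{service_host(account, 'table')}/{quote(table_name)}"
```

For example, `blob_url("myaccount", "images", "MyGroup/MyBlob1")` yields an address in the first form shown in the table.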

Storage services expose a RESTful interface that provides seamless access to a large and heterogeneous class of client applications running on premises or in the cloud. Consumer applications can be implemented using different Microsoft, third party and open source technologies:

- .NET Framework: .NET applications running on premises or in the cloud can access Windows Azure Storage Services by using the classes in the Windows Azure Storage Client Library, which is part of the Windows Azure SDK.

- Java: the Windows Azure SDK for Java gives developers a quick way to use Windows Azure Storage services. This SDK is used in the Windows Azure Tools for Eclipse project to develop the Windows Azure Explorer feature. In addition, the AppFabric SDK for Java facilitates cross-platform development between Java projects and the Windows Azure AppFabric using the service bus and access control.

- PHP: the Windows Azure SDK for PHP enables PHP developers to take advantage of the Windows Azure Storage Services.

The following section provides basic information on the Storage Services available in the Windows Azure Platform. For more information on Storage Services, see the following articles:

- “About the Storage Services API” on MSDN.

- “Using the Windows Azure Storage Services” on MSDN.

- “Understanding Data Storage Offerings on the Windows Azure Platform” on TechNet.

Blob Service

The Blob service provides storage for entities such as binary files and text files. The REST API for the Blob service exposes two resources: Containers and Blobs. A container can be seen as a folder containing multiple files, whereas a blob can be considered a file belonging to a specific container. The Blob service defines two types of blobs:

- Block Blobs: this type of storage is optimized for streaming access.

- Page Blobs: this type of storage is optimized for random read/write operations and provides the ability to write to a range of bytes in a blob.

Containers and blobs support user-defined metadata in the form of name-value pairs specified as headers on a request operation. Using the REST API for the Blob service, developers can create a hierarchical namespace similar to a file system. Blob names may encode a hierarchy by using a configurable path separator. For example, the blob names MyGroup/MyBlob1 and MyGroup/MyBlob2 imply a virtual level of organization for blobs. The enumeration operation for blobs supports traversing the virtual hierarchy in a manner similar to that of a file system, so that you can return a set of blobs that are organized beneath a group. For example, you can enumerate all blobs organized under MyGroup/.
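The virtual hierarchy can be pictured with a short Python sketch that works on a plain list of blob names. It only models the idea of a prefix plus a path separator; `list_blobs` is a hypothetical name, and the real enumeration is performed by the service.

```python
def list_blobs(names, prefix="", delimiter="/"):
    """Return (blobs, virtual_directories) found directly beneath prefix.

    Hypothetical helper: names is any iterable of full blob names.
    """
    blobs, directories = [], set()
    for name in sorted(names):
        if not name.startswith(prefix):
            continue
        rest = name[len(prefix):]
        head, separator, _ = rest.partition(delimiter)
        if separator:
            # Something deeper exists: report only the next virtual level.
            directories.add(prefix + head + delimiter)
        else:
            blobs.append(name)
    return blobs, sorted(directories)
```

Calling it with the prefix `MyGroup/` returns only the blobs organized beneath that group, while calling it without a prefix reports `MyGroup/` as a virtual directory.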

Block Blobs can be created in one of two ways. Block blobs less than or equal to 64 MB in size can be uploaded by calling the Put Blob operation. Block blobs larger than 64 MB must be uploaded as a set of blocks, each of which must be less than or equal to 4 MB in size. A set of successfully uploaded blocks can be assembled in a specified order into a single contiguous blob by calling Put Block List. The maximum size currently supported for a block blob is 200 GB.
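The size rules above can be sketched as a small planning function in Python. This is an illustration under stated assumptions, not the storage client's code: `plan_upload` is a hypothetical helper, and the block IDs use a fixed-width counter encoded as Base64, since the REST API expects Base64 block IDs of equal length within a blob.

```python
import base64

MAX_SINGLE_PUT = 64 * 1024 * 1024  # Put Blob limit quoted in the text
MAX_BLOCK_SIZE = 4 * 1024 * 1024   # per-block limit quoted in the text


def plan_upload(size: int, block_size: int = MAX_BLOCK_SIZE):
    """Return [] when one Put Blob call suffices, else (block_id, offset, length) tuples."""
    if size <= MAX_SINGLE_PUT:
        return []
    if not 0 < block_size <= MAX_BLOCK_SIZE:
        raise ValueError("block_size must be between 1 byte and 4 MB")
    blocks = []
    for index, offset in enumerate(range(0, size, block_size)):
        # Fixed-width counter so that every Base64 block ID has the same length.
        block_id = base64.b64encode(f"{index:06d}".encode("ascii")).decode("ascii")
        blocks.append((block_id, offset, min(block_size, size - offset)))
    return blocks
```

The returned list is already in the order in which the block IDs would be committed with Put Block List.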

Page blobs can be created and initialized with a maximum size by invoking the Put Blob operation. To write contents to a page blob, you can call the Put Page operation. The maximum size currently supported for a page blob is 1 TB.

Blobs support conditional update operations that may be useful for concurrency control and efficient uploading. For more information about the Blob service, see the following topics:

- “Understanding Block Blobs and Page Blobs” on MSDN.

- "Blob Service Concepts" on MSDN.

- “Blob Service API” on MSDN.

- “Windows Azure Storage Client Library” on MSDN.

Queue Service

The Queue service provides reliable, persistent messaging between on-premises and cloud applications and among different roles of the same Windows Azure application. The REST API for the Queue service exposes two types of entities: Queues and Messages. Queues support user-defined metadata in the form of name-value pairs specified as headers on a request operation. Each storage account may have an unlimited number of message queues, each identified by a unique name within the account. Each message queue may contain an unlimited number of messages. The maximum size for a message is limited to 8 KB.

When a message is read from the queue, the consumer is expected to process the message and then delete it. After the message is read, it is made invisible to other consumers for a specified interval. If the message has not yet been deleted at the time the interval expires, its visibility is restored, so that another consumer may process it.

For more information about the Queue service, see the following topics:

- “Queue Service Concepts” on MSDN.

- “Queue Service API” on MSDN.

- “Windows Azure Storage Client Library” on MSDN.

Table Service

The Table service provides structured storage in the form of tables. The Table service supports a REST API that is compliant with the WCF Data Services REST API. Developers can use the .NET Client Library for WCF Data Services to access the Table service. Within a storage account, a developer can create one or multiple tables. Each table must be identified by a unique name within the storage account.

Tables store data as entities. An entity is a collection of named properties and associated values. Tables are partitioned to support load balancing across storage nodes. Each entity has as its first property a partition key that specifies the partition the entity belongs to. The second property is a row key that identifies an entity within a given partition. The combination of the partition key and the row key forms a primary key that identifies each entity uniquely within the table.

In any case, a table should be seen more as a .NET Dictionary object than as a table in a relational database. In fact, tables are independent of each other, and the Table service does not provide join capabilities across multiple tables. If you need a full-fledged relational database in the cloud, you should definitely consider adopting SQL Azure. Another characteristic that differentiates the Table service from a table of a traditional database is that the Table service does not enforce any schema. In other words, entities in the same table are not required to expose the same set of properties. However, developers can choose to implement and enforce a schema for individual tables when they implement their solution.

For more information about the Table service, see the following topics:

- “Table Service Concepts” on MSDN.

- “Table Service API” on MSDN.

- “Windows Azure Storage Client Library” on MSDN.

- "Understanding the Table Service Data Model" on MSDN.

- “Querying Tables and Entities” on MSDN.

- “Client Applications of ADO.NET Data Services” on MSDN.
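The dictionary analogy used in the Table service description can be made concrete with a toy model in Python. This is only a sketch of the data model (a composite key and no enforced schema); the `Table` class and its method names are hypothetical and do not correspond to any client library.

```python
class Table:
    """Toy table: entities keyed by (PartitionKey, RowKey), with no fixed schema."""

    def __init__(self):
        self._entities = {}

    def insert(self, partition_key, row_key, **properties):
        key = (partition_key, row_key)
        if key in self._entities:
            # The partition key plus the row key must be unique within the table.
            raise KeyError(f"entity {key} already exists")
        self._entities[key] = dict(properties)

    def get(self, partition_key, row_key):
        return self._entities[(partition_key, row_key)]

    def partition(self, partition_key):
        """Return the entities of one partition, ordered by row key."""
        ordered = sorted(self._entities.items(), key=lambda item: item[0])
        return [props for (pk, _), props in ordered if pk == partition_key]
```

Two entities in the same partition can carry completely different properties, which is exactly what the absence of a schema means in practice.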

Solution

After a slightly long-winded but necessary introduction, we are now ready to dive into some code! When I started to approach Windows Azure, and Storage Services in particular, I searched the internet for good references, prescriptive guidelines, samples, hands-on labs and tools. At the end of this article you can find a list of the most interesting resources I found. During my research, I also ran into a couple of interesting tools for managing data on a Windows Azure storage account. One of them in particular excited my curiosity: the Azure Storage Explorer by Neudesic, available for free on CodePlex. Since the only way to truly learn a new technology is to use it to solve a real problem, I decided to jump into the mud and create a Windows Forms application to handle Windows Azure Storage Services: Blobs, Queues and Tables. The following picture illustrates the architecture of the application:

Let’s dive into the code. I started by creating a helper library called CloudStorageHelper that wraps and extends the functionality supplied by the classes contained in the Windows Azure Storage Client Library. Indeed, there are many samples of such libraries on the internet. For example, on the Windows Azure Code Samples project page on CodePlex you can download a sample client library that provides .NET wrapper classes for REST API operations for Blob, Queue, and Table Storage.

In the following section I’ll go through the most important features of my CloudStorageHelper class and I’ll explain how the smart client application takes advantage of its functionality.

CloudStorageAccount and Configuration

The Windows Azure Storage Client Library contains a class called CloudStorageAccount that you can use to retrieve account information and authentication credentials from the configuration file. In particular, the class exposes a static method called FromConfigurationSetting that creates a new instance of a CloudStorageAccount object from a specified configuration setting. This method should be called only after the SetConfigurationSettingPublisher method has been called to configure the global configuration setting publisher. The code snippet below shows the configuration file of my smart client application:


As you can see, the configuration file contains a custom section called storageAccounts that is used to define the storage accounts to manage. The LocalStorageAccount item contains the connection string for the development storage. The storage emulator is a local account with a well-known name and key. Since the account name and key are the same for all users, you can use a shortcut string format to refer to the storage emulator within a connection string: simply specify UseDevelopmentStorage=true as the value of the connection string. The second element, PaoloStorageAccount, contains the connection string of my storage account. To create a connection string that relies on the default endpoints for the storage service, use the following connection string format. Indicate whether you want to connect to the storage account through HTTP or HTTPS, and replace myAccountName with the name of your storage account and myAccountKey with your account access key:

DefaultEndpointsProtocol=[http|https];AccountName=myAccountName;AccountKey=myAccountKey

For more information on how to define a connection string to a storage account in Windows Azure, see the following topic:

- “How to Configure Connection Strings” on MSDN.
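To show how the two connection string forms described above break down into settings, here is a minimal Python sketch. It is not how CloudStorageAccount parses the string; `parse_connection_string` and `is_development_storage` are hypothetical helpers, and the sample key used with them is a placeholder.

```python
def parse_connection_string(value: str) -> dict:
    """Split 'Name=Value;Name=Value' pairs into a dictionary of settings."""
    settings = {}
    for part in value.split(";"):
        if not part.strip():
            continue  # tolerate a trailing ';'
        # Split on the first '=' only: Base64 account keys usually end with '=' padding.
        name, separator, setting = part.partition("=")
        if not separator:
            raise ValueError(f"malformed setting: {part!r}")
        settings[name.strip()] = setting.strip()
    return settings


def is_development_storage(settings: dict) -> bool:
    """True for the UseDevelopmentStorage=true shortcut form."""
    return settings.get("UseDevelopmentStorage", "").lower() == "true"
```

Splitting on the first `=` only is the one detail worth noting, because an account key is Base64 text and typically contains `=` characters itself.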

At the startup of the application, the constructor of the MainForm creates an instance of the CloudStorageHelper class, then retrieves the storage account data from the configuration file and uses it to initialize the StorageAccounts property. The latter is a Dictionary object where the key contains the name of the storage account as defined in the configuration file, whereas the value contains the corresponding connection string.


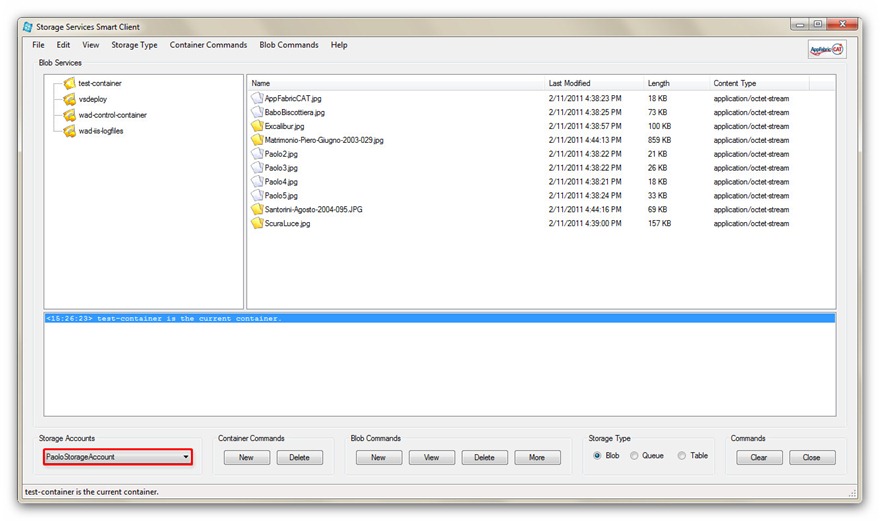

When you launch the application, you can select a storage account from the drop-down list highlighted in red in the picture below:

This action causes the execution of the CreateCloudStorageAccount method exposed by the CloudStorageHelper object that in turn creates an instance of the CloudStorageAccount class using the connection string for the selected storage account.


Once you have selected a storage account, you can use the commands located in the bottom part of the main window or in the menus located in the upper part to decide which type of objects (Blobs, Queues, Tables) to manage. In the following sections I will explain in detail how the smart client uses the functionality exposed by the CloudStorageHelper class to manage the entities contained in the selected storage account.

Containers

When you select Blob as a storage type, the smart client retrieves the list of the containers from the selected storage account and for each of them creates a node in the TreeView located in the left panel of the main window. You can select a container by clicking the corresponding node in the TreeView. When you do so, the ListView in the right panel displays the Block (represented by the white document icon) and Page Blobs (represented by the yellow document icon) located in the selected container. By using the New or Delete buttons in the Container Commands group or the corresponding menu items in the Container Commands menu, you can respectively create a new container or delete the selected container.

In particular, in order to create a new container, you have to perform the following steps:

- Press the New button within the Container Commands group or select the New menu item from the Container Commands menu.

- Enter a name for the new container in the New Container dialog box.

- Specify the accessibility (Public or Private) for the new container.

The table below includes the methods exposed by the CloudStorageHelper class that can be used to list, create or delete containers.


Blobs

When the current Storage Type is set to Blob, the ListView in the right panel displays the Block (represented by the white document icon) and Page Blobs (represented by the yellow document icon) located in the current container. You can manage Blob objects by using the buttons contained in the Blob Commands group or the items contained in the Blob Commands menu, both highlighted in red in the image below.

As illustrated in the picture below, when you click the New button, the smart client allows you to select and upload multiple files from a local folder to the selected container of the current storage account.

The Upload Files dialog box allows you to select a Blob type (Block or Page) and an execution mode (Parallel or Sequential). When you select the Parallel execution mode, the smart client will start a separate, concurrent Task for each file to upload. Conversely, when you select the Sequential execution mode, the smart client will wait for the completion of the current upload before starting the transfer of the next file. When you click the OK button, the smart client starts uploading blobs and a dialog box shows the progress bar for each file being transferred.

You can also click one or multiple blobs in the ListView and press the Delete button to remove the selected items from the current container, or press the View button to download the selected blobs to a local folder. In the latter case, the Download Files dialog box allows you to select an execution mode, Parallel or Sequential.

When you press the OK button to confirm the operation, the smart client starts downloading the selected blobs from the storage account to the specified local folder.

To delete one or multiple blobs, you can simply select them from the list and click the corresponding Delete button.

The table below includes the methods exposed by the CloudStorageHelper class that can be used to list, upload, download or delete block and page blobs. Let’s look more closely at the code of the UploadBlob method. It receives the following input parameters:

- A list of BlobFileInfo objects which describe the properties (FileName, ContainerName, BlobType, etc.) of the blobs to transfer.

- The upload execution order: Parallel or Sequential.

- The reference to an event handler to invoke as the upload progresses.

- An Action to execute when the last upload has been completed.

When the value for the execution order is equal to Sequential, the UploadBlob method uses the TaskFactory to create and start a new Task. The latter loops through the blobFileInfoList and starts a new, separate Task for each file to upload to the storage account. Note that the method waits for the completion of the current upload Task before initiating the next transfer. Alternatively, when the value for the execution order is equal to Parallel, the UploadBlob method starts a separate Task for each file to upload without waiting for the completion of the previous transfer. The UploadFile method is used to transfer individual files. It executes a different action based on the blob type, Block or Page. In particular, to handle the upload and download of Page Blobs, I highly customized the code presented in the following article:

- “Using Windows Azure Page Blobs and How to Efficiently Upload and Download Page Blobs” available on the Windows Azure Storage Team Blog.
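The difference between the two execution orders can be sketched in Python with the standard `concurrent.futures` module. This is a simplified analogue rather than the UploadBlob method itself: `upload_all` is a hypothetical function, the `upload_file` callable is supplied by the caller, and unlike the application (which runs the work on background Tasks) this sketch blocks until every transfer has finished.

```python
from concurrent.futures import ThreadPoolExecutor


def upload_all(file_names, upload_file, mode="Sequential", on_completed=None):
    """Run upload_file(name) for every file, one at a time or concurrently."""
    names = list(file_names)
    if mode == "Sequential":
        # Each transfer finishes before the next one starts.
        results = [upload_file(name) for name in names]
    elif mode == "Parallel":
        # One worker per file, loosely mirroring "a separate Task for each file".
        with ThreadPoolExecutor(max_workers=max(1, len(names))) as pool:
            results = list(pool.map(upload_file, names))
    else:
        raise ValueError(f"unknown execution mode: {mode}")
    if on_completed is not None:
        on_completed()  # invoked once, after the last upload has completed
    return results
```

In both modes the results come back in the order of the input list; only the moment at which each transfer starts differs.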

In any case, my UploadFile method creates a FileStream object to read the contents of the file to upload and wraps it with a ProgressStream object. Then the code assigns the progressChangedHandler event handler to the ProgressChanged event exposed by the ProgressStream object. This allows the smart client to be notified as the file upload progresses and accordingly update the corresponding progress bar. Note that the UploadFile method uses the UploadFromStream method of the CloudBlockBlob class to upload the file to a Block Blob. Indeed, the CloudBlockBlob class provides several methods to upload a file to the cloud:

- PutBlock: this method can be used to upload a block for future inclusion in a block blob. A block may be up to 4 MB in size. After you have uploaded a set of blocks, you can create or update the blob on the server from this set by calling the PutBlockList method. Each block in the set is identified by a block ID that is unique within that blob. Block IDs are scoped to a particular blob, so different blobs can have blocks with the same IDs.

- UploadByteArray: this method uploads an array of bytes to a block blob. This method is inherited from the CloudBlob base class.

- UploadFile: this method can be used to upload a file from the file system to a block blob. This method is inherited from the CloudBlob base class.

- UploadFromStream: this is the method I used in my code. It allows you to upload any stream to a block blob. This method is inherited from the CloudBlob base class.

- UploadText: this method can be used to upload a string of text to a block blob. This method is inherited from the CloudBlob base class.

At this point, maybe you are asking yourself why I created my own UploadFile method instead of using the one provided out of the box by the StorageClient API. The answer is quite straightforward: I wanted to control the file upload/download process and visualize its status at runtime with a progress bar.
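The idea of wrapping the source stream so that every read reports progress can be sketched in Python as follows. This is an analogue of the approach, not the ProgressStream class from the application: the class body, `copy_with_progress` and `demo` are assumptions written for this example, and the callback simply receives an integer percentage.

```python
import io


class ProgressStream:
    """Wrap a readable binary stream and report the cumulative percentage read."""

    def __init__(self, inner, total_bytes, progress_changed):
        self._inner = inner
        self._total = total_bytes
        self._read_so_far = 0
        self._progress_changed = progress_changed

    def read(self, size=-1):
        data = self._inner.read(size)
        if data:
            self._read_so_far += len(data)
            percent = 100 if self._total == 0 else self._read_so_far * 100 // self._total
            self._progress_changed(percent)  # e.g. update a progress bar
        return data


def copy_with_progress(source, destination, total_bytes, progress_changed, chunk_size=4):
    """Copy source to destination in chunks, notifying the callback after each read."""
    stream = ProgressStream(source, total_bytes, progress_changed)
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        destination.write(chunk)


def demo():
    """Copy ten bytes in chunks of four and collect the reported percentages."""
    source, destination, seen = io.BytesIO(b"0123456789"), io.BytesIO(), []
    copy_with_progress(source, destination, 10, seen.append)
    return destination.getvalue(), seen
```

Because the consumer only sees an object with a `read` method, the same wrapper works no matter which upload routine ends up draining the stream.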

When the current blob type is Page, the UploadFile method uses the WritePages method of the CloudPageBlob class to write a sequential set of pages of up to 4 MB. Bear in mind that Page Blobs consist of an array of pages; the minimum page size is 512 bytes, and a single write can cover any multiple of 512 bytes up to 4 MB. This also means that writes must align to page boundaries, and hence page offsets must be multiples of 512 bytes; otherwise an exception is thrown. The CloudPageBlob class also exposes multiple methods to upload a file to the cloud:

- WritePages: this is the method I used in my code. It writes a sequential set of pages to a page blob.

- UploadByteArray: this method uploads an array of bytes to a page blob. This method is inherited from the CloudBlob base class.

- UploadFile: this method can be used to upload a file from the file system to a page blob. This method is inherited from the CloudBlob base class.

- UploadFromStream: this method allows you to upload any stream to a page blob. This method is inherited from the CloudBlob base class.

- UploadText: this method can be used to upload a string of text to a page blob. This method is inherited from the CloudBlob base class.
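The 512-byte alignment rule for page blobs comes down to a little arithmetic, sketched here in Python. The helper names are hypothetical; the sketch only computes the padded size and the aligned (offset, count) ranges that a sequence of page writes of at most 4 MB would cover.

```python
PAGE_SIZE = 512
MAX_WRITE = 4 * 1024 * 1024  # largest range written in one call, per the text


def aligned_size(length: int) -> int:
    """Round a byte count up to the next multiple of 512."""
    return (length + PAGE_SIZE - 1) // PAGE_SIZE * PAGE_SIZE


def page_ranges(length: int, max_write: int = MAX_WRITE):
    """Yield (offset, count) pairs covering the padded length in aligned chunks."""
    if max_write <= 0 or max_write % PAGE_SIZE:
        raise ValueError("max_write must be a positive multiple of 512")
    total = aligned_size(length)
    for offset in range(0, total, max_write):
        yield offset, min(max_write, total - offset)
```

A file whose length is not a multiple of 512 therefore needs its final chunk padded (for example with zero bytes) before it is written, and the page blob must be created with the padded size.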

The DownloadBlob method follows the same logic and implementation schema as the UploadBlob method. In particular, the DownloadFile method uses the DownloadToStream method of the CloudBlockBlob class to download the contents of a Block Blob to a file on the file system, whereas it uses a BlobStream object to read the contents of a Page Blob. Once again, the CloudBlockBlob and CloudPageBlob classes offer multiple methods for retrieving the contents of a Block Blob and a Page Blob, respectively. For more information on this topic, see the documentation for the Windows Azure Storage Client Library on MSDN.


To control the degree of parallelism, you can use the ParallelOperationThreadCount property of the CloudBlobClient class. As a rule of thumb, you should specify a value that is equal to the number of logical processors. Irrespective of the number of logical or physical processors, when using the Azure development store on my local machine to test the transfer of blob files, I found out that when trying to upload or download multiple files at once, the data transfer remained pending and finally failed. When I set the value of the ParallelOperationThreadCount property to 1, the problem disappeared. That’s why the smart client by default sets the value of the latter property to 1 when using the local development storage account.

In any case, you can specify a default value for the ParallelOperationThreadCount property in the configuration file of the smart client, or you can define a new value at runtime by clicking the Settings menu item.
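The selection logic described above (an explicit setting if one is given, otherwise 1 for development storage, otherwise the number of logical processors) can be written down as a short Python sketch. The function name and the precedence given to the configured value are assumptions made for this example, not the application's actual code.

```python
import os


def choose_thread_count(connection_string: str, configured=None) -> int:
    """Pick a degree of parallelism for blob transfers (hypothetical helper)."""
    if configured is not None:
        return max(1, configured)  # assumption: an explicit setting always wins
    normalized = connection_string.replace(" ", "").lower()
    if "usedevelopmentstorage=true" in normalized:
        return 1  # parallel transfers stalled against the local development storage
    return os.cpu_count() or 1  # rule of thumb: one thread per logical processor
```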

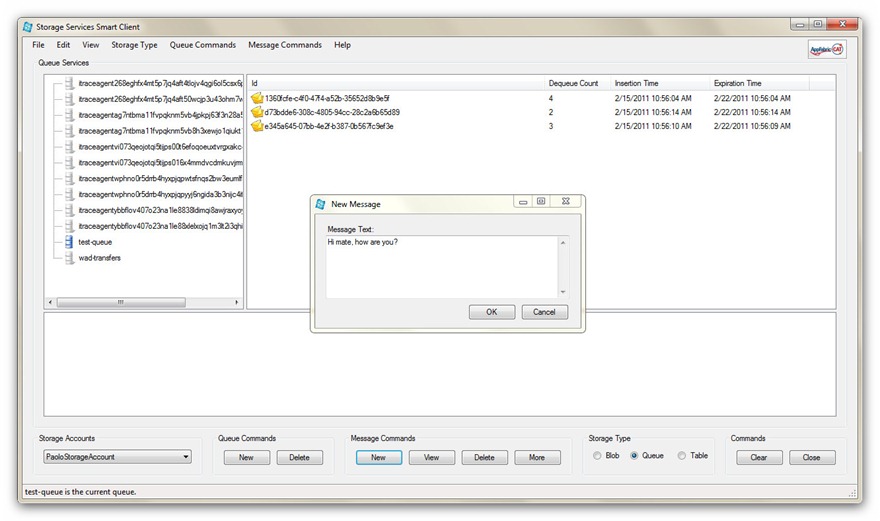

Queues

When you select Queue as a storage type, the smart client retrieves the list of the queues from the selected storage account and for each of them creates a node in the TreeView located in the left panel of the main window. You can select a specific queue by clicking the corresponding node in the TreeView. When you do so, the ListView in the right panel displays the messages contained in the selected queue. By clicking the New or Delete buttons in the Queue Commands group or selecting the corresponding menu items in the Queue Commands menu, you can respectively create a new queue or delete the currently selected queue.

In particular, to create a new queue, you have to perform the following steps:

- Press the New button within the Queue Commands group or select the New menu item from the Queue Commands menu.

- Enter a name for the new queue in the New Queue dialog box.

The table below includes the methods exposed by the CloudStorageHelper class that can be used to list, create or delete queues.


Messages

When the current Storage Type is set to Queue, the ListView in the right panel displays the messages within the current queue. You can manage messages by using the buttons contained in the Message Commands group or the items contained in the Message Commands menu, both highlighted in red in the image below.

As shown in the picture below, when you click the New button, the smart client allows you to create and send a new text message to the current queue.

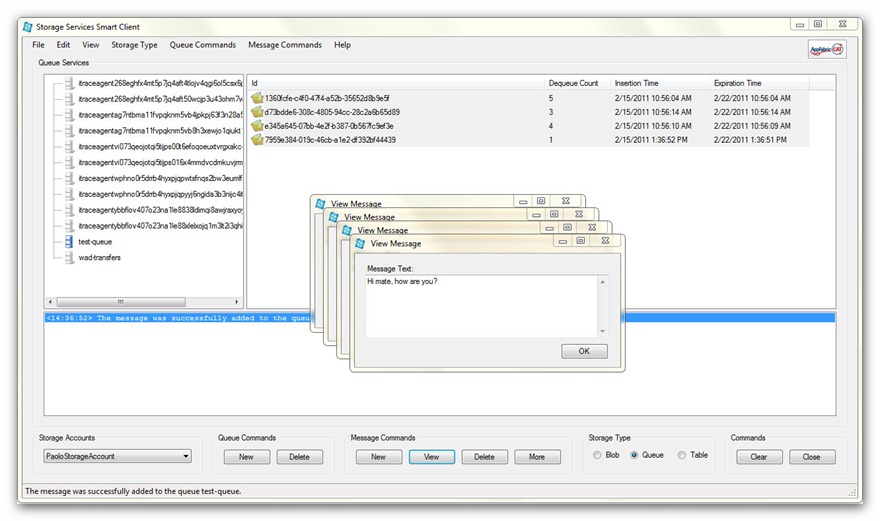

You can retrieve the content of one or more messages. To do so, select one or multiple messages from the list and click the View button.

The click event handler invokes the ListMessages method on the CloudStorageHelper object, which in turn executes the GetMessages method of the CloudQueue class to retrieve messages from the current queue. The GetMessages method retrieves a specified number of messages from the queue. The maximum number of messages that may be retrieved with a single call is 32.

The GetMessages and GetMessage methods do not remove messages from the queue. Hence, after a client retrieves a message by calling the GetMessage or GetMessages method, the client is expected to process and explicitly delete the message by invoking the DeleteMessage method on the CloudQueue object. When a message is retrieved, its PopReceipt property is set to an opaque value that indicates that the message has been read. The value of the message's pop receipt is used to verify that the message being deleted is the same message that was read. After a client retrieves a message, that message is reserved for deletion until the date and time indicated by the message's NextVisibleTime property, and no other client may retrieve the message during that time interval. If the message is not deleted before the time specified by the NextVisibleTime property, it becomes visible to other clients again. If the message is not subsequently retrieved and deleted by another client, the client that retrieved it can still delete it. If another client does retrieve it, then the first client can no longer delete it.

The CloudQueue class exposes two additional methods called PeekMessage and PeekMessages that allow you to retrieve one or multiple messages from the queue without consuming them and without changing their visibility. In other words, a message retrieved with the PeekMessage or PeekMessages method remains available to other clients until a client retrieves it with a call to the GetMessage or GetMessages method. The call to PeekMessage does not update the message's PopReceipt value, so the message cannot subsequently be deleted. Additionally, calling PeekMessage does not update the message's NextVisibleTime or DequeueCount properties. As a consequence, I used the GetMessages method instead of the PeekMessages method to read messages from the current queue. In fact, this allows you to retrieve and then delete messages from the current queue.
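The interplay of get, peek, delete, pop receipts and visibility described above can be modelled with a small in-memory queue in Python. This is a toy simulation under stated assumptions, not the CloudQueue class: `ToyQueue` and its method names are hypothetical, time is passed in explicitly as `now` (in seconds) to keep the behaviour deterministic, and the pop receipt is just a random token.

```python
import itertools
import uuid


class ToyQueue:
    """In-memory model of get/peek/delete with a visibility timeout."""

    def __init__(self):
        self._messages = []              # kept in insertion order
        self._ids = itertools.count(1)

    def add_message(self, text):
        self._messages.append({"id": next(self._ids), "text": text,
                               "next_visible": 0.0, "pop_receipt": None,
                               "dequeue_count": 0})

    def get_message(self, now, visibility_timeout=30.0):
        for message in self._messages:
            if message["next_visible"] <= now:
                message["next_visible"] = now + visibility_timeout  # hide it
                message["pop_receipt"] = uuid.uuid4().hex  # new receipt per retrieval
                message["dequeue_count"] += 1
                return dict(message)
        return None

    def peek_message(self, now):
        for message in self._messages:
            if message["next_visible"] <= now:
                # No pop receipt is handed out and no state changes.
                return {"id": message["id"], "text": message["text"]}
        return None

    def delete_message(self, message_id, pop_receipt):
        for index, message in enumerate(self._messages):
            if message["id"] == message_id:
                if pop_receipt is None or message["pop_receipt"] != pop_receipt:
                    raise ValueError("pop receipt does not match the latest retrieval")
                del self._messages[index]
                return
        raise KeyError(message_id)
```

In this model a client that lets the visibility interval expire loses the right to delete the message as soon as another client retrieves it, because the second retrieval replaces the pop receipt.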

To delete one or multiple messages, you can simply select them from the list and click the Delete button.

The table below includes the methods exposed by the CloudStorageHelper class that can be used to list, create, view or delete messages.


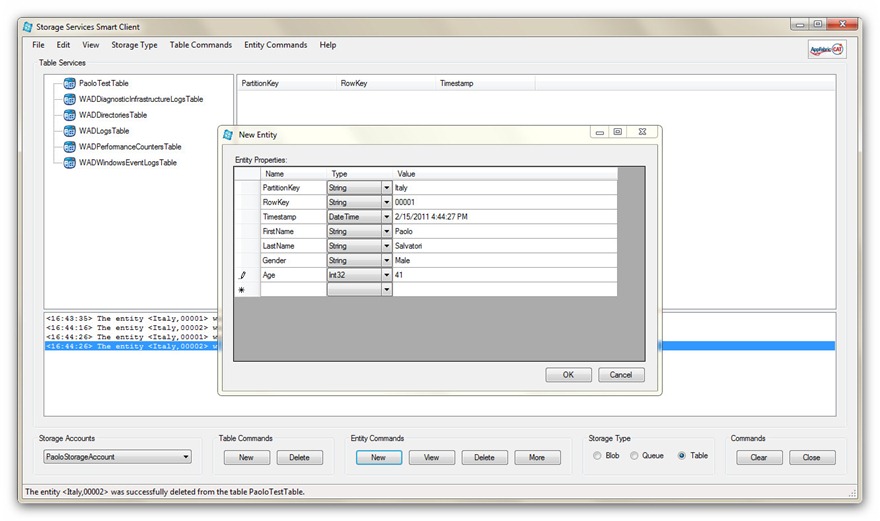

Tables

When you select Table as a storage type, the smart client retrieves the list of the tables from the selected storage account and for each of them creates a node in the TreeView located in the left panel of the main window. You can select a specific table by clicking the corresponding node in the TreeView. When you do so, the ListView in the right panel displays the entities contained in the current table. By clicking the New or Delete buttons in the Table Commands group or selecting the corresponding menu items in the Table Commands menu, you can respectively create a new table or delete the currently selected table.

In particular, to create a new table, you have to perform the following steps:

- Press the New button within the Table Commands group or select the New menu item from the Table Commands menu.

- Enter a name for the new table in the New Table dialog box.

The table below includes the methods exposed by the CloudStorageHelper class that are used by the smart client to list, create or delete tables.


Entities

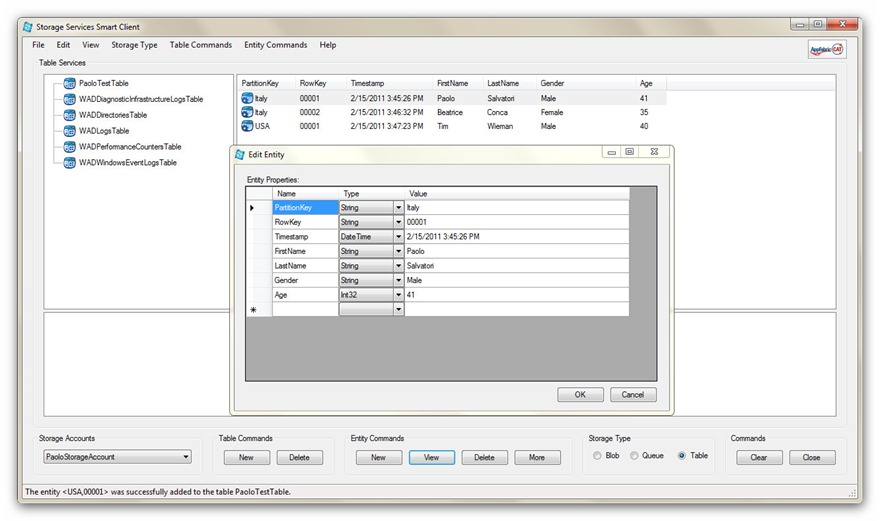

When the current Storage Type is set to Table, the ListView in the right panel displays the entities contained in the current table. You can manage these objects by using the buttons contained in the Entities Commands group or the items contained in the Entities Commands menu, both highlighted in red in the image below.

As shown in the picture below, when you click the New button, the smart client allows you to create and edit a new entity. Entities can be seen as a collection of name-value pairs. Hence, the New Entity dialog box allows you to perform the following actions:

- Specify a value for the PartitionKey and RowKey. These properties are mandatory as they represent the primary key of the new entity in the current table.

- Define a value for the Timestamp.

- Define the name, type and value for entity properties.

You can also select an existing entity and press the View button to display and edit its properties. You can also use this operation to add a new property to a pre-existing entity.

Finally, you can select one or multiple entities from the corresponding list and press the Delete button to remove them from the table.

In order to model generic, untyped entities, I created a class called CustomEntity that inherits from the TableServiceEntity class, which represents an entity in the Windows Azure Table service. The CustomEntity class uses a public collection of type Dictionary<string, object> to contain the properties of an entity. Originally, I wanted to use a DynamicObject to model generic entities, but the DataServiceContext class, which is the base class of the TableServiceContext class, does not support dynamic objects, so I had to change strategy.

When you select a table, its entities are divided into pages of fixed size. You can specify the page size along with other parameters in the appSettings section of the configuration file or at runtime by clicking the Settings menu item under the Edit menu, as shown in the picture below.

When the table contains additional results, the following message will appear in the status bar:

- There are additional results to retrieve. Click the More button to retrieve the next set of results.

As suggested by the message, you can click the More button to retrieve the next set of entities. Under the covers, the ListEntities method of the CloudStorageHelper class uses a ResultContinuation object to keep a continuation token and continue a query until all results have been retrieved.
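The continuation pattern behind the More button can be sketched in Python as a function that returns one page plus a token for the next call. This is only an illustration: `query_segment` and `list_all` are hypothetical names, and the token here is a plain list index, whereas the real continuation token is an opaque value supplied by the service.

```python
def query_segment(entities, page_size, continuation_token=None):
    """Return (page, next_token); next_token is None when no results remain."""
    start = continuation_token or 0
    end = start + page_size
    page = entities[start:end]
    next_token = end if end < len(entities) else None
    return page, next_token


def list_all(entities, page_size):
    """Follow continuation tokens until no more results are reported."""
    token, results = None, []
    while True:
        page, token = query_segment(entities, page_size, token)
        results.extend(page)
        if token is None:
            return results
```

A user interface would call `query_segment` once per click and keep the returned token between clicks, instead of looping as `list_all` does.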

Another interesting feature provided by the smart client is the ability to submit queries against a Windows Azure table. The REST API for the Table service is available at the table and entity levels of the hierarchy. The Table service API is compatible with the WCF Data Services REST API, and the Windows Azure Storage Client Library provides a collection of classes that allow you to create and submit a query against a particular table to retrieve a subset of data. In order to create and execute a CloudTableQuery<T> object, you can proceed as follows:

- You start by creating an instance of the TableServiceContext class. The latter inherits from the DataServiceContext class, which along with the DataServiceQuery class allows you to execute queries against a data service. For more information on this topic, see “[Querying the Data Service (WCF Data Services)](https://msdn.microsoft.com/en-us/library/dd673933.aspx)” on MSDN.

- Then you can use the CreateQuery<T>() method of the DataServiceContext class to create an object of type DataServiceQuery<T>, where T is the type of the entities returned by the query.

- At this point you can use the AddQueryOption method to add options to the DataServiceQuery<T> object. In particular, you can specify a where condition that will be added to the absolute URI of the table. This allows you to identify a subset of the entries from the collection of entities identified by the URI of the table. For more information on this topic, see “Query Entities” on MSDN and “OData: URI Conventions” on the OData site.

- Next, you can use the AsTableServiceQuery() extension method of the TableServiceExtensionMethods class to create a CloudTableQuery<T> object from an IQueryable<T> object, where T is the type of the entities returned by the query.

- Finally, you can use the synchronous Execute method or the asynchronous BeginExecuteSegmented method to run the query against the Windows Azure table. For more information on this technique, look at the code of the QueryEntities method in the table below.
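What adding a query option amounts to at the HTTP level can be sketched in Python: each option becomes a name/value pair appended to the URI of the table. This models the idea only; the `TableQuery` class is hypothetical, it follows the `[TableName]?$filter=[Query]` form shown earlier in this article, and it is not the WCF Data Services client.

```python
from urllib.parse import quote


class TableQuery:
    """Collects OData query options and renders them onto a table URI."""

    def __init__(self, table_uri):
        self._table_uri = table_uri
        self._options = []

    def add_query_option(self, name, value):
        # Returns a new query object, loosely mirroring the chaining style of AddQueryOption.
        clone = TableQuery(self._table_uri)
        clone._options = self._options + [(name, str(value))]
        return clone

    def request_uri(self):
        if not self._options:
            return self._table_uri
        # Option values are percent-encoded; option names such as $filter are kept as-is.
        pairs = "&".join(f"{name}={quote(value, safe='')}" for name, value in self._options)
        return f"{self._table_uri}?{pairs}"
```

For instance, adding a `$filter` option with the value `PartitionKey eq 'Italy'` produces a URI whose query string carries that condition in encoded form, which is what restricts the result to a subset of the entities in the table.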

For more information on this technique, see the following topic:

As shown in the picture below, to execute a query against the current table, you can select the Query menu item under the Entities Commands menu, enter a query in the dialog box that pops up, and finally press the OK button.

The following table contains the methods exposed by the CloudStorageHelper class that can be used to list, create, view, update, or delete entities. For more information, I suggest that you download and examine the source code of the application. You can find the link to the code at the end of the article.

Conclusions

I created the application described in this article to gain experience with Azure Storage Services and to try different approaches to solving common problems when managing Blobs, Queues and Tables. Here you can download the code. I hope it will be useful to you and help you solve any problems that you may encounter while developing your applications for the Windows Azure platform.

References

For more information about Storage Services, you can review the following articles:

Storage Services

- "Exploring Windows Azure Storage" on MSDN.

- "Windows Azure Code Samples" on CodePlex.

- "Windows Azure Storage Architecture Overview" on Windows Azure Storage Team Blog.

Storage Account

- "Azure Storage Client v1.0" on Neil Mackenzie's Blog.

- "SetConfigurationSettingPublisher() – Azure Storage Client v1.0" on Neil Mackenzie's Blog.

Blobs

- "Blobs – Azure Storage Client v1.0" on Neil Mackenzie's Blog.

- "Access Control for Azure Blobs" on Neil Mackenzie's Blog.

- "Using Windows Azure Page Blobs and How to Efficiently Upload and Download Page Blobs" on Windows Azure Storage Team Blog.

Queues

- "Queues – Azure Storage Client v1.0" on Neil Mackenzie's Blog.

Tables

- "Entities in Azure Tables" on Neil Mackenzie's Blog.

- "Tables – CloudTableClient – Azure Storage Client v1.0" on Neil Mackenzie's Blog.

- "More on Azure Tables – Azure Storage Client v1.0" on Neil Mackenzie's Blog.

- “Queries in Azure Tables” on Neil Mackenzie's Blog.

- "Partitions in Windows Azure Table" on Neil Mackenzie's Blog.

- “Paging with Windows Azure Table Storage” on Scott Densmore’s Blog.

- “Table Storage Backup & Restore for Windows Azure” on CodePlex.

- "Protecting Your Tables Against Application Errors" on Windows Azure Storage Team Blog.

Links

- “Azure Links and Some Cloudy Links” on Neil Mackenzie's Blog.

Webcasts

- “Cloud Cover” on Channel9.

Comments

Anonymous

February 23, 2011

This looks like a nice addition to the tooling support. Your custom application looks very useful. Some of the challenge of working with table storage and the blobs is just in visualizing what is there. I know the Azure MMC (archive.msdn.microsoft.com/windowsazuremmc) provided some of this capability too. Thanks,

Anonymous

February 24, 2011

Quick note, your link to the Blob service API is broken. It is msdn.microsoft.com/.../dd135733.aspx should be msdn.microsoft.com/.../dd135733.aspx (dropped the - in the language)

Anonymous

February 24, 2011

Hi Don, thanks for the heads-up! I fixed the link! Ciao, Paolo

Anonymous

October 25, 2011

Hi Angel0in, thanks for the positive feedback! Indeed, I developed a similar tool for the AppFabric Service Bus that I'll publish soon here or on MSDN. In that tool, you can specify the connection string in the configuration file, but there is also a Connect Form that lets you specify the Service Bus namespace and credentials on the fly, without the need to persist them in the configuration file. Time permitting, I could modify the Storage Services Smart Client to introduce the same feature, or you could customize my code and do it yourself. ;-) To answer your question, you can avoid using the CloudStorageAccount.SetConfigurationSettingPublisher method and specify account credentials as a parameter in the public constructor of the CloudStorageAccount class. For more information see msdn.microsoft.com/.../gg435946.aspx.