[Service Fabric] SF node fails to read DNS configuration: diagnosis and fix

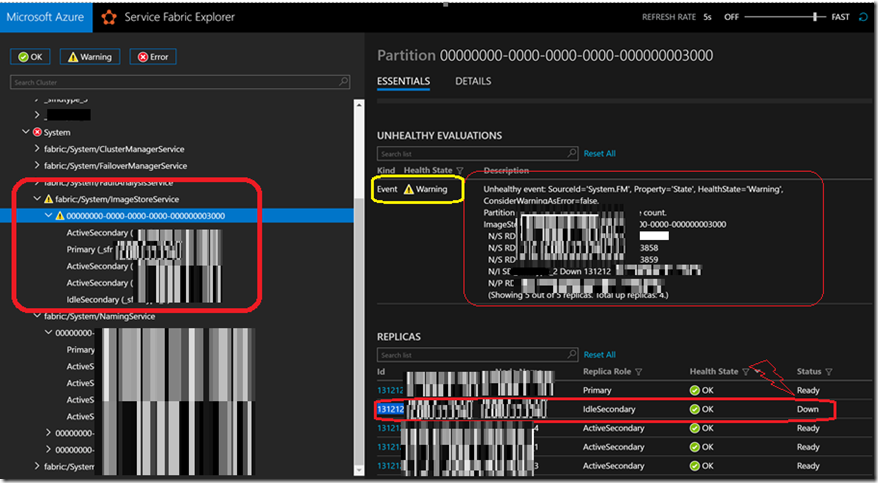

Recently, a Service Fabric developer reported that the ImageStoreService (ISS) in his Azure Service Fabric cluster displayed a warning because one of its secondary nodes was down. The node went down suddenly, without any change to the cluster or the application. In the SF Explorer portal, we noticed a brief warning message saying that the node was down due to an unhealthy event.

SF Explorer - warning

Error message

Unhealthy event: SourceId='System.PLB', Property='ServiceReplicaUnplacedHealth_Secondary_00000000-0000-0000-0000-000000003000', HealthState='Warning', ConsiderWarningAsError=false.

The Load Balancer was unable to find a placement for one or more of the Service's Replicas:

ImageStoreService Secondary Partition 00000000-0000-0000-0000-000000003000 could not be placed, possibly, due to the following constraints and properties:

TargetReplicaSetSize: 5

Placement Constraint: NodeTypeName==sf**type

Depended Service: ClusterManagerServiceName

Constraint Elimination Sequence:

ReplicaExclusionStatic eliminated 3 possible node(s) for placement -- 2/5 node(s) remain.

PlacementConstraint + ServiceTypeDisabled/NodesBlockListed eliminated 0 + 1 = 1 possible node(s) for placement -- 1/5 node(s) remain.

ReplicaExclusionDynamic eliminated 1 possible node(s) for placement -- 0/5 node(s) remain.

Nodes Eliminated By Constraints:

ReplicaExclusionStatic -- No Colocations with Partition's Existing Secondaries/Instances:

FaultDomain:fd:/1 NodeName:_sf**type_1 NodeType:sf**type NodeTypeName:sf**type UpgradeDomain:1 UpgradeDomain: ud:/1 Deactivation Intent/Status: None/None

FaultDomain:fd:/4 NodeName:_sf**type_4 NodeType:sf**type NodeTypeName:sf**type UpgradeDomain:4 UpgradeDomain: ud:/4 Deactivation Intent/Status: None/None

FaultDomain:fd:/3 NodeName:_sf**type_3 NodeType:sf**type NodeTypeName:sf**type UpgradeDomain:3 UpgradeDomain: ud:/3 Deactivation Intent/Status: None/None

PlacementConstraint + ServiceTypeDisabled/NodesBlockListed -- PlacementProperties must Satisfy Service's PlacementConstraint, and Nodes must not have had the ServiceType Disabled or be BlockListed due to Node's Pause/Deactivate Status:

FaultDomain:fd:/2 NodeName:_sf**type_2 NodeType:sf**type NodeTypeName:sf**type UpgradeDomain:2 UpgradeDomain: ud:/2 Deactivation Intent/Status: None/None

ReplicaExclusionDynamic -- No Colocations with Partition's Existing Primary or Potential Secondaries:

FaultDomain:fd:/0 NodeName:_sf**type_0 NodeType:sf**type NodeTypeName:sf**type UpgradeDomain:0 UpgradeDomain: ud:/0 Deactivation Intent/Status: None/None
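The constraint elimination sequence in the message is a running subtraction from the five nodes of the node type. A minimal Python sketch of that arithmetic follows, with the counts copied from the health event above. The function name `remaining_nodes` is only illustrative and is not part of any Service Fabric API.

```python
# Counts copied from the health event above; all names are illustrative only.
TOTAL_NODES = 5
ELIMINATIONS = [
    ("ReplicaExclusionStatic", 3),
    ("PlacementConstraint + ServiceTypeDisabled/NodesBlockListed", 1),
    ("ReplicaExclusionDynamic", 1),
]


def remaining_nodes(total, eliminations):
    """Return the number of candidate nodes left after each constraint."""
    left = total
    history = []
    for name, eliminated in eliminations:
        left -= eliminated
        history.append((name, left))
    return history


for name, left in remaining_nodes(TOTAL_NODES, ELIMINATIONS):
    print(f"{name}: {left}/{TOTAL_NODES} node(s) remain")
```

The last step leaves 0 of 5 candidates, which is why the load balancer reports the secondary replica as unplaced. Node _sf**type_2 is the only node eliminated by the second constraint.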

We noticed the health warning event below.

There was no data from ‘sf**type_2’ after the failure date, so we concluded that both ISS and FabricDCA.exe were crashing on that node.

N/S RD sf**type_3 Up 131212...946953

N/S RD sf**type_1 Up 131212...593463858

N/S RD sf**type_4 Up 13121.....3859

N/I SB sf**type_2 Down 1312.....63860

N/P RD sf**type_0 Up 131212.....61

Event Log

Log Name: Application

Source: Microsoft-Windows-PerfNet

Date: 12/9/2016 7:42:40 AM

Event ID: 2005

Task Category: None

Level: Error

Keywords: Classic

User: N/A

Computer: sf***ype00xxx02

Description:

Unable to read performance data for the Server service. The first four bytes (DWORD) of the Data section contains the status code, the second four bytes contains the IOSB.Status and the next four bytes contains the IOSB.Information.

C0000466 00000000 634A41F0
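The event description explains how to read the three DWORDs in the data section. As a rough illustration, the Python sketch below splits the data line into those three fields and breaks the first one down using the standard NTSTATUS bit layout (severity in the top two bits, facility in bits 16 to 27, code in the low 16 bits). It assumes the line is displayed as DWORD values, as in the event above. The function name `decode_perfnet_data` is hypothetical, and the sketch does not look up the symbolic name of the status code.

```python
def decode_perfnet_data(data_line):
    """Split the PerfNet 2005 data section into its three DWORD fields."""
    status, iosb_status, iosb_information = (
        int(word, 16) for word in data_line.split()
    )
    return {
        "status": status,
        "iosb_status": iosb_status,
        "iosb_information": iosb_information,
        # NTSTATUS layout: bits 30-31 severity, bits 16-27 facility, bits 0-15 code.
        "severity": status >> 30,  # 3 means "error"
        "facility": (status >> 16) & 0xFFF,
        "code": status & 0xFFFF,
    }


fields = decode_perfnet_data("C0000466 00000000 634A41F0")
print(
    f"status=0x{fields['status']:08X} severity={fields['severity']} "
    f"facility={fields['facility']} code=0x{fields['code']:04X}"
)
```

For the data line in this event, the status DWORD has error severity, and the IOSB.Status field is zero.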

Log Name: Microsoft-ServiceFabric/Admin

Source: Microsoft-ServiceFabric

Date: 12/12/2016 11:29:13 AM

Event ID: 59904

Task Category: FabricDCA

Level: Error

Keywords: Default

User: NETWORK SERVICE

Computer: sf***ype00xxx02

Description:

Failed to copy file D:\SvcFab\Log\PerformanceCounters_ServiceFabricPerfCounter\fabric_counters_6361xxxxx47065191_000940.blg to Azure blob account sf***ype00xxx02, container fabriccounters-2b73743xxxxxxa46d111c4d5.

Microsoft.WindowsAzure.Storage.StorageException: The remote name could not be resolved: 'sf***ype00xxx02.blob.core.windows.net' ---> System.Net.WebException: The remote name could not be resolved: 'sf***ype00xxx02.blob.core.windows.net'

at System.Net.HttpWebRequest.GetRequestStream(TransportContext& context)

at System.Net.HttpWebRequest.GetRequestStream()

at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.ExecuteSync[T](RESTCommand`1 cmd, IRetryPolicy policy, OperationContext operationContext)

--- End of inner exception stack trace ---

at Microsoft.WindowsAzure.Storage.Core.Executor.Executor.ExecuteSync[T](RESTCommand`1 cmd, IRetryPolicy policy, OperationContext operationContext)

at Microsoft.WindowsAzure.Storage.Blob.CloudBlockBlob.UploadFromStreamHelper(Stream source, Nullable`1 length, AccessCondition accessCondition, BlobRequestOptions options, OperationContext operationContext)

at FabricDCA.AzureFileUploader.CreateStreamAndUploadToBlob(String sourceFile, CloudBlockBlob destinationBlob)

at FabricDCA.AzureFileUploader.CopyFileToDestinationBlobWorker(FileCopyInfo fileCopyInfo, CloudBlockBlob destinationBlob)

at FabricDCA.AzureFileUploader.CopyFileToDestinationBlob(Object context)

at System.Fabric.Dca.Utility.PerformWithRetries[T](Action`1 worker, T context, RetriableOperationExceptionHandler exceptionHandler, Int32 initialRetryIntervalMs, Int32 maxRetryCount, Int32 maxRetryIntervalMs)

at FabricDCA.AzureFileUploader.CopyFileToDestination(String source, String sourceRelative, Int32 retryCount, Boolean& fileSkipped)

Checklist of what we tried:

- We checked the free space on drive D: of the failing node. It had enough space.

- We were able to RDP into the failing node, but from there we could not browse any website or resolve any URL with nslookup:

- >nslookup sf***ype00xxx02.blob.core.windows.net

- server:unknown

- *** unknown can’t find sf***ype00xxx02.blob.core.windows.net: No response from server

- The logs confirmed that the VM of the failing node had a network-related issue, which is why it could not connect to any of the services or to the storage account.

- There were no fabric logs after the date the issue started, which also confirmed that this VM had lost its connectivity.

- We checked for crash dumps under D:\SvcFab\Log\CrashDumps and found none.

- We checked the traces in D:\SvcFab\Log\Traces, but they gave no hint.
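The nslookup check in the list above can also be scripted. The following is a minimal Python sketch, assuming Python is available on the node. `can_resolve` is a hypothetical helper, and the host name in the closing comment is a placeholder, because the real storage account name is masked in this post.

```python
import socket


def can_resolve(hostname, resolver=socket.getaddrinfo):
    """Return True if the OS resolver returns at least one address."""
    try:
        return len(resolver(hostname, 443)) > 0
    except socket.gaierror:
        # Raised when name resolution fails, as it did on the broken node.
        return False


# Example call (placeholder host name, replace with the cluster's storage endpoint):
# print(can_resolve("<storage-account>.blob.core.windows.net"))
```

The `resolver` parameter exists only so the check can be exercised without network access. On a node in the state described here, the default resolver would be expected to fail for every external name, not just the storage endpoint.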

Fix/resolution:

- Based on all of the findings above, we confirmed that the failing node _sf***ype_2 was not resolving DNS names. This issue occurs very rarely and is caused by corruption at the OS level.

- From the registry, we could see that the node had received the proper DNS settings from the Azure DHCP server.

- The registry value “HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters\DhcpNameServer” was set to “16x.6x.12x.1x”, but the affected machine was not able to read and use this DNS configuration, so name resolution was broken at the operating system level.

- To overcome this issue, we ran “netsh int ip reset c:\reset.log” and “netsh winsock reset catalog” to reset the IP stack and the Windows Sockets catalog, and then rebooted the virtual machine, which resolved the issue.
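The reset steps above can be collected into a small script. This is only a sketch under assumptions: it would have to run from an elevated prompt on the affected Windows VM, the `shutdown /r /t 0` reboot command is an addition here (the fix above only says the VM was rebooted), and the helper name `reset_network_stack` is hypothetical. By default it performs a dry run and only returns the commands.

```python
import subprocess

# The two netsh commands are the ones quoted above; the reboot command is an
# assumption about how the restart could be triggered.
RESET_COMMANDS = [
    ["netsh", "int", "ip", "reset", r"c:\reset.log"],
    ["netsh", "winsock", "reset", "catalog"],
    ["shutdown", "/r", "/t", "0"],
]


def reset_network_stack(dry_run=True):
    """Run the reset commands in order; stop at the first failure."""
    executed = []
    for command in RESET_COMMANDS:
        if not dry_run:
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(command, check=True)
        executed.append(" ".join(command))
    return executed


for line in reset_network_stack(dry_run=True):
    print(line)
```

With `dry_run=False`, a command that exits with a non-zero code stops the loop before the reboot, so the output can be inspected first.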

Reference article: https://support.microsoft.com/en-us/kb/299357

Let me know if this helps in some way.