Getting Started with Log Parser Studio - Part 1

Hopefully, if you are reading this, you already know what Log Parser 2.2 is and that Log Parser Studio is a graphical interface for it. Log Parser Studio (which I will refer to from here on simply as LPS) also contains a library of pre-built queries and features that make Log Parser considerably faster and more useful to work with. If you need to rip through gigabytes of all types of log files and tell a story with the results, Log Parser Studio is the tool for you!

None of this is of much use if you don’t have LPS or don’t know how to get it up and running, but luckily that is exactly what this blog post is about. So let’s get to it: the first thing to do, of course, is to download LPS and its prerequisites. You will need:

- Log Parser Studio (get it here).

- .NET 4.x which can be found here.

- Log Parser 2.2 which is located here.

Once everything is downloaded, install the prerequisites first. Run the installer for Log Parser 2.2 and make sure that you choose the “Complete” install option. The complete option installs logparser.dll, which is the only component of that install that LPS actually requires.

Next, install .NET 4; you can run the web installer as needed. Once it is installed, all that is left is Log Parser Studio itself. Oh snap, LPS doesn’t require an install: just unzip all the files into a folder of your choice and run LPS.exe. Once you have completed these steps, the only thing left is a few basic setup steps in LPS.
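If LPS complains at startup, a quick sanity check is to confirm that the Log Parser DLL actually landed on disk. The following is a minimal sketch and not part of LPS: the two folders are assumed default install locations for Log Parser 2.2, and the helper name `find_logparser_dll` is hypothetical.

```python
from pathlib import Path

# Assumed default install folders for Log Parser 2.2; adjust these if
# you installed it somewhere else.
DEFAULT_DIRS = [
    r"C:\Program Files (x86)\Log Parser 2.2",
    r"C:\Program Files\Log Parser 2.2",
]


def find_logparser_dll(candidate_dirs=DEFAULT_DIRS):
    """Return the path of the first LogParser.dll found, or None."""
    for folder in candidate_dirs:
        dll = Path(folder) / "LogParser.dll"
        if dll.is_file():
            return dll
    return None


if __name__ == "__main__":
    found = find_logparser_dll()
    if found:
        print(f"Found {found}")
    else:
        print("LogParser.dll not found; rerun the installer with the Complete option")
```

If the DLL is missing, rerunning the Log Parser 2.2 installer with the “Complete” option is the first thing to try.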

Setting up the default output directory

LPS (based on the query you are running) may export the results to a CSV, TSV or other file format as part of the query itself. The default location is C:\Users\username\AppData\Roaming\ExLPT\Log Parser Studio. However, it’s probably better to change that path to something you are more familiar with. To set a new default output directory run LPS and go to Options > Preferences and it is the first option at the top:

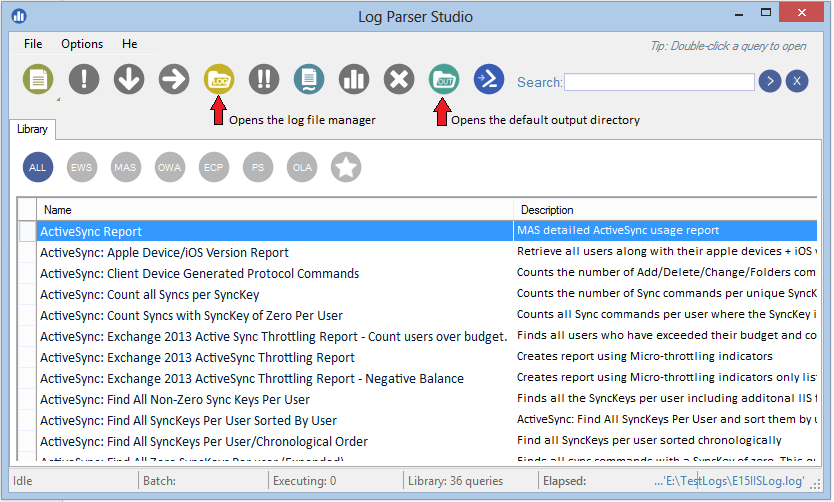

Click the browse button and choose the directory you wish to use as your default output directory. You can always quickly access this folder directly from LPS by clicking the show output directory button in the main LPS window. If you just exported a query to CSV and want to browse to it, just click that button; there is no need to browse manually.
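If you ever need to find the original default location from a script, it can be rebuilt from the roaming AppData folder. This is only an illustration: the function name `default_lps_output_dir` is hypothetical, and it assumes the default path given above (roaming AppData, then ExLPT, then Log Parser Studio).

```python
import os
from pathlib import PureWindowsPath


def default_lps_output_dir(appdata=None):
    """Build the default LPS output folder from the roaming AppData path."""
    if appdata is None:
        # APPDATA is set by Windows; the fallback is just a placeholder.
        appdata = os.environ.get("APPDATA", r"C:\Users\username\AppData\Roaming")
    return PureWindowsPath(appdata) / "ExLPT" / "Log Parser Studio"


print(default_lps_output_dir(r"C:\Users\username\AppData\Roaming"))
```

`PureWindowsPath` is used so the path is assembled with Windows separators even if the script runs on another operating system.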

Choose the log files you wish to query

Next you’ll want to choose the log file(s) you want to query. As in Log Parser 2.2, the following physical log file types are supported: .txt, .csv, .cap, .log, .tsv and .xml. Technically, you can query almost any text-based file; more on that in upcoming articles. To choose your logs, open the log file manager by clicking the orange “log” button shown in the screenshot above.

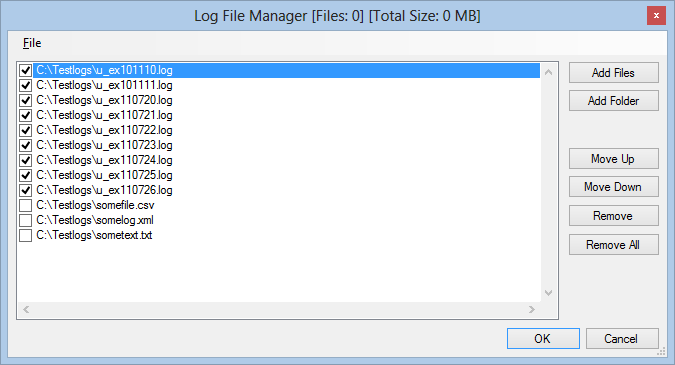

In the log file manager you can choose single files, multiple files or entire folders based on log type. Just browse to the logs you care about. You can keep multiple file types in the log file manager, and only the ones that are checked will be queried. This is very handy if you have multiple log types and need to switch between them quickly without having to browse for them each time.

Note: When adding a folder you need to double-click or select at least one log file. LPS will know that you want all the files and will use wildcards accordingly instead of the single file you selected. If you use the Add Files button then only files you select will be added.
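To picture what the folder behavior in the note means, here is a rough sketch of turning one selected file into a wildcard that covers its folder. The exact pattern LPS builds internally is not documented in this post, so treat the "same extension" rule and the function name `folder_wildcard` as assumptions made for illustration; the sample path is made up as well.

```python
from pathlib import PureWindowsPath


def folder_wildcard(selected_file):
    """Turn one selected log file into a wildcard covering its folder."""
    path = PureWindowsPath(selected_file)
    # Assumption: match every file in the folder that shares the extension
    # of the file that was selected.
    return str(path.parent / f"*{path.suffix}")


# Hypothetical IIS log path used only as sample input.
print(folder_wildcard(r"C:\inetpub\logs\LogFiles\W3SVC1\u_ex130619.log"))
```

With the sample input, the function returns the folder path followed by `*.log`, which is the kind of pattern Log Parser accepts in a FROM clause.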

Running your first query

By this point you are ready to start running queries. All queries are stored in the LPS library, which is the first window you see when opening LPS. To load a query, just double-click it and it will open in its own tab.

The only thing left is to execute the query; to do so, just click the execute query button. If you are wondering why I chose that icon, it’s because Log Parser uses SQL syntax, and this icon has traditionally identified the “run query” button in applications that edit queries, such as SQL Server Management Studio. If you are wondering about the similar button below it with two exclamation points, you might have guessed that it executes multiple queries at once. I'll elaborate in an upcoming post that covers grouping multiple queries together so they can all be executed as a batch.

Here are the results from my test logs after the query has completed:

We can see that the query took about 15 seconds to execute and returned 9963 records. We can also see that there are 36 queries in my test library, zero batches executing and zero queries executing.

Conclusion

And that’s it, you are now up and running with LPS. Just choose your logs, find a query that you want to use and click run query. The only thing you need to be aware of is that each log format requires a matching log type, so make sure those match or you’ll get an error. In other words, the IISW3C format is different from the format of an XML file, and LPS needs to know which one it is dealing with so it can pass the correct information to Log Parser in the background. Thankfully, log types are already set up inside the existing queries; all you need to do is choose an IIS query for IIS logs and so on.
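If you are unsure which log type a file needs, peeking at its first few lines usually settles it. The sketch below is a rough heuristic of my own, not something LPS does: it relies on the `#Software:` and `#Fields:` header lines that W3C-style logs start with, and the function name `guess_log_type` is hypothetical. The log type names it returns are the ones mentioned on this page (IISW3CLOG, W3CLOG, CSVLOG, EVTLOG).

```python
from itertools import islice
from pathlib import Path


def guess_log_type(path, max_header_lines=10):
    """Guess an LPS log type from the extension and the first few lines."""
    path = Path(path)
    ext = path.suffix.lower()
    if ext in (".evt", ".evtx"):
        # Event log files are binary, so do not try to read them as text.
        return "EVTLOG"
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        header = list(islice(handle, max_header_lines))
    # IIS writes its product name into the #Software header line.
    if any(line.startswith("#Software: Microsoft Internet Information Services")
           for line in header):
        return "IISW3CLOG"
    # Other W3C extended logs still carry a #Fields directive.
    if any(line.startswith("#Fields:") for line in header):
        return "W3CLOG"
    if ext == ".csv":
        return "CSVLOG"
    return None  # Unknown: pick the log type by hand in LPS.
```

Calling `guess_log_type` on an IIS log should return `IISW3CLOG`; a result of `None` simply means the heuristic could not tell and the log type has to be chosen manually.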

Almost every button and interface element in LPS has a tooltip explaining what it does, so be sure to hover your mouse cursor over them to find out more. There is also a tips message in the top-right of the main interface that randomly displays how-to tips and tricks. You can press F10 to display a new random tip.

You can also write your own queries, save them to the library, edit existing queries and change log types and all format parameters. There is a huge list of features in LPS, both obvious and not so obvious, so upcoming posts will build on this one and introduce you to the sheer power and under-the-hood tips and tricks that LPS offers. It’s amazing how much can be accomplished once you learn how it all works and that’s what we are going to do next. :)

Continue to the next post in the series: Getting Started with Log Parser Studio - Part 2

Comments

Anonymous

January 01, 2003

Thanks again Kary!

Anonymous

January 01, 2003

The comment has been removed

Anonymous

January 01, 2003

How can I query against the Exchange 2003 message tracking log? I tried log type W3CLOG with no luck (I get an error, see this screenshot: dl.dropboxusercontent.com/.../logParser.png). Thank you

Anonymous

January 01, 2003

Hi Satya,

You might try CTRL+ALT+E, which pulls up any errors logged. It may only display what you saw already but it is worth a try. Next chance I get I'll try it on 2012 R2 and report back, but that "shouldn't" make a difference.

Anonymous

January 01, 2003

I just posted the solution. Please try this method and see if it works for you: blogs.technet.com/.../log-parser-studio-exchange-2003-message-tracking-logs.aspx

Anonymous

January 01, 2003

The comment has been removed

Anonymous

January 01, 2003

Hi. I think Sunshine is asking where we can review the errors if LPS fails to read the log file.

Anonymous

June 19, 2013

Did you try IISW3CLOG instead of W3CLOG? If that doesn't work I will get you a method for 2k3 message tracking logs. I remember there could be special steps for 2003 Message Tracking logs and will test for you and reply as soon as I can.

Anonymous

September 17, 2013

Hi Kary, Thanks for a great tool! Is there a way to access parsing errors through the GUI? Since all rows appear in the output whether they parsed or not, maybe you could add an error column with information about what the error is. Even just dumping it to a text file would be helpful.

Anonymous

September 20, 2013

Hello Sunshine, My apologies... Can you elaborate as I'm afraid I don't quite understand the question. :)

Anonymous

February 26, 2014

Do I need to do a find and replace for Exchange perflog headers? I'm getting a blank result.

Anonymous

February 26, 2014

Hi Wyatt, which perflogs?

Anonymous

February 26, 2014

Exchange CAS and Mailbox server performance logs. It's a binary log converted to CSV. The headers are still intact.

Anonymous

February 26, 2014

Due to the way performance monitor logs are laid out, I don't know of a great way to query them usefully. I'll check something in a few when I get free and reply back. There are certainly ways to query them with CSV and/or TEXTWORD log types but I need to see how useful it is.

Anonymous

February 26, 2014

CSV works. It just requires knowing what you want and how you want it. Choose CSVLOG as the log type then just run the default "new query" to see the basic results:

/* New Query */

SELECT TOP 10 * FROM '[LOGFILEPATH]'

Anonymous

February 26, 2014

The query ran but came back blank. I have a feeling the default headers need to be changed.

Anonymous

February 26, 2014

The comment has been removed

Anonymous

February 26, 2014

Kary, I wanted to thank you for taking the time to help me.

I'm getting a "Log row too long" error when choosing the CSVLOG type. The registry edit of CSVInMaxRowSize has already been done.

Anonymous

February 26, 2014

My pleasure Wyatt. I'm assuming you are using the setting here:

http://blogs.technet.com/b/samdrey/archive/2012/12/11/logparser-or-exrap-tools-log-row-too-long-issue.aspx

I've actually not personally hit that limit yet, so if you can email me a copy of the headers to karywa@ I'll take a look, but it may be tomorrow before I can dig in to see if I can find a good workaround for you. Otherwise, yes, you can create a custom header file, but based on the error you are hitting, I don't see that being anything other than difficult to do.

Anonymous

February 26, 2014

Yes, that's the setting I changed. I'll email you the log headers in a few minutes. Thanks!

Anonymous

February 27, 2014

The recommended changes made a difference. I'm able to see the results of the Exchange logs. I'm only querying a certain category of logs at a time. I still can't query Exchange perflogs though, but that probably has to do with the size of the captured logs.

Thank you very much for the help!

Anonymous

May 22, 2014

I'm getting an error after installing it on Windows 2012 R2. I have tried it on 2 Exchange 2010 SP3 mailbox servers and get the error "Background thread error, one or more errors occurred." Just wondering, is there a .NET version mismatch?

Anonymous

September 25, 2014

Hey all,

I'm back with some more info to empower your Exchange administration! Once you've

Anonymous

November 07, 2014

Hi, I am trying to parse tracking logs on Exchange 2010. No matter what query I try, or if I change the log type to CSV, I get the same error: Error parsing query: select clause: Syntax error: unknown field 'recipient address' record field does not exist. The information inside the quotes changes depending upon what query I use, but the initial error stays the same. Running this on Windows 2008 R2, querying Exchange 2010 v14.3 build 123.4, using UNC paths to log directories on remote servers.

Anonymous

November 15, 2014

This is a fantastic tool. It is making my life a lot easier. Thank you for all your hard work!

Anonymous

February 14, 2015

According to what I've seen online, Log Parser 2.2 supports .evtx files as long as you use -i:EVT. Why is it that Log Parser Studio doesn't seem to support the .evtx file format? I've tried selecting my .evtx files and it won't parse them; it just grabs the default local logs when I try to query the .evtx files I select using the "Choose Log Files/Folders to query" button in LPS. The .evtx format is the native format for EventLog exports and LPS looked SO promising for what I wanted to do until I realized it isn't working with .evtx files... Can you help me with this? Maybe I'm doing something wrong...?

Anonymous

February 14, 2015

Forgot to mention I am selecting/using the "Log Type: EVTLOG" in LPS when I am attempting this... Your help would be immensely appreciated!

Anonymous

February 14, 2015

I believe I've figured out why this wasn't working: the location I was specifying, which was storing my .evtx files, was a mapped network drive. As soon as I copied one of the .evtx files to my local disk it seemed to work fine... Does this make sense? Would there be any specific reason LPS cannot parse .evtx files stored on mapped network drives? If there is not, can you add/fix this functionality? Thanks so much!

Anonymous

February 17, 2015

Hi NoXiDe, I don't see why it wouldn't query the logs over a mapped network drive. A couple items of interest...

LPS can query live event logs such as \\computername\logname, e.g. \\localhost\application or \\remotehost\application

Or as you are using it, read the logs directly from the file system. Were the mapped remote files live or exported? Meaning, if they were the live logs AND you accessed them as a file, it could have been locked by the remote system, but that is a guess not knowing more about the details.

Anonymous

February 17, 2015

@Joseph

If the field has a space then use brackets instead of quotes such as [recipient address]'

This also works when using aliases and custom field names:

Select FieldName AS [My Custom Name]

FROM '[LOGFILEPATH]'

Anonymous

February 17, 2015

Note I mistakenly left a ' at the end of the example above. That is a typo; it should read:

[recipient address]

Anonymous

February 24, 2015

I am trying to run LPS on our web server, but I am getting a .NET runtime error (1000 and 1026). We have LP 2.2 installed and it works. I am not a web system administrator but I have been told we have .NET 2.0 and .NET 4.0 installed on this server. Are there other steps to the installation that I am missing?

Anonymous

April 22, 2015

The comment has been removed

Anonymous

June 10, 2015

One area I do developer support for is EAS development with those who have an EAS client development

Anonymous

October 26, 2015

Hi,

Is LPS checking the registry for the presence of LP? Was wondering if it was possible to check the local folder first? The reason I am interested in this is that on the computers we use, we aren't allowed to make 'administrative changes' (i.e. installs). So I often install on another computer, package up the files and copy them to the restricted computer as a 'portable application'. This usually works, but LPS seems to check the actual registry rather than a config file?

Andrew

Anonymous

January 12, 2016

LP 2.2 Download links everywhere seem broken. I can't find anywhere to actually get LP, which is a prereq for LPS.

Where can Log Parser 2.2 actually be obtained from?!?!

Anonymous

February 08, 2016

Hi

Would like to know whether we can use LP to interpret WebSphere IHS logs?

Anonymous

February 11, 2016

The comment has been removed

Anonymous

March 15, 2016

How do I go about checking the user-agent details? I don't see such a query in the library. Any help? Thanks!

- Anonymous

July 15, 2016

You want to include the cs(user-agent) field in your query. Let me know if you need more details.

- Anonymous

Anonymous

May 09, 2016

Hi,

Is it possible to pass parameters into a query?

- Anonymous

July 15, 2016

Potentially but not directly in LPS. You could (again potentially) export the query as a PowerShell script (File > Export > As PowerShell Script). You could then replace the sections of the query you want to parameterize with PowerShell $variables, then introduce those as parameters in the PowerShell script. You could then pass in the parameters when running the script:

.\MyExportedQuery.ps1 -AutoOpen $true -OutFile C:\Temp\MyReport.CSV -MyCustomParam1 "SomeParm" -MyCustomParam2 "SomeOtherParam"

Obviously this is more efficient for something you may run regularly but not so much for one-offs.

Regards,

Kary

- Anonymous

Anonymous

June 30, 2016

The comment has been removed

- Anonymous

July 15, 2016

This is because Log Parser 2.2 is 32-bit, so we are bound to 32-bit in LPS. Typically what eats up all the heap space (we only have ~2GB) is populating the GUI with the results. Using INTO to send the results to a CSV file therefore bypasses LPS completely. In other words, the underlying native LP 2.2 writes that file directly, saving us the memory usage. I recommend that any time the result set is larger than 50-100k rows, go to CSV.

To address too many rows in the CSV file, you can then query that file as CSVLOG in LPS. That means you wouldn't SELECT * to select all rows; with this many rows we should have an idea of what we are looking for to begin with, which we can narrow down. I say 'narrow down' deliberately, because result sets this large really beg the question of why we need them unless we are going to query them and narrow down further.

- Anonymous

July 19, 2016

The comment has been removed

- Anonymous

November 29, 2016

I think I may have missed this Steve; if so, my apologies. I can't recompile because of the underlying dependency on Log Parser 2.2, which is out of development and 32-bit. That is the single reason LPS is 32-bit and the reason that the only way around the heap limitation is to run the query to CSV (because it bypasses the GUI, and filling the grid is what eats up all the heap memory). If you see this and want to elaborate on the subject, I'm happy to engage.
