Make practical use of NSG Flow Logs for troubleshooting

So, you've found the new Azure Network Watcher features.

Cool, now I can start getting some real information about what's going on in my Azure Network.

In the Azure Portal, the NSG flow logs section of the Network Watcher blade lets you specify, for each NSG (Network Security Group), a Storage Account that receives detailed information on all the traffic hitting that NSG.

Note: The Storage Account must be in the same region as the NSG.

Awesome, right?

Then you try to use that information and find a heap of sub-folders, created per Resource Group and NSG, with a separate file for each hour of logging.
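To see what that nesting looks like, here's a small sketch that splits one blob name into its parts. The sample blob name (subscription ID, `MYRG`, `MYNSG`) is made up and `ConvertFrom-FlowLogBlobName` is just an illustrative helper name. It assumes the path layout the script below relies on, and uses the same index positions.

```powershell
# Sketch only: split one NSG Flow Log blob name into its parts.
# The sample name (subscription ID, MYRG, MYNSG) is made up.
function ConvertFrom-FlowLogBlobName {
    param([string]$BlobName)

    $parts = $BlobName.Split('/')

    # Segments 9..13 look like "y=2017", "m=07", "d=06", "h=02", "m=00"
    # (note "m=" is used for both month and minute). Substring(2) drops the prefix.
    $year, $month, $day, $hour, $minute = $parts[9..13] | ForEach-Object { [int]$_.Substring(2) }

    [pscustomobject]@{
        SubscriptionId = $parts[2]
        ResourceGroup  = $parts[4]   # same index the script uses
        NSG            = $parts[8]   # same index the script uses
        # The folder date/time is UTC, so build the DateTime with Kind = Utc
        HourUtc        = [datetime]::new($year, $month, $day, $hour, $minute, 0, [System.DateTimeKind]::Utc)
    }
}

$sample = 'resourceId=/SUBSCRIPTIONS/00000000-0000-0000-0000-000000000000/RESOURCEGROUPS/MYRG/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/MYNSG/y=2017/m=07/d=06/h=02/m=00/PT1H.json'
ConvertFrom-FlowLogBlobName -BlobName $sample
```

Because the fields are picked out by position, any change to the folder layout shifts every value, which is worth keeping in mind when reading the script.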

Also, the files are all in JSON format, which makes it really hard to find anything specific.
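Each PT1H.json file is several levels deep before you get to the actual traffic. This sketch walks one trimmed, made-up record down through `records`, then `properties.flows` (one entry per rule), then `flows` (one per NIC MAC address), and finally `flowTuples`, which is the same nesting the script's inner loops follow. All the values in the sample JSON are invented for illustration.

```powershell
# Sketch only: a trimmed, made-up PT1H.json with one record, one rule,
# one NIC (MAC address) and one flow tuple.
$json = @'
{
  "records": [
    {
      "time": "2017-07-06T02:53:02.3410000Z",
      "properties": {
        "Version": 1,
        "flows": [
          {
            "rule": "DefaultRule_DenyAllInBound",
            "flows": [
              {
                "mac": "000D3AF8801A",
                "flowTuples": [ "1499309582,203.0.113.10,10.0.0.4,51234,3389,T,I,D" ]
              }
            ]
          }
        ]
      }
    }
  ]
}
'@

$log = $json | ConvertFrom-Json

# Walk records -> properties.flows (per rule) -> flows (per MAC) -> flowTuples,
# emitting one flat object per tuple
$rows = foreach ($record in $log.records) {
    foreach ($ruleFlow in $record.properties.flows) {
        foreach ($macFlow in $ruleFlow.flows) {
            foreach ($tuple in $macFlow.flowTuples) {
                [pscustomobject]@{
                    Rule  = $ruleFlow.rule
                    Mac   = $macFlow.mac
                    Tuple = $tuple
                }
            }
        }
    }
}
$rows
```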

I don't know about you, but I found it really hard to troubleshoot a particular network communication issue with a specific VM and/or Subnet.

So, I've written the following PowerShell script that pulls all these files from the Storage Account down to a local folder and then builds an array of the flow 'Events' they contain.

The properties built out into the array are:

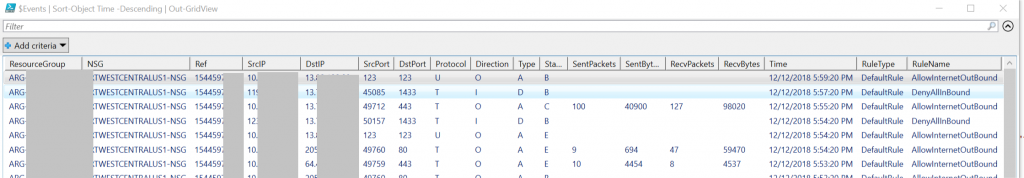

ResourceGroup, NSG, Ref, SrcIP, DstIP, SrcPort, DstPort, Protocol (T = TCP, U = UDP), Direction (O = Outbound, I = Inbound), Type (A = Allowed, D = Denied), Time, RuleType, and RuleName.
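As a rough illustration of how a Version 1 tuple turns into those properties, the sketch below feeds one made-up tuple through `ConvertFrom-Csv` with the same header names the script uses. According to Microsoft's flow log documentation, the first field (labelled Ref here) is the time the flow occurred in Unix epoch seconds, so the sketch also converts it with `[DateTimeOffset]::FromUnixTimeSeconds`, which assumes .NET Framework 4.6 or later underneath PowerShell.

```powershell
# Sketch only: parse one made-up Version 1 flow tuple with the script's header names
$Header = "Ref","SrcIP","DstIP","SrcPort","DstPort","Protocol","Direction","Type"
$tuple  = "1499309582,203.0.113.10,10.0.0.4,51234,3389,T,I,D"

$flow = $tuple | ConvertFrom-Csv -Delimiter "," -Header $Header

# Ref is the flow's timestamp in Unix epoch seconds (UTC)
$flowTimeUtc = [DateTimeOffset]::FromUnixTimeSeconds([long]$flow.Ref).UtcDateTime

# T = TCP, I = Inbound, D = Denied in this example
$flow | Select-Object SrcIP, DstIP, DstPort, Protocol, Direction, Type, @{n='FlowTimeUtc'; e={$flowTimeUtc}}
```

For this sample, `$flowTimeUtc` comes out as 6 July 2017, 02:53:02 UTC.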

This is then presented in a GridView that can be sorted and filtered to find what you are looking for.
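If you'd rather script the filtering than click through the GridView, the same questions can be asked with `Where-Object` and `Group-Object`. This is a minimal sketch: the three `$Events` entries below are made-up stand-ins carrying only a few of the properties listed above.

```powershell
# Sketch only: $Events here is a tiny made-up stand-in for the array the script builds
$Events = @(
    [pscustomobject]@{ NSG='MYNSG'; SrcIP='203.0.113.10';  DstIP='10.0.0.4';     DstPort='3389'; Direction='I'; Type='D'; RuleName='DenyAllInBound' }
    [pscustomobject]@{ NSG='MYNSG'; SrcIP='10.0.0.4';      DstIP='198.51.100.7'; DstPort='443';  Direction='O'; Type='A'; RuleName='AllowInternetOutBound' }
    [pscustomobject]@{ NSG='MYNSG'; SrcIP='198.51.100.20'; DstIP='10.0.0.4';     DstPort='22';   Direction='I'; Type='D'; RuleName='DenyAllInBound' }
)

# Inbound traffic that was denied on its way to one VM's private IP
$denied = $Events | Where-Object { $_.DstIP -eq '10.0.0.4' -and $_.Direction -eq 'I' -and $_.Type -eq 'D' }

# Summarise which ports were being blocked, and how often
$denied | Group-Object DstPort | Sort-Object Count -Descending | Select-Object Name, Count
```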

I've also added an option at the end to dump it out to a CSV if you need to send the output to someone else.

Note: Because of the deep folder nesting used for NSG logging, you will need a 'short' local root folder for the local cache.

Generally, the $env:TEMP location is already too deep to leave room for the folder nesting the NSG logs require.

I've used C:\Temp\AzureNSGFlowLogs here; either create this folder or update the line in the script that sets $LocalFolder.
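A quick way to see how much room a given root folder leaves is to measure the full local path for a blob. This sketch assumes the classic Windows 260-character MAX_PATH limit is what bites here, and uses a made-up blob name with very short Resource Group and NSG names; every extra character in your real names comes straight off the remaining budget. `Get-CachePathLength` is a hypothetical helper, and the second root is just an example of a typical per-user temp path.

```powershell
# Sketch only: estimate local cache path lengths, assuming a 260-character MAX_PATH limit.
# The blob name is made up and uses deliberately short Resource Group / NSG names.
$blobName = 'resourceId=/SUBSCRIPTIONS/00000000-0000-0000-0000-000000000000/RESOURCEGROUPS/MYRG/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/MYNSG/y=2017/m=07/d=06/h=02/m=00/PT1H.json'

function Get-CachePathLength {
    param([string]$Root, [string]$BlobName)
    # Same transformation the script uses: root + "\" + blob name with "/" swapped for "\"
    ("{0}\{1}" -f $Root, $BlobName.Replace('/', '\')).Length
}

$maxPath = 260
foreach ($root in 'C:\Temp\AzureNSGFlowLogs', 'C:\Users\SomeUser\AppData\Local\Temp\AzureNSGFlowLogs') {
    $len = Get-CachePathLength -Root $root -BlobName $blobName
    "{0,-60} {1,4} chars, {2,4} left" -f $root, $len, ($maxPath - $len)
}
```

With these sample names the short root gives a 200-character path and the temp-style root 229, so the deeper root has only 31 characters left for longer Resource Group and NSG names.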

Update [July 22nd 2017]: Added user selection of Storage Account(s), and support for multiple NSGs, in multiple locations, logging to different Storage Accounts.

Update [Nov 7th 2018]: Replaced Find-AzureRmResource with Get-AzureRmResource, as Find-AzureRmResource has been deprecated (thanks to Victor Dantas Mehmeri for finding this).

Update [Nov 7th 2018]: Added the new Version 2 fields: State (B = Begin, C = Continue, E = End) and the Sent and Received Packets and Bytes.
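Here's a small sketch of what the Version 2 fields look like once parsed, using three made-up tuples for one outbound TCP connection (Begin, Continue, End). It assumes, per Microsoft's Version 2 documentation, that each C or E tuple reports packets and bytes since the previous update for that flow, so summing them gives the traffic seen; Begin tuples leave the four counters empty.

```powershell
# Sketch only: three made-up Version 2 tuples for one outbound TCP connection
$Header = "Ref","SrcIP","DstIP","SrcPort","DstPort","Protocol","Direction","Type","State","SentPackets","SentBytes","RecvPackets","RecvBytes"
$tuples = @(
    "1542110377,10.0.0.4,198.51.100.7,50123,443,T,O,A,B,,,,"
    "1542110437,10.0.0.4,198.51.100.7,50123,443,T,O,A,C,12,1500,10,9000"
    "1542110497,10.0.0.4,198.51.100.7,50123,443,T,O,A,E,3,400,2,700"
)

$flows = $tuples | ConvertFrom-Csv -Delimiter "," -Header $Header

# Counters come through as strings; an empty counter (Begin tuple) casts to 0
$totalBytes = 0
foreach ($f in $flows) {
    $totalBytes += [long]$f.SentBytes + [long]$f.RecvBytes
}

$flows | Select-Object State, SentPackets, SentBytes, RecvPackets, RecvBytes
"Total bytes (sent + received): $totalBytes"
```

Casting before adding matters: without `[long]`, PowerShell would concatenate the counter strings instead of summing them.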

[sourcecode gutter="false" title="NSGFlowLogAnalysis" language="powershell"]

#Prompt user to select storage account(s)
$StorageAccountNames = (Get-AzureRmStorageAccount | Select-Object Location, StorageAccountName | Out-GridView -Title "Select Storage Account(s)" -PassThru).StorageAccountName

#Local folder used to cache the NSG Flow Log files; keep this path short (see the note above).
#If you want the local copy of the NSG Flow Log files to go somewhere else, update the following line.
$LocalFolder = "C:\Temp\AzureNSGFlowLogs"
#$LocalFolder = "$($env:TEMP)\AzureNSGFlowLogs"

#Timezone used for displaying times; defaults to the locally detected one
$TimeZoneStr = (Get-TimeZone).Id
#If you want to override the Timezone to something other than the locally detected one, un-comment the following line and update
#$TimeZoneStr = "AUS Eastern Standard Time"

#Collect the resource group, key and storage context for each selected storage account
$StorageDetails = @()
foreach ($StorageAccountName in $StorageAccountNames) {
    $StorageResource = $null
    $ResourceGroupName = $null
    $StoKeys = $null
    $StorageAccountKey = $null

    $StorageAccountName
    $StorageResource = Get-AzureRmResource -ResourceType Microsoft.Storage/storageAccounts -Name $StorageAccountName
    $ResourceGroupName = $StorageResource.ResourceGroupName
    $ResourceGroupName

    $StoKeys = Get-AzureRmStorageAccountKey -ResourceGroupName $ResourceGroupName -Name $StorageAccountName
    $StorageAccountKey = $StoKeys[0].Value
    $StorageContext = New-AzureStorageContext -StorageAccountName $StorageAccountName -StorageAccountKey $StorageAccountKey

    $StorageDetail = New-Object psobject -Property @{
        StorageAccountName = $StorageAccountName
        StorageResource    = $StorageResource
        ResourceGroupName  = $ResourceGroupName
        StorageAccountKey  = $StorageAccountKey
        StorageContext     = $StorageContext
    }
    $StorageDetails += $StorageDetail
}

$SubscriptionID = (Get-AzureRmContext).Subscription.Id

#Function to create the sub folders as required
function MakeFolders (
    [string[]]$Path,
    [string]$root
)
{
    #Build the full folder path from the root plus each sub folder name
    $Build = $root
    foreach ($fld in $Path) {
        $Build = Join-Path -Path $Build -ChildPath $fld
    }
    #-Force creates any missing parent folders (including the root)
    #and does not error if the folder already exists
    New-Item -ItemType Directory -Path $Build -Force | Out-Null
}
#End function MakeFolders

#Function to get the Timezone information
function GetTZ (
    [string]$TZ_string
)
{
    $r = [regex] "\[([^\[]*)\]"
    $match = $r.Match($TZ_string)

    # If there is a successful match for a Timezone ID (text inside square brackets)
    if ($match.Success) {
        $TZId = $match.Groups[1].Value
        # Try and get a valid TimeZone entry for the matched TimeZone Id, otherwise assume UTC
        try {
            $TZ = [System.TimeZoneInfo]::FindSystemTimeZoneById($TZId)
        }
        catch {
            $TZ = [System.TimeZoneInfo]::FindSystemTimeZoneById("UTC")
        }
    } else {
        # Otherwise treat the whole string as the TimeZone Id, again falling back to UTC
        try {
            $TZ = [System.TimeZoneInfo]::FindSystemTimeZoneById($TZ_string)
        } catch {
            $TZ = [System.TimeZoneInfo]::FindSystemTimeZoneById("UTC")
        }
    }
    return $TZ
}
#end function GetTZ

#Set the $TZ variable up with the required TimeZone information
$TZ = GetTZ -TZ_string $TimeZoneStr

#Get all the blobs in the insights-logs-networksecuritygroupflowevent container from the selected Storage Account(s)
$Blobs = @()
foreach ($StorageDetail in $StorageDetails) {
    $Blob = Get-AzureStorageBlob -Container "insights-logs-networksecuritygroupflowevent" -Context $StorageDetail.StorageContext
    $Blobs += $Blob
}

#Build an array of the available selection criteria
$AvailSelection = @()
foreach ($blobpath in $Blobs.Name) {
    $PathSplit = $blobpath.Split("/")
    #The y=/m=/d=/h=/m= folder values are UTC. Formatting them with a trailing Z
    #(e.g. 2017-07-06T02:00:00Z) makes Get-Date parse them as UTC; ToUniversalTime() keeps them in UTC.
    $datestr = Get-Date -Year $PathSplit[9].Substring(2) -Month $PathSplit[10].Substring(2) -Day $PathSplit[11].Substring(2) -Hour $PathSplit[12].Substring(2) -Minute $PathSplit[13].Substring(2) -Second 0 -Millisecond 0 -Format "yyyy-MM-ddTHH:mm:ssZ"
    $blobdate = (Get-Date $datestr).ToUniversalTime()
    #Write-Output "RG: $($PathSplit[4])`tNSG:$($PathSplit[8])`tDate:$($blobdate)"
    $SelectData = New-Object psobject -Property @{
        ResourceGroup = $PathSplit[4]
        NSG           = $PathSplit[8]
        Date          = $blobdate
    }
    $AvailSelection += $SelectData
}

#Prompt user to select the required ResourceGroup(s), NSG(s), and hourly sectioned files
$SelectedResourceGroups = ($AvailSelection | Select-Object -Property "ResourceGroup" -Unique |
    Out-GridView -Title "Select required Resource Group(s)" -PassThru).ResourceGroup

$selectedNSGs = ($AvailSelection | Where-Object {$_.ResourceGroup -in $SelectedResourceGroups} |
    Select-Object -Property "NSG" -Unique |
    Out-GridView -Title "Select required NSG(s)" -PassThru).NSG

$SelectedDates = ($AvailSelection | Where-Object {$_.ResourceGroup -in $SelectedResourceGroups -and $_.NSG -in $selectedNSGs} |
    Select-Object -Property @{n="System DateTime";e={$_.Date}},@{n="Local DateTime";e={[System.TimeZoneInfo]::ConvertTimeFromUtc((Get-Date $_.Date),$TZ)}} -Unique |
    Sort-Object 'System DateTime' -Descending |
    Out-GridView -Title "Select required times (1 hour blocks)" -PassThru).'System DateTime'

#Loop through blobs and download any that meet the specified selection and are newer than those already downloaded
foreach ($blob in $Blobs) {
    $PathSplit = $blob.Name.Split("/")
    $blobdate = Get-Date -Year $PathSplit[9].Substring(2) -Month $PathSplit[10].Substring(2) -Day $PathSplit[11].Substring(2) -Hour $PathSplit[12].Substring(2) -Minute $PathSplit[13].Substring(2) -Second 0 -Millisecond 0

    if ($PathSplit[4] -in $SelectedResourceGroups -and $PathSplit[8] -in $selectedNSGs -and $blobdate -in $SelectedDates) {
        $fld = $blob.Name.Replace("/","\")
        $flds = $fld.Split("\") | Where-Object {$_ -ne "PT1H.json"}
        $lcl = "$($LocalFolder)\$($fld)"
        MakeFolders -Path $flds -root $LocalFolder

        #Compare the blob's last modified time with the cached copy (if there is one)
        if (Test-Path -Path $lcl) {
            $lcldate = (Get-Item -Path $lcl).LastWriteTimeUtc
        } else {
            $lcldate = Get-Date "1 Jan 1970"
        }

        $blobModified = $blob.LastModified
        if ($blobModified -gt $lcldate) {
            Write-Output "Copied`t$($blob.Name)"
            Get-AzureStorageBlobContent -Container "insights-logs-networksecuritygroupflowevent" -Context $blob.Context -Blob $blob.Name -Destination $lcl -Force
        } else {
            Write-Output "Leave`t$($blob.Name)"
        }
    }
}

#Get a list of all the PT1H.json files in the local cache for the current subscription
$Files = Get-ChildItem -Path "$($LocalFolder)\resourceId=\SUBSCRIPTIONS\$($SubscriptionID)\RESOURCEGROUPS\" -Filter "PT1H.json" -Recurse

#Loop through local files and build the $Events array up with files that meet the selected criteria
$Events = @()
foreach ($file in $Files) {
    $PathSplit = ($file.DirectoryName.Replace("$($LocalFolder)\",'')).Split("\")
    $blobdate = Get-Date -Year $PathSplit[9].Substring(2) -Month $PathSplit[10].Substring(2) -Day $PathSplit[11].Substring(2) -Hour $PathSplit[12].Substring(2) -Minute $PathSplit[13].Substring(2) -Second 0 -Millisecond 0
    $blobResourceGroup = $PathSplit[4]
    $blobNSG = $PathSplit[8]

    if ($blobResourceGroup -in $SelectedResourceGroups -and $blobNSG -in $selectedNSGs -and $blobdate -in $SelectedDates) {
        $json = Get-Content -Raw -Path $file.FullName | ConvertFrom-Json

        foreach ($entry in $json.records) {
            #Record times are UTC; convert them to the selected TimeZone for display
            $time = (Get-Date $entry.time).ToUniversalTime()
            $time = [System.TimeZoneInfo]::ConvertTimeFromUtc($time, $TZ)
            $FlowVer = $entry.properties.version

            #Tuple columns; Version 2 adds the flow State (B/C/E) and packet/byte counters
            $Header = "Ref","SrcIP","DstIP","SrcPort","DstPort","Protocol","Direction","Type"
            if ($FlowVer -eq 2) {
                $Header += "State","SentPackets","SentBytes","RecvPackets","RecvBytes"
            }
            $Props = @(@{n='ResourceGroup';e={$blobResourceGroup}}, @{n='NSG';e={$blobNSG}}) + $Header + @(@{n='Time';e={$time}}, @{n='RuleType';e={$RuleType}}, @{n='RuleName';e={$RuleName}})

            foreach ($flow in $entry.properties.flows) {
                #Rule names look like "DefaultRule_DenyAllInBound" or "UserRule_<name>";
                #split on the first "_" only, as user rule names may contain underscores
                $RuleType, $RuleName = $flow.rule -split "_", 2

                foreach ($f in $flow.flows) {
                    $o = $f.flowTuples | ConvertFrom-Csv -Delimiter "," -Header $Header | Select-Object -Property $Props
                    $Events += $o
                }
            }
        }
    }
}

#Open $Events in GridView for user to see and filter as required
$Events | Sort-Object Time -Descending | Out-GridView

#Prompt to export to CSV
$CSVExport = Read-Host "Do you want to export to a CSV file (for Excel)? (Y/N)"

#If CSV required, export and open the CSV file
if ($CSVExport.ToUpper() -eq "Y") {
    $FileName = "$($LocalFolder)\NSGFlowLogs-$(Get-Date -Format "yyyyMMddHHmm").csv"
    $Events | Sort-Object Time -Descending | Export-Csv -Path $FileName -NoTypeInformation
    Start-Process "Explorer" -ArgumentList $FileName
}

[/sourcecode]

Important Note:

The Azure Network Watcher team has provided a release note (https://azure.microsoft.com/en-us/updates/azure-network-watcher-network-security-group-flow-logs-blob-storage-path-update/) detailing that the path of the Flow Logs in the storage account will change on July 31st 2017.

Once this is released, I will update my script, if required, to cater for the change.
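In the meantime, one way to make the parsing less fragile is to match the parts you care about by pattern rather than by fixed index position, so an extra or missing folder at the start of the path doesn't silently shift every field. This is only a sketch: `Get-FlowLogBlobInfo` and the regex are illustrative, and the pattern still assumes the `RESOURCEGROUPS/.../NETWORKSECURITYGROUPS/.../y=/m=/d=/h=/m=` segments described in this post. PowerShell's `-match` is case-insensitive by default, so letter case in the path doesn't matter.

```powershell
# Sketch only: match the interesting path segments by pattern instead of fixed index.
# The function name and regex are illustrative, not part of the original script.
function Get-FlowLogBlobInfo {
    param([string]$BlobName)

    $pattern = 'RESOURCEGROUPS/(?<rg>[^/]+)/.*NETWORKSECURITYGROUPS/(?<nsg>[^/]+)/y=(?<y>\d{4})/m=(?<mo>\d{2})/d=(?<d>\d{2})/h=(?<h>\d{2})/m=(?<mi>\d{2})/'

    if (-not ($BlobName -match $pattern)) {
        # Not a layout this sketch recognises; let the caller decide what to do
        return $null
    }

    [pscustomobject]@{
        ResourceGroup = $Matches['rg']
        NSG           = $Matches['nsg']
        HourUtc       = [datetime]::new([int]$Matches['y'], [int]$Matches['mo'], [int]$Matches['d'], [int]$Matches['h'], [int]$Matches['mi'], 0, [System.DateTimeKind]::Utc)
    }
}

# A made-up blob name, once as the script expects it and once with a different prefix and letter case
$a = Get-FlowLogBlobInfo -BlobName 'resourceId=/SUBSCRIPTIONS/00000000-0000-0000-0000-000000000000/RESOURCEGROUPS/MYRG/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/MYNSG/y=2017/m=07/d=06/h=02/m=00/PT1H.json'
$b = Get-FlowLogBlobInfo -BlobName 'subscriptions/00000000-0000-0000-0000-000000000000/resourcegroups/myrg/providers/microsoft.network/networksecuritygroups/mynsg/y=2017/m=07/d=06/h=02/m=00/PT1H.json'
$a, $b
```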
