Declarative Database Development

Define What You Want, Not How You Get There!

If Not Exists…

Database development lags behind regular application development in many respects. If you look at how database development is integrated with regular application development and its processes, it becomes even more obvious that there is room for improvement. Ask around and you will find that many database developers spend their valuable time writing, maintaining, and testing database deployment scripts. Practices vary: some write the scripts by hand, some use schema comparison tools to generate a script and then modify it, and some go all the way and create their own tools to generate these deployment scripts.

However, the outcome of these efforts is always the same: a set of SQL statements captured inside .SQL script files or embedded inside some application or installer program, such as one built with WiX. In general they all look like this:

    --
    -- [dbo].[DataLoading]
    --
    IF (OBJECT_ID('[dbo].[DataLoading]', 'U') IS NULL)
    BEGIN
        CREATE TABLE [dbo].[DataLoading]
        (
            [TableName]  SYSNAME NOT NULL,
            [MultiValue] TINYINT NOT NULL,
            [RowCount]   BIGINT  NOT NULL,
            PRIMARY KEY CLUSTERED
            (
                [TableName],
                [MultiValue]
            )
        )
    END
    GO

Fig 1: A typical fragment of a manually written deployment script

Most scripts are significantly more complicated, especially once you start dealing with incremental version updates. If you have ever written or maintained one of these scripts, you know this is where the problems with this approach become clear. The simple cases are straightforward: check whether the object exists and, if not, create it. This holds even when adding constraints and the like, since the object is easily identifiable. But how about adding a column, changing its data type, renaming a column, or changing the column order inside an index without changing the index name? As soon as you have multiple changes to a single aspect of an object, ordering the changes and detecting which of them still need to be made gets very complicated.

Some database developers therefore stamp each and every object with versioning information, using an extended property or a version table. This works great as long as you are the only person or tool making changes to the schema, since SQL Server cannot and will not enforce the versioning information.

SQL Server neither maintains and exposes enough useful versioning information about the database schema, nor guarantees that the schema has not been changed or tampered with, which only compounds the problem.

The next problem with this approach is that the execution time of the deployment grows over time. Most database developers maintain two scripts, one for a new deployment and one for an incremental deployment, in the fashion described above. As time progresses you accumulate lots of changes, which all have to be executed in chronological order to guarantee the right results. This can also mean touching or rebuilding objects multiple times, because dependencies between objects cannot be honored between change scripts across time periods. In other words, the change script from v4 to v5 needs to be independent of the one from v7 to v8, so their operations cannot be collapsed together for efficiency's sake.
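To make that cost concrete, here is a toy Python sketch that replays a chain of incremental scripts. It is not part of any real tool: the version labels, object names, and operations are all invented for illustration. The point is only that replaying history touches the same index once per script, where a single comparison of start and end state would need to touch it once.

```python
from collections import Counter

# Hypothetical history of incremental scripts, oldest first.
# Each entry: (from_version, to_version, [(operation, object_name), ...])
HISTORY = [
    ("v4", "v5", [("add_column", "Orders.Region"), ("rebuild_index", "IX_Orders")]),
    ("v5", "v6", [("rebuild_index", "IX_Orders")]),
    ("v6", "v7", [("alter_column", "Orders.Region"), ("rebuild_index", "IX_Orders")]),
]


def replay(history, installed_version):
    """Return every step that must run, in order, to bring a database up to date."""
    steps = []
    applying = False
    for from_version, _to_version, script in history:
        if from_version == installed_version:
            applying = True  # every later script must run as well
        if applying:
            steps.extend(script)
    return steps


steps = replay(HISTORY, "v4")
touches = Counter(object_name for _operation, object_name in steps)
print(len(steps), "steps;", touches["IX_Orders"], "rebuilds of IX_Orders")
```

Upgrading from v4 runs five steps and rebuilds the same index three times, because no script is allowed to know what a later script will do.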

This brings me to the last obstacle for this approach: your script can only reflect your knowledge of the system as you understand it at the time you write the script. What do I mean by that? Say you are distributing an application; a user needs some additional reporting and adds VIEWs to the system inside their own schema, providing abstraction and isolation from your schema. Great practice! They are very happy and add an index over the view, which requires the VIEW to be schema-bound. Now it is time for you to update your schema, and you happen to need to change one of the tables covered by the user's schema-bound view. You do not know about this view, so in reality most deployments will fail at this point.

The Alternative Approach

So what is the alternative, you might ask? If you analyze the problems described above, there are a couple of conclusions you can draw:

- SQL Server does not provide any guarantees about the schema version deployed.

- You cannot rely on your knowledge about the state of the system; the system might have changed underneath you, might be on a different version, or might be in some weird state.

- The number of permutations to validate inside your deployment script is therefore endless and you cannot compensate for all possible cases.

The root cause, however, is that you are encoding state transitions inside your deployment script that are based on the state of the system at time T. When you deploy at time T+5, the world might in fact look different, and all you can do is “fail” the deployment since its outcome is now in doubt.

Now what if you describe how you want your schema to look, instead of encoding inside a script how to implement it? This is what declarative database development is about. You define what you want, not how you get there or physically implement the database.
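The idea can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how any real deployment engine works: the `plan` function and its step strings are hypothetical, and only the column names and types come from the table in Fig 1. You state the desired shape once, and the steps are derived from whatever state the database happens to be in.

```python
# Desired shape of [dbo].[DataLoading], taken from Fig 1: column name -> type.
DESIRED = {"TableName": "SYSNAME", "MultiValue": "TINYINT", "RowCount": "BIGINT"}


def plan(desired, actual):
    """Derive the steps needed to make `actual` match `desired`.

    `actual` is None when the table does not exist yet; otherwise it is a
    dict of column name -> type describing what is currently deployed.
    """
    if actual is None:
        return ["create table"]
    steps = []
    for name, col_type in desired.items():
        if name not in actual:
            steps.append(f"add column {name} {col_type}")
        elif actual[name] != col_type:
            steps.append(f"alter column {name} to {col_type}")
    for name in actual:
        if name not in desired:
            steps.append(f"drop column {name}")
    return steps


# The same definition yields a different plan for each starting state.
print(plan(DESIRED, None))
print(plan(DESIRED, {"TableName": "SYSNAME", "RowCount": "INT", "Legacy": "INT"}))
print(plan(DESIRED, dict(DESIRED)))
```

The definition never changes; only the derived plan does. A database that already matches produces an empty plan, which is the declarative counterpart of the `IF ... IS NULL` guard in Fig 1.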

Let’s dive into how the Visual Studio 2008 Team System Database Edition GDR product (referred to below as VSDB) leverages declarative database development to produce a model-driven deployment of database schemas.

Let There Be a Model

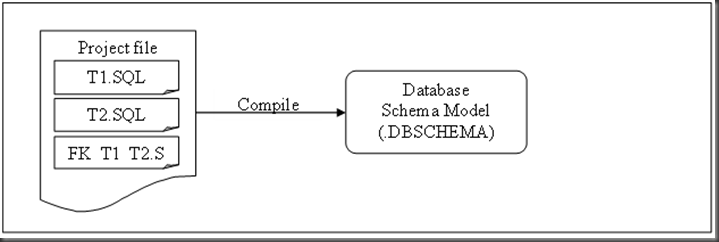

The first thing you need is a model representation of the database schema. The model describes all the objects inside your database schema; this is the “what you want” part. In order to create and populate the model, the system needs a description of the objects. Normally this is provided through a DSL (Domain Specific Language); VSDB uses regular T-SQL DDL to define the shape of schema objects, so there is no need to learn a new language or representation. The model is therefore fed a collection of DDL statements, which live inside .SQL files held together by a project file (.DBPROJ) that functions as the container for the source code files.

Fig 2: Database projects are containers of SQL files, which are compiled into the schema model

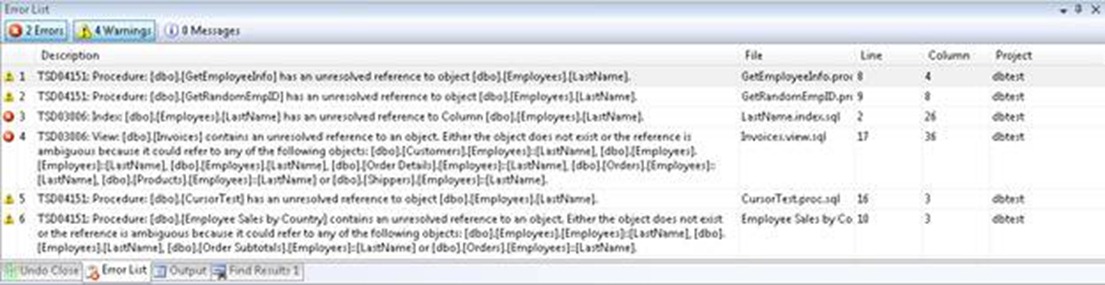

The fact that the model is built from a source-code representation of the database is a huge differentiator. It enables a true offline development experience and better integration with source code control systems, build servers, and so on. It also means that your model is allowed to be in an inconsistent or incomplete state; you can break dependencies inside the schema and the compiler will immediately flag them.

Fig 3: The Visual Studio error list indicates that your model is in an inconsistent state.

The underlying engine inside VSDB will perform the following actions on top of the source code when building the model:

- Parsing: this enforces syntactic validity and transforms the source code into a programmatically accessible representation, which is used to store the object inside the model.

- Interpretation: this wires up all the object relationships.

- Validation: this is the stage where semantic validation rules are applied. For example, an object that has an INSTEAD OF trigger is not allowed to be part of a transactional replication publication. The two DDL statements are each valid and correct in themselves, but together they are mutually exclusive.

- Optionally, you can enforce more rules over the model through T-SQL Static Code Analysis.
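The interpretation and validation stages can be illustrated with a small Python sketch. It assumes parsing has already happened and says nothing about VSDB's internals: the `SchemaObject` class, the trait names, and the object names are all hypothetical. It shows the two kinds of error the list describes, a reference that resolves to nothing and two individually valid facts that conflict.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaObject:
    name: str
    kind: str                                        # e.g. "table", "view"
    references: list = field(default_factory=list)   # names this object depends on
    traits: set = field(default_factory=set)         # facts gathered from the DDL


def interpret(objects):
    """Wire up relationships and report references that resolve to nothing."""
    model = {obj.name: obj for obj in objects}
    errors = [
        f"{obj.name}: unresolved reference to {ref}"
        for obj in objects
        for ref in obj.references
        if ref not in model
    ]
    return model, errors


def validate(model):
    """Apply one semantic rule from the text: INSTEAD OF trigger vs. replication."""
    conflict = {"instead_of_trigger", "transactional_replication"}
    return [
        f"{obj.name}: INSTEAD OF trigger conflicts with transactional replication"
        for obj in model.values()
        if conflict <= obj.traits
    ]


objects = [
    SchemaObject("dbo.Orders", "table",
                 traits={"instead_of_trigger", "transactional_replication"}),
    SchemaObject("rpt.OrderSummary", "view",
                 references=["dbo.Orders", "dbo.Customers"]),
]
model, reference_errors = interpret(objects)
rule_errors = validate(model)
for message in reference_errors + rule_errors:
    print(message)
```

Both checks run against the in-memory model only, which is why this kind of feedback is possible without a connection to a database.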

Once the model is in a valid and consistent state, you can perform a build. The build performs all the steps described above, except for T-SQL Static Code Analysis, which is optional. When no errors are detected, it persists the resulting model to disk as a .DBSCHEMA file. The .DBSCHEMA file is effectively an XML serialization of the database model and functions as your database schema “blueprint”: the manifest that describes what you want inside the database.

How Do I Deploy this Puppy?

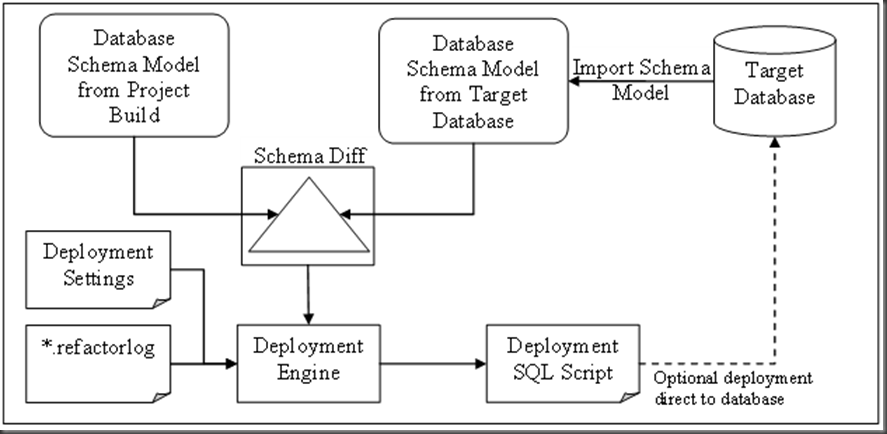

Now that you have a model representation, the question is: how does it help solve the deployment problem? The easiest way to explain this is to walk through the deployment process shown in the following diagram.

Fig 4: The VSDB deployment engine in action

- The first step is to load the source model. If it is provided in the form of a .DBSCHEMA file, this simply means deserializing the model.

- The second step is to produce a model representation of the target database. This is done by importing the schema the same way you created the initial project representation, except that the resulting source code representation is not persisted in this case.

- Now that there are two models, the engine compares them, taking into account the deployment settings and additional information like the .REFACTORINGLOG file. The deployment settings determine the rules and settings used to compare the models and to generate the deployment script. The .REFACTORINGLOG file provides the additional information needed to deploy changes that a difference-based deployment engine cannot determine on its own. One of the key examples is a rename column operation, which cannot be distinguished from a drop-and-add of a column of the same type with a different name; however, since there is state (the data in the column) associated with the change, it is very important to preserve this information.

- The result of the model comparison is a list of differences. The deployment engine then represents each atomic change as an individual action. The optimizer takes the collection of actions, folds them together where possible, separates them where needed, and places them in the correct dependency order.

- This information is then used to generate the deployment script, which is a regular .SQL script that can be executed normally using tools like SQLCMD.EXE or SSMS (SQL Server Management Studio).

- Optionally, you can tell the deployment engine to update the target database directly, but by default only the deployment script is generated, since most people want to validate that it meets their expectations before actually executing it.

The end result is a deployment script that takes into consideration the state of the schema as it exists at deployment time, based on the “blueprint” of the schema that you provided.
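Two of the steps above, the comparison with a rename hint and the dependency ordering, can be sketched in Python. This is an illustration under stated assumptions rather than the engine's actual algorithm: `diff_columns`, `dependency_order`, the `renames` mapping (standing in for the refactoring log), and the `RowTotal` column are all hypothetical. The ordering uses `graphlib.TopologicalSorter` from the Python standard library (3.9 and later).

```python
from graphlib import TopologicalSorter  # Python 3.9+


def diff_columns(source, target, renames=None):
    """Return the actions that turn `target` columns into `source` columns.

    `renames` maps old name -> new name and plays the role of the
    refactoring log: without it a rename looks like a drop plus an add.
    """
    renames = renames or {}
    actions, handled = [], set()
    for old, new in renames.items():
        if old in target and old not in source and new in source and new not in target:
            actions.append(("rename", old, new))
            handled.update((old, new))
    actions += [("add", c) for c in source if c not in target and c not in handled]
    actions += [("drop", c) for c in target if c not in source and c not in handled]
    return actions


def dependency_order(depends_on):
    """Order objects so that each one comes after everything it depends on."""
    return list(TopologicalSorter(depends_on).static_order())


source = ["TableName", "MultiValue", "RowTotal"]   # what the model asks for
target = ["TableName", "MultiValue", "RowCount"]   # what the database has

print(diff_columns(source, target))
print(diff_columns(source, target, renames={"RowCount": "RowTotal"}))
print(dependency_order({"index on view": {"view"}, "view": {"table"}}))
```

Without the hint the comparison can only propose an add and a drop, which would discard the data in the column; with the hint it proposes a single rename.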

The Icing on the Cake

Most SQL Server machines do not have Visual Studio installed, and often you simply do not have access from your Visual Studio environment to the database that needs to be updated. For these cases there is a fully standalone command-line deployment engine that lets you deploy the results of a build. This command-line engine is XCOPY deployable, so you can copy it onto a memory stick or server share and deploy your database from there.

    "%programfiles%\microsoft visual studio 9.0\vstsdb\deploy\vsdbcmd.exe" ^
        /a:Deploy ^
        /manifest:MyDatabase.deploymanifest ^
        /p:DeploymentConfigurationFile=MyDatabase.sqldeployment ^
        /p:SqlCommandVariablesFile=MyDatabase.sqlcmdvars ^
        /cs:"Data Source=MyServer\sql2008;Integrated Security=true"

Fig 5: Deploying from the command line using VSDBCMD.EXE

Using VSDBCMD.EXE is as easy as pointing it to the .DEPLOYMANIFEST file, a container that holds all the build output together. The tool also allows you to override every part of the manifest, including which deployment settings or variable files the deployment engine uses.
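If you drive deployments from a script, the command line in Fig 5 can be assembled programmatically. The following Python helper is a hypothetical convenience, not something that ships with the tool; it uses only the switches shown in Fig 5 and treats the settings and variables files as optional overrides.

```python
# Path as shown in Fig 5; %programfiles% still needs expanding before use.
VSDBCMD = r"%programfiles%\microsoft visual studio 9.0\vstsdb\deploy\vsdbcmd.exe"


def deploy_command(manifest, connection_string,
                   settings_file=None, variables_file=None, exe=VSDBCMD):
    """Build the argument list for a VSDBCMD deployment (switches from Fig 5)."""
    cmd = [exe, "/a:Deploy", f"/manifest:{manifest}"]
    if settings_file:
        # Override the deployment settings named in the manifest.
        cmd.append(f"/p:DeploymentConfigurationFile={settings_file}")
    if variables_file:
        # Override the SQLCMD variables file named in the manifest.
        cmd.append(f"/p:SqlCommandVariablesFile={variables_file}")
    cmd.append(f"/cs:{connection_string}")
    return cmd


cmd = deploy_command(
    "MyDatabase.deploymanifest",
    r"Data Source=MyServer\sql2008;Integrated Security=true",
    settings_file="MyDatabase.sqldeployment",
    variables_file="MyDatabase.sqlcmdvars",
)
print(cmd)
```

To actually run it on a Windows machine, you would expand the executable path (for example with `os.path.expandvars`) and pass the list to `subprocess.run(cmd, check=True)`; passing a list lets Python handle the quoting of the connection string.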

You can also use VSDBCMD.EXE to create a model file from an existing database by using the import option like this:

    "%programfiles%\microsoft visual studio 9.0\vstsdb\deploy\vsdbcmd.exe" ^
        /a:Import ^
        /cs:"Data Source=(local);Integrated Security=true;Initial Catalog=MySourceDB" ^
        /dsp:sql ^
        /model:MySourceDB.dbschema

Fig 6: Creating a model representation from a target database using VSDBCMD.EXE

The resulting file can now be used as input for the Schema Comparison tool, which lets you visually compare two database schemas, each represented as a .DBSCHEMA file, a Database Project, or a connection to a database. This is an ideal support tool if you need to troubleshoot the differences between two database schemas.

Summary

Declarative database development and Visual Studio 2008 Team System Database Edition GDR can help you streamline your processes, integrate database development tasks, and ease the deployment of your database schema through a great standalone, redistributable deployment engine.

GertD @ www.DBProj.com

Comments

Anonymous

June 06, 2009

I love this tool but find I still have to resort to generating pre-vetted scripts due to issues with standing data. For example, if I add a NOT NULL field (with a default) to an existing table that has a foreign key constraint to a second table, I have to manually create the second table first and populate it with data before I can run this tool. Are there any plans to include standing data in the model?

Anonymous

July 21, 2009

Congratulations on introducing such a topic and letting us know about it. Keep up the good work.

Anonymous

August 17, 2009

What I am looking for is some information on how to make this great tool work where the big push is to control the schema in a data modeling tool such as ERWin or ERStudio. From a company standpoint, they are unwilling to budge and let something like this become the development paradigm unless a modeling tool can be incorporated as part of the development tool kit.

Anonymous

November 16, 2009

We are looking at using the features of Datadude for dynamic deployment. Is it possible to compare two database schemas at run-time and generate a deployment script accordingly through code?