Windows Azure Guidance – Replacing the data tier with Azure Table Storage

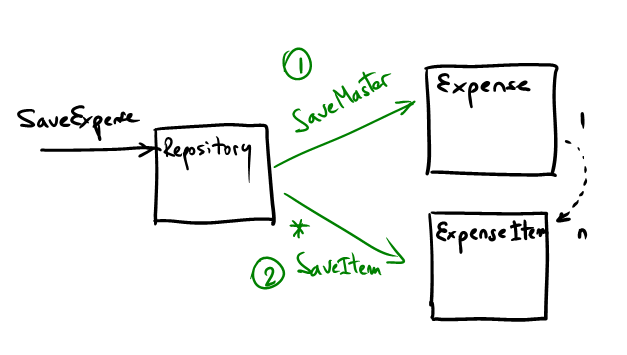

This new release focuses primarily on replacing the data tier with Azure Table Storage. To make things more interesting, we changed the data model in a-Expense so it now requires two different related entities: the expense report “header” (or master) and the details (or line items) associated with it.

The implementation of this on SQL Azure is trivial and nothing out of the ordinary; we’ve been doing this kind of thing for years. However, when using Azure Table Storage, this small modification raises a number of questions:

- Will each of these entities correspond to its own “table”?

- Given that Azure Tables don’t really require a “fixed schema”, should we just store everything in a single table?

Using one table per entity is the more intuitive solution if you come from a “relational” mindset. However, the biggest consideration here is that Azure Table Storage doesn’t support transactions across different tables.
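Because no transaction spans the two tables, saving an expense becomes two independent writes: the header first, then its details, with a compensating delete if the second write fails. The minimal Python sketch that follows is not the a-Expense code (which is .NET); `InMemoryTable`, `StorageError`, `CompensationFailed`, `save_expense` and the sample keys are hypothetical stand-ins so the example runs without a storage account.

```python
class StorageError(Exception):
    """Stands in for any failed call to the table service."""


class CompensationFailed(StorageError):
    """Step 2 failed and undoing step 1 also failed: an orphan remains."""


class InMemoryTable:
    """Hypothetical stand-in for one table, keyed by (PartitionKey, RowKey)."""

    def __init__(self, fail_inserts=False, fail_deletes=False):
        self.rows = {}
        self.fail_inserts = fail_inserts  # simulate the service rejecting writes
        self.fail_deletes = fail_deletes  # simulate the compensation itself failing

    def insert(self, entity):
        if self.fail_inserts:
            raise StorageError("insert failed")
        self.rows[(entity["PartitionKey"], entity["RowKey"])] = entity

    def delete(self, partition_key, row_key):
        if self.fail_deletes:
            raise StorageError("delete failed")
        self.rows.pop((partition_key, row_key), None)


def save_expense(expenses, items, header, details):
    """Step 1 writes the header, step 2 the details; no transaction spans both tables."""
    expenses.insert(header)  # step 1: from here on, other readers can see the header
    written = []
    try:
        for detail in details:  # step 2
            items.insert(detail)
            written.append(detail)
    except StorageError as step2_error:
        try:
            for detail in written:  # undo any details that were already written
                items.delete(detail["PartitionKey"], detail["RowKey"])
            expenses.delete(header["PartitionKey"], header["RowKey"])  # undo step 1
        except StorageError as undo_error:
            raise CompensationFailed("expense header left as an orphan") from undo_error
        raise step2_error
```

If both the detail insert and the compensating delete fail, `save_expense` raises `CompensationFailed` and the header stays in the expense table as an orphan.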

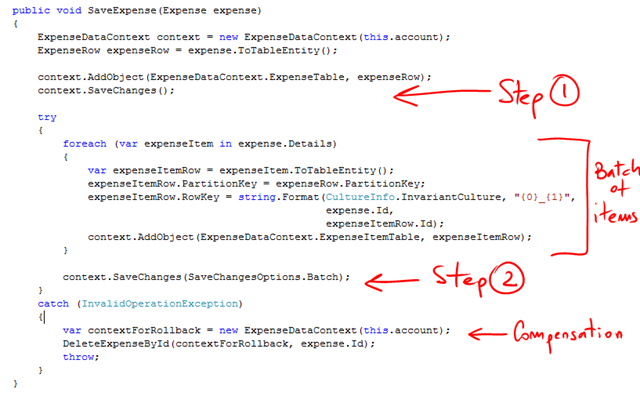

If step 1 completes successfully and step 2 fails, you need to handle that failure, for example by deleting the header. There are two issues with this, though: the deletion of the step 1 record might itself fail, leaving an “orphan” record; and between steps 1 and 2 the header is visible to others, so a query from another process against the Expense table may return records that are (or were) still part of an unfinished unit of work. There are several ways to address these two issues:

- Include an “orphan collector” process (which could well be one of the tasks of a worker process) that routinely scans for records left behind by failed units of work whose compensation didn’t complete.

- Use state flags to indicate whether a unit of work is still in progress, and filter records based on this flag.

Neither of these is always necessary; whether you need them depends on the application you are building.
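Where an application does need them, both mitigations can be combined, as in the following hypothetical in-memory Python sketch (again, not the a-Expense implementation). It assumes the writer inserts the header with an `IsCompleted` flag set to `False` and a `CreatedAt` timestamp, writes the details, and only then sets the flag to `True`; readers filter on the flag, and a periodic collector removes headers that stayed in progress past a timeout. The property names, the 10-minute timeout and the convention that items are partitioned by their expense's RowKey are all assumptions.

```python
from datetime import datetime, timedelta


def visible_expenses(expense_rows):
    """State-flag filter: readers skip headers whose unit of work has not finished."""
    return [e for e in expense_rows.values() if e["IsCompleted"]]


def collect_orphans(expense_rows, item_rows, now, timeout=timedelta(minutes=10)):
    """Orphan collector: remove headers stuck in progress longer than `timeout`,
    together with any items already written for them. Returns the removed header keys."""
    removed = []
    for key, header in list(expense_rows.items()):
        if header["IsCompleted"] or now - header["CreatedAt"] <= timeout:
            continue  # finished, or possibly still being written by another process
        # Assumed convention: items are partitioned by their expense's RowKey.
        for item_key in [k for k in item_rows if k[0] == header["RowKey"]]:
            del item_rows[item_key]
        del expense_rows[key]
        removed.append(key)
    return removed


now = datetime(2010, 7, 26, 12, 0)
expense_rows = {
    ("alice", "exp-1"): {"RowKey": "exp-1", "IsCompleted": True, "CreatedAt": now - timedelta(hours=1)},
    ("alice", "exp-2"): {"RowKey": "exp-2", "IsCompleted": False, "CreatedAt": now - timedelta(hours=1)},
    ("bob", "exp-3"): {"RowKey": "exp-3", "IsCompleted": False, "CreatedAt": now - timedelta(minutes=1)},
}
item_rows = {("exp-2", "001"): {}, ("exp-1", "001"): {}}
```

The timeout has to be longer than any legitimate unit of work; otherwise the collector could delete a header that another process is still writing.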

The second option, using a single table for both entities, is tempting because you can then use Azure Table transactions. But there are caveats: transactional support in Azure Tables is limited to 100 entities in the same table that share the same partition key, and the whole batch of operations cannot be larger than 4 MB. Another drawback is that handling two different schemas in a single table makes the client code more complex.
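The single-table constraints can be expressed as a pre-flight check. This hypothetical Python sketch uses the limits quoted above (100 entities, one partition key, 4 MB); the payload size is only a rough JSON estimate, not the exact wire size. The `Kind` discriminator property is an assumed convention that illustrates the extra client-side work of mixing two schemas in one table.

```python
import json

MAX_BATCH_ENTITIES = 100           # entities per transaction
MAX_BATCH_BYTES = 4 * 1024 * 1024  # total batch payload (4 MB)


def batch_problems(entities):
    """Return the reasons (if any) why these entities cannot go in one transaction."""
    problems = []
    if len(entities) > MAX_BATCH_ENTITIES:
        problems.append(f"{len(entities)} entities exceeds the limit of {MAX_BATCH_ENTITIES}")
    if len({e["PartitionKey"] for e in entities}) > 1:
        problems.append("entities span more than one PartitionKey")
    # Rough estimate only: the real request also carries a batch envelope and
    # per-operation headers, so treat this as a lower bound on the payload size.
    size = sum(len(json.dumps(e).encode("utf-8")) for e in entities)
    if size > MAX_BATCH_BYTES:
        problems.append(f"estimated payload of {size} bytes exceeds {MAX_BATCH_BYTES}")
    return problems


def split_by_kind(rows):
    """With both schemas in one table, every read must sort rows by a discriminator."""
    headers = [r for r in rows if r["Kind"] == "ExpenseHeader"]
    items = [r for r in rows if r["Kind"] == "ExpenseItem"]
    return headers, items
```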

Again, you need to evaluate these tradeoffs in your own context and decide what works best for you.

In the a-Expense implementation, we opted for the first solution: two tables, one for each entity, with compensation when there’s a failure. To be completely accurate, though, we are using a hybrid approach, since the items of an expense are all added in a single transaction (this currently assumes there are fewer than 100 items).
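The hybrid approach can be sketched as follows, assuming a hypothetical `FakeTable` whose `submit_batch` call is all-or-nothing, like an Azure Table transaction. Because the items either all land or none do, only the header ever needs compensating. Names and keys are illustrative, not taken from a-Expense; the details share the expense id as their PartitionKey so they qualify for a single batch.

```python
class StorageError(Exception):
    """Stands in for any failed call to the table service."""


class FakeTable:
    """Hypothetical in-memory table whose batch call is all-or-nothing."""

    def __init__(self, fail_batches=False):
        self.rows = {}
        self.fail_batches = fail_batches  # simulate the service rejecting the batch

    def insert(self, entity):
        self.rows[(entity["PartitionKey"], entity["RowKey"])] = entity

    def delete(self, partition_key, row_key):
        self.rows.pop((partition_key, row_key), None)

    def submit_batch(self, entities):
        # Same rules as a table transaction: at most 100 entities, one PartitionKey.
        if len(entities) > 100 or len({e["PartitionKey"] for e in entities}) > 1:
            raise StorageError("not a valid transaction")
        if self.fail_batches:
            raise StorageError("batch rejected")
        # All checks passed before any write, so the batch is applied in full.
        for e in entities:
            self.rows[(e["PartitionKey"], e["RowKey"])] = e


def save_expense(expenses, items, header, details):
    """Header in one table, all details in a single batch in the other."""
    expenses.insert(header)
    try:
        items.submit_batch(details)
    except StorageError:
        expenses.delete(header["PartitionKey"], header["RowKey"])  # compensation
        raise
```

The compensating delete can still fail in a real deployment, so the mitigations discussed earlier remain relevant.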

There are other interesting features and design decisions that we will describe in the documentation. One is the selection of the PartitionKey for your entities, which is probably the most important decision if you want to scale.
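As a rough illustration of why the PartitionKey matters, the hypothetical sketch below counts how many entities each key would hold under two candidate schemes. A constant key puts every entity in one partition, so entities can be batched together but a single partition serves all the traffic; keying by user spreads the load but limits each transaction to one user's entities. Neither scheme is claimed to be the one a-Expense uses, and `partition_load` is an illustrative helper.

```python
from collections import Counter


def partition_load(entities, partition_key_of):
    """Count how many entities each PartitionKey would hold under a candidate scheme."""
    return Counter(partition_key_of(e) for e in entities)


expenses = [
    {"Id": "exp-1", "User": "alice"},
    {"Id": "exp-2", "User": "bob"},
    {"Id": "exp-3", "User": "alice"},
    {"Id": "exp-4", "User": "carol"},
]

# Scheme A: one constant key; everything lands in a single partition.
single = partition_load(expenses, lambda e: "expenses")

# Scheme B: key by user; load spreads across partitions.
per_user = partition_load(expenses, lambda e: e["User"])
```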

In this release we have also included some tools developed to automate the deployment using Windows Azure Management APIs. Check Scott’s post on this for more information.

Make sure you read the “readme” file included in the release.

Comments

- Anonymous

July 26, 2010

If the compensating method (DeleteExpenseByid) fails, how will you deal with that?