Use Ranges/Bands for measuring Frequencies and visualizing Empirical Distributions

Scenario

The majority of BI-related activities require measuring something on your business process. These measures usually relate to one of:

- Inputs to a business process (usually leading indicators, i.e. number of incidents occurring; volume of calls happening; duration of calls; number of transactions).

- How the process performs (i.e. number of tickets per analyst; time to resolution; length of queues).

- Outputs of that process (usually lagging indicators, i.e. satisfaction scores; TTR [Avg. Time To Resolution]; costs; throughput; sales).

In almost all of these cases we need data over time to reflect how these measures progress, and be able to make decisions to drive improvements and also verify the impact of previous decisions (i.e. did adding a resource help improve satisfaction scores or TTR?).

One of the most common analyses in these cases relates to measuring the frequencies of these events happening (i.e. how often?), in order to understand the most common cases, as well as the least common ones, and ultimately the probability of a value to happen. So, counting how often the transactions happen on intervals (AKA ranges or bands) is very useful information.

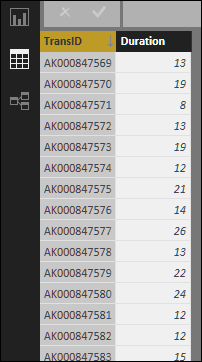

Let’s take a very simple example, in which our dataset is Transaction IDs and its time to resolution in minutes (Duration):

HowTo

If we use the articles referenced at the bottom of this post, we could use those techniques to define the specific ranges we want; however, that assumes we know the data (meaning that we know min/max values and possible ranges). If we want to do a more generic analysis, we’ll have to calculate dynamically the ranges based on the input data, and the number of ranges in which we want to analyze it.

Let’s say we want to know the frequencies of these durations on intervals of 2 minutes, and see the distribution.

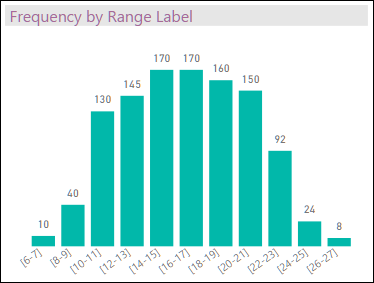

For that, we need to divide the range of values in equal intervals of 2 minutes. Then, we’ll count how many transactions happened on each interval. When we picture that with a bar chart with the ranges as X axis, and the count of transactions as Y, we’ll obtain the empirical distribution of that data set.

We’ll need these 4 elements:

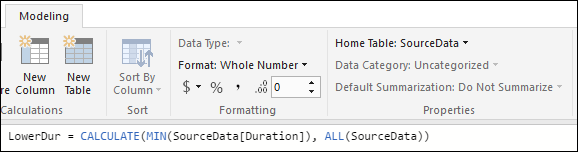

- A measure to obtain the minimum duration across all the dataset.

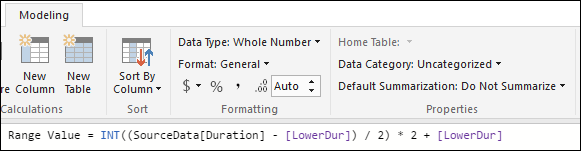

- A column that calculates the range (as a number) to which the current Duration for a given transaction belongs to.

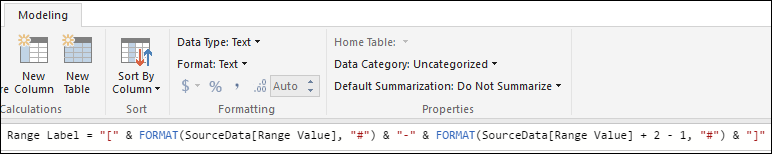

- A second column that calculates the label for the range (so we use as X axis in the chart).

- A measure that calculates the number of transactions on each range.

The first measure calculates the minimum duration of the entire dataset:

With that, we can calculate a column for the range to which this transaction’s duration belongs to (this way we calculate once the MIN(Duration) and use multiple times):

Then we calculate another column for the label of the range, which we’ll use in the chart:

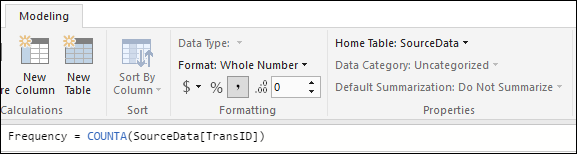

And finally, we calculate the measure for the frequency (i.e. how many transactions had a duration within a given range?):

Results

We can now create a bar chart with the Range Label in the X axis, and Frequency on the Y axis:

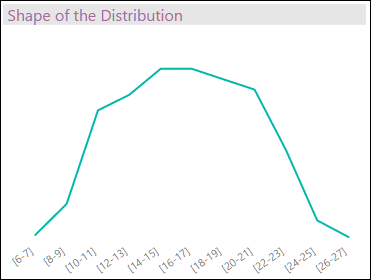

which reflects the real distribution of durations in that dataset (which I call here “empirical distribution” because it’s based on the data itself and not on any statistical distribution)… the “shape” of the bar chart reflects the actual distribution of the dataset. As over time you refresh the dataset, the ranges will recalculate and reflect the new buckets:

This information can be used to calculate the empirical probability of new values on these ranges, if conditions in the business process don’t change. [I call it “empirical” because, as mentioned above, we use the actual data and not any theoretical or statistical distribution or any kind of sampling.] If you make a decision in your business process (i.e. adding 2 people to help resolve tickets faster), then a change in the “shape” of your KPI (“Duration” in this case) would reflect the impact of this decision on the business process, and thus give you a way to assess how valuable that decision was (a topic for a future post).

Related Posts

Comments

- Anonymous

April 05, 2016

HiGood stuff! I wonder how to achieve a step before that so we can work out what x value for interval should be to create normal distribution. What I mean is how do we know the appropriate value instead of static 2 that we use for the above sample?Many thanksPeddie- Anonymous

April 05, 2016

Hi Peddie,I was planning to make a 2nd post for a user-selected, variable value for the size of the intervals; that's coming soon. I also have a post in the backlog about comparing empirical distributions with theoretical ones, and some analyses from comparing them. I'd advice against "creating" a normal distribution from empirical data, because it may lead to conclusions not really supported by the empirical observations, or provide an unwarranted sense of confidence on the appropriateness of some conclusions. Will try to post them by this weekend.Regards,-Daniel.

- Anonymous

- Anonymous

August 07, 2016

Hola, Daniel, no sé si te acordás de mí, de la época en que el San José todavía era de los Hermanos Maristas. Intento ponerme en contacto con vos porque quisiera intercambiar unas ideas que tal vez serían beneficiosas para ambos. Te mando un saludo enorme desde la misma ciudad de siempre. Cariños!