Real-World Example of Troubleshooting R2 Live Migration Using CSVs

As I’ve mentioned several times, we run our engineering lab on much of the latest and greatest. Many would argue that this is a luxury (one afforded to those who work at a software company such as Microsoft), and I wouldn’t have a solid counterargument. However, it doesn’t change the fact that we are often so cutting edge that when “issues” arise, we don’t get a solid answer internally without development debugging. Getting product group developers on tap to help is often a challenge, and this leads to “fiddling” around with things, which is where I found myself this past week.

We run our lab on a 7-node Hyper-V cluster in which each node is attached to our EMC CLARiiON AX-4 SAN. The cluster is in a node-majority setup, and recently I found that R2’s new Live Migration functionality wasn’t working as designed. After a little investigation, I determined that 2 of the 7 hosts were unable to migrate, and each time a migration was attempted (for any VM), the following error was logged in the Event Viewer -

'Machine name' live migration did not succeed at the source.

Failed to get the network address for the destination node 'server a': A cluster network is not available for this operation. (0x000013AB)

Unlike many event error messages, the text “A cluster network is not available for this operation” is literally the system message for Win32 error 0x000013AB.
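If you want to look the code up yourself, it helps to convert the hex value to decimal first. Here is a minimal sketch, assuming a PowerShell prompt; the variable names are only illustrative.

```powershell
# The code from the event text, without the 0x prefix.
$hex = '000013AB'

# Parse the string as a base-16 integer (0x13AB is 5035 in decimal).
$code = [Convert]::ToInt32($hex, 16)
Write-Host "Win32 error code: $code"

# On a Windows machine, the built-in 'net helpmsg' command prints the
# system message text for a decimal error code:
#   net helpmsg $code
```

The decimal form is usually the one to search for in the Win32 system error code listings.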

Quick Review of the Infrastructure

Each member of the cluster has two physical NICs: one for management, and one that is dedicated to Hyper-V and carries multiple networks through VLAN trunking. The first thing most folks run into out-of-the-box with clustering and networking is the lack of an “exact match” when using Virtual Machine Manager (VMM) to manage the cluster. Basically, the following two items must be identical in order for you to manage any machines in the cluster -

- Network Name (on the physical NIC)

- Network Tag

Depending on the number of nodes in your cluster, this can be a pain in the you-know-what to determine. It is very easy, though, with the following PowerShell script, which you execute on your VMM server -

```powershell
#####################################################################
# Print the Name, Locations, and Tag of every virtual network on one host.
function DisplayNicInfo($VMHostName)
{
    $networks = Get-VirtualNetwork -VMHost $VMHostName
    $networks | ForEach-Object {
        Write-Host " Name " $_.Name
        Write-Host " Locations " $_.Locations
        Write-Host " Tag " $_.Tag
    }
}
#####################################################################

$clusname = Read-Host "Host Cluster name to check"
Write-Host ""

# Connect to VMM (the script runs on the VMM server itself).
$VMMServer = Get-VMMServer -ComputerName localhost
$Cluster = Get-VMHostCluster -Name $clusname
$VMHosts = Get-VMHost -VMHostCluster $Cluster

# Walk every host in the cluster and dump its network configuration.
$VMHosts | ForEach-Object {
    Write-Host "VMHost: " $_.Name
    DisplayNicInfo $_.Name
    Write-Host ""
}
```

NOTE: This PowerShell script requires you to lower your script execution policy in order to run it. To do this, run the following -

Set-ExecutionPolicy Unrestricted

To execute, just open PowerShell, navigate to the folder containing the PS1 file, and run it. Enter the cluster name (e.g. cluster.contoso.com), and it will display every node’s current Network Name & Tag configuration. Fix whatever doesn’t match…
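With seven nodes, eyeballing the output for differences gets tedious. The sketch below shows one way to flag the odd ones out by comparing every host's Name/Tag pairs against a reference host. The sample rows, the `Get-NetworkMismatch` function, and the host and network names are all hypothetical and are not part of the script above; it also assumes that "identical" means a case-sensitive match.

```powershell
# Hypothetical sample rows; in practice, fill these in from the Name and Tag
# values the script above prints for each host.
$rows = @(
    (New-Object PSObject -Property @{ VMHost = 'node1'; Name = 'Trunk'; Tag = 'Lab' }),
    (New-Object PSObject -Property @{ VMHost = 'node2'; Name = 'Trunk'; Tag = 'Lab' }),
    (New-Object PSObject -Property @{ VMHost = 'node3'; Name = 'Trunk 2'; Tag = '' })
)

# Return every row whose Name/Tag pair does not appear on the reference host.
function Get-NetworkMismatch($Rows, $ReferenceHost)
{
    # Collect the reference host's pairs as "Name|Tag" strings.
    $expected = @($Rows |
        Where-Object { $_.VMHost -eq $ReferenceHost } |
        ForEach-Object { "$($_.Name)|$($_.Tag)" })

    # Case-sensitive comparison is an assumption: any difference in
    # spelling or case counts as a mismatch.
    $Rows | Where-Object {
        ($_.VMHost -ne $ReferenceHost) -and
        ($expected -cnotcontains "$($_.Name)|$($_.Tag)")
    }
}

$mismatches = @(Get-NetworkMismatch $rows 'node1')
$mismatches | ForEach-Object {
    Write-Host "Mismatch on" $_.VMHost ": Name" $_.Name "Tag" $_.Tag
}
```

Pick a node you know is configured correctly as the reference host; anything the function returns is a candidate for fixing.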

For more information, see the following blog I did about Network Name & Tag.

How do I do “Live Migration” when using Cluster Shared Volumes?

The first thing to note is that a virtual machine can be migrated without the use of Clustering or Cluster Shared Volumes (CSV). This is done in Virtual Machine Manager (VMM 2008 R2) by right-clicking the virtual machine in the SCVMM Admin Console and selecting Migrate. If you are clustering your virtual machines in order to get high availability, though, this changes things.

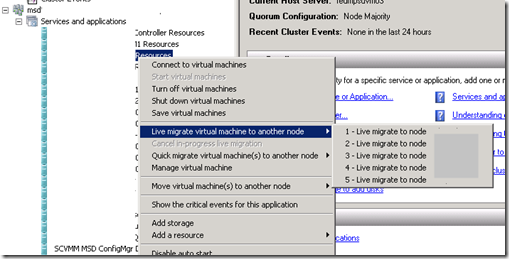

To successfully migrate, you now move out of the SCVMM Admin Console and meet your new best friend, the Failover Cluster Manager console. On your VMM server (if it is not one of your hosts), you can add it using Server Manager, under Remote Server Administration Tools. You will see your highly available virtual machines listed in the console under Services and Applications, and when you right-click any VM you will see the following option:

Resolving the Live Migration “Cluster Network is not Available”

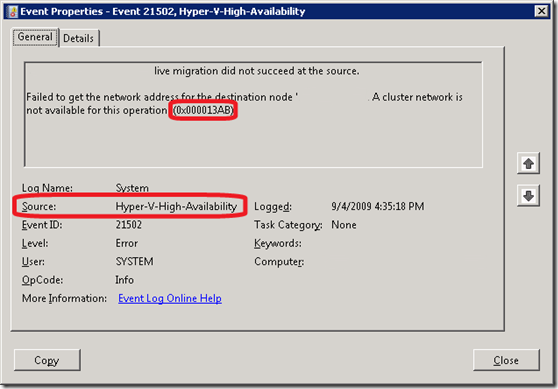

Now that you are crystal clear on how to “migrate” between nodes let’s talk about how to troubleshoot a bit when you aren’t successfully able to migrate. This was the case for me. The first tool in your toolbox is the trusty Event Viewer which is where all events are targeted in case of failures for Hyper-V and High Reliability. As shown above, you might see the following error screen in your event viewer -

As you can see, the source is Hyper-V-High-Availability and in this case you will see that the failure occurred for the “designation node” which is important as the error tells you whether it was destination or source. I’ve blanked the Computer name but in this case I was on the source computer and located this error message thus indicating that it was “unable” locate the destination computer.

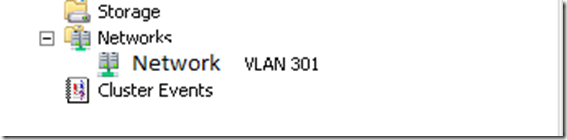

This was puzzling because, after reviewing the cluster networks configuration, everything “looked” fine, as this screen shot shows -

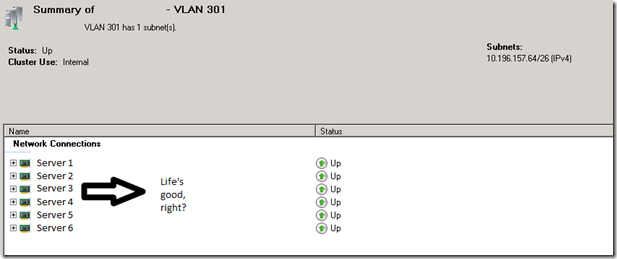

Furthermore, if you highlight the network and look at all the servers in the cluster you see the following -

Um, I learned that this screen might “lie” to you every once in awhile and things are not all happy and content in Cluster Live Migration land. No fear, a little digging around in the network bindings clears life up a bit.

The following steps cleared up my headaches and by no means are they guaranteed to make your life happy. I do, though, hope that it does save someone else a lot of time as this took a few hours to dig into and determine what was causing the problem. At least, what I believe was causing the issue.

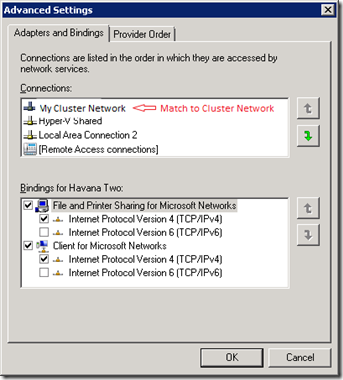

There are a lot of folks out there who are unclear on how to change the binding order when you have multiple NICs in your workstation or server. In my case, I didn’t want the first binding to be the unconfigured VLAN NIC, as this would certainly cause problems. Thus, I went digging and ensured that all seven nodes in the cluster had the exact same binding order, with the domain and cluster management network card first in the list.

To do this, you do the following:

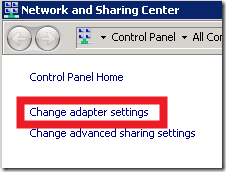

- Click Start, locate Network, right-click it, and select Properties

- In the Network and Sharing Center, click Change adapter settings

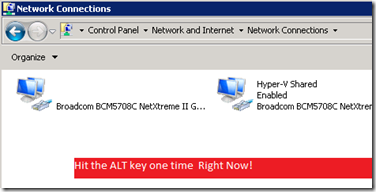

- Hit the ALT key (yes, that’s it)

- Click Advanced

- Click Advanced Settings…

- In the Adapters and Bindings tab, ensure your cluster network “Connection” is the first listed (see screen shot)

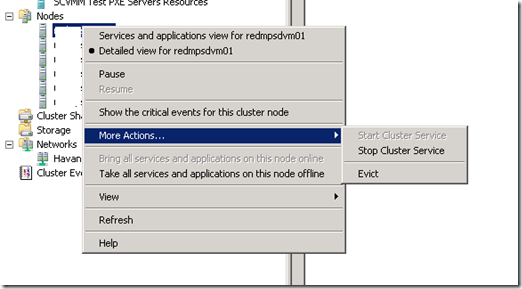

After doing this on the nodes that were failing, the last step was to stop the cluster service on these nodes. This will force a “Quick Migrate” rather than a Live Migration, so there is a possible outage; do this in your outage window. Keep in mind, for those new to clustering (or those like me who haven’t used it since the late 90’s), that the cluster service is stopped not in Services but in the Failover Cluster Manager.

- Under Nodes, right-click the node

- Select More Actions…

- Click Stop Cluster Service

Give it a minute… If there are a lot of VMs on the host, they are all going to save state and then migrate to a new host. After several minutes, you can go back in and select Start Cluster Service. You should now (if you have the same problem I did) be able to Live Migrate back to the previously broken hosts. Happy days of clustering VMs are here again!

Enjoy your Labor Day holiday weekend (if you celebrate!)

-Chris

Comments

Anonymous

January 01, 2003

Hey Pete- This is a great question. I'm in the process of rebuilding one of the cluster nodes (should get done early next week) and for grins I will throw R2 Server Core on there and see what I can do. It is a great question and my hunch is that netsh is our tool of choice but let me do some digging. Give me a week... Thanks, -Chris

Anonymous

January 01, 2003

Hey Philip, That is awesome! I'm really glad you covered me as I never got a chance to dig into Server Core. Since I kinda do this for fun on the side, I never really was able to dedicate the time! I'm glad to know that you figured it out. I'm going to go ahead and post a blog that points to yours as that is super helpful! Thanks for sharing! -Chris

Anonymous

September 09, 2009

Thanks for the information. Any idea how to do this under Server Core? Thanks!

Anonymous

November 24, 2009

The comment has been removed

Anonymous

March 19, 2010

Chris, Error hit today on a lab cluster we just stood up on a 4 node Intel Modular Server. The fix was the binding order as per your blog post! Thanks for that, and in return, here is my post on how to restructure the binding order on Hyper-V Server 2008 RTM/R2 and Server Core: http://blog.mpecsinc.ca/2010/03/nic-binding-order-on-server-core-or.html Philip Elder

Anonymous

March 20, 2010

Chris, It took a bit for us to figure out why our setup was failing. And, along with that it took a while to figure out how to move those bindings around in Hyper-V Server 2008 R2. Once we had it all straightened out, our Live Migrations began to work! Thanks for that. Also, Please see the following link on how to change the binding order on Core: http://blog.mpecsinc.ca/2010/03/nic-binding-order-on-server-core-or.html Philip