IIS SEO Toolkit - Start new analysis automatically through code

One question that I've been asked several times is: "Is it possible to schedule the IIS SEO Toolkit to run automatically every night?" Other related questions are: "Can I automate the SEO Toolkit so that, as part of my build process, I'm able to catch regressions in my application?" or "Can I run it automatically after every check-in to my source control system to ensure no links are broken?"

The good news is that the answer is YES! The bad news is that you have to write a bit of code to make it work. The SEO Toolkit includes a managed code API that starts the analysis just as the user interface does, and you can call it from any managed code application.

In this post I will show you how to write a simple console application that starts a new analysis against the site provided as a command-line argument and runs a few queries once the analysis finishes.

IIS SEO Crawling APIs

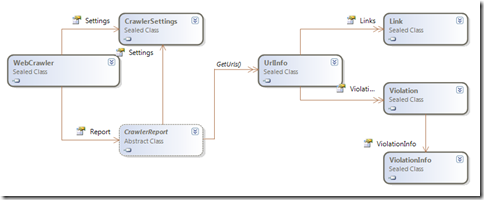

The most important type included is a class called WebCrawler. This class takes care of all the process of driving the analysis. The following image shows this class and some of the related classes that you will need to use for this.

The WebCrawler class is initialized with the configuration specified in a CrawlerSettings instance. It exposes two methods, Start() and Stop(), which start and stop the crawling process; the crawl itself runs on a set of background threads. Through its Report property you can access the CrawlerReport, which represents the results of the crawling process, whether completed or still in progress. CrawlerReport has a method called GetUrls() that returns all the UrlInfo items. UrlInfo is the most important class: it represents a URL that has been downloaded and processed, and it carries all the metadata, such as Title, Description, ContentLength, and ContentType, as well as the set of Violations and Links that it includes.

Developing the Sample

- Start Visual Studio.

- Select the option "File->New Project"

- In the "New Project" dialog select the template "Console Application", enter the name "SEORunner" and press OK.

- Using the menu "Project->Add Reference" add a reference to the IIS SEO Toolkit Client assembly "c:\Program Files\Reference Assemblies\Microsoft\IIS\Microsoft.Web.Management.SEO.Client.dll".

- Replace the code in the file Program.cs with the code shown below.

- Build the Solution

using System;
using System.IO;
using System.Linq;
using System.Net;
using System.Threading;
using Microsoft.Web.Management.SEO.Crawler;

namespace SEORunner {
    class Program {

        static void Main(string[] args) {
            if (args.Length != 1) {
                Console.WriteLine("Please specify the URL.");
                return;
            }

            // Create a URI class
            Uri startUrl = new Uri(args[0]);

            // Run the analysis
            CrawlerReport report = RunAnalysis(startUrl);

            // Run a few queries...
            LogSummary(report);
            LogStatusCodeSummary(report);
            LogBrokenLinks(report);
        }

        private static CrawlerReport RunAnalysis(Uri startUrl) {
            CrawlerSettings settings = new CrawlerSettings(startUrl);

            // Treat pages in the start URL's folder and its subfolders as internal
            settings.ExternalLinkCriteria = ExternalLinkCriteria.SameFolderAndDeeper;

            // Generate a unique name (HH is the 24-hour clock, so a morning and
            // an afternoon run on the same day cannot produce the same name)
            settings.Name = startUrl.Host + " " + DateTime.Now.ToString("yy-MM-dd HH-mm-ss");

            // Use the same directory as the default used by the UI
            string path = Path.Combine(
                Environment.GetFolderPath(Environment.SpecialFolder.MyDocuments),
                "IIS SEO Reports");
            settings.DirectoryCache = Path.Combine(path, settings.Name);

            // Create a new crawler and start running
            WebCrawler crawler = new WebCrawler(settings);
            crawler.Start();

            // Start() returns immediately; report progress once per second until done
            Console.WriteLine("Processed - Remaining - Download Size");
            while (crawler.IsRunning) {
                Thread.Sleep(1000);
                Console.WriteLine("{0,9:N0} - {1,9:N0} - {2,9:N2} MB",
                    crawler.Report.GetUrlCount(),
                    crawler.RemainingUrls,
                    crawler.BytesDownloaded / 1048576.0f);
            }

            // Save the report
            crawler.Report.Save(path);
            Console.WriteLine("Crawling complete!!!");

            return crawler.Report;
        }

        private static void LogSummary(CrawlerReport report) {
            Console.WriteLine();
            Console.WriteLine("----------------------------");
            Console.WriteLine(" Overview");
            Console.WriteLine("----------------------------");
            Console.WriteLine("Start URL: {0}", report.Settings.StartUrl);
            Console.WriteLine("Start Time: {0}", report.Settings.StartTime);
            Console.WriteLine("End Time: {0}", report.Settings.EndTime);
            Console.WriteLine("URLs: {0}", report.GetUrlCount());
            Console.WriteLine("Links: {0}", report.Settings.LinkCount);
            Console.WriteLine("Violations: {0}", report.Settings.ViolationCount);
        }

        private static void LogBrokenLinks(CrawlerReport report) {
            Console.WriteLine();
            Console.WriteLine("----------------------------");
            Console.WriteLine(" Broken links");
            Console.WriteLine("----------------------------");

            // Internal URLs that returned 404, sorted by address
            foreach (var item in from url in report.GetUrls()
                                 where url.StatusCode == HttpStatusCode.NotFound &&
                                       !url.IsExternal
                                 orderby url.Url.AbsoluteUri ascending
                                 select url) {
                Console.WriteLine(item.Url.AbsoluteUri);
            }
        }

        private static void LogStatusCodeSummary(CrawlerReport report) {
            Console.WriteLine();
            Console.WriteLine("----------------------------");
            Console.WriteLine(" Status Code summary");
            Console.WriteLine("----------------------------");

            // Number of URLs per HTTP status code
            foreach (var item in from url in report.GetUrls()
                                 group url by url.StatusCode into g
                                 orderby g.Key
                                 select g) {
                Console.WriteLine("{0,20} - {1,5:N0}", item.Key, item.Count());
            }
        }
    }
}

If you are not using Visual Studio, you can just save the contents above in a file, call it SEORunner.cs and compile it using the command line:

C:\Windows\Microsoft.NET\Framework\v3.5\csc.exe /r:"c:\Program Files\Reference Assemblies\Microsoft\IIS\Microsoft.Web.Management.SEO.Client.dll" /optimize+ SEORunner.cs

After that you should be able to run SEORunner.exe, passing the URL of your site as an argument. You will see output like this:

Processed - Remaining - Download Size
       56 -       149 -      0.93 MB
      127 -       160 -      2.26 MB
      185 -       108 -      3.24 MB
      228 -        72 -      4.16 MB
      254 -        48 -      4.98 MB
      277 -        36 -      5.36 MB
      295 -        52 -      6.57 MB
      323 -        25 -      7.53 MB
      340 -         9 -      8.05 MB
      358 -         1 -      8.62 MB
      362 -         0 -      8.81 MB
Crawling complete!!!

----------------------------
 Overview
----------------------------
Start URL: https://www.carlosag.net/
Start Time: 11/16/2009 12:16:04 AM
End Time: 11/16/2009 12:16:15 AM
URLs: 362
Links: 3463
Violations: 838

----------------------------
 Status Code summary
----------------------------
                  OK -   319
    MovedPermanently -    17
               Found -    23
            NotFound -     2
 InternalServerError -     1

----------------------------
 Broken links
----------------------------
https://www.carlosag.net/downloads/ExcelSamples.zip

The most interesting method above is RunAnalysis. It creates a new instance of CrawlerSettings and specifies the start URL. Note that it also sets ExternalLinkCriteria to SameFolderAndDeeper, so all pages hosted in the same directory or its subdirectories are considered internal. It then sets a unique name for the report and uses the same directory as the IIS SEO UI, so that opening IIS Manager shows the reports just as if they had been generated by it. Finally, it calls Start(), which starts the number of worker threads specified in the WebCrawler.WorkerCount property, and waits for the WebCrawler to finish by polling the IsRunning property.
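The polling loop in RunAnalysis waits for as long as the crawl takes, which may not be what you want in an unattended nightly run. The following is a minimal sketch of a time-budgeted wait. The names CrawlWatchdog, WaitOrStop, and TimeoutDemo are hypothetical and not part of the SEO Toolkit. The helper takes delegates rather than a WebCrawler, so it compiles and runs without the Toolkit assembly, and the demo uses a stand-in for the crawler.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

static class CrawlWatchdog
{
    // Polls isRunning until it returns false or the time budget is spent.
    // Returns true if the work finished by itself, false if stop() was called.
    public static bool WaitOrStop(Func<bool> isRunning, Action stop,
                                  TimeSpan budget, TimeSpan pollInterval)
    {
        Stopwatch elapsed = Stopwatch.StartNew();
        while (isRunning())
        {
            if (elapsed.Elapsed >= budget)
            {
                stop();
                return false;
            }
            Thread.Sleep(pollInterval);
        }
        return true;
    }
}

static class TimeoutDemo
{
    static void Main()
    {
        // Stand-in for a crawler that never finishes by itself.
        bool running = true;

        bool finished = CrawlWatchdog.WaitOrStop(
            () => running,              // with the Toolkit: () => crawler.IsRunning
            () => running = false,      // with the Toolkit: crawler.Stop (assumed parameterless)
            TimeSpan.FromMilliseconds(50),
            TimeSpan.FromMilliseconds(10));

        Console.WriteLine(finished ? "finished" : "stopped after timeout");
    }
}
```

In SEORunner this would replace the `while (crawler.IsRunning)` loop, at the cost of the per-second progress output unless you print it inside the isRunning delegate. It assumes that Stop() takes no arguments and that a stopped crawl still leaves a report that can be saved; verify both against the installed version of the Toolkit.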

The remaining methods use LINQ to run a few queries over the report: an overview, a summary of all processed URLs grouped by status code, and a list of broken internal links.

Summary

As you can see, the IIS SEO Toolkit crawling APIs let you write your own application to start an analysis against your Web site. That application can then be integrated with the Windows Task Scheduler, your own scripts, or your build system to support continuous integration.
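For build integration, the usual signal is the process exit code: most build systems treat a non-zero code as a failed step. The sketch below shows one way to derive an exit code from the crawl results. BuildGate, CountNotFound, ToExitCode, and GateDemo are hypothetical names, and the demo uses stand-in data so that it runs without the Toolkit assembly.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;

static class BuildGate
{
    // Counts the responses that came back as 404 Not Found.
    public static int CountNotFound(IEnumerable<HttpStatusCode> statusCodes)
    {
        return statusCodes.Count(code => code == HttpStatusCode.NotFound);
    }

    // 0 signals success to the build system; 1 signals failure.
    public static int ToExitCode(int brokenLinkCount)
    {
        return brokenLinkCount == 0 ? 0 : 1;
    }
}

static class GateDemo
{
    static int Main()
    {
        // Stand-in data. In SEORunner the status codes would come from the
        // report, for example (assuming GetUrls() returns an enumerable of UrlInfo):
        //   report.GetUrls().Where(u => !u.IsExternal).Select(u => u.StatusCode)
        HttpStatusCode[] codes = {
            HttpStatusCode.OK, HttpStatusCode.Found, HttpStatusCode.NotFound
        };

        int broken = BuildGate.CountNotFound(codes);
        Console.WriteLine("Broken internal links: {0}", broken);
        return BuildGate.ToExitCode(broken);
    }
}
```

To use this in SEORunner, Main would have to be declared as `static int Main(string[] args)` and return the computed code after logging the report. Whether a single 404 should fail the build is a policy choice; a threshold or an allow-list of known broken URLs may suit some sites better.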

Once the report is saved locally, you can open it in IIS Manager and continue the analysis as with any other report. This sample console application can be scheduled using the Windows Task Scheduler so that it runs every night or at any other time. Note that you could also automate this with a few lines of PowerShell, entirely from the command line and without writing any C# code, but that is left for another post.

Comments

- Anonymous

  January 27, 2010

  Is the PowerShell automation post still coming?

- Anonymous

  February 08, 2010

  Hi! If I use this method, what is the best way to read the content of each file? The response and responseStream properties are always null. Do I need to open and close the file each time? Thanks for your great posts, they have helped me a lot.

- Anonymous

  September 27, 2010

  The comment has been removed

- Anonymous

  September 28, 2010

  @Jim, the reason you don't see it run is that it is only registered with the IIS Manager client. When you consume the APIs directly, you need to use the method RegisterModule to add the instance of any custom module to the crawler, such as the W3C validator module. Something like: webCrawler.RegisterModule(new W3CValidatorMOdule());

- Anonymous

  September 29, 2010

  Thanks for your prompt reply. I've tried adding 'WebCrawler.RegisterModule(new SEOW3ValidatorModule());' and other variations immediately prior to the crawler.Start(); in SEOrunner.cs, but when I compile it I get the error 'The type or namespace name 'SEOW3ValidatorModule' could not be found'. I then tried adding 'using SEOW3Validator;' at the top of the code, but am still getting the same error. What am I doing wrong? Thanks again!

- Anonymous

  September 29, 2010

  @Jim, in order to compile it you have to add a reference to the right DLL. The W3C validator module is not part of the core, so you need to either include the code as part of the DLL or add a reference to the Validator Module and make sure it is exposed as a public class.

- Anonymous

  April 01, 2013

  Thanks Carlos for the sample. Is there any upper limit for MaximumLinkCount? I'm using this tool to crawl a site which has a lot of archived content. Is it possible to configure the bot so that it can read a specific meta tag on the page and skip it? Of course we don't want the bot to report it as a 404 :) Looking forward to hearing from you :)

- Anonymous

  October 27, 2013

  The above seems to generate three XML files under My Documents\IIS SEO Reports\url date. If I open the IIS SEO Toolkit GUI I can see the complete report in a user-friendly format and I can manually export it to CSV. Which is fantastic. But I'm wondering if there is a way to automate exporting to CSV from the API? Basically we want to create a text-based report with violations and email that to specific people. In particular I'm interested in broken hyperlinks, but being able to report on other issues would be useful. I assume that because these are XML-based files I could potentially parse the XML programmatically and obtain this information, but is there an easier/more direct way to do this from within the SEO Toolkit API? The documentation on the MSDN site for the API seems kind of sparse. msdn.microsoft.com/.../microsoft.web.management.seo.crawler(v=vs.90).aspx It gives a list of methods, properties, etc., but I don't see any description of what anything does. In some cases one can make an educated guess based on the name, but it would be great if this were better fleshed out/documented.

- Anonymous

  August 16, 2014

  My colleague uses this software. Is it still useful today?