IIS SEO Toolkit – Crawler Module Extensibility

Sample SEO Toolkit CrawlerModule Extensibility

In this post we will walk through an example of how to extend the SEO Toolkit. Let's pretend our company has a large Web site that includes many images, and we want to make sure all of them comply with a certain standard: say, every image should be no larger than 1024x768 pixels and should use no less than 16 bits per pixel. Additionally, we would like to be able to run custom queries that let us further analyze the images and filter them by directory and more.

To do this we will extend the SEO Toolkit crawling process to perform additional processing for images, adding the following new capabilities:

- Capture additional information from the content. In this case we will capture information about the image; in particular, we will extend the report to add an "Image Width", an "Image Height", and an "Image Pixel Format" field.

- Flag additional violations. In this example we will flag three new violations:

  - Image is too large. This violation will be flagged any time the content length of the image is larger than the "Maximum Download Size per URL" configured at the start of the analysis. It will also be flagged if the resolution is larger than 1024x768.

  - Image pixel format is too small. This violation will be flagged if the image uses 8 bits per pixel or fewer (the 1, 4, and 8 bpp indexed formats).

  - Image has a small resolution. This will be flagged if the image resolution is less than 72 dpi.
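The rules above can be sketched as a small pure function that does not depend on the toolkit at all. This is only an illustration: `ImageFacts` and `ImageRules` are hypothetical names, `maxContentLength` stands in for the "Maximum Download Size per URL" setting, and the sketch assumes an image counts as too large when either dimension exceeds the 1024x768 limit and that "too small" means 8 bits per pixel or fewer.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical holder for the facts we need about one image.
public sealed class ImageFacts
{
    public long ContentLength;   // bytes reported by the server
    public int Width;            // pixels
    public int Height;           // pixels
    public int BitsPerPixel;     // color depth
    public float HorizontalDpi;
    public float VerticalDpi;
}

public static class ImageRules
{
    // Returns the names of the violations that apply to the image.
    public static List<string> Check(ImageFacts image, long maxContentLength)
    {
        var violations = new List<string>();

        if (image.ContentLength > maxContentLength)
        {
            // The content was not fully downloaded, so the other checks cannot run.
            violations.Add("ImageTooLarge");
            return violations;
        }

        // Assumption: either dimension over the limit is enough.
        if (image.Width > 1024 || image.Height > 768)
            violations.Add("ImageTooLarge");

        // Assumption: anything at or below 8 bits per pixel is too small.
        if (image.BitsPerPixel <= 8)
            violations.Add("ImagePixelFormatSmall");

        if (image.HorizontalDpi < 72 || image.VerticalDpi < 72)
            violations.Add("ImageResolutionSmall");

        return violations;
    }
}
```

Keeping the thresholds in one place like this makes them easy to unit test before wiring them into a crawler module.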

Enter CrawlerModule

A crawler module is a class that extends the crawling process in Site Analysis to provide custom functionality while processing each URL. By deriving from this class you can easily raise your own set of violations or add your own data and links to any URL.

```csharp
public abstract class CrawlerModule : IDisposable
{
    // Methods
    public virtual void BeginAnalysis();
    public virtual void EndAnalysis(bool cancelled);
    public abstract void Process(CrawlerProcessContext context);

    // Properties
    protected WebCrawler Crawler { get; }
    protected CrawlerSettings Settings { get; }
}
```

It includes three main methods:

- BeginAnalysis. This method is invoked once at the beginning of the crawling process and allows you to perform any initialization needed. Common tasks include registering custom properties in the Report that can be accessed through the Crawler property.

- Process. This method is invoked for each URL once its content has been downloaded. The context argument includes a UrlInfo property that provides all the metadata extracted for the URL. It also includes the list of Violations and Links for the URL. Common tasks include augmenting the metadata of the URL, whether from its content or from external systems, flagging new custom Violations, or discovering new links in the content.

- EndAnalysis. This method is invoked once at the end of the crawling process and allows you to do any final calculations on the report once all the URLs have been processed. Common tasks in this method include performing aggregations of data across all the URLs, or identifying violations that depend on all the data being available (such as finding duplicates).
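A minimal sketch of how the three methods fit together is shown here. It compiles only inside a project that references the SEO Toolkit client library, as set up later in this post. `PageLengthModule` and the `textLength` property are hypothetical names, the `UrlInfo.Response` string property is assumed to behave as this post describes for text content, and the null check is a defensive assumption rather than documented behavior.

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using Microsoft.Web.Management.SEO.Crawler;

namespace SampleCrawlerModule {

    // Hypothetical module: records the length of the text content of each URL.
    internal class PageLengthModule : CrawlerModule {

        private const string TextLengthField = "textLength";
        private int _processed;

        public override void BeginAnalysis() {
            // Runs once, before any URL is processed: register the custom property.
            Crawler.Report.RegisterProperty(TextLengthField, "Text Length", typeof(int));
        }

        public override void Process(CrawlerProcessContext context) {
            // Runs once per downloaded URL.
            string text = context.UrlInfo.Response;
            if (text == null) {
                // Assumption: no text content is available for this URL.
                return;
            }

            context.UrlInfo.SetPropertyValue(TextLengthField, text.Length);

            // Use an atomic increment in case URLs are processed on several threads.
            Interlocked.Increment(ref _processed);
        }

        public override void EndAnalysis(bool cancelled) {
            // Runs once, after all URLs are processed or the crawl is cancelled.
            Trace.WriteLine(String.Format(
                "Processed {0} text URLs (cancelled: {1})", _processed, cancelled));
        }
    }
}
```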

Coding the Image Crawler Module

Create a Class Library in Visual Studio and add the code shown below.

- Open Visual Studio and select the option File->New Project

- In the New Project dialog select the Class Library project template and specify a name and a location such as "SampleCrawlerModule"

- Using the Menu "Project->Add Reference", add a reference to the IIS SEO Toolkit client library (C:\Program Files\Reference Assemblies\Microsoft\IIS\Microsoft.Web.Management.SEO.Client.dll).

- Since we are going to be registering this through the IIS Manager extensibility, add a reference to the IIS Manager extensibility DLL (c:\windows\system32\inetsrv\Microsoft.Web.Management.dll) using the "Project->Add Reference" menu.

- Also, since we will be using the .NET Bitmap class you need to add a reference to "System.Drawing" using the "Project->Add Reference" menu.

- Delete the auto-generated Class1.cs since we will not be using it.

- Using the Menu "Project->Add New Item" Add a new class named "ImageExtension".

```csharp
using System;
using System.Drawing;
using System.Drawing.Imaging;
using Microsoft.Web.Management.SEO.Crawler;

namespace SampleCrawlerModule {

    /// <summary>
    /// Extension to add validation and metadata to images while crawling
    /// </summary>
    internal class ImageExtension : CrawlerModule {

        private const string ImageWidthField = "iWidth";
        private const string ImageHeightField = "iHeight";
        private const string ImagePixelFormatField = "iPixFmt";

        public override void BeginAnalysis() {
            // Register the properties we want to augment at the beginning of the analysis
            Crawler.Report.RegisterProperty(ImageWidthField, "Image Width", typeof(int));
            Crawler.Report.RegisterProperty(ImageHeightField, "Image Height", typeof(int));
            Crawler.Report.RegisterProperty(ImagePixelFormatField, "Image Pixel Format", typeof(string));
        }

        public override void Process(CrawlerProcessContext context) {
            // Make sure we only process the content types we need to
            switch (context.UrlInfo.ContentTypeNormalized) {
                case "image/jpeg":
                case "image/png":
                case "image/gif":
                case "image/bmp":
                    // Process only known content types
                    break;
                default:
                    // Ignore any other
                    return;
            }

            //--------------------------------------------
            // If the content length of the image was larger than the max
            // allowed to download, then flag a violation, and stop
            if (context.UrlInfo.ContentLength >
                Crawler.Settings.MaxContentLength) {
                Violations.AddImageTooLargeViolation(context,
                    "It is larger than the allowed download size");
                // Stop processing since we do not have all the content
                return;
            }

            // Load the image from the response into a bitmap
            using (Bitmap bitmap = new Bitmap(context.UrlInfo.ResponseStream)) {
                Size size = bitmap.Size;

                //--------------------------------------------
                // Augment the metadata by adding our fields
                context.UrlInfo.SetPropertyValue(ImageWidthField, size.Width);
                context.UrlInfo.SetPropertyValue(ImageHeightField, size.Height);
                context.UrlInfo.SetPropertyValue(ImagePixelFormatField, bitmap.PixelFormat.ToString());

                //--------------------------------------------
                // Additional Violations:
                //
                // If the size is outside our standards (either dimension
                // exceeds the 1024x768 limit), then flag violation
                if (size.Width > 1024 ||
                    size.Height > 768) {
                    Violations.AddImageTooLargeViolation(context,
                        String.Format("The image size is: {0}x{1}",
                            size.Width, size.Height));
                }

                // If the format is outside our standards, then flag violation
                switch (bitmap.PixelFormat) {
                    case PixelFormat.Format1bppIndexed:
                    case PixelFormat.Format4bppIndexed:
                    case PixelFormat.Format8bppIndexed:
                        Violations.AddImagePixelFormatSmall(context);
                        break;
                }

                // If the resolution is below 72 dpi, then flag violation
                if (bitmap.VerticalResolution < 72 ||
                    bitmap.HorizontalResolution < 72) {
                    Violations.AddImageResolutionSmall(context,
                        bitmap.HorizontalResolution + "x" + bitmap.VerticalResolution);
                }
            }
        }

        /// <summary>
        /// Helper class to hold the violations
        /// </summary>
        private static class Violations {

            private static readonly ViolationInfo ImageTooLarge =
                new ViolationInfo("ImageTooLarge",
                    ViolationLevel.Warning,
                    "Image is too large.",
                    "The Image is too large: {details}.",
                    "Make sure that the image content is required.",
                    "Images");

            private static readonly ViolationInfo ImagePixelFormatSmall =
                new ViolationInfo("ImagePixelFormatSmall",
                    ViolationLevel.Warning,
                    "Image pixel format is too small.",
                    "The Image pixel format is too small",
                    "Make sure that the quality of the image is good.",
                    "Images");

            private static readonly ViolationInfo ImageResolutionSmall =
                new ViolationInfo("ImageResolutionSmall",
                    ViolationLevel.Warning,
                    "Image resolution is small.",
                    "The Image resolution is too small: ({res})",
                    "Make sure that the image quality is good.",
                    "Images");

            internal static void AddImageTooLargeViolation(CrawlerProcessContext context, string details) {
                context.Violations.Add(new Violation(ImageTooLarge,
                    0, "details", details));
            }

            internal static void AddImagePixelFormatSmall(CrawlerProcessContext context) {
                context.Violations.Add(new Violation(ImagePixelFormatSmall, 0));
            }

            internal static void AddImageResolutionSmall(CrawlerProcessContext context, string resolution) {
                context.Violations.Add(new Violation(ImageResolutionSmall,
                    0, "res", resolution));
            }
        }
    }
}
```

As you can see, in BeginAnalysis the module registers three new properties with the Report, which it reaches through the Crawler property. Registration is only required if you want to provide custom display text or use a type other than string. Note that the current version only allows primitive types such as Integer, Float, DateTime, etc.

In the Process method, the module first makes sure that it only runs for known content types, and then performs its validations, raising a set of custom violations defined in the Violations static helper class. Note that we load the content from ResponseStream, the property that contains the content received from the server. If you were analyzing text, the Response property would contain the content instead (this is based on the content type, so HTML, XML, CSS, etc. are kept in this String property).

Registering it

When running inside IIS Manager, crawler modules need to be registered as a standard UI module first and then inside their initialization they need to be registered using the IExtensibilityManager interface. In this case to keep the code as simple as possible everything is added in a single file. So add a new file called "RegistrationCode.cs" and include the contents below:

```csharp
using System;
using Microsoft.Web.Management.Client;
using Microsoft.Web.Management.SEO.Crawler;
using Microsoft.Web.Management.Server;

namespace SampleCrawlerModule {

    internal class SampleCrawlerModuleProvider : ModuleProvider {

        public override ModuleDefinition GetModuleDefinition(IManagementContext context) {
            return new ModuleDefinition(Name, typeof(SampleCrawlerModule).AssemblyQualifiedName);
        }

        public override Type ServiceType {
            get { return null; }
        }

        public override bool SupportsScope(ManagementScope scope) {
            return true;
        }
    }

    internal class SampleCrawlerModule : Module {

        protected override void Initialize(IServiceProvider serviceProvider, ModuleInfo moduleInfo) {
            base.Initialize(serviceProvider, moduleInfo);

            IExtensibilityManager em = (IExtensibilityManager)GetService(typeof(IExtensibilityManager));
            em.RegisterExtension(typeof(CrawlerModule), new ImageExtension());
        }
    }
}
```

This code defines a standard UI IIS Manager module and in its client-side initialize method it uses the IExtensibilityManager interface to register the new instance of the Image extension. This will make it visible to the Site Analysis feature.

Testing it

To test it we need to add the UI module to Administration.config, which also means that the assembly needs to be strongly named and registered in the GAC.

To Strongly name the assembly

In Visual Studio you can do this easily: open "Project->Properties", select the "Signing" tab, check "Sign the assembly", and choose a key file. If you don't have one, choose New and specify a name.

After this you can compile the project and add the assembly to the GAC.

To GAC it

If you have the Windows SDK installed, you can use gacutil.exe. In my case the command is:

"\Program Files\Microsoft SDKs\Windows\v6.0A\bin\gacutil.exe" /if SampleCrawlerModule.dll

(Note, you could also just open Windows Explorer, navigate to c:\Windows\assembly and drag & drop your file in there, that will GAC it automatically).

Finally, to see the exact name that should be used in Administration.config, run the following command:

"\Program Files\Microsoft SDKs\Windows\v6.0A\bin\gacutil.exe" /l SampleCrawlerModule

In my case it displays:

SampleCrawlerModule, Version=1.0.0.0, Culture=neutral, PublicKeyToken=6f4d9863e5b22f10, …
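If gacutil is not at hand, the same identity string can also be read from the compiled DLL with .NET reflection. The sketch below is not part of the original walkthrough: `ProviderTypeName` is a hypothetical helper, and the DLL path you pass in is whatever your build produced. `AssemblyName.GetAssemblyName` reads the assembly's display name from the file.

```csharp
using System;
using System.Reflection;

public static class ProviderTypeName
{
    // Combines a type's full name with an assembly display name into the
    // "Type, Assembly, Version=..., Culture=..., PublicKeyToken=..." form.
    public static string Build(string typeFullName, string assemblyFullName)
    {
        return typeFullName + ", " + assemblyFullName;
    }

    // Reads the display name (name, version, culture, public key token)
    // from an assembly file on disk.
    public static string ReadAssemblyFullName(string assemblyPath)
    {
        return AssemblyName.GetAssemblyName(assemblyPath).FullName;
    }
}
```

For example, calling `ProviderTypeName.Build("SampleCrawlerModule.SampleCrawlerModuleProvider", ProviderTypeName.ReadAssemblyFullName(path))` with the path to your signed DLL should yield a string suitable for the type attribute used in the next step.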

Finally register it in Administration.config

Open Administration.config (located in %windir%\system32\inetsrv\config) in Notepad from an elevated instance, find the closing </moduleProviders> tag, and add an entry like the one below just before it, substituting your own values for Version and PublicKeyToken:

<add name="SEOSample" type="SampleCrawlerModule.SampleCrawlerModuleProvider, SampleCrawlerModule, Version=1.0.0.0, Culture=neutral, PublicKeyToken=6f4d9863e5b22f10" />
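For orientation, this is roughly where the entry ends up. The fragment is a sketch: the comment stands for whatever providers are already registered on your machine, and the Version and PublicKeyToken values are the sample ones from above.

```xml
<moduleProviders>
  <!-- existing provider entries stay as they are -->
  <add name="SEOSample" type="SampleCrawlerModule.SampleCrawlerModuleProvider, SampleCrawlerModule, Version=1.0.0.0, Culture=neutral, PublicKeyToken=6f4d9863e5b22f10" />
</moduleProviders>
```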

Use it

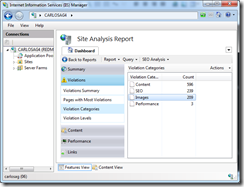

After registration you should be able to launch IIS Manager and navigate to Search Engine Optimization. Start a new analysis of your Web site. Once it completes, any violations found will be listed in the Violations Summary and in the other reports. For example, see below all the violations in the "Images" category.

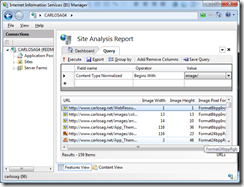

Since we also extended the metadata with the new fields (Image Width, Image Height, and Image Pixel Format), you can now use them with the Query infrastructure to easily create a report of all the images:

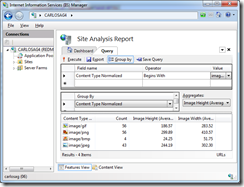

And since they are standard fields, they can be used in Filters, Groups, and any other functionality, including exporting data. So for example the following query can be opened in the Site Analysis feature and will display an average of the width and height of images summarized by type of image:

```xml
<?xml version="1.0" encoding="utf-8"?>
<query dataSource="urls">
  <filter>
    <expression field="ContentTypeNormalized" operator="Begins" value="image/" />
  </filter>
  <group>
    <field name="ContentTypeNormalized" />
  </group>
  <displayFields>
    <field name="ContentTypeNormalized" />
    <field name="(Count)" />
    <field name="Average(iWidth)" />
    <field name="Average(iHeight)" />
  </displayFields>
</query>
```
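To make the intent of the query concrete, here is roughly the same aggregation written with LINQ over an in-memory list. It only illustrates the semantics (filter, group, count, average) and does not use the Site Analysis query engine; `ImageRow`, `ImageSummary`, and `ImageReport` are hypothetical names, and the final ordering is added just to make the output deterministic.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical row: one crawled URL with the fields the module added.
public sealed class ImageRow
{
    public string ContentTypeNormalized;
    public int Width;
    public int Height;
}

// Hypothetical result row: one line of the grouped report.
public sealed class ImageSummary
{
    public string ContentType;
    public int Count;
    public double AverageWidth;
    public double AverageHeight;
}

public static class ImageReport
{
    public static List<ImageSummary> Summarize(IEnumerable<ImageRow> rows)
    {
        return rows
            // <filter>: content type begins with "image/"
            .Where(r => r.ContentTypeNormalized.StartsWith("image/", StringComparison.Ordinal))
            // <group>: one bucket per content type
            .GroupBy(r => r.ContentTypeNormalized)
            // <displayFields>: count and averages per bucket
            .Select(g => new ImageSummary
            {
                ContentType = g.Key,
                Count = g.Count(),
                AverageWidth = g.Average(r => r.Width),
                AverageHeight = g.Average(r => r.Height)
            })
            .OrderBy(s => s.ContentType, StringComparer.Ordinal)
            .ToList();
    }
}
```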

And of course violation details are shown as specified, including Recommendation, Description, etc.

Summary

As you can see, extending the SEO Toolkit with a Crawler Module allows you to provide additional information, whether metadata, violations, or links, for any document being processed. This can be used to add support for content types not supported out of the box, such as PDF, Office documents, or anything else that you need. It can also be used to extend the metadata by writing custom code that wires data from other systems into the report, giving you the ability to exploit this data using the Query capabilities of Site Analysis.

Comments

- Anonymous

November 23, 2009

Wow! This is an excellent walk-through of a practical use for a wonderful feature of the IIS SEO Toolkit. I had no idea the toolkit was extensible. Thanks a million, Carlos!

- Anonymous

January 06, 2010

Seems cool. How it affects the performance?

- Anonymous

January 07, 2010

The performance impact depends directly on the work done by the module. The overhead of adding a module that does nothing should be minimal. Also to consider is the fact that the work is done in multiple threads which means that even if loading each image takes a bit of time, the fact that it only impacts one thread while the others continue to do their work should make it overall very acceptable.

- Anonymous

January 25, 2010

The comment has been removed

- Anonymous

January 26, 2010

Currently we do not have a redistribution license, which means you can consume the DLL from your products if it is already installed in the system. You cannot register modules in app.config automatically, however you can add them programmatically to the WebCrawler.Modules collection which means you could discover them programmatically from any source (including app.config).

- Anonymous

January 26, 2010

Carlos, Thanks a lot for such a quick response. I've just noticed that the WebCrawler class has method named RegisterModule just for this. That's beautiful! I'm a little bit confused regarding the license. So if I've got it on my server (by installing the free toolkit or just by copying the DLL file) and I use it to generate reports that will be shown to users by using my UI (and also apply some changes to the result XML file). I'm talking here about a web site. Is it still Ok?

- Anonymous

January 26, 2010

You cannot just copy the DLL, it needs to be installed in its current packaging. So if you do it installing the SEO Toolkit (in its current MSI) then it should be fine to consume the API's in any way.

- Anonymous

January 26, 2010

Thanks Carlos. Actually I took DLL and run it on clean Windows 7 PC (no IIS installed) and it worked great. The only thing it requires is Full Trust when running in web app. I guess there's another DLL responsible for the UI part in IIS. Thanks for you help.

- Anonymous

January 26, 2010

When I say you cannot just copy, I mean "legally" copy it, there are no "redistribution" privileges in the current License. So legally the only way is to get the IIS SEO msi installed. Technically it definitely works.

- Anonymous

January 26, 2010

Thanks Carlos for the above information. I appreciate it.

- Anonymous

March 29, 2010

Thank for carlo , you are excellent

- Anonymous

April 12, 2010

How it affects the performance?

- Anonymous

April 12, 2010

If you are referring to how does it affect the performance of the crawler see above response: The performance impact depends directly on the work done by the module. The overhead of adding a module that does nothing should be minimal. Also to consider is the fact that the work is done in multiple threads which means that even if loading each image takes a bit of time, the fact that it only impacts one thread while the others continue to do their work should make it overall very acceptable.

- Anonymous

April 14, 2010

Thanks Carlos for the information. Very helpful for me

- Anonymous

April 21, 2010

Hi Carlos. I've tried to send you an email through your blog contact form and I think you did not get it. Is there an alternative way to send you messages? Thanks

- Anonymous

April 21, 2010

Hi Kobi, I have received it and I'm following up on the question you asked.

- Anonymous

April 21, 2010

Thanks a lot Carlos for such a quick response.

- Anonymous

June 04, 2010

@:CarlosAg : thankz for this infomation

- Anonymous

February 28, 2011

Thank you for sharing SEO ideas effectively. Expect to receive from you or write it. Wishing success

- Anonymous

March 02, 2011

Hi Carlos, great blogpost! How do I debug this extension dll for IIS SEO Toolkit on Windows 7? I can't add a reference to InetMgr.exe since that file is not shown even though I start Microsoft Visual Studio C# as administrator. It would be great if you wrote a blogpost about debugging extension dlls for IIS SEO Toolkit.

- Anonymous

March 03, 2011

To debug since you should be in a Class Library project (DLL) then you basically just need to set the "Project->Properties->Debug->Start Program" and set it to inetmgr.exe. If you are running in 64-bit machine you will need to do a hacky thing since VS is 32 bit it will redirect to SysWow and not show inetmgr.exe, so for that just use \\localhost\admin$\system32\inetsrv\inetmgr.exe as the target program that will workaround the redirection.

- Anonymous

March 06, 2011

Thanks Carlos! Debug now works perfectly :) Another question while I'm at it... I've been trying to read a config file for my dll (projectname.dll.config) but with no luck. The config file has been copied to the same location as inetmgr.exe, is there some magic that needs to be done to be able to read a dlls config file that's being run by inetmgr? Oh, and I've also tried to add some config lines to the InetMgr.exe.config but then IIS Toolkit won't run at all...

- Anonymous

March 07, 2011

I've got an almost automatic installer now, I've created the installer through a Visual Studio Installer Setup Project which also does the GAC automatically, sweet! The installer would be completely automatic if there was a way to register the module to the Administration.config file on install... does anyone know if this is possible, and if so... how do I do this?

- Anonymous

March 08, 2011

I wrote a blog on how you can do that a few years ago, check it out at: blogs.msdn.com/.../creatingsetupprojectforiisusingvisualstudio2008.aspx

- Anonymous

September 04, 2011

Hi again Carlos, I'm looking into ways of getting data out from SEO Toolkit without doing a manual export... is there any way of reading the stored data somehow? I'm guessing that the data from a crawl is stored in a database, can that database be queried? if so... how do I connect to it?

- Anonymous

December 11, 2011

Thanks for the nice example. What I was looking for is a way to recognize the canonical tags that google suggested. We are using HTTP and HTTPS and therefore get tons of errors with this tool. But we have the canonical tag set. Any way to extend / update this?