Building a dashboard on top of a web API with Power BI and Azure – Part 3

In Part 1 of this series we connected to the driveBC API in Power BI Desktop and built a simple dashboard. In Part 2 we designed the architecture capable of storing the data over time. In Part 3 we are implementing implement the architecture.

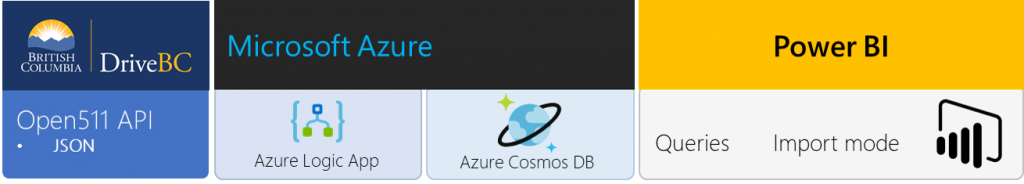

In the previous article, we defined our architecture as follow:

We will now build our data pipeline, composed of a Logic App that will query the source API every X minutes, extract the JSON payload and push it into Cosmos DB.

If you don't already have an Azure subscription, you can create a free account any time and even benefit from free credits.

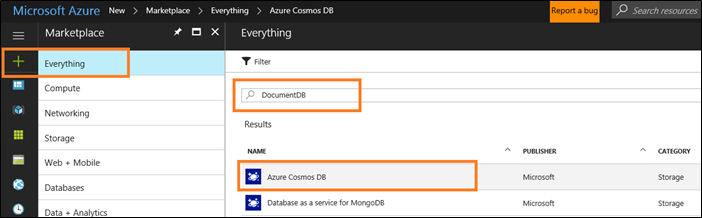

- Once logged into the Azure portal, we will provision a new Cosmos DB account (formerly Document DB):

- Be sure the select DocumentDB as the API type in the following screen:

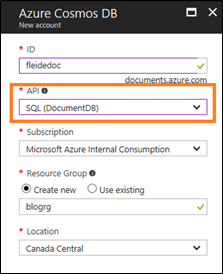

- The account will need an ID, and a resource group. A resource group is the equivalent of a project. It allows you to put all the assets used in a solution into a single container, to monitor and administer them together

- Here we are creating an account, that can host multiple databases, each one containing multiple collections (tables)

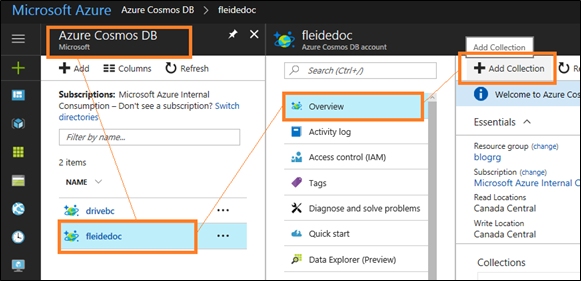

- Once the Cosmos DB account is provisioned, we need to add a database and a collection to it

- You can find your Cosmos DB account either by browsing through your Resource Group, via the Azure Cosmos DB pane, or by searching resources (top of the screen)

- The collection will contain events, let's name it accordingly

- To minimize cost, we can use fixed storage and minimal throughput (400)

- No need to partition yet

- We can use the Data Explorer to see what's in the collection (empty at the moment), but right now we'll just make a note of the URI (connection string) of the account

- We can now get started on the Logic App, let's create a new one

- As a best practice for demos, let's reuse the same Resource Group as the Cosmos DB account

- Once the Logic App is provisioned, we can start designing our tasks

- You can find the Logic App either by browsing through your Resource Group, via the Logic Apps pane, or by searching resources (top of the screen)

- Once found, we will then use the Logic Apps Designer to create the actual workflow

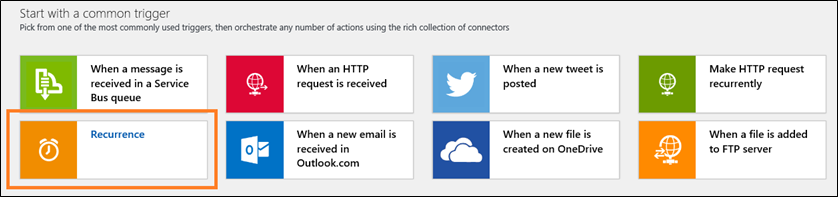

- Our trigger will be a recurrence (every X minutes)

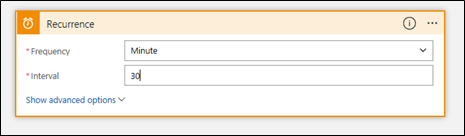

- Let's configure the recurrence to execute once every 30 minutes for now

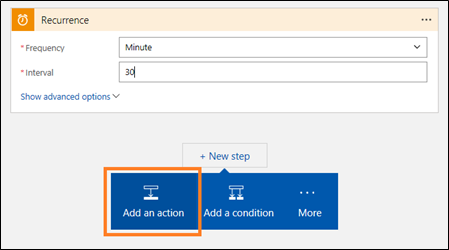

- Let's add an action…

- … to call our API

- We are calling that API through a URL, that's a HTTP action. We're doing nothing fancy so we can use the basic HTTP – HTTP action

- We will do a GET, on the URI of the API: https://api.open511.gov.bc.ca/events?format=json&status=ACTIVE&limit=300

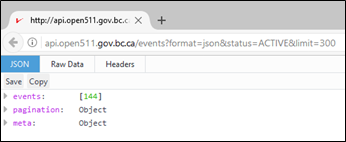

- From there we should receive the full payload (events + pagination + meta)

- In Firefox we can see how the payload is more than simply the list of events

- Let's parse it and extract the events, using the Parse JSON Data Operations

- What we need to parse is the body of the previous task using a schema

- We will use the dynamic content to grab the content (body) from the previous HTTP task

- And if we can usually generate the schema from an existing payload (link at the bottom of the activity), in our current context the schema needs a little bit of tweaking

- The geography attribute has two alternate structures (points or lines) that defeats the generator

- The correct one is available here, we can simply copy and paste it in the task

- Let's check that everything works so far : Save and Run

- Back in designer mode, we will need to iterate over the list of events, and insert each of them into our collection, using a For Each loop

- For each event, let's create a document (record) in our collection (table) with the Azure DocumentDB connector

- Let's select the Account first

- That will create a connection in the Resource Group that we will be able to reuse across Logic Apps, and also use to centralize administration

- And point to the right collection

- We will insert individual event (events – item), and not the whole events list

- Again, note that we selected individual events, as indicated by the suffix - item, and not the complete event list (events)

- In Upsert mode, which means insert if new and update if existing. The update is based on the id field by convention in DocumentDB. Note that we are lucky that the API also named its unique identifier id or we would have needed to handle that ourselves (generating the Document manually here instead of using the dynamic content, see step 6)

- We will insert individual event (events – item), and not the whole events list

- We can again check that everything works: Save and Run

- It takes a few minutes, due to using the lowest DocumentDB performance tier

- And even go see in the collection that our events are there (Cosmos DB > Collection > Data Explorer)

Without any other modification, our app is now running, and extracting data every 30 minutes from the API to push it into our DocumentDB.

Let's quickly connect to DocumentDB in Power BI Desktop and see how it goes:

- In Power BI Desktop : Get Data > Azure > DocumentDB

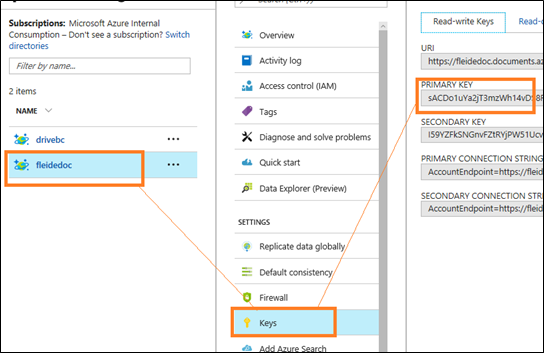

- We need the URL we noted earlier (Cosmos DB Account > Overview)

- We will also need an access key (Cosmos DB Account > Keys (Settings))

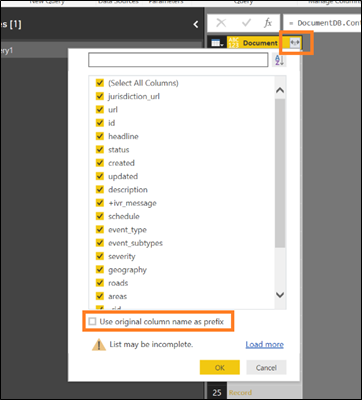

- Then we can Edit the query, and select all the columns we need

In this article, we built the pipeline that calls the API regularly, parse the payload and stores the extracted records in our database. We now have a solution that keeps data over time instead of only accessing the current payload of the API.

There is a lot more to add to that solution to get a complete product:

- importing archived events and archiving already loaded events (just another Logic App job with a different API call also in upsert mode),

- dealing with the event id that contains a forbidden character (Cosmos DB doesn't like "/" in the id field, it can be replaced on the fly in the Logic App, and the already loaded events can be quickly updated via C#),

- data modeling to create actual dimensions and handling the different geographies (lines and points) properly

- ...

But the basic blocs of the solution are there, and they were relatively easy to set up. That's the power of the public cloud with Azure.