Troubleshooting Oozie or other Hadoop errors with DEBUG logging

In troubleshooting Hadoop issues, we often need to review the logging of a specific Hadoop component. By default, the logging level is set to INFO or WARN for many Hadoop components like Oozie, Hive etc. and in many cases this level of logging is sufficient to trace the issue. However, in certain cases, INFO or WARN level logging may not be good enough to diagnose the issue, specifically when the error is a generic error, like this one- "JA009: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses" and when the error call stack does not provide a clue. Recently I worked with a customer who ran into this error while submitting an Oozie job to an HDInsight Linux cluster. As the error indicates, this is some sort of a configuration issue –but since there are quite a few places we can specify the same configuration, it is not always obvious where the misconfiguration is and it may sometimes become a tedious trial and error exercise. In this blog, I wanted to share an example of how DEBUG logging helped our troubleshooting and the steps to enable DEBUG logging for Oozie (we can use the same steps for other Hadoop components as well) on a Hadoop cluster. I used an HDInsight 3.2 Linux cluster, but these steps should apply to any Hadoop (2.x or later) cluster with Ambari 2.x.

The Issue and initial troubleshooting:

Customer attempted to submit an Oozie job, running Java Action, to an HDInsight 3.2 Linux cluster and the Java action failed with the error below –

org.apache.oozie.action.ActionExecutorException: JA009: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.

.....

Caused by: java.io.IOException: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.

at org.apache.hadoop.mapreduce.Cluster.initialize(Cluster.java:120)

at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:82)

at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:75)

at org.apache.hadoop.mapred.JobClient.init(JobClient.java:485)

at org.apache.hadoop.mapred.JobClient.<init>(JobClient.java:464)

at org.apache.oozie.service.HadoopAccessorService$2.run(HadoopAccessorService.java:436)

at org.apache.oozie.service.HadoopAccessorService$2.run(HadoopAccessorService.java:434)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1628)

at org.apache.oozie.service.HadoopAccessorService.createJobClient(HadoopAccessorService.java:434)

at org.apache.oozie.action.hadoop.JavaActionExecutor.createJobClient(JavaActionExecutor.java:1178)

at org.apache.oozie.action.hadoop.JavaActionExecutor.submitLauncher(JavaActionExecutor.java:927)

... 10 more

We reviewed the configurations specified in workflow.xml and Oozie REST payload (equivalent of job.properties or job.xml in Oozie/REST), but those appeared to be correct. We then decided to enable DEBUG level logging for Oozie.

Enabling Oozie DEBUG logging on HDInsight Linux:

For Oozie (same for other Hadoop components), logging properties are specified in the oozie-log4j.properties file. On HDInsight Linux Cluster, oozie-log4j.properties file is located under /etc./oozie/conf on headnode0. But we can enable DEBUG logging via Ambari web UI and enabling DEBUG logging is very simple via Ambari dashboard.

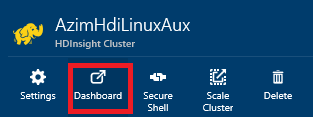

1. Login to 'Ambari web' from Azure portal

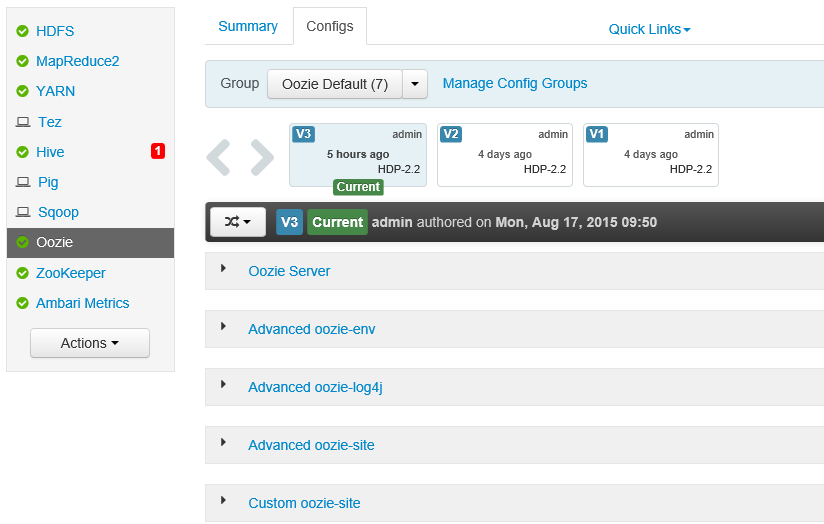

2. Select Oozie on the left hand side and then select the Configs tab –

3. Expand 'Advanced oozie-log4j' section and scroll to the section where we can see the logging levels defined-

Change the logging level to DEBUG for the logging type you are interested about. For example, I wanted to add more detailed logging in oozie-log, so I changed the following entries to DEBUG -

log4j.logger.org.apache.oozie=DEBUG, oozie, ETW

log4j.logger.org.apache.hadoop=DEBUG, oozie, ETW

After the change, click on Save to save the changes.

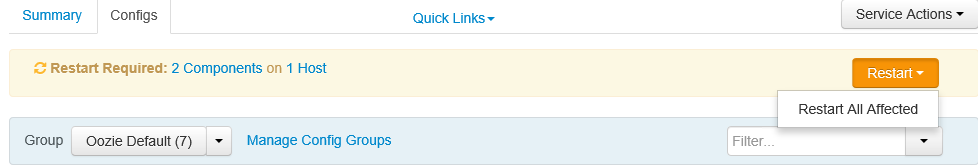

4. Ambari web UI will then indicate that services need to be restarted due to stale config, restart the Oozie services –

5. Now that DEBUG level logging is in place, we can reproduce the issue and collect the logs - on HDInsight Linux cluster, we can get the oozie-log via Oozie Web UI, by selecting a job and then clicking on 'Get logs' button, like below. To access Oozie Web UI, you will need to enable SSH tunneling, as shown in this blog

Alternatively, we can get all the Oozie logs from the directory /var/log/oozie.

Reviewing Debug Log

In my example, oozie-log (with DEBUG logging) had the following relevant entry just before the error we were troubleshooting-

2015-08-18 15:15:39,972 INFO Cluster:113 - SERVER[headnode0.MyCluster-ssh.c2.internal.cloudapp.net] Failed to use org.apache.hadoop.mapred.YarnClientProtocolProvider due to error: java.lang.reflect.InvocationTargetException

2015-08-18 15:15:39,973 DEBUG UserGroupInformation:1632 - SERVER[headnode0.MyCluster-ssh.c2.internal.cloudapp.net] PrivilegedActionException as:oozie (auth:PROXY) via oozie (auth:SIMPLE) cause:java.io.IOException: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.

We also noted the following entry in the debug log -

2015-08-18 15:14:25,754 DEBUG AzureNativeFileSystemStore:1915 - SERVER[headnode0.myCluster-ssh.c2.internal.cloudapp.net] Found blob as a directory-using this file under it to infer its properties https://mystoragescct.blob.core.windows.net/mycontainername/user/admin/qemp-workflow/config-default.xml

This made us look into config-default.xml, located under 'qemp-workflow', which was same as workflow application path. Review of the config-default.xml revealed that we had incorrect Jobtracker and NameNode specified in there. Looking back, we may wonder why we didn't look at the config-default.xml in the first place, but that's the whole point J there are quite a few places configurations can be present and the DEBUG logging helped to reveal the existence of the config file that we were not aware of initially.

On Another flavor of this error, DEBUG logging helped to point to the root cause of the error –

2015-08-17 15:02:06,817 DEBUG UserGroupInformation:1632 - SERVER[headnode0.AzimHdiLinuxAux-ssh.j10.internal.cloudapp.net] PrivilegedActionException as:admin (auth:PROXY) via oozie (auth:SIMPLE) cause:org.apache.hadoop.fs.UnsupportedFileSystemException: No AbstractFileSystem for scheme: wasbs

Reverting back to previous configuration-

The nice thing about Ambari Web UI is that it stores multiple versions of the Configs, like below, which enable us to compare between a certain version and current version and then revert back to one of the previous versions, like below –

So, after we are done with troubleshooting, we can revert back to INFO level debugging by selecting the previous version and making it Current. This will require restart of Oozie services, as prompted by Ambari Web.

NOTE: We strongly recommend that you revert back the logging level to default since DEBUG level logging is very verbose and may cause the disk to run out of space.

The Takeaways-

- My example was for Oozie, but using the steps above, you can enable DEBUG logging for other Hadoop components (such as Hive, Pig etc. for which log4j logging settings can be changed via Ambari) for troubleshooting when default level of logging doesn't help.

- The above steps are not limited to changing logging level - using the Ambari web UI, you can change various configurations of Hadoop components for quick testing and then reverting it back.

Enabling Oozie DEBUG logging on HDInsight Windows:

I wanted to quickly touch on how we can enable debug logging on an HDInsight windows cluster. On HDInsight Windows, we don't have Ambari 2.x, nor the Ambari Web UI - so the steps are different. On HDInsight Windows cluster, oozie-log4j.properties file is located under the folder %OOZIE_ROOT%\conf or 'C:\apps\dist\oozie-4.1.0.2.2.7.1-0004\oozie-win-distro\conf'.

1. On the active headnode, go to folder %OOZIE_ROOT%\conf or 'C:\apps\dist\oozie-4.1.0.2.2.7.1-0004\oozie-win-distro\conf' (oozie version will vary).

2. Manually edit the file oozie-log4j.properties , such as -

log4j.logger.org.apache.oozie=DEBUG, oozie, ETW

log4j.logger.org.apache.hadoop=DEBUG, oozie, ETW

3. Go to start -> Run -> Services.msc and restart the Apache Hadoop OozieService

4. After troubleshooting is done, change the log4j logging back to the default level and restart the Oozie service again.

Happy troubleshooting J

Azim

Comments

- Anonymous

September 10, 2015

The comment has been removed