The HDInsight Support Team is Open for Business

Hi, my name is Dan and I work on the HDInsight Support Team at Microsoft.

This week the Azure HDInsight Service reached the General Availability (GA) milestone and the HDInsight support team is officially open for business!

Azure HDInsight is a 100% Apache compatible Hadoop distribution available on Windows Azure. Instead of building our own Hadoop distribution, Microsoft chose to partner with Hortonworks to port Apache Hadoop to the Windows platform. Microsoft has contributed 6,000+ engineering hours and over 25,000 lines of code to various Apache projects related to the Hadoop ecosystem. One of Microsoft's more notable contributions is Windows Azure Storage-Blob (WASB), a thin wrapper that exposes Windows Azure block blob storage as an HDFS file system from your Azure HDInsight cluster. Microsoft is also actively engaged in and contributing to the Hive Stinger project, and is working to achieve better security integration on the Windows platform.

Microsoft aims to make the complex simple … or at least we try. :) Have you ever installed a Hadoop cluster from scratch? If you have, you'll appreciate how easy we've made the HDInsight cluster provisioning experience. Just specify the DNS name for your cluster, a size up to 32 nodes (larger clusters are possible) and a couple of additional details, click "Create HDInsight Cluster", and 15 minutes later your HDInsight cluster will be up and running!

When the cluster provisioning process is complete you'll have a 100% Apache compatible Hadoop cluster with the following architecture:

Let's take a quick "lap" around the cluster and understand the functionality exposed by each node.

Secure Role or Gateway Node:

The secure role is a reverse proxy that serves as a "gateway" to your Azure HDInsight cluster. The secure role performs authentication and authorization, and exposes endpoints for WebHCat, Ambari, HiveServer, HiveServer2, and Oozie on port 443. To authenticate to the cluster, you use the user name and password you specified at the time of cluster provisioning.

Head Node:

The head node is an Azure XL (A4) VM with 8 cores and 14 GB of RAM. In an Azure HDInsight cluster the head node runs important services that supply "core" Hadoop functionality:

• NameNode

• Secondary NameNode

• JobTracker

Your head node also runs the following data services:

• HiveServer and HiveServer2

• Pig

• Sqoop

• Metastore

• Derbyserver

And the following operational services:

• Oozie

• Templeton

• Ambari

Worker Nodes:

The worker nodes run on Azure Large (A3) VMs with 4 cores and 7 GB of RAM. The worker nodes run services that support task scheduling, task execution and data access:

• TaskTracker

• DataNode

• Pig

• Hive Client
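If you want a feel for what a given cluster size adds up to, here's a small back-of-the-envelope sketch in Python. It uses the VM sizes quoted above (8 cores and 14 GB for the head node, 4 cores and 7 GB per worker node). Treating the cluster size as a count of worker nodes, and leaving the secure gateway out of the totals, are my assumptions for illustration; the function name is made up.

```python
# VM sizes as quoted in this post.
HEAD_NODE = {"cores": 8, "ram_gb": 14}    # Azure XL (A4)
WORKER_NODE = {"cores": 4, "ram_gb": 7}   # Azure Large (A3)


def cluster_capacity(worker_count):
    """Total cores and RAM for one head node plus worker_count worker nodes.

    Assumption: the secure gateway is not counted, and worker_count is the
    number of worker nodes only.
    """
    if worker_count < 1:
        raise ValueError("a cluster needs at least one worker node")
    return {
        "cores": HEAD_NODE["cores"] + worker_count * WORKER_NODE["cores"],
        "ram_gb": HEAD_NODE["ram_gb"] + worker_count * WORKER_NODE["ram_gb"],
    }


if __name__ == "__main__":
    # 8 + 4 * 4 = 24 cores, 14 + 4 * 7 = 42 GB
    print(cluster_capacity(4))
```

Keep in mind that only the worker nodes run TaskTracker and DataNode, so the head node's share of these totals is not available for task execution.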

Windows Azure Storage-Blob (WASB):

The default file system on your Azure HDInsight cluster is Azure Blob Storage. Microsoft has implemented a thin layer over Azure Blob Storage that exposes it as an HDFS file system we call Windows Azure Storage-Blob or WASB.

The great thing about WASB is that you can interact with it using DFS commands, directly through the Blob Storage REST APIs, or through one of the many popular explorer tools. There are a couple of subtle distinctions about WASB that really make it a natural choice in the Azure cloud. First, any data you store in WASB will be available across HDInsight cluster life cycles. When you are finished using your Azure HDInsight cluster and delete it, the data stored in Azure Blob Storage isn't deleted. The next time you provision a cluster you can point it back at the same Windows Azure storage account and use the data again if you desire.

If you have "born in cloud" data that's created by a service you run in the Azure data center, you can directly access that same data from your Azure HDInsight cluster provided your cluster is configured to access the storage account where the data is stored.

It's also much cheaper to store data in WASB because it doesn't require an operational HDInsight cluster to upload or download data. So, there's no additional compute cost like there would be if you stored the data in local HDFS in your HDInsight cluster.

Lastly, storing data in WASB also makes it easy to share it with other applications that run outside of your HDInsight cluster.

There are other benefits of using WASB with HDInsight that are documented here.
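To make the addressing a bit more concrete: data in WASB is referenced with a wasb:// URI that names the container and the storage account. Here's a minimal Python sketch that builds such a URI and prints a DFS listing command for it. The container, account and path names are placeholders I made up, and the helper function is hypothetical rather than part of any HDInsight tooling.

```python
def wasb_uri(container, account, path=""):
    """Build a wasb:// URI for a path inside a blob container.

    The container, account and path values are supplied by the caller;
    nothing here checks that they actually exist.
    """
    if not container or not account:
        raise ValueError("container and account are required")
    return "wasb://{0}@{1}.blob.core.windows.net/{2}".format(
        container, account, path.lstrip("/"))


if __name__ == "__main__":
    # Placeholder names, for illustration only.
    uri = wasb_uri("mycontainer", "mystorageaccount", "example/data")
    # A DFS command you could run on the cluster against that location.
    print("hadoop fs -ls " + uri)
```

Because the URI names the storage account explicitly, the same form can address data in any storage account your cluster is configured to access, not only the default one.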

Local HDFS:

Local HDFS storage is also available on your HDInsight cluster, but its use is discouraged because it's more expensive (the cluster has to be operational) and anything stored in an Azure HDInsight cluster's local HDFS file system is deleted when the cluster is deleted.

What are the Versions of the Apache Hadoop Applications on my HDInsight Cluster?

The versions of the Apache components installed on your Azure HDInsight 2.1 cluster are as follows:

| Component | Version |
|---|---|
| Apache Hadoop | 1.2.0 |
| Apache Hive | 0.11.0 |
| Apache Pig | 0.11 |
| Apache Sqoop | 1.4.3 |
| Apache Oozie | 3.2.2 |
| Apache HCatalog | Merged with Hive |
| Apache Templeton | 0.1.4 |
| SQL Server JDBC Driver | 3.0 |
| Ambari | API v1.0 |

What's Next?

So now that you have an Azure HDInsight cluster, what can you do with it?

You have two ways to interact with your Azure HDInsight cluster.

- Remote access via remote job submission or REST endpoints

- RDP

Remote Access via Remote Job Submission

The preferred mechanism to interact with your Azure HDInsight cluster is remotely via the Secure Gateway node I described earlier. You can programmatically submit MapReduce, Streaming MapReduce, Pig and Hive jobs via PowerShell or the HDInsight SDK. You can also remotely access your Hive warehouse using Excel and the Hive ODBC Driver.

Remote Access via REST Endpoints

You can retrieve Ambari metrics and submit Oozie workflows using their respective REST APIs (also via the secure gateway on port 443).

If you want to send REST requests directly to the secure gateway's endpoint, be aware that you need to embed the name of the Apache application that's the target of the REST call in the request URI. The secure gateway node validates the URI, and uses rewrite rules to forward the request to the correct application and port on the HDInsight cluster behind the secure gateway.
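To make that URI layout concrete, here's a small Python sketch that assembles a gateway URL with the application name as the first path segment. The function name and the "mycluster" DNS name are placeholders of mine. The Ambari resource path is the one used in this post's curl example; I'm deliberately not guessing at paths for the other applications.

```python
from urllib.parse import quote

GATEWAY_DOMAIN = "azurehdinsight.net"
GATEWAY_PORT = 443


def gateway_url(cluster_dns_name, application, resource_path):
    """Build a secure gateway URL for one application on the cluster.

    The application name (for example "ambari") becomes the first path
    segment, which is what the gateway inspects when forwarding the request.
    """
    host = "{0}.{1}".format(cluster_dns_name, GATEWAY_DOMAIN)
    # Percent-encode each path segment separately so "/" separators survive.
    segments = [quote(part) for part in resource_path.strip("/").split("/")]
    return "https://{0}:{1}/{2}/{3}".format(
        host, GATEWAY_PORT, quote(application), "/".join(segments))


if __name__ == "__main__":
    # "mycluster" is a placeholder cluster DNS name.
    print(gateway_url(
        "mycluster", "ambari",
        "api/v1/clusters/mycluster.azurehdinsight.net/services"))
```

Note that this helper only builds the URL. Authentication still happens at the gateway with the user name and password you chose at provisioning time.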

Here's an example of an Ambari REST request using curl. Substitute your user name, password and cluster DNS name for the angle-bracket placeholders in the request URI in the example below:

| curl -u <username>:<password> -k https://<YourClusterDnsName>.azurehdinsight.net:443/ambari/api/v1/clusters/<YourClusterDnsName>.azurehdinsight.net/services/ |
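If you'd rather script the same call, here's a rough Python equivalent of the curl command above, using only the standard library. It assumes the gateway accepts HTTP Basic authentication (which is what curl's -u option sends by default) and that the response body is JSON. The function names are my own, and you would pass in your own cluster DNS name and credentials.

```python
import base64
import json
import ssl
import urllib.request


def ambari_services_url(cluster_dns_name):
    """Return the Ambari services URL used in the curl example."""
    host = cluster_dns_name + ".azurehdinsight.net"
    return "https://{0}:443/ambari/api/v1/clusters/{0}/services/".format(host)


def basic_auth_header(username, password):
    """Build the value of an HTTP Basic Authorization header."""
    raw = "{0}:{1}".format(username, password).encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def get_ambari_services(cluster_dns_name, username, password, verify_tls=True):
    """Send the request and return the parsed response.

    Assumption: the response body is JSON. verify_tls=False mirrors
    curl's -k flag and should only be used for testing.
    """
    request = urllib.request.Request(ambari_services_url(cluster_dns_name))
    request.add_header("Authorization",
                       basic_auth_header(username, password))
    context = ssl.create_default_context()
    if not verify_tls:
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context,
                                timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Unlike the curl command, this sketch verifies the server certificate unless you explicitly pass verify_tls=False.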

Remote Access via RDP

You can also access your cluster via Remote Desktop (RDP) although RDP access is turned off by default. To enable RDP, access your HDInsight cluster via the Windows Azure management portal and click on "CONFIGURATION".

After the screen renders, click "ENABLE REMOTE" and the "Configure Remote Desktop" dialog will launch. Specify an RDP user name and password and an expiration date for remote access. To be clear, the RDP user is a different user than the account you specified when you provisioned the cluster. The RDP user account cannot be used to authenticate to any of the endpoints exposed by the secure gateway. It can only be used to connect to the Azure HDInsight cluster over RDP.

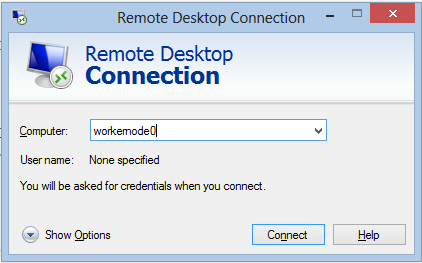

When you RDP into your cluster you will land on the cluster head node that was described earlier. After logging into the head node over RDP, you can also RDP into any of your cluster's worker nodes. To do so, launch the RDP client and specify workernode[n] as the "Computer" name in the Remote Desktop Connection dialog, where "n" is the zero-based index of the node you want to connect to. You cannot log directly into one of your cluster's worker nodes from outside your HDInsight cluster.
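As a quick illustration of the zero-based naming, here's a tiny Python sketch that lists the worker node names for a cluster of a given size. I'm assuming the name is simply "workernode" followed by the index with no brackets or padding; the function name is made up.

```python
def worker_hostnames(worker_count):
    """List the RDP computer names for worker nodes, counting from 0.

    Assumption: names take the form workernode0, workernode1, and so on.
    """
    if worker_count < 0:
        raise ValueError("worker_count cannot be negative")
    return ["workernode{0}".format(n) for n in range(worker_count)]


if __name__ == "__main__":
    for name in worker_hostnames(4):
        print(name)
```

So on a four-node cluster the last worker is index 3, not 4.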

Making Changes to Your HDInsight Cluster using RDP

Please be aware that you shouldn't store any files on the C:, D: or E: drives on your cluster head node or worker nodes. You also shouldn't modify any files or install any software on your HDInsight cluster while logged in via RDP. If you do, your changes will be lost when software updates are applied to the VMs that host your Azure HDInsight cluster.

Cluster customization is supported via the HDInsight PowerShell cmdlets. You can find an example of how to customize your cluster when provisioning it here.

Well, that's more ground than I intended to cover in my first post but I hope you find this content useful. Please let us know how we are doing, and what you would like us to write about.

How to Contact Us

As a team we look forward to engaging with you and helping you to get the most out of Azure HDInsight! If you would like to contact us, log into the Windows Azure Management portal and click the "Windows Azure" down-arrow and then click "SUPPORT":

Comments

- Anonymous

November 12, 2013

Nicely written!

- Anonymous

February 03, 2015

Do you have a list of all the ports for HDInsight and Blob storage so tools like AZCopy, Storage Explorer and RDP to Headnodes work?

- Anonymous

May 20, 2015

Thanks for the nice document. Can you point me to some support for HDInsight HBase table setting? Particularly nested entities. Thanks again.