Some Commonly Used Yarn Memory Settings

We recently worked on an out-of-memory issue that occurred with certain workloads on HDInsight clusters, so this seemed like a good time to write up what we learned while troubleshooting memory issues. A few memory settings can be tuned to suit your specific workloads. The nice thing about some of these settings is that they can be configured either at the Hadoop cluster level or for specific queries known to exceed the cluster's memory limits.

Some Key Memory Configuration Parameters

Yarn is the data operating system that handles resource management for the cluster. It serves batch workloads that use MapReduce as well as other interactive and real-time workloads. Memory settings can be set at the Yarn container level and also at the mapper and reducer level. Memory is requested in increments of the Yarn container size, and mapper and reducer tasks run inside a container. Let us introduce some parameters here and understand what they mean.

mapreduce.map.memory.mb and mapreduce.reduce.memory.mb

Description: Upper memory limit for the map/reduce task. If the memory used by the task exceeds this limit, the corresponding container is killed.

These parameters determine the maximum amount of memory that can be assigned to map and reduce tasks respectively. Let us look at an example. Say a Hive job runs on the MR framework and needs a mapper and a reducer. The mapper is bound by an upper memory limit defined in the configuration parameter mapreduce.map.memory.mb. However, if the value of yarn.scheduler.minimum-allocation-mb is greater than the value of mapreduce.map.memory.mb, then yarn.scheduler.minimum-allocation-mb is respected and containers of that size are given out.
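The interaction between the task request and the scheduler minimum can be sketched in a few lines of Python. This is only an illustration under an assumption: that the scheduler rounds each request up to the next multiple of yarn.scheduler.minimum-allocation-mb. Some scheduler configurations use a separate increment setting, so treat the function name `granted_container_mb` and the figures as hypothetical rather than as the scheduler's actual code.

```python
import math


def granted_container_mb(requested_mb: int, min_alloc_mb: int) -> int:
    """Estimate the container size granted for a memory request.

    Assumption: requests are rounded up to a multiple of
    yarn.scheduler.minimum-allocation-mb, and never fall below one unit.
    """
    if requested_mb < 0 or min_alloc_mb <= 0:
        raise ValueError("sizes must be positive")
    units = max(1, math.ceil(requested_mb / min_alloc_mb))
    return units * min_alloc_mb


if __name__ == "__main__":
    # A mapper asking for less than the minimum still gets a full minimum unit.
    print(granted_container_mb(256, 512))
    # A request between two multiples is rounded up to the next one.
    print(granted_container_mb(1100, 512))
```

Under that assumption, a request smaller than the minimum is always bumped up, which is why a low mapreduce.map.memory.mb does not by itself produce small containers.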

This parameter needs to be set carefully, because it restricts the amount of memory that a mapper/reducer task has to work with. If it is set too low, the result can be slower performance or OOM errors. If it is set to a large value that your workloads do not typically need, concurrency drops on a busy system, because fewer applications can run in parallel with larger container allocations. It is important to test workloads, set this parameter to a value that serves most of them, and tweak it at the job level for unusual memory requirements. Please note that for Hive queries on Tez, the configuration parameters hive.tez.container.size and hive.tez.java.opts can be used to set the container limits. By default these values are set to -1, which means that they fall back to the mapreduce settings, but they can be overridden at the Tez level. Shanyu's blog covers this in greater detail.
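The concurrency trade-off is simple arithmetic. The sketch below assumes each node offers a fixed amount of memory to containers (on a real cluster that figure comes from yarn.nodemanager.resource.memory-mb, which this post does not cover). The 8192 MB node size and the function name are made up for illustration.

```python
def containers_per_node(node_memory_mb: int, container_mb: int) -> int:
    """Return how many containers of a given size fit on one node.

    node_memory_mb is the memory the node offers to containers
    (an assumed, illustrative figure here).
    """
    if container_mb <= 0:
        raise ValueError("container size must be positive")
    return node_memory_mb // container_mb


if __name__ == "__main__":
    node_memory_mb = 8192  # hypothetical node
    for container_mb in (1024, 2048, 3072):
        fit = containers_per_node(node_memory_mb, container_mb)
        print(f"{container_mb} MB containers: {fit} per node")
```

Tripling the container size in this made-up case cuts the number of tasks a node can run at once from eight to two, which is the reduced concurrency described above.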

How to set this property: Can be set at site level with mapred-site.xml. This change does not require a service restart.

yarn.scheduler.minimum-allocation-mb

Description: This is the minimum allocation size of a container. All memory requests will be handed out as increments of this size.

Yarn hands out resources in increments called containers, and the minimum allocation size of a container is set by the configuration property yarn.scheduler.minimum-allocation-mb. This is the smallest unit of container memory that can be granted. So even if a certain workload needs only 100 MB of memory, it is still granted 512 MB, because that is the minimum size defined in yarn.scheduler.minimum-allocation-mb for this cluster. This parameter needs to be chosen carefully based on your workload, so that it provides the performance you need without wasting cluster resources. If the container a task ends up with cannot accommodate the memory the task needs, the result is an out of memory error. The error below is from my cluster, where a container used more memory than it was granted, so the NodeManager killed the container and left a message like the following in the logs. I would need to look at my workload and increase the value of this configuration property to allow my workloads to complete without OOM errors.

Vertex failed,vertexName=Reducer 2, vertexId=vertex_1410869767158_0011_1_00,diagnostics=[Task failed, taskId=task_1410869767158_0011_1_00_000006,diagnostics=[AttemptID:attempt_1410869767158_0011_1_00_000006_0

Info:Container container_1410869767158_0011_01_000009 COMPLETED with diagnostics set to [Container [pid=container_1410869767158_0011_01_000009,containerID=container_1410869767158_0011_01_000009] is running beyond physical memory limits.

Current usage: 512.3 MB of 512MB physical memory used; 517.0 MB of 1.0 GB virtual memory used. Killing container.

Dump of the process-tree for container_1410869767158_0011_01_000009 :

|- PID CPU_TIME(MILLIS) VMEM(BYTES) WORKING_SET(BYTES)

|- 7612 62 1699840 2584576

|- 9088 15 663552 2486272

|- 7620 3451328 539701248 532135936

Container killed on request. Exit code is 137

Container exited with a non-zero exit code 137
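When scanning many such messages, it can help to pull the usage and limit figures out of the diagnostics text. The following Python sketch assumes the message keeps the "X MB of Y MB physical memory used" wording shown above. The function name and regular expression are my own and are not part of any Hadoop tooling.

```python
import re

# Matches e.g. "512.3 MB of 512MB physical memory used".
USAGE_PATTERN = re.compile(
    r"(?P<used>[\d.]+)\s*(?P<used_unit>[KMGT]B) of "
    r"(?P<limit>[\d.]+)\s*(?P<limit_unit>[KMGT]B) physical memory used"
)

UNIT_TO_MB = {"KB": 1 / 1024, "MB": 1, "GB": 1024, "TB": 1024 * 1024}


def physical_memory_usage_mb(diagnostics: str):
    """Return (used_mb, limit_mb) from a container diagnostics message,
    or None if the message does not contain the expected wording."""
    match = USAGE_PATTERN.search(diagnostics)
    if match is None:
        return None
    used = float(match["used"]) * UNIT_TO_MB[match["used_unit"]]
    limit = float(match["limit"]) * UNIT_TO_MB[match["limit_unit"]]
    return used, limit


if __name__ == "__main__":
    line = ("Current usage: 512.3 MB of 512MB physical memory used; "
            "517.0 MB of 1.0 GB virtual memory used. Killing container.")
    print(physical_memory_usage_mb(line))
```

A usage figure just above the limit, as in this case, suggests the container was only slightly too small for the task.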

How to set this property: Set at site level in yarn-site.xml. Because this is a ResourceManager scheduler setting, it cannot be overridden at the job level, and a change needs a recycle of the RM service. On HDInsight, this needs to be done when provisioning the cluster with custom configuration parameters.

yarn.scheduler.maximum-allocation-mb

Description: This is the maximum allocation size allowed for a container.

This property defines the maximum memory allocation possible for a single container request made to the ResourceManager, whether the container is for an application master or for a task. Again, this needs to be chosen carefully: if the value is not large enough to accommodate the needs of processing, the request is rejected. Say mapreduce.map.memory.mb is set to 1024 and yarn.scheduler.maximum-allocation-mb is set to 300. This leads to a problem, as the maximum allocation possible for a container is 300 MB but the mapper task asks for 1024 MB as defined in the mapreduce.map.memory.mb setting. Here is the error you would see in the logs:

org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request, requested memory < 0, or requested memory > max configured, requestedMemory=1024, maxMemory=300
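A quick pre-flight check can catch this mismatch before a job is submitted. The Python sketch below mirrors the condition named in the exception text (requested memory below zero or above the configured maximum). It is not the ResourceManager's code: the function name is hypothetical and the settings are passed in as a plain dictionary rather than read from the cluster.

```python
REQUEST_KEYS = (
    "mapreduce.map.memory.mb",
    "mapreduce.reduce.memory.mb",
    "yarn.app.mapreduce.am.resource.mb",
)


def find_oversized_requests(settings: dict) -> list:
    """List the memory requests that the scheduler maximum would reject.

    settings maps property names to values in MB; properties that are
    absent are simply skipped.
    """
    max_mb = int(settings["yarn.scheduler.maximum-allocation-mb"])
    problems = []
    for key in REQUEST_KEYS:
        if key not in settings:
            continue
        requested = int(settings[key])
        if requested < 0 or requested > max_mb:
            problems.append(
                f"{key}: requestedMemory={requested}, maxMemory={max_mb}"
            )
    return problems


if __name__ == "__main__":
    # The values from the example above.
    print(find_oversized_requests({
        "yarn.scheduler.maximum-allocation-mb": 300,
        "mapreduce.map.memory.mb": 1024,
    }))
```

The same check applies to yarn.app.mapreduce.am.resource.mb, covered later in this post, since the application master container is also subject to the maximum.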

How to set this property: Set at site level in yarn-site.xml. Because this is a ResourceManager scheduler setting, it cannot be overridden at the job level, and a change needs a recycle of the RM service. On HDInsight, this needs to be done when provisioning the cluster with custom configuration parameters.

mapreduce.reduce.java.opts and mapreduce.map.java.opts

Description: These properties configure the maximum and minimum JVM heap size. Use -Xmx for the maximum and -Xms for the minimum.

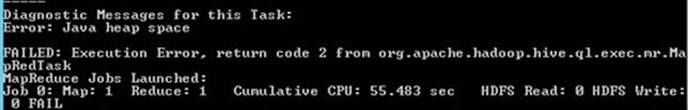

The heap size set here needs to be less than the upper bound for the map/reduce task defined in mapreduce.map.memory.mb/mapreduce.reduce.memory.mb, as the heap has to fit within the memory allocation for the task. These configuration parameters specify the amount of heap space available to the JVM process running inside a container. If they are not properly configured, the result is Java heap space errors like the ones in the log excerpt further down.
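The relationship between the heap setting and the container limit can be checked mechanically. The Python sketch below extracts the -Xmx value from a java.opts string and compares it to the container size. The 0.8 headroom fraction is a commonly quoted rule of thumb and an assumption on my part, not something this post prescribes, and the function names are hypothetical.

```python
import re


def xmx_mb(java_opts: str):
    """Return the -Xmx value in MB, or None if no -Xmx flag is present."""
    match = re.search(r"-Xmx(\d+)([kKmMgG]?)", java_opts)
    if match is None:
        return None
    value = int(match.group(1))
    unit = match.group(2).lower()
    if unit == "g":
        return float(value * 1024)
    if unit == "m":
        return float(value)
    if unit == "k":
        return value / 1024
    return value / (1024 * 1024)  # no suffix means bytes


def heap_fits(java_opts: str, container_mb: int,
              max_heap_fraction: float = 0.8) -> bool:
    """Check that the heap leaves headroom inside the container.

    max_heap_fraction is an assumed rule of thumb; returns False when
    no -Xmx flag is found, since the heap size cannot be verified.
    """
    heap = xmx_mb(java_opts)
    return heap is not None and heap <= container_mb * max_heap_fraction


if __name__ == "__main__":
    print(heap_fits("-Xmx819m", 1024))   # heap leaves headroom
    print(heap_fits("-Xmx1024m", 1024))  # heap as large as the container
```

The headroom matters because the JVM uses memory beyond the heap, and the container limit applies to the whole process.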

You can get the Yarn logs by running the yarn logs command with the applicationId and redirecting the output to a file, for example: yarn logs -applicationId <applicationId> > heaperr.txt

Now, let us look at the relevant error messages from the Yarn logs that were extracted to heaperr.txt:

2014-09-18 14:36:32,303 INFO [IPC Server handler 29 on 59890] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1410959438010_0051_r_000004 asked for a task

2014-09-18 14:36:32,303 INFO [IPC Server handler 29 on 59890] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1410959438010_0051_r_000004 given task: attempt_1410959438010_0051_r_000000_1

2014-09-18 14:41:11,809 FATAL [IPC Server handler 18 on 59890] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Task: attempt_1410959438010_0051_r_000000_1 - exited : Java heap space

2014-09-18 14:41:11,809 INFO [IPC Server handler 18 on 59890] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Diagnostics report from attempt_1410959438010_0051_r_000000_1: Error: Java heap space

2014-09-18 14:41:11,809 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1410959438010_0051_r_000000_1: Error: Java heap space

As we can see, reduce attempt 1 has exited with an error due to insufficient Java heap space. I am able to tell that this is a reduce attempt from the letter 'r' in the ID, as highlighted here - jvm_1410959438010_0051_r_000004. It would be a letter 'm' for the mapper. This reduce task would be tried 4 times, as defined in the mapred.reduce.max.attempts config property in mapred-site.xml, and in my case all four attempts failed with the same error.

When you are testing a workload to determine memory settings on a standalone one-node box, you can lower the number of attempts by reducing the mapred.reduce.max.attempts config property. You can then find the amount of memory the workload needs by tweaking the different memory settings, and from that determine the right configuration for the cluster.

From the above output, it is clear that a reduce task has a problem with the available heap space. I could solve this issue by increasing the heap space with a set statement just for this query, as most of my other queries were happy with the default heap space defined in mapred-site.xml for the cluster. The committed heap bytes counter can be used to look at the heap memory that the job eventually consumed. It is accessible from the job counters page.
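Reading the task type out of an attempt ID is easy to automate when there are many log lines to go through. The Python sketch below assumes the MapReduce ID layout seen in the excerpt above (attempt_<timestamp>_<job>_<m|r>_<task>_<attempt>). The names are my own, and Tez attempt IDs, which have a different layout, are deliberately not matched.

```python
import re
from typing import NamedTuple, Optional


class TaskAttempt(NamedTuple):
    job_id: str
    task_type: str       # "map" or "reduce"
    task_number: int
    attempt_number: int


ATTEMPT_PATTERN = re.compile(r"attempt_(\d+_\d+)_([mr])_(\d+)_(\d+)")


def parse_attempt_id(text: str) -> Optional[TaskAttempt]:
    """Find the first MapReduce attempt ID in text and split it into parts."""
    match = ATTEMPT_PATTERN.search(text)
    if match is None:
        return None
    task_type = "map" if match.group(2) == "m" else "reduce"
    return TaskAttempt(
        job_id="job_" + match.group(1),
        task_type=task_type,
        task_number=int(match.group(3)),
        attempt_number=int(match.group(4)),
    )


if __name__ == "__main__":
    line = ("Task: attempt_1410959438010_0051_r_000000_1 "
            "- exited : Java heap space")
    print(parse_attempt_id(line))
```

Grouping failures by task type this way shows quickly whether the map or the reduce heap setting is the one that needs attention.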

How to set this property: Can be set at site level with mapred-site.xml, or can be set at the job level. This change does not require a service restart.

yarn.app.mapreduce.am.resource.mb

Description: This is the amount of memory that the Application Master for MR framework would need.

Again, this needs to be set with care, as a larger allocation for the AM means lower concurrency: you can spin up only so many AMs before exhausting the containers on a busy system. This value also must not exceed what is defined in yarn.scheduler.maximum-allocation-mb. If it does, it creates an error condition like the example below.

2014-10-23 13:31:13,816 ERROR [main]: exec.Task (TezTask.java:execute(186)) - Failed to execute tez graph.

org.apache.tez.dag.api.TezException: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request, requested memory < 0, or requested memory > max configured, requestedMemory=1536, maxMemory=699

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.validateResourceRequest(SchedulerUtils.java:228)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.validateResourceRequest(RMAppManager.java:385)

How to set this property: Can be set at site level with mapred-site.xml, or can be set at the job level. This change does not require a service restart.

This brings us to the end of our look at some of the most commonly used memory config parameters with Yarn. There is a nice reference from Hortonworks here that talks about a tool that gives some best practice suggestions for memory settings and also goes over how to set these values manually. It can be used as a starting point for testing some of your workloads, which you can then tune iteratively to suit your specific needs. Hope you found some of these nuggets helpful. We will try to explore some more tuning parameters in further posts.

-Dharshana

@dharshb

Thanks to Dan and JasonH for reviewing this post!
