Transient Data Stores

By Ryan Coffman, Cloud Solution Architect

There is an ever-increasing need for traditional integration architecture and services when building cloud solutions. While deployment and implementation have accelerated with the cloud, finding the right tool for the job has become much harder.

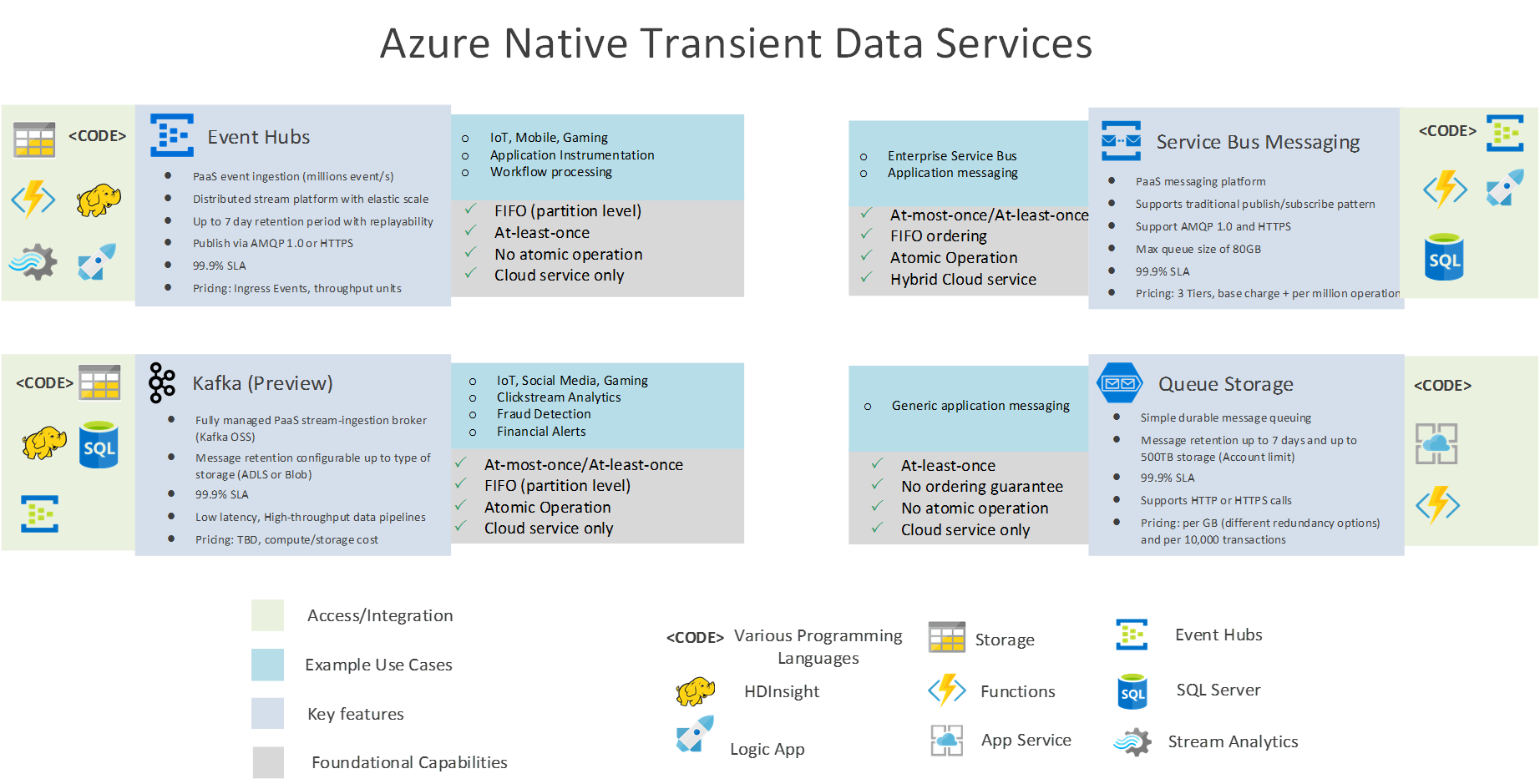

A couple of months ago, our topic for Data & Analytics partners was persistent data stores. In this post, we’ll cover four Microsoft Azure transient data services: Azure Event Hubs, Apache Kafka on Azure HDInsight, Azure Service Bus, and Azure Queue storage. I have excluded Azure IoT Hub from this discussion because it is built specifically for IoT use cases and has additional features that do not apply to the services covered here.

The image above provides more details about each of these services, including access and integration, key features, and foundational capabilities. Understanding these features can help you make a preliminary decision about which transient data service is best suited to your target use case.

Use case considerations

As any consultant will tell you, the most common answer to selecting the right tool is “it depends.” This discussion is no different. However, you could argue that each of these services falls into one of two main patterns: stream data pipelines and application messaging.

Discussions about big data typically involve volume, velocity, and variety. For this discussion, we’ll focus on velocity and on streaming, or continuous, data. A subset of streaming data follows an event pattern, meaning each record signals that something has happened. With events come a larger number of records (volume) and a more diverse set of protocols and data types (variety). Lambda architecture is a popular pattern in which stream data pipelines are applied; they form its speed layer. For example, architects can combine Apache Kafka or Azure Event Hubs (ingest) with Apache Storm (event processing), Apache HBase (speed layer), Hadoop for storing the master dataset (batch layer), and, finally, Microsoft Power BI for reporting and visualization (serving layer).
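To make the layer roles concrete, here is a minimal, in-memory Python sketch of the Lambda pattern, assuming page-view counts per URL as the metric. The batch layer recomputes a view from the full master dataset, the speed layer incrementally counts events that arrived since the last batch run, and the serving layer merges the two at query time. The class and method names are hypothetical; in the architecture described above, these roles would be played by Hadoop, Storm with HBase, and the reporting tier, not by Python dictionaries.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class LambdaPipeline:
    """Hypothetical in-memory stand-in for the batch, speed, and serving layers."""

    master_dataset: list = field(default_factory=list)  # batch layer: append-only raw events
    batch_view: Counter = field(default_factory=Counter)  # result of the last batch run
    realtime_view: Counter = field(default_factory=Counter)  # speed layer: events since that run

    def ingest(self, url: str) -> None:
        # Every event is stored in the master dataset and also counted
        # incrementally, so it is queryable before the next batch run.
        self.master_dataset.append(url)
        self.realtime_view[url] += 1

    def run_batch(self) -> None:
        # Recompute the batch view from scratch over the full master dataset,
        # then discard the speed-layer counts that it now covers.
        self.batch_view = Counter(self.master_dataset)
        self.realtime_view.clear()

    def query(self, url: str) -> int:
        # Serving layer: merge the batch view with the real-time view.
        return self.batch_view[url] + self.realtime_view[url]


pipeline = LambdaPipeline()
for url in ["/home", "/cart", "/home"]:
    pipeline.ingest(url)
pipeline.run_batch()
pipeline.ingest("/home")  # arrives after the batch run
print(pipeline.query("/home"))  # 3
```

Clearing the speed layer right after the batch run only works here because the sketch is single-threaded; a real deployment has to handle events that arrive while the batch job is still running.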

Here is an example of applying Azure Event Hubs or Apache Kafka:

You’re a new online retailer that wants to reach customers as they browse your website. Your reach is global, and you could potentially have millions of concurrent sessions. However, you are just getting started and can’t afford the upfront costs of data center deployments and other expenses. In the past, few options could meet requirements like these, and cost limited how fast you could scale.

Considering what Azure offers, you see that both Azure Event Hubs and Apache Kafka are good options for data ingest. Both can easily scale out to meet the demand of ingesting events from your weblogs. You also have a few use cases where message ordering is important; both Event Hubs and Kafka guarantee ordering within a partition, so either would work. Finally, you would like to retain messages and replay events for up to a month at a time. Based on this, Kafka is the better choice, because at the time of writing Event Hubs limits retention to seven days.
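The two properties this scenario hinges on, per-partition ordering and time-bounded replay, can be illustrated with a small in-memory model. The Python sketch below is hypothetical and does not use the Kafka or Event Hubs client libraries; it assumes events are routed to partitions by hashing a key (such as a session ID), that each partition assigns increasing offsets, and that a retention window purges old records.

```python
from __future__ import annotations

import zlib
from dataclasses import dataclass


@dataclass
class Record:
    offset: int
    timestamp: float  # seconds
    key: str
    value: str


class PartitionedLog:
    """Hypothetical in-memory model of a partitioned log with time-based retention."""

    def __init__(self, num_partitions: int, retention_seconds: float) -> None:
        self.partitions = [[] for _ in range(num_partitions)]
        self.next_offset = [0] * num_partitions
        self.retention_seconds = retention_seconds

    def partition_for(self, key: str) -> int:
        # A stable hash sends every event with the same key to the same
        # partition, which is what preserves per-key ordering.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def append(self, key: str, value: str, timestamp: float) -> tuple[int, int]:
        p = self.partition_for(key)
        record = Record(self.next_offset[p], timestamp, key, value)
        self.partitions[p].append(record)
        self.next_offset[p] += 1  # offsets only grow and are never reused
        return p, record.offset

    def enforce_retention(self, now: float) -> None:
        # Purge records older than the retention window.
        cutoff = now - self.retention_seconds
        self.partitions = [[r for r in part if r.timestamp >= cutoff] for part in self.partitions]

    def replay(self, partition: int, from_offset: int = 0) -> list[Record]:
        # Consumers choose their starting offset, so they can rewind and
        # reprocess anything still inside the retention window.
        return [r for r in self.partitions[partition] if r.offset >= from_offset]


DAY = 86_400
log = PartitionedLog(num_partitions=4, retention_seconds=30 * DAY)
p, _ = log.append("session-42", "viewed /home", timestamp=0)
log.append("session-42", "added to cart", timestamp=10 * DAY)
log.append("session-42", "checked out", timestamp=20 * DAY)
log.enforce_retention(now=35 * DAY)  # the day-0 event is now older than 30 days
print([r.value for r in log.replay(p)])  # ['added to cart', 'checked out']
```

With `retention_seconds=7 * DAY`, the same `enforce_retention` call would purge all three events, which is why a month-long replay requirement rules out a seven-day retention limit.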

This is a simple scenario that emphasizes the factors to consider when selecting components for decoupling your architecture. I’ve listed a few high-level requirements and questions further down.

Application messaging has been around for many years and is commonly implemented with enterprise application integration (EAI) or enterprise service bus (ESB) patterns. Components in this space have evolved slowly. Azure has taken some of the best features from these toolsets and built them into Azure Service Bus messaging and Azure Queue storage. A typical application messaging pattern is less about analyzing a continuous stream and more about using a transport layer to tell another component to do something or to get something back in return.
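The “do something or get something in return” style of messaging is often implemented as request/reply over two queues, with a correlation ID linking each reply to its request; Azure Service Bus messages carry properties such as `CorrelationId` and `ReplyTo` for this purpose. The Python sketch below uses the standard library’s in-process `queue.Queue` as a stand-in for a broker queue. The worker, message fields, and SKU values are hypothetical; a real deployment would use the Service Bus or Queue storage client libraries instead.

```python
import queue
import threading
import uuid

request_queue: queue.Queue = queue.Queue()
reply_queue: queue.Queue = queue.Queue()


def inventory_worker() -> None:
    """Hypothetical backend component: handles commands and sends correlated replies."""
    while True:
        message = request_queue.get()
        if message is None:  # sentinel value: stop the worker
            return
        sku = message["body"]["sku"]
        # Copy the request's message_id into correlation_id so the sender
        # can match this reply to the request that produced it.
        reply_queue.put({
            "correlation_id": message["message_id"],
            "body": {"sku": sku, "in_stock": sku != "sold-out-item"},
        })


def request_reply(sku: str, timeout: float = 5.0) -> dict:
    # Assumes one outstanding request at a time; a real client would track
    # pending correlation IDs instead of expecting replies in order.
    message_id = str(uuid.uuid4())
    request_queue.put({"message_id": message_id, "body": {"sku": sku}})
    reply = reply_queue.get(timeout=timeout)  # raises queue.Empty on timeout
    if reply["correlation_id"] != message_id:
        raise RuntimeError("reply does not match the outstanding request")
    return reply["body"]


worker = threading.Thread(target=inventory_worker, daemon=True)
worker.start()
result = request_reply("blue-shirt")
print(result)  # {'sku': 'blue-shirt', 'in_stock': True}
request_queue.put(None)  # ask the worker to stop
worker.join()
```

The sender and the worker never call each other directly; they only share the two queues, which is the decoupling that application messaging provides.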

Below is an example of how you might apply Azure Service Bus or Azure Queue storage:

You’re a web architect looking to build a new web application backend. In the past, you may have looked at tools such as ActiveMQ, MSMQ, ZeroMQ, Tibco EMS, and others. Your management has indicated that any new development needs to be cloud-centric, as the company plans to migrate away from its on-premises data center. Your teams have encountered operational overhead and scalability issues with tools they used in the past. Your company is now considering Azure to host most of its new workloads, and you are evaluating Azure Queue storage and Azure Service Bus. Initially, you won’t have any hybrid requirements, because the new backend will be hosted only in the cloud. Messages will be no larger than 64 KB, and total queue storage will be less than 80 GB. Finally, you need more advanced features, such as a no-duplicate-messages guarantee and atomic operations (grouping messages together in a long-lived transaction). Based on these requirements, both Queue storage and Service Bus are worthy contenders, but only Service Bus fulfills all of them.
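The no-duplicates requirement is one of the features that separates the two options in this scenario. Azure Service Bus offers duplicate detection, which discards a message whose `MessageId` was already accepted during a configurable time window, while Storage queues provide at-least-once delivery, so consumers of a storage queue need their own idempotency check. The Python sketch below models the time-window idea in memory; the class, methods, and window length are hypothetical and are not part of any Azure SDK.

```python
from dataclasses import dataclass, field


@dataclass
class DeduplicatingQueue:
    """Hypothetical queue that discards a message whose ID was already accepted
    within `window_seconds` (a simplified model of broker-side duplicate detection)."""

    window_seconds: float
    _seen: dict = field(default_factory=dict, init=False)  # message_id -> time first accepted
    _messages: list = field(default_factory=list, init=False)

    def send(self, message_id: str, body: str, now: float) -> bool:
        # Forget IDs that have aged out of the detection window.
        self._seen = {mid: t for mid, t in self._seen.items() if now - t < self.window_seconds}
        if message_id in self._seen:
            return False  # duplicate inside the window: not enqueued
        self._seen[message_id] = now
        self._messages.append(body)
        return True

    def drain(self) -> list:
        # Hand all enqueued messages to a consumer and empty the queue.
        messages, self._messages = self._messages, []
        return messages


q = DeduplicatingQueue(window_seconds=600)
q.send("order-1001", "charge card for order 1001", now=0)
q.send("order-1001", "charge card for order 1001", now=5)  # sender retry after a timeout
q.send("order-1002", "charge card for order 1002", now=6)
accepted = q.drain()
print(accepted)  # ['charge card for order 1001', 'charge card for order 1002']
```

The retry is dropped because it reuses the same message ID inside the window; the same ID sent after the window expires would be accepted again, so the window has to be longer than the sender’s retry horizon.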

Below are some high-level differences to consider between these services; you can find more detailed comparisons in the resources at the end of this post.

- High-level use case – New analytics application versus web application backend communication

- On-premises (hybrid options) – On-premises applications and systems versus on-premises data sources

- Message retention (time, storage) – Can I replay messages or reprocess (restate) data from the beginning?

- Message delivery guarantee – Can I track whether messages are delivered, and how many times (at most once, at least once, or exactly once)?

- Message ordering guarantee – Does my use case need ordered messages (think database CRUD operations)?

- Source data protocols – Do my sources use AMQP, MQTT (IoT), or REST (HTTP) endpoints? Do I need to support multiple source types (databases, files)?

- Atomic operations – Can I process or group operations together to create a long-lived transaction?
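The atomic-operations question above asks whether several messaging operations can succeed or fail as one unit. A minimal way to picture this, assuming an in-memory queue and a hypothetical `transaction()` helper rather than any Azure SDK call, is to stage sends and publish them only if the whole group completes. In the web backend scenario earlier in this post, this is one of the capabilities that favors Service Bus.

```python
from contextlib import contextmanager


class TransactionalQueue:
    """Hypothetical queue whose sends can be grouped to commit all together or not at all."""

    def __init__(self) -> None:
        self.committed = []

    @contextmanager
    def transaction(self):
        staged = []  # sends are buffered here, invisible to consumers
        yield staged.append
        # Reached only if the with-block finished without raising:
        # publish the whole group at once.
        self.committed.extend(staged)


orders = TransactionalQueue()
with orders.transaction() as send:
    send("reserve inventory for order 1001")
    send("charge card for order 1001")

try:
    with orders.transaction() as send:
        send("reserve inventory for order 1002")
        raise RuntimeError("payment service unavailable")
except RuntimeError:
    pass  # the staged send for order 1002 was discarded

print(orders.committed)  # ['reserve inventory for order 1001', 'charge card for order 1001']
```

Because the exception propagates out of the generator at the `yield`, the `extend` call never runs for the failed group, so consumers never see a half-finished order.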

Partner opportunity

Integration architecture is complex, but I hope the information above gives you enough to make an informed decision when evaluating Azure transient data services. With business requirements changing rapidly, architects, data engineers, and developers will need to become more familiar with making multiple services work together to deliver the end solution.

Resources

- Navigating the Azure data services jungle

- Azure Event Hubs documentation

- Get started with Azure Queue storage using .NET

- Storage queues and Service Bus queues: compared and contrasted

- Overview of the Azure IoT Hub service

- Comparison of Azure IoT Hub and Azure Event Hubs

- Apache Kafka for HDInsight

- Apache Kafka documentation

- Lambda Architecture implementation using Microsoft Azure