SQL Server 2016 SSIS Data Flow Buffer Auto Sizing capability benefits data loading on Clustered Columnstore tables

Reviewers: Joe Sack, Arvind Shyamsundar, Murshed Zaman, Sanjay Mishra

This post introduces changes to the SSIS Data Flow task in SQL Server 2016 that help when loading data into Columnstore tables.

Columnstore indexes are widely used in SQL Server relational data warehouses because customers get both better performance and lower storage cost from the compressed data. SSIS is a popular ETL tool that is often used to load data into the warehouse. We covered some data loading best practices in Data Loading Performance Considerations with Clustered Columnstore indexes on SQL Server 2014, and mentioned a few caveats about choosing an appropriate Maximum insert commit size: because of the maximum buffer size in SSIS, rows can land uncompressed in a delta store. For details, see the section "SSIS Data Flow Task Max Insert Commit Size and implications on Row group sizes" in that post.

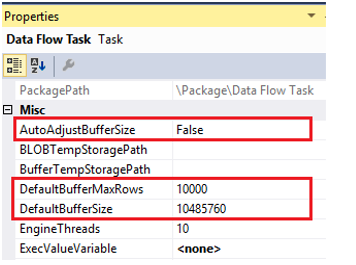

On SQL Server 2016, the default SSIS Data Flow properties show that DefaultBufferSize is 10 MB and DefaultBufferMaxRows is 10,000.

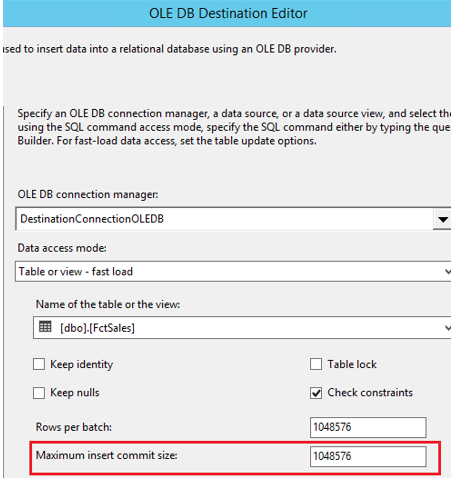

Given the maximum rowgroup size of 1,048,576 rows, if you try to force the Maximum insert commit size to that value as shown below, you can still end up with very few rows committed per insert and, more importantly, they can all land in the delta store, which is a rowstore. The number of rows inserted in one transaction depends entirely on the row size, that is, on how many rows fit in a 10 MB buffer. Prior to SQL Server 2016, you could raise DefaultBufferSize only to a maximum of 100 MB. For additional details, see the article SSIS Buffer Sizing.

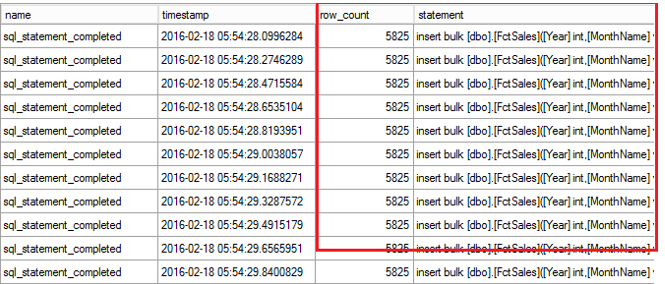

The Extended Events capture below shows that each bulk insert ended up with a batch size of 5,825 rows.

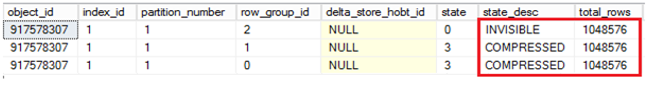
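As a rough sanity check on that batch size, the sketch below estimates how many rows fit in one SSIS buffer. The function name `rows_per_buffer` is hypothetical, and the 1,800-byte row size is an assumption chosen only because it reproduces the 5,825 figure; the post does not state the row size of this example table.

```python
def rows_per_buffer(buffer_bytes: int, row_bytes: int, max_rows: int = 10_000) -> int:
    """Estimate the rows held by one data flow buffer.

    A buffer is limited both by its size in bytes (DefaultBufferSize)
    and by a row cap (DefaultBufferMaxRows); the smaller limit wins.
    """
    if row_bytes <= 0:
        raise ValueError("row_bytes must be positive")
    return min(max_rows, buffer_bytes // row_bytes)


DEFAULT_BUFFER_BYTES = 10 * 1024 * 1024  # 10 MB default DefaultBufferSize
ASSUMED_ROW_BYTES = 1_800                # assumption, not taken from the post

print(rows_per_buffer(DEFAULT_BUFFER_BYTES, ASSUMED_ROW_BYTES))
```

Under these assumptions the byte limit, not the 10,000-row cap, is what restricts the batch.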

From the DMV output below, you can see that all the rows end up in the delta store. You could raise DefaultBufferSize to 100 MB and the row count per batch would increase, but the result still depends on the size of the row. Ultimately the rowgroup fills up, is closed, and is compressed by the Tuple Mover, but that is not the most efficient way to load data, because the data is not compressed immediately after the load.

select * from sys.dm_db_column_store_row_group_physical_stats
where object_id = object_id('FctSales')

AutoAdjustBufferSize Data Flow Property

SQL Server 2016 introduces a new Data Flow property called AutoAdjustBufferSize. When it is set to "true", the DefaultBufferSize setting is ignored and the actual buffer size is calculated from DefaultBufferMaxRows. The formula used to calculate the buffer size is:

BufferSize = DefaultBufferMaxRows (set at Design time) * Rowsize calculated at runtime.
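To get a feel for the sizes this formula produces, here is a minimal sketch that evaluates it directly. The function name `auto_adjusted_buffer_bytes` is hypothetical, and the row size used is the 1,674-byte figure reported for the sample table in the performance tests in this post; the row size SSIS computes at runtime may differ.

```python
def auto_adjusted_buffer_bytes(default_buffer_max_rows: int, row_bytes: int) -> int:
    """BufferSize = DefaultBufferMaxRows * row size, as stated in the post."""
    if default_buffer_max_rows <= 0 or row_bytes <= 0:
        raise ValueError("both arguments must be positive")
    return default_buffer_max_rows * row_bytes


MAX_ROWGROUP_ROWS = 1_048_576  # maximum rowgroup size
SAMPLE_ROW_BYTES = 1_674       # row size of the sample table in the tests

size_bytes = auto_adjusted_buffer_bytes(MAX_ROWGROUP_ROWS, SAMPLE_ROW_BYTES)
print(f"{size_bytes:,} bytes = {size_bytes / 2**20:,.0f} MiB")
```

For a wide row this is a large buffer, so the machine running the package needs enough memory to hold it.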

Given this, you can set the Maximum insert commit size to an appropriate batch size (a good start is 1,048,576). This is very useful for a large data transfer, because you are not committing the entire transfer in one transaction. Returning to the prior example, if I set the AutoAdjustBufferSize property to true and set DefaultBufferMaxRows to 1,048,576, which is the maximum rowgroup size, the behavior changes: the Maximum insert commit size is honored because the buffer size is adjusted automatically.

![clip_image010[8] clip_image010[8]](https://msdntnarchive.z22.web.core.windows.net/media/2016/02/clip_image0108_thumb.jpg)

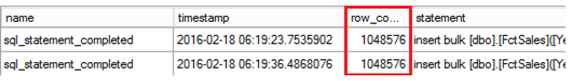

Extended Events captured for the inserts from the SSIS package show the Maximum insert commit size being honored.

The goal is to get the data into compressed rowgroups directly, as you can see below. As an aside, INVISIBLE is an in-memory state of a compressed rowgroup before it is made available to users.

Performance test results

These settings also affect load times. The table below shows load test results for a sample table with a row size of 1,674 bytes. The results come from a specific VM with a specific table definition; performance in other scenarios will depend on the machine configuration and the table definition.

| Test | SSIS Data Flow configuration | Effective batch size | Rows | Elapsed time |
| --- | --- | --- | --- | --- |
| 1 | AutoAdjustBufferSize = False; DefaultBufferSize = 10485760 (default) | 10,000 | 30 million | 15:54 |
| 2 | AutoAdjustBufferSize = False; DefaultBufferSize = 104857600 | 62,638 | 30 million | 12:03 |
| 3 | AutoAdjustBufferSize = True; DefaultBufferMaxRows = 1048576 | 1,048,576 | 30 million | 5:56 |

In Test 2, DefaultBufferSize is 100 MB (the maximum on SQL Server 2014) and each row is 1,674 bytes, so at most 62,638 rows fill the buffer before SSIS issues a commit, which means they all end up in the delta store. Only Test 3 in this scenario loaded directly into compressed rowgroups.
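The outcome of the three tests can be sketched with a simple threshold check. This assumes the documented columnstore bulk load behavior in which a batch of at least 102,400 rows is compressed directly while smaller batches go to the delta store; the names `MIN_ROWS_FOR_DIRECT_COMPRESSION` and `landing_zone` are hypothetical, and the batch sizes are the effective batch sizes from the table above.

```python
# Assumed threshold: bulk load batches of at least this many rows are
# written straight to compressed rowgroups; smaller ones go to a delta store.
MIN_ROWS_FOR_DIRECT_COMPRESSION = 102_400


def landing_zone(batch_rows: int) -> str:
    """Return where a bulk insert batch of the given size is expected to land."""
    if batch_rows >= MIN_ROWS_FOR_DIRECT_COMPRESSION:
        return "compressed rowgroup"
    return "delta store"


# Effective batch sizes reported for the three tests.
effective_batch_rows = {1: 10_000, 2: 62_638, 3: 1_048_576}

for test, rows in effective_batch_rows.items():
    print(f"Test {test}: {rows:,} rows per batch -> {landing_zone(rows)}")
```

Under that assumption, only the batch size of Test 3 clears the threshold, which matches the observation above.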

Note: If the machine running the SSIS package cannot sustain the larger buffer size in memory, increasing the buffer size can result in paging, which can be monitored with the "Buffers Spooled" performance counter.

The AutoAdjustBufferSize property of the Data Flow task is a great addition in SQL Server 2016 to aid in data loading scenarios.

Comments

- Anonymous

April 20, 2016

Hi, it's possible to load data directly into compressed rowgroups - so no delta stores - using SSIS 2014. You have to set the Max Number of Rows to 1,048,576, the Max Buffer Size to 100MB. Then in the OLE DB Destination, you set the Batch Size to 1,048,576 as well, and the Max Insert Commit Size to 0. If your row size is not too big, you should get the 1,048,576 row group size in the CCI without delta stores.

- Anonymous

July 10, 2016

Exactly the point, if rowsize is small it doesn't matter, if it is a very wide row there are cases even setting 100 MB won't be enough to get even 102,400 rows into compressed storage.

- Anonymous

May 13, 2016

One question. If the AutoAdjustBuffer option is used (as in case #3), is the 100MB limit ignored? If all 1,048,576 rows are in one buffer does this mean the buffer is ~1.6GB? Clarification would be great (is there still a 100MB limit if not using AutoAdjustBuffer, does AutoAdjustBuffer ignore the limit?). Thanks, Ken
