Scaling Heavy Network Traffic with Windows

Under Windows 2000 Server and Windows Server 2003 (RTM), the interrupts from a single network adaptor (NIC) cannot be handled by multiple CPU cores. When the NIC fires an interrupt, a Deferred Procedure Call (DPC) is scheduled to run. The DPC delivers the received packets from the NIC to the networking subsystem of the OS. The NIC driver blocks further interrupts from the network card until the DPC has been handled. If your system makes heavy use of network bandwidth, sending all interrupts and DPCs to one CPU may overwhelm that CPU and cause a bottleneck. In extreme cases the system may even become unresponsive as DPC processing consumes all CPU cycles.

One workaround, if you run Windows 2000 Server or Windows Server 2003, is to use the Interrupt Affinity Filter Tool. This tool, which applies only to the x86 versions of Windows 2000/2003, is documented in KB 252867. Using the Interrupt Affinity Filter Tool, you can affinitize different network adaptors to different CPU cores. This overcomes part of the problem and increases scalability by distributing interrupts across CPU cores. Be aware that the Windows 2008 tool for network affinity is not the same tool as the one for Windows 2000/2003 Server. You can specify network affinity in Windows 2008 by editing the registry directly or by using the new Interrupt-Affinity Policy Tool.

The Windows 2003 Scalable Networking Pack (SNP) improves network scaling further by introducing Receive Side Scaling (RSS). RSS is also included in both Windows 2003 SP2 and Windows 2008. This improvement includes the ability to handle DPCs from a single network adaptor concurrently and asynchronously on multiple CPU cores. The improvements are documented in Scalable Networking with RSS. Be aware that your network card must support this feature to gain the full advantage of the implementation.
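To make the idea of spreading one adaptor's receive work across cores more concrete, here is a minimal Python sketch of hash-based flow steering. It is only an illustration under stated assumptions: real RSS computes a Toeplitz hash in the NIC and uses the low-order bits of that hash to index an indirection table, while this sketch substitutes CRC32 as a stand-in hash. The function names, the table size of 128, and the sample addresses are all hypothetical.

```python
import zlib
from collections import Counter


def flow_hash(src_ip, src_port, dst_ip, dst_port):
    """Hash a TCP/IP 4-tuple. CRC32 is a stand-in here (assumption);
    RSS-capable hardware uses a Toeplitz hash instead."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key)


def build_indirection_table(cpus, size=128):
    """Fill a fixed-size table with the CPUs allowed to run receive DPCs,
    assigned round-robin. The size of 128 is an illustrative choice."""
    return [cpus[i % len(cpus)] for i in range(size)]


def pick_cpu(table, hash_value):
    """Use the low-order bits of the hash to index the table."""
    return table[hash_value % len(table)]


# Hypothetical example: four CPUs share the receive load of one NIC.
rss_cpus = [0, 1, 2, 3]
table = build_indirection_table(rss_cpus)

# Simulate 1000 connections that differ only in their source port.
load = Counter(
    pick_cpu(table, flow_hash("10.0.0.5", port, "10.0.0.1", 1433))
    for port in range(40000, 41000)
)
for cpu in sorted(load):
    print(f"CPU {cpu}: {load[cpu]} flows")
```

Because the hash depends only on the connection's addresses and ports, every packet of a given connection lands on the same CPU, which preserves in-order processing per connection while different connections are handled in parallel.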

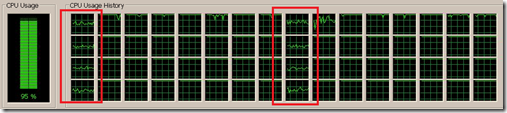

Even with RSS, high-bandwidth workloads can still benefit from affinitizing the network cards to specific CPU cores. For example, using interrupt affinity and RSS was a crucial optimization for the ETL World Record. When scaling Integration Services to 64 cores, we dedicated every 8th core (one core and one NIC for each NUMA node) to network traffic. Using one NIC per NUMA node keeps the memory local to the node. We affinitized the rest of the cores to SQL Server. At full bulk insert speed on the 64-core Unisys ES/7000, our CPU load looked like this:

Notice the highlighted cores – these cores are dedicated to handling network traffic. The system had throughput of over 800 MB/sec at the point above.
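The core layout described above can be expressed as a pair of affinity bitmasks. The following Python sketch is only an illustration: it assumes 8 cores per NUMA node and that the first core of each node (0, 8, 16, ...) is the one reserved for network traffic, which the text does not specify. The helper names are hypothetical.

```python
TOTAL_CORES = 64
CORES_PER_NODE = 8  # assumption: 64 cores spread over 8 NUMA nodes


def network_cores(total=TOTAL_CORES, per_node=CORES_PER_NODE):
    """Pick one core per NUMA node for NIC interrupts and DPCs.
    Choosing the first core of each node is an assumption."""
    return list(range(0, total, per_node))


def to_mask(cores):
    """Convert a collection of core numbers into an affinity bitmask,
    where bit n set means core n is included."""
    mask = 0
    for core in cores:
        mask |= 1 << core
    return mask


net_mask = to_mask(network_cores())
all_mask = to_mask(range(TOTAL_CORES))
sql_mask = all_mask & ~net_mask  # every remaining core goes to SQL Server

print(f"network cores: {network_cores()}")
print(f"network mask:  0x{net_mask:016X}")
print(f"SQL mask:      0x{sql_mask:016X}")
```

Keeping the two masks disjoint is the point of the exercise: the cores that service network interrupts never compete with SQL Server worker threads for CPU time.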

Other uses of network affinity and RSS include Analysis Services Process Data operations, data mart loading, heavy ETL activity, and other scenarios where the network cards on the server are under heavy load.

/Thomas Kejser

Reviewers: Stuart Ozer, Carl Rabeler, Denny Lee, Prem Mehra, Ashit Gosalia, Ahmed Talat, Kun Cheng, Burzin Patel. Special thanks to Rade Trimceski.
