Optimizing Replication Agent profile parameters for better performance

When SQL Server replication is used on environments with high traffic OLTP systems, users often need to adjust the agent profile parameters to increase the throughput of the log reader and distribution agents to keep up with the workload. We recently performed a series of tests to measure the performance of log reader and distribution agents while changing some of the parameters for these agents. This blog summarizes the outcomes and conclusions from this testing.

Machine Specifications

3 VMs hosted on a HP DL-380 were used in the testing. All VM's were running with the following configurations.

Storage - SSD

Processor: Intel(R) Xeon(R) CPU E5-2698 v3 @ 2.30GHz, 16 Core(s), 16 Logical Processor(s)

Physical Memory: 16GB

Test Scenarios and Workload

A workload generator tool was used to generate a mixed workload with about 30% insert, 60% update and 10% delete. The tables (or articles) used in the testing avg row size of 1KB.

During the testing, two scenarios were tested:

Scenario 1: Log Reader agent and Distribution agent separately after the workload generation completed. As part of the workload generation approximately one million rows were inserted, deleted or updated.

Scenario 2: Log Reader agent and Distribution agents run as SQL Server Agent jobs continuously and concurrently for 25 to 30 minutes.

Test Specifications

Publisher, distributor and subscriber are on different VMs.

Database data and log files are placed on different SSD disks. Distribution database was pre-created to 20 GB. Publication and subscription databases were pre-created at 5 GB.

Network performance was not accounted for during the testing. It was assumed that the network can withstand the increase or decrease in data flow.

Scenario 1

Included below are the runtimes for the Log Reader agent and Distribution agent to process approximately one million DML operations, with different settings for the ReadBatchSize for Log Reader Agent and CommitBatchSize and/or Subscription Streams for the Distribution agent.

| Log Reader Agent | |

| ReadBatchSize | Execution time in seconds |

| 500 (default) |

199.08 |

| 1000 |

186.15 |

| 2500 |

181.54 |

| 5000 |

163.81 |

| 10000 |

175.67 |

| Distribution Agent | |

| CommitBatchSize | Execution time in seconds |

| 100 (default) |

554.79 |

| 150 |

529.33 |

| 200 |

534.25 |

| 250 |

541.86 |

| 500 |

570.18 |

| 1000 |

583.7 |

| SubscriptionStreams | Execution time in seconds |

| 1 (default) |

554.79 |

| 2 |

535.58 |

| 4 |

347.63 |

| 8 |

304.06 |

| 16 |

439.24 |

Scenario 2

Included below are the runtimes for the Log Reader agent and Distribution agent, running continuously for 25-30 minutes, with different settings for the ReadBatchSize for Log Reader Agent and CommitBatchSize and/or Subscription Streams for the Distribution agent.

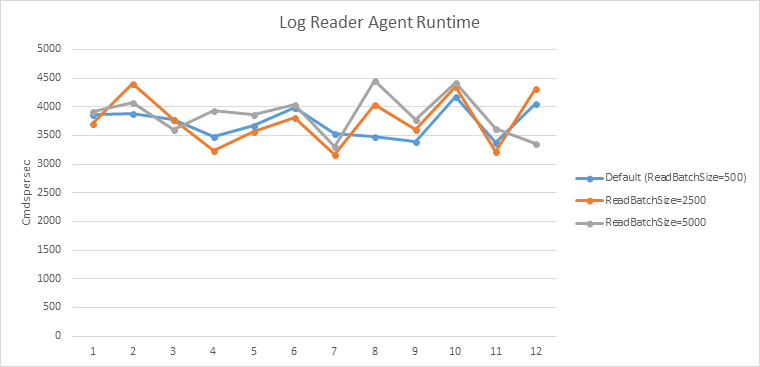

Log Reader

With singleton transactions, the following results were obtained. Each data point represents one test run.

Observation: With singleton transactions, when we increase read batch size, we see increase in the throughput (number of commands processed per second) in Log Reader.

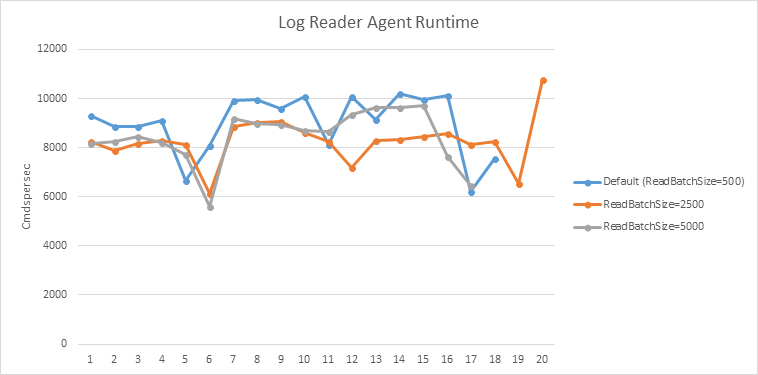

With large transactions, for example transactions with 500-1000 commands, the following results were observed.

Observation: From the testing with larger transactions it can be concluded that increasing ReadBatchSize doesn't necessarily improve the throughput. Based on the number of commands in each transaction, the round trip from publisher to distributor may be costlier, as compared to the time saved on moving more transactions in one trip.

Another test was performed with three log reader agents running simultaneously (with large transactions). With larger ReadBatchSize, a lot of contention was noticed on the distribution database (which was not the case with the default scenario). The agents were taking longer to write the huge amount of transactions in the distribution database. Meanwhile a bigger ReadBatchSize means that until all the transactions and commands processed within the batch are written to the distribution database, the transactions cannot be picked up and delivered to the subscription database by the distribution agent. This slows down the overall throughput.

Distribution Agent

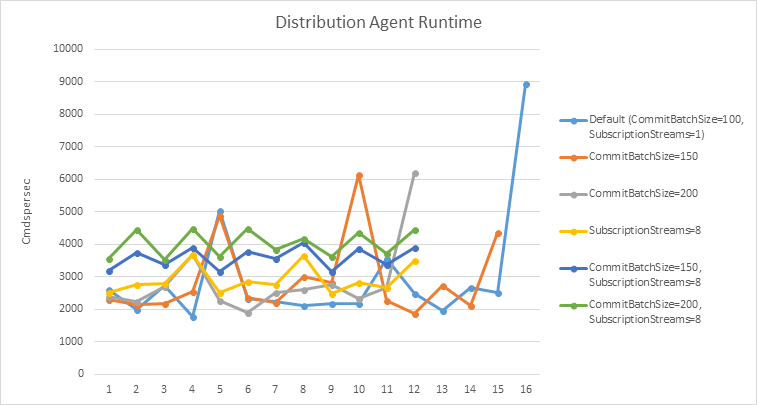

With singleton transactions, the following results were obtained. Each data point represents one test run.

Observation: A visible improvement was seen by increasing the number of parallel streams (SubscriptionStreams). At an average, by increasing both CommitBatchSize to 200 and SubscriptionStreams to 8, throughput of the Distribution agent improved significantly.

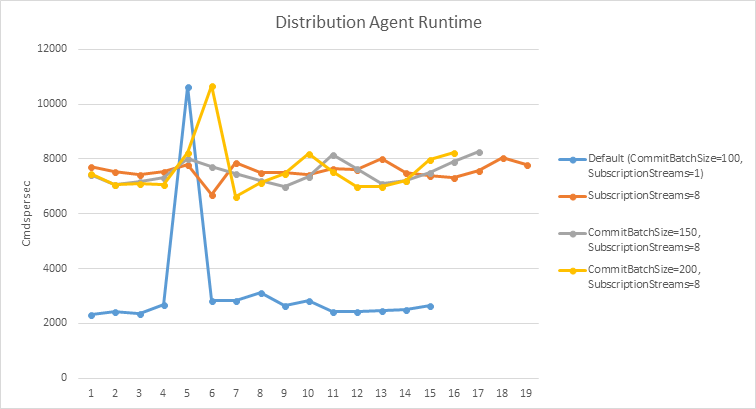

With each transaction ranging from 500-1000 commands, the following results were obtained. Each data point represents one test run.

Observation: A drastic improvement was seen with SubscriptionStreams set to 8. But with bigger transactions, there isn't a significant difference in the throughput of the distribution agent with the increase in CommitBatchSize.

Conclusion

Log Reader Agent

ReadBatchSize

From the testing, it is evident that there is no one correct value that can be applicable in the profile to make the throughput of the log reader agent to improve. At a high level, for workloads with smaller transactions, a higher value of ReadBatchSize may benefit throughput. However, for workloads with large transactions, changing the ReadBatchSize will not improve performance. Increasing ReadBatchSize would essentially mean more number of transactions are written to the distribution database in one roundtrip, which would mean an increased latency in making the transactions and commands visible for the distribution agent to deliver to the subscriber.

Distribution Agent

CommitBatchSize and SubscriptionStreams

From the testing, it is evident that increasing the number of parallel streams and CommitBatchSize have a significant impact on the overall throughput of the system. Increasing CommitBatchSize would mean more transactions committed on the subscriber with an overhead of writing larger set of transactions. The trade-off is – if a failure happens, Distribution agent must rollback and start over to reapply a larger number of transactions. Increasing SubscriptionStreams value does help in the overall throughput of the Distribution agent, since it means multiple connections to a Subscriber to apply batches of changes in parallel. But depending on the number of processors and other metadata conditions like primary key, foreign keys, unique constraints, indexes, the higher value of SubscriptionStreams might have an adverse effect. Also, if a stream fails to execute or commit, Distribution agent falls back to using a single stream to retry the failed batches.

Additional tests were performed with three log reader agents and three distribution agents running in parallel (on three publication and subscription databases). With higher value of ReadBatchSize, it was observed that there was a lot of contention on distribution database. The writer threads of Log Reader agent were competing to write the transactions in distribution database. Since the number of transactions were higher (due to higher ReadBatchSize value), it took long enough for Log Reader agents to commit the operation; as a result, Distribution agent didn't have any transactions to pick from distribution database to apply to subscribers for extended periods, often for minutes at stretch. This introduced higher latency for Log Reader agent and low throughput for Distribution agent, thereby increasing overall Replication Latency.

Sourabh Agarwal (@SQLSourabh)

Senior Program Manager, SQL Server

Follow us on Twitter: @mssqltiger | Team Blog: Aka.ms/sqlserverteam