How to run performance tests on Azure Linux VMs, with a brief comparison to AWS

Hi, it has been a long time since my last post, and this is my first one in English. Some people asked me to share this information, so I decided to write it up in detail.

This post includes some comparison between AWS and Azure VM performance, but that is only a small part of it. I have no AWS account yet, so I borrowed the AWS performance data from a colleague.

Instead, I'm going to describe in detail how I ran the tests, so that others can quickly repeat them on their own. Public clouds like Azure change fast, so you might need this when you run your own tests later.

The target VM is Ubuntu in this case, as requested by my customer.

I ran performance tests on the topics below.

- VM creation time, measured with a PowerShell script

- Geekbench for CPU and memory performance tests

- fio package for disk IO performance tests

- iperf package for network performance tests

VM Creation for my test

I made a simple script that automates creating the VMs for my test and reports how long each creation took. When I first wrote the script, I used the time at which the VM status became 'ReadyRole' (in my testing, New-AzureVM with -WaitForBoot returns when the VM reaches 'ReadyRole'). However, I found that the VM needed more than 30 additional seconds to reach 'ReadyRole' even though it was already accepting connections such as SSH. So I changed the script to report the time at which psping shows the SSH TCP port as open. I also checked whether there was any delay between the port opening and SSH actually working, but in my tests I could log in over SSH as soon as the port responded to psping. My conclusion is that the time taken to deploy a new VM is the time from issuing the New-AzureVM command to getting a psping response from the SSH port. Please see the script 'New-TestVMs', attached at the bottom of this post.
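The measurement idea above (start a clock, probe the SSH port until it answers, report the elapsed time) can also be sketched outside PowerShell. The shell sketch below is not the attached New-TestVMs script, and it swaps psping for a generic probe command. The helper name wait_until_ok, the netcat flags, and the host and port in the commented example are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: measure how long it takes until a probe command first succeeds.
# wait_until_ok is a hypothetical helper, not part of New-TestVMs.ps1.
# Usage: wait_until_ok <max_tries> <delay_seconds> <probe command...>
# Prints the elapsed whole seconds; returns 1 if the probe never succeeded.
wait_until_ok() {
    max_tries=$1
    delay=$2
    shift 2
    start=$(date +%s)
    tries=0
    while [ "$tries" -lt "$max_tries" ]; do
        # Run the probe quietly; success means the port answered.
        if "$@" >/dev/null 2>&1; then
            echo $(( $(date +%s) - start ))
            return 0
        fi
        tries=$((tries + 1))
        sleep "$delay"
    done
    return 1
}

# Example (assumes a netcat build that supports -z and -w;
# host and port are placeholders for your VM's public SSH endpoint):
# wait_until_ok 600 1 nc -z -w 2 "$vm_host" "$ssh_port"
```

Start the timer at the moment the VM creation command is issued, so that the printed value covers the whole deployment rather than only the polling phase.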

Prerequisites for the script

- The Sysinternals PsPing tool (psping), with PATH set so that my script can run the psping command

- Azure subscription with more than 31 cores

- virtual network for this temporary test cloud service

Command usage

.\New-TestVMs.ps1 -cloudname $yourcloudsvcname -virtualnetwork $yourvirnet `
-vmprefix $yourvmnameprefix `
-location "Japan West" `
-userid $linuxadminusername -password $yourpassword `
-VMType "D" # or "A" for Standard A series VMs, or "B" for Basic VMs; -vmprefix is the VM name prefix

If it runs well, it prints a result like the following.

I ran it a few times and got the results below.

With Excel histogram analysis (https://support.office.com/en-US/Article/Create-a-histogram-b6814e9e-5860-4113-ba51-e3a1b9ee1bbe), I got the nice graph below. The average was 2 minutes and 16 seconds across my 39 VM creation trials.

I was told that the time taken to create a VM would differ by size, but my tests showed no big difference. (While I was writing this post, though, I found that creating a D1 VM took a lot longer than the others. I'm not sure why; it may be temporary, or some change may have been applied to Azure.)

Geekbench Test

Geekbench was my choice of performance test because my customer has used it to test VMs from various cloud service providers; I had no particular reason of my own to choose it. The tool is free for simple tests, but the unlicensed version did not work well for me, so I bought a license at a discount in the Black Friday sale. :) Note that this tool is specialized for CPU and memory performance, so its results will not represent the actual workloads you run on Azure.

To run the Geekbench test, connect to one of the VMs created by the script above using PuTTY or MobaXterm, then copy and paste the following commands. They install Geekbench and run the test.

sudo -s

apt-get update

wget https://cdn.primatelabs.com/Geekbench-3.2.2-Linux.tar.gz

tar -xvf ./Geekbench-3.2.2-Linux.tar.gz

cd dist/Geekbench-3.2.2-Linux/

./geekbench_x86_64 -r "<your_licensed_e-mail>" "<your_Geekbench_license_code>"

./geekbench_x86_64 --no-upload

The following are the last few lines of output from the commands above.

The following table summarizes the results.

Here are the same results as a chart, for a quick look at the differences.

One thing that caught my eye was that the AWS VMs reported running on hosts with better CPUs than Azure, yet the Azure VMs outperformed them despite the lower CPU clock. We don't know what made the difference. My guess is that Hyper-V might be a lot better than the Xen hypervisor AWS started with, or that AWS might be running more VMs per host or throttling to share cycles between VMs. I would also like to emphasize that this result doesn't guarantee your workloads will get the same performance boost from a larger VM size, because whichever of CPU, memory, disk IO, or network becomes the bottleneck first will limit them.

There are no AWS performance figures for more than 4 vCPUs because I have no direct access to AWS; I asked my colleague to run the same test with his AWS account.

Disk IO Test

Next is the disk IO test. First we have to add data disks to the VMs. Run the following script; it adds the maximum number of data disks to each VM in the cloud service you choose. I chose a 10 GB disk size because a performance test doesn't need full-size disks, and the smaller the disks are, the faster you can build a single software RAID disk.

#Add disks

$vms=Get-AzureVM -ServiceName $yourcloudsvcname

$sizes=Get-AzureRoleSize

foreach ($vm in $vms) {

$maxdisk=($sizes|?{$_.InstanceSize -eq $vm.InstanceSize}).MaxDataDiskCount

for($i=0; $i -lt $maxdisk; $i++){

#Remove-AzureDataDisk -LUN $i -DeleteVHD -VM $vm > $null

Add-AzureDataDisk -CreateNew -DiskSizeInGB 10 -VM $vm -DiskLabel "temp disk $i" -LUN $i -Verbose >$null

$k=$i+1

write-host "added $k disks out of $maxdisk"

}

$vm|Update-AzureVM -verbose > $null

}

After running the script above, you can see the data disks attached in the portal.

Yes, it's a D14 and it has 32 data disks. HOST CACHE doesn't always guarantee higher performance; the recommendation is to test your workload and pick the HOST CACHE setting that yields the best result. One more thing to consider is that HOST CACHE can be enabled for at most four disks. You will get an error if you enable it for more than four data disks.

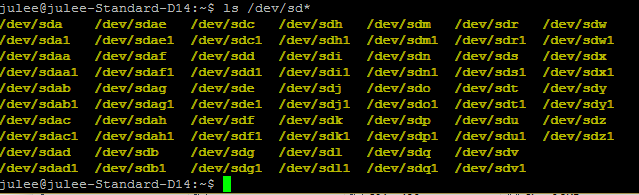

When you connect to the D14 Ubuntu VM you created, you can see many entries that look like folders but are actually disks, as shown below. The devices from /dev/sdc to /dev/sdah are the attached data disks.

Running fdisk for 32 disks is a really tedious task that might hurt your fingers, so I found a slightly easier way. Copy the text in the attached file "fdisk.txt" and paste it into the console. You can see below that the fdisk commands for all 32 disks were executed in one go.
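For readers without the attachment, a loop can produce the same kind of repetitive fdisk input. The sketch below is not the content of fdisk.txt; it is one possible approach. It assumes the data disks are named /dev/sdc through /dev/sdah as described above, and that each disk should get a single primary partition covering the whole disk. The helper name data_disks is hypothetical, and the loop only prints the commands (a dry run) so that the device list can be checked first.

```shell
#!/bin/sh
# Print the first N data-disk device names, starting at /dev/sdc.
# data_disks is a hypothetical helper; sda and sdb are skipped because the
# text above says the attached data disks start at /dev/sdc.
data_disks() {
    count=$1
    n=0
    for prefix in "" a; do
        for letter in a b c d e f g h i j k l m n o p q r s t u v w x y z; do
            name="sd${prefix}${letter}"
            case "$name" in sda|sdb) continue ;; esac
            [ "$n" -lt "$count" ] || return 0
            echo "/dev/$name"
            n=$((n + 1))
        done
    done
}

# Dry run: print one fdisk pipeline per disk instead of executing it.
# The answers fed to fdisk are: n (new), p (primary), 1 (partition number),
# two empty lines (default first and last sector), w (write the table).
for disk in $(data_disks 32); do
    printf '%s\n' "printf 'n\np\n1\n\n\nw\n' | sudo fdisk $disk"
done
```

Once the printed list looks right, the lines can be pasted into the console, which keeps the same copy-and-paste workflow as the attached file.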

The next step is to install the mdadm package, which combines the 32 data disks into a single software RAID disk. Just copy and paste the commands as we did before.

sudo apt-get update

sudo apt-get install mdadm -y

When you run the commands above, you just need to press Enter twice at the prompts. Then run the mdadm command below to combine the disks into a single striped disk (32 x 10 GB = 320 GB in this test); just copy and paste it into the console. You don't need redundancy such as RAID 1, RAID 5, or RAID 10, because Azure keeps 3 replicas of every data disk. If you are testing a VM with fewer than 32 disks, adjust the number after the --raid-devices option and remove the extra disk partitions from the first command; nothing else needs to change. Please see the great blog of my colleague, Neil

sudo mdadm --create /dev/md127 --level 0 --raid-devices 32 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 /dev/sdl1 /dev/sdm1 /dev/sdn1 /dev/sdo1 /dev/sdp1 /dev/sdq1 /dev/sdr1 /dev/sds1 /dev/sdt1 /dev/sdu1 /dev/sdv1 /dev/sdw1 /dev/sdx1 /dev/sdy1 /dev/sdz1 /dev/sdaa1 /dev/sdab1 /dev/sdac1 /dev/sdad1 /dev/sdae1 /dev/sdaf1 /dev/sdag1 /dev/sdah1

sudo mkfs -t ext4 /dev/md127

sudo mkdir /testdrive

sudo mount /dev/md127 /testdrive

df -h /dev/md127

It takes a while and then comes back with the screen below. Yes, a 315 GB file system, /dev/md127, is mounted on /testdrive.
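For a VM with fewer than 32 data disks, the mdadm command line can be generated rather than edited by hand. The sketch below only prints the command. mdadm_create_cmd is a hypothetical helper name, and it assumes each disk already has a first partition (such as /dev/sdc1) created in the fdisk step.

```shell
#!/bin/sh
# Build the mdadm --create command for the given whole-disk devices.
# mdadm_create_cmd is a hypothetical helper; it prints the command only.
mdadm_create_cmd() {
    parts=""
    for disk in "$@"; do
        parts="$parts ${disk}1"   # first partition of each disk, e.g. /dev/sdc1
    done
    # $# is the number of devices passed in, which is what --raid-devices needs.
    echo "sudo mdadm --create /dev/md127 --level 0 --raid-devices $#${parts}"
}

# Example for a VM with four data disks:
mdadm_create_cmd /dev/sdc /dev/sdd /dev/sde /dev/sdf
```

Deriving the --raid-devices value from the argument count avoids a mismatch between the number and the device list, which mdadm would reject.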

The next step is to install the test tool, the fio package, and run it.

sudo apt-get install fio -y

Then run the tool as shown below. Disk IOPS testing is definitely a complex topic, and the parameters below can seriously affect the measured IOPS. That is beyond the scope of this post; if you want to learn more, see the great blog post from Jose, although it is based on the SQLIO tool on Windows (https://blogs.technet.com/b/josebda/archive/2013/03/28/sqlio-powershell-and-storage-performance-measuring-iops-throughput-and-latency-for-both-local-disks-and-smb-file-shares.aspx). The parameters below were given by my customer, and I don't know why these values were chosen; they might be specific to their workload. You may have to increase --numjobs if you have more than 4 data disks in order to measure the maximum IOPS.

sudo fio --directory=/testdrive --name=tempfile.dat --direct=1 --rw=randwrite --bs=16k --size=2G --numjobs=16 --time_based --runtime=20 --group_reporting

You may see different results each time you repeat the test, because this is multi-tenant storage, and you may be temporarily throttled if you run the test for a long time. You could also get better performance if you warm up the storage first. These are characteristics of multi-tenant cloud storage. Some of you may feel the performance is not good, or even that it doesn't meet your workload requirements. Don't worry: you can try Premium Storage, currently in preview, which provides more than 10 times the IOPS.

(I had an issue with Ubuntu 14.10 and mdadm: it sometimes hangs when I run the mkfs command, and I still need to investigate why. So the IOPS test was done with CentOS 7.0, which is provided in the Gallery. On CentOS, the commands for installing packages are a little different; I added them below.)

To install mdadm,

sudo yum install mdadm -y

To install fio,

sudo yum install wget -y

sudo yum install libaio -y

wget https://pkgs.repoforge.org/fio/fio-2.1.10-1.el7.rf.x86_64.rpm

sudo rpm -iv fio-2.1.10-1.el7.rf.x86_64.rpm

The other commands are the same as on Ubuntu.

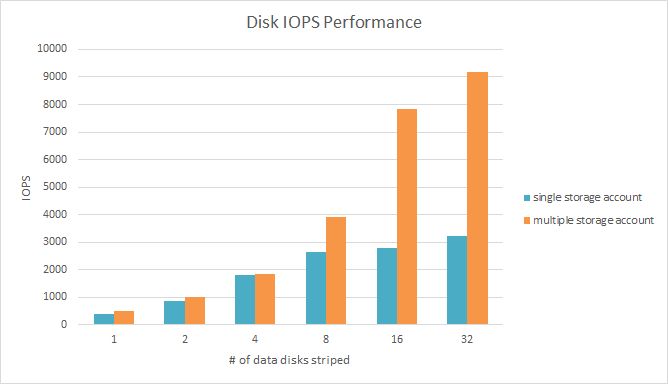

Following is my test result. As you can see, the number of IOPS increases as more disks are striped into one single disk.

According to Azure, a single blob-backed disk provides a maximum of 500 IOPS (https://msdn.microsoft.com/en-us/library/azure/dn249410.aspx).
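As a rough yardstick, the 500 IOPS figure can be multiplied by the number of striped disks. The sketch below assumes the per-disk limit adds up linearly across a stripe, which is an idealization rather than anything Azure promises. stripe_iops_ceiling is a hypothetical helper name.

```shell
#!/bin/sh
# Nominal IOPS ceiling for a stripe of N data disks, assuming the
# documented 500 IOPS per disk adds up linearly (an idealization).
PER_DISK_IOPS=500

stripe_iops_ceiling() {
    echo $(( $1 * PER_DISK_IOPS ))
}

# Print the nominal ceiling for a few stripe widths.
for disks in 1 2 4 8 16 32; do
    echo "$disks disks: $(stripe_iops_ceiling "$disks") IOPS"
done
```

Comparing measured fio numbers against these ceilings shows how far a given configuration falls short of the nominal per-disk figure.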

My test result is a lot lower than the link above suggests. Some of that might be overhead from software RAID such as mdadm, but the gap is too large for that alone. So I decided to test a better configuration, which puts each data disk into a separate storage account. Following is the script I used.

#add disks in different storage account

$vms=Get-AzureVM -ServiceName $yourcloudsvc #all the VMs in this cloud service will have the max data disks in separate storage accounts

$sizes=Get-AzureRoleSize

$storageaccountprefix=$YourPreferedPrefixNotToConflict

$cursub=Get-AzureSubscription -Current

$location=$yourVmLocation

foreach ($vm in $vms) {

$maxdisk=($sizes|?{$_.InstanceSize -eq $vm.InstanceSize}).MaxDataDiskCount

for($i=0; $i -lt $maxdisk; $i++){

#Remove-AzureDataDisk -LUN $i -DeleteVHD -VM $vm > $null

$accname=$storageaccountprefix+$i

New-AzureStorageAccount -StorageAccountName $accname -Location $location -erroraction silentlycontinue

Set-AzureSubscription -SubscriptionName $cursub.subscriptionname -CurrentStorageAccountName $accname

Add-AzureDataDisk -CreateNew -DiskSizeInGB 10 -VM $vm -DiskLabel "temp disk $i" -LUN $i -Verbose >$null

$k=$i+1

write-host "added $k disks out of $maxdisk"

$vm|Update-AzureVM -verbose > $null

}

}

Set-AzureSubscription -SubscriptionName $cursub.subscriptionname -CurrentStorageAccountName $cursub.currentstorageaccountname

OK, let's run the test again. The table below shows much better performance. Up to eight data disks, at least, IOPS increase linearly, which is ideal, although they eventually hit a limit below 10,000 IOPS. So if you have a workload that is sensitive to disk IO performance and you don't want to spend too much on SSD-based Premium Storage, separate storage accounts for the data disks are the way to go. Once again, no major cloud service provider, including Microsoft Azure and AWS, provides a performance SLA.

Here are the disk IOPS results as a chart.

VM Network Throughput Test

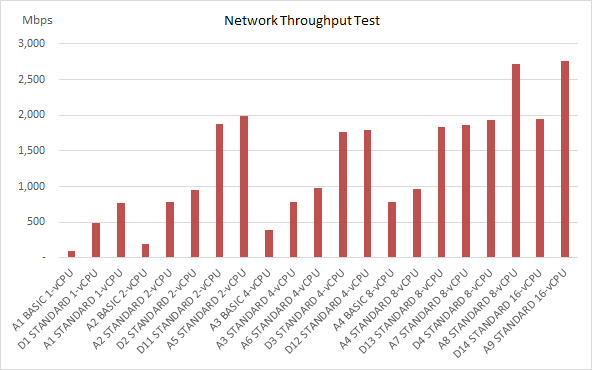

The last topic in IaaS is network performance, which I tested with iperf. For this test, I deployed more than two VMs in the same vNet. The bigger the VM size, the higher the network throughput it is given, so the biggest VM acts as the server and the VM under test acts as the client.

Run the command below on all VMs.

sudo apt-get install iperf

Then run the command below on the biggest VM, a D14 in my case.

iperf -s

Run the command below on the target VM whose throughput you want to measure. 192.168.0.5 is the IP address of the VM where you ran "iperf -s".

iperf -c 192.168.0.5 -P 10 -t 100

Following is the result I got. Once again, all performance data here is the result of my configuration, and it changes every time you run the tests. Azure and AWS don't provide any performance-related SLA.
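Because the numbers change from run to run, it can help to repeat the client command and keep only the aggregate line from each run. The sketch below assumes iperf 2 output, where a parallel (-P) run ends with a "[SUM]" line whose last two fields are the rate and its unit. sum_bandwidth is a hypothetical helper name, and the sample line is made up purely to show the expected format; it is not a measurement from this test.

```shell
#!/bin/sh
# Extract the aggregate bandwidth from iperf 2 client output read on stdin.
# Assumes the "[SUM]" line ends with "<rate> <unit>", e.g. "941 Mbits/sec".
sum_bandwidth() {
    awk '/^\[SUM\]/ { print $(NF-1), $NF }'
}

# Made-up sample line, only to illustrate the format the function expects:
echo "[SUM]  0.0-100.0 sec  11.6 GBytes   996 Mbits/sec" | sum_bandwidth

# Real use, three runs against the server address from the text:
# for run in 1 2 3; do iperf -c 192.168.0.5 -P 10 -t 100 | sum_bandwidth; done
```

Collecting several runs this way gives a range rather than a single figure, which matches how variable these results are.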

Summary

I've run basic IaaS performance tests of CPU, memory, disk, and network.

Everything worked as expected, and Azure PowerShell was really powerful for automating my tests.

I'm going to run the same tests on Windows VMs as well when I have enough time.

Any corrections, advice, or comments are welcome.

Thanks.

Comments

- Anonymous

January 01, 2003

Hi, jun seok

the comparison would be unfair as the underlying hardware is different significantly.

- Anonymous

January 01, 2003

Patrick, I heard there was an issue with Ubuntu image. It should be fixed now.

- Anonymous

December 29, 2014

Hi! Nice to see you here !!^^

I'm also wondering about Azure VM Performance vs On-Premise VM.

and I'm testing Azure VM and On-Premise VM.

I will share the result later....

- Anonymous

January 16, 2015

I did with G5 and the score is from 56904 to 54928 on a few tests.

- Anonymous

January 18, 2015

At presentations and training sessions on Azure services, I fairly often come across