SQLIO, PowerShell and storage performance: measuring IOPs, throughput and latency for both local disks and SMB file shares

IMPORTANT NOTE:

SQLIO has been deprecated, as shown at https://blogs.msdn.com/b/sql_server_team/archive/2015/12/11/sqlio-disk-subsystem-benchmark-tool-is-being-retired.aspx DiskSpd is the suitable replacement for SQLIO. You can find details on that at https://blogs.technet.com/b/josebda/archive/2014/10/13/diskspd-powershell-and-storage-performance-measuring-iops-throughput-and-latency-for-both-local-disks-and-smb-file-shares.aspx

----------------

1. Introduction

I have been doing storage-related demos and publishing blogs with some storage performance numbers for a while, and I commonly get questions such as “How do you run these tests?” or “What tools do you use to generate IOs for your demos?”. While it’s always best to use a real workload to test storage, sometimes that is not convenient. So, I very frequently use a free tool from Microsoft to simulate IOs called SQLIO. It’s a small and simple tool that simulate several types of workloads, including common SQL Server ones. And you can apply it to several configurations, from a physical host or virtual machine, using a local disk, a LUN on a SAN, a Storage Space or an SMB file share.

2. Download the tool

To get started, you need to download and install the SQLIO tool. You can get it from https://www.microsoft.com/en-us/download/details.aspx?id=20163. The setup will install the tool in a folder of your choice. In the end, you really only need one file: SQLIO.EXE. You can copy it to any folder and it runs in pretty much every Windows version, client or server. In this blog post, I assume that you installed SQLIO on the C:\SQLIO folder.

3. Prepare a test file

Next, you need to create a file in the disk or file share that you will be using for your demo or test.

Ideally, you should create a file as big as possible, so that you can exercise the entire disk. For hard disks, creating a small file causes the head movement to be restricted to a portion of the disk. Unless you’re willing to use only a fraction of the hard disk capacity, these numbers show unrealistically high random IO performance. Storage professionals call this technique “short stroking”. For SANs, small files might end up being entirely cached in the controller RAM, again giving you great numbers that won’t hold true for real deployments. You can actually use SQLIO to measure the difference between using a large file and a small file for your specific configuration.

To create a large file for your test, the easiest way is using the FSUTIL.EXE tool, which is included with all versions of Windows.

For instance, to create a 1TB file on the X: drive, using the following command from a PowerShell prompt:

FSUTIL.EXE file createnew X:\testfile.dat (1TB)

FSUTIL.EXE file setvaliddata X:\testfile.dat (1TB)

Note 1: You must do this from PowerShell, in order to use the convenient (1TB) notation. If you run this from an old command prompt, you need to calculate 1 terabyte in bytes, which is 1099511627776 (2^40). Before you Storage professionals rush to correct me, I know this is technically incorrect. One terabyte is 10^12 (1000000000000) and 2^40 is actually one Tebibyte (1TiB). However, since both PowerShell and SQLIO use the TB/GB/MB/KB notation when referring to powers of 2, I will ask you to give me a pass here.

Note 2: The “set valid data” command lets you move the “end of file” marker, avoiding a lengthy initialization of the file. This is much faster than writing over the entire file. However, there are security implications for “set valid data” (it might expose leftover data on the disk if you don’t properly initialize the file) and you must be an administrator on the machine to use it.

Here’s another example, with output, using a smaller file size:

PS C:\> FSUTIL.EXE File CreateNew X:\TestFile.DAT (40GB)

File X:\TestFile.DAT is created

PS C:\> FSUTIL.EXE File SetValidData X:\TestFile.DAT (40GB)

Valid data length is changed

4. Run the tool

With the tool installed and the test file created, you can start running SQLIO.

You also want to make sure there’s nothing else running on the computer, so that other running process don’t interfere with your results by putting additional load on the CPU, network or storage. If the disk you are using is shared in any way (like a LUN on a SAN), you want to make sure that nothing else is competing with your testing. If you’re using any form of IP storage (iSCSI LUN, SMB file share), you want to make sure that you’re not running on a network congested with other kinds of traffic.

WARNING: You could be generating a whole lot of disk IO, network traffic and/or CPU load when you run SQLIO. If you’re in a shared environment, you might want to talk to your administrator and ask permission. This could generate a whole lot of load and disturb anyone else using other VMs in the same host, other LUNs on the same SAN or other traffic on the same network.

From an old command prompt or a PowerShell prompt, issue a single command line to start getting some performance results. Here is your first example, with output, generating random 8KB reads on that file we just created:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -frandom -b8 -t8 -o16 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

8 threads reading for 10 secs from file X:\TestFile.DAT

using 8KB random IOs

enabling multiple I/Os per thread with 16 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 36096.60

MBs/sec: 282.00

latency metrics:

Min_Latency(ms): 0

Avg_Latency(ms): 3

Max_Latency(ms): 55

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 30 19 12 8 6 5 4 3 3 2 2 2 1 1 1 1 0 0 0 0 0 0 0 0 0

So, for this specific disk (a simple Storage Space created from a pool of 3 SSDs), I am getting over 36,000 IOPs of 8KB each with an average of 3 milliseconds of latency (time it takes for the operation to complete, from start to finish). Not bad in terms of IOPS, but the latency for 8KB IOs seems a little high for SSD-based storage. We’ll investigate that later.

Let’s try now another command using sequential 512KB reads on that same file:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -fsequential -b512 -t2 -o16 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

2 threads reading for 10 secs from file X:\TestFile.DAT

using 512KB sequential IOs

enabling multiple I/Os per thread with 16 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 1376.09

MBs/sec: 688.04

latency metrics:

Min_Latency(ms): 6

Avg_Latency(ms): 22

Max_Latency(ms): 23

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 33 67 0

I got about 688 MB/sec with an average latency of 22 milliseconds per IO. Again, good throughput, but the latency looks high for SSDs. We’ll dig deeper in a moment.

5. Understand the parameters used

Now let’s inspect the parameters on those SQLIO command line. I know it’s a bit overwhelming at first, so we’ll go slow. And keep in mind that, for SQLIO parameters, lowercase and uppercase mean different things, so be careful.

Here is the explanation for the parameters used above:

| Parameter | Description | Notes |

| -s | The duration of the test, in seconds. | You can use 10 seconds for a quick test. For any serious work, use at least 60 seconds. |

| -k | R=Read, W=write | Be careful with using writes on SSDs for a long time. They can wear out the drive. |

| -f | Random of Sequential | Random is common for OLTP workloads. Sequential is common for Reporting, Data Warehousing. |

| -b | Size of the IO in KB | 8KB is the typical IO for OLTP workloads. 512KB is common for Reporting, Data Warehousing. |

| -t | Threads | For large IOs, just a couple is enough. Sometimes just one. For small IOs, you could need as many as the number of CPU cores. |

| -o | Outstanding IOs or queue depth | In RAID, SAN or Storage Spaces setups, a single disk can be made up of multiple physical disks. You can start with twice the number of physical disks used by the volume where the file sits. Using a higher number will increase your latency, but can get you more IOPs and throughput. |

| -LS | Capture latency information | Always important to know the average time to complete an IO, end-to-end. |

| -BN | Do not buffer | This asks for no hardware or software buffering. Buffering plus a small file size will give you performance of the memory, not the disks. |

For OLTP workloads, I commonly start with 8KB random IOs, 8 threads, 16 outstanding. 8KB is the size of the page used by SQL Server for its data files. In parameter form, that would be: -frandom -b8 -t8 -o16. For reporting or OLAP workloads with large IO, I commonly start with 512KB IOs, 2 threads and 16 outstanding. 512KB is a common IO size when SQL Server loads a batch of 64 data pages when using the read ahead technique for a table scan. In parameter form, that would be: -fsequential -b512 -t2 -o16. These numbers will need to be adjusted if you machine has many cores and/or if you volume is backed up by a large number of physical disks.

If you’re curious, here are more details about parameters for SQLIO, coming from the tool’s help itself:

Usage: D:\sqlio\sqlio.exe [options] [<filename>...]

[options] may include any of the following:

-k<R|W> kind of IO (R=reads, W=writes)

-t<threads> number of threads

-s<secs> number of seconds to run

-d<drv_A><drv_B>.. use same filename on each drive letter given

-R<drv_A/0>,<drv_B/1>.. raw drive letters/number for I/O

-f<stripe factor> stripe size in blocks, random, or sequential

-p[I]<cpu affinity> cpu number for affinity (0 based)(I=ideal)

-a[R[I]]<cpu mask> cpu mask for (R=roundrobin (I=ideal)) affinity

-o<#outstanding> depth to use for completion routines

-b<io size(KB)> IO block size in KB

-i<#IOs/run> number of IOs per IO run

-m<[C|S]><#sub-blks> do multi blk IO (C=copy, S=scatter/gather)

-L<[S|P][i|]> latencies from (S=system, P=processor) timer

-B<[N|Y|H|S]> set buffering (N=none, Y=all, H=hdwr, S=sfwr)

-S<#blocks> start I/Os #blocks into file

-v1.1.1 I/Os runs use same blocks, as in version 1.1.1

-F<paramfile> read parameters from <paramfile>

Defaults:

-kR -t1 -s30 -f64 -b2 -i64 -BN testfile.dat

Maximums:

-t (threads): 256

no. of files, includes -d & -R: 256

filename length: 256

6. Tune the parameters for large IO

Now the you have the basics down, we can spend some time looking at how you can refine your number of threads and queue depth for your specific configuration. This might help us figure out why we had those higher than expected latency numbers in the initial runs. You basically need to experiment with the -t and the -o parameters until you find the one that give you the best results.

Let’s start with queue depth. You first want to find out the latency for a given system with a small queue depth, like 1 or 2. For 512KB IOs, here’s what I get from my test disk with a queue depth of 1 and a thread count of 1:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -fsequential -b512 -t1 -o1 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

1 thread reading for 10 secs from file X:\TestFile.DAT

using 512KB sequential IOs

enabling multiple I/Os per thread with 1 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 871.00

MBs/sec: 435.50

latency metrics:

Min_Latency(ms): 1

Avg_Latency(ms): 1

Max_Latency(ms): 1

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 0 100 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

For large IOs, we typically look at the throughput (in MB/sec). With 1 outstanding IO, we are at 435 MB/sec with just 1 millisecond of latency per IO. However, if you don’t queue up some IO, we’re not extracting the full throughput of the disk, since we’ll be processing the data while the disk is idle waiting for more work. Let’s see what happens if we queue up more IOs:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -fsequential -b512 -t1 -o2 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

1 thread reading for 10 secs from file X:\TestFile.DAT

using 512KB sequential IOs

enabling multiple I/Os per thread with 2 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 1377.70

MBs/sec: 688.85

latency metrics:

Min_Latency(ms): 1

Avg_Latency(ms): 1

Max_Latency(ms): 2

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 0 100 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

OK. We are now up to 688 MB/sec with 2 outstanding IOs, and our average latency is still at the same 1 milliseconds per IO. You can also see that we now have a max latency of 2 milliseconds to complete, although in the histogram shows that most are still taking 1ms. Let’s double it up again to see what happens:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -fsequential -b512 -t1 -o4 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

1 thread reading for 10 secs from file X:\TestFile.DAT

using 512KB sequential IOs

enabling multiple I/Os per thread with 4 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 1376.70

MBs/sec: 688.35

latency metrics:

Min_Latency(ms): 1

Avg_Latency(ms): 2

Max_Latency(ms): 3

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 0 0 67 33 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

Well, at a queue depth of 4, we gained nothing (we are still at 688 MB/sec), but our latency is now solid at 2 milliseconds, with 33% of the IOs taking 3 milliseconds. Let’s give it one more bump to see what happens. Trying now 8 outstanding IOs:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -fsequential -b512 -t1 -o8 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

1 thread reading for 10 secs from file X:\TestFile.DAT

using 512KB sequential IOs

enabling multiple I/Os per thread with 8 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 1376.50

MBs/sec: 688.25

latency metrics:

Min_Latency(ms): 2

Avg_Latency(ms): 5

Max_Latency(ms): 6

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 0 0 0 0 0 68 32 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

As you can see, increasing the –o parameter is not helping. After we doubled the queue depth from 4 to 8, there was no improvement in throughput. All we did was more than double our latency to an average of 5 milliseconds, with many IOs taking 6 milliseconds. That’s when you know you’re queueing up too much IO.

So, it seems like 2 outstanding IOs is a reasonable number for this disk. Now we can see if we can gain by spreading this across multiple threads. What we want to avoide here is bottlenecking on a single CPU core, which is very common we doing lots and lots of IO. A simple experiment is to double the number of threads while halfing the queue depth. Let’s now try 2 threads with 1 outstanding IOs each. This will give us the same 2 outstanding IOs total:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -fsequential -b512 -t2 -o1 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

2 threads reading for 10 secs from file X:\TestFile.DAT

using 512KB sequential IOs

enabling multiple I/Os per thread with 1 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 1377.90

MBs/sec: 688.95

latency metrics:

Min_Latency(ms): 1

Avg_Latency(ms): 1

Max_Latency(ms): 2

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 0 100 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

Well, it seems like using two threads here did not buy us anything. We’re still at about the same throughput and latency. That pretty much proves that 1 thread was enough for this kind of configuration and workload. This is not surprising for large IO. However, for smaller IO size, the CPU is more taxed and we might hit a single core bottleneck. Just in case, I looked at the CPU via Task Manager and confirmed we were only using 7% of the CPU and obviously none of the 4 cores were too busy.

7. Tune queue depth for for small IO

Performing the same tuning exercise for small IO is typically more interesting. For this one, we’ll automate things a bit using a little PowerShell scripting to run SQLIO in a loop and parse its output. This way we can try a lot of different options and see which one works best. This might take a while to run, though… Here’s a script that you can run from a PowerShell prompt, trying out many different queue depths:

1..64 | % {

$o = "-o $_";

$r = C:\SQLIO\SQLIO.EXE -s10 -kR -frandom -b8 $o -t1 -LS -BN X:\testfile.dat

$i = $r.Split("`n")[10].Split(":")[1].Trim()

$m = $r.Split("`n")[11].Split(":")[1].Trim()

$l = $r.Split("`n")[14].Split(":")[1].Trim()

$o + ", " + $i + " iops, " + $m + " MB/sec, " + $l + " ms"

}

The script basically runs SQLIO 64 times, each time using a different queue depth, from 1 to 64. The results from SQLIO are stored in the $r variable and parsed to show IOPs, throughput and latency on a single line. There is some fun string parsing there, leveraging the Split() function to break the output by line and then again to break each line in half to get the actual numbers. Here’s the sample output from my system:

-o 1, 9446.79 iops, 73.80 MB/sec, 0 ms

-o 2, 15901.80 iops, 124.23 MB/sec, 0 ms

-o 3, 20758.20 iops, 162.17 MB/sec, 0 ms

-o 4, 24021.20 iops, 187.66 MB/sec, 0 ms

-o 5, 26047.90 iops, 203.49 MB/sec, 0 ms

-o 6, 27559.10 iops, 215.30 MB/sec, 0 ms

-o 7, 28666.40 iops, 223.95 MB/sec, 0 ms

-o 8, 29320.90 iops, 229.06 MB/sec, 0 ms

-o 9, 29733.70 iops, 232.29 MB/sec, 0 ms

-o 10, 30337.00 iops, 237.00 MB/sec, 0 ms

-o 11, 30407.50 iops, 237.55 MB/sec, 0 ms

-o 12, 30609.78 iops, 239.13 MB/sec, 0 ms

-o 13, 30843.40 iops, 240.96 MB/sec, 0 ms

-o 14, 31548.50 iops, 246.47 MB/sec, 0 ms

-o 15, 30692.10 iops, 239.78 MB/sec, 0 ms

-o 16, 30810.40 iops, 240.70 MB/sec, 0 ms

-o 17, 31815.00 iops, 248.55 MB/sec, 0 ms

-o 18, 33115.19 iops, 258.71 MB/sec, 0 ms

-o 19, 31290.40 iops, 244.45 MB/sec, 0 ms

-o 20, 32430.40 iops, 253.36 MB/sec, 0 ms

-o 21, 33345.60 iops, 260.51 MB/sec, 0 ms

-o 22, 31634.80 iops, 247.14 MB/sec, 0 ms

-o 23, 31330.50 iops, 244.76 MB/sec, 0 ms

-o 24, 32769.40 iops, 256.01 MB/sec, 0 ms

-o 25, 34264.30 iops, 267.68 MB/sec, 0 ms

-o 26, 31679.00 iops, 247.49 MB/sec, 0 ms

-o 27, 31501.60 iops, 246.10 MB/sec, 0 ms

-o 28, 33259.40 iops, 259.83 MB/sec, 0 ms

-o 29, 33882.30 iops, 264.70 MB/sec, 0 ms

-o 30, 32009.40 iops, 250.07 MB/sec, 0 ms

-o 31, 31518.10 iops, 246.23 MB/sec, 0 ms

-o 32, 33548.30 iops, 262.09 MB/sec, 0 ms

-o 33, 33912.19 iops, 264.93 MB/sec, 0 ms

-o 34, 32640.00 iops, 255.00 MB/sec, 0 ms

-o 35, 31529.30 iops, 246.32 MB/sec, 0 ms

-o 36, 33973.50 iops, 265.41 MB/sec, 0 ms

-o 37, 34174.62 iops, 266.98 MB/sec, 0 ms

-o 38, 32556.50 iops, 254.34 MB/sec, 0 ms

-o 39, 31521.00 iops, 246.25 MB/sec, 0 ms

-o 40, 34337.60 iops, 268.26 MB/sec, 0 ms

-o 41, 34455.00 iops, 269.17 MB/sec, 0 ms

-o 42, 32265.00 iops, 252.07 MB/sec, 0 ms

-o 43, 31681.80 iops, 247.51 MB/sec, 0 ms

-o 44, 34017.69 iops, 265.76 MB/sec, 0 ms

-o 45, 34433.80 iops, 269.01 MB/sec, 0 ms

-o 46, 33213.19 iops, 259.47 MB/sec, 0 ms

-o 47, 31475.20 iops, 245.90 MB/sec, 0 ms

-o 48, 34467.50 iops, 269.27 MB/sec, 0 ms

-o 49, 34529.69 iops, 269.76 MB/sec, 0 ms

-o 50, 33086.19 iops, 258.48 MB/sec, 0 ms

-o 51, 31157.90 iops, 243.42 MB/sec, 1 ms

-o 52, 34075.30 iops, 266.21 MB/sec, 1 ms

-o 53, 34475.90 iops, 269.34 MB/sec, 1 ms

-o 54, 33333.10 iops, 260.41 MB/sec, 1 ms

-o 55, 31437.60 iops, 245.60 MB/sec, 1 ms

-o 56, 34072.69 iops, 266.19 MB/sec, 1 ms

-o 57, 34352.80 iops, 268.38 MB/sec, 1 ms

-o 58, 33524.21 iops, 261.90 MB/sec, 1 ms

-o 59, 31426.10 iops, 245.51 MB/sec, 1 ms

-o 60, 34763.19 iops, 271.58 MB/sec, 1 ms

-o 61, 34418.10 iops, 268.89 MB/sec, 1 ms

-o 62, 33223.19 iops, 259.55 MB/sec, 1 ms

-o 63, 31959.30 iops, 249.68 MB/sec, 1 ms

-o 64, 34760.90 iops, 271.56 MB/sec, 1 ms

As you can see, for small IOs, we got consistently better performance as we increased the queue depth for the first few runs. After 14 outstanding IOs, adding more started giving us very little improvement until things flatten out completely. As we keept adding more queue depth, all he had was more latency with no additional benefit in IOPS or throughput. Here’s that same data on a chart:

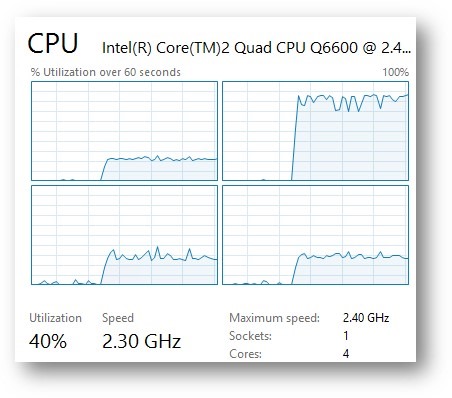

So, in this setup, we seem to start losing steam at around 10 outstanding IOs. However, I noticed in Task Manager that one core was really busy and our overall CPU utilization was at 40%.

In this quad-core system, any overall utilization above 25% could mean there was a core bottleneck when using a single thread. Maybe we can do better with multiple threads. Let’s try increasing the number of threads with a matching reduction of queue depth so we end up with the same number of total outstanding IOs.

$o = 32

$t = 1

While ($o -ge 1) {

$pt = "-t $t";

$po = "-o $o";

$r = C:\SQLIO\SQLIO.EXE -s10 -kR -frandom -b8 $po $pt -LS -BN X:\testfile.dat

$i = $r.Split("`n")[10].Split(":")[1].Trim()

$m = $r.Split("`n")[11].Split(":")[1].Trim()

$l = $r.Split("`n")[14].Split(":")[1].Trim()

$pt + “ “ + $po + ", " + $i + " iops, " + $m + " MB/sec, " + $l + " ms"

$o = $o / 2

$t = $t * 2

}

Here’s the output:

-t 1 -o 32, 32859.30 iops, 256.71 MB/sec, 0 ms

-t 2 -o 16, 35946.30 iops, 280.83 MB/sec, 0 ms

-t 4 -o 8, 35734.80 iops, 279.17 MB/sec, 0 ms

-t 8 -o 4, 35470.69 iops, 277.11 MB/sec, 0 ms

-t 16 -o 2, 35418.60 iops, 276.70 MB/sec, 0 ms

-t 32 -o 1, 35273.60 iops, 275.57 MB/sec, 0 ms

As you can see, in my system, adding a second thread improved things by about 10%, reaching nearly 36,000 IOPS. It seems like we were a bit limited by the performance of a single core. We call that being “core bound”. See below the more even per-core CPU utilization when using 2 threads.

However, 4 threads did not help and the overall CPU utilization was below 50% the whole time. Here’s the full SQLIO.EXE output with my final selected parameters for 8KB random IO in this configuration:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -frandom -b8 -t2 -o16 -LS -BN X:\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337894 counts per second

2 threads reading for 10 secs from file X:\TestFile.DAT

using 8KB random IOs

enabling multiple I/Os per thread with 16 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: X:\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 35917.90

MBs/sec: 280.60

latency metrics:

Min_Latency(ms): 0

Avg_Latency(ms): 0

Max_Latency(ms): 4

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 66 26 7 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

For systems with more capable storage, it’s easier to get “core bound” and adding more threads can make a much more significant difference. As I mentioned, it’s important to to monitor the per-core CPU utilization via Task Manager or Performance monitor to look out for these bottlenecks.

8. Multiple runs are better than one

One thing you might have notice with SQLIO (or any other tools like it) is that the results are not always the same given the same parameters. For instance, one of our “-b8 -t2 -o16” runs yielded 35,946 IOPs while another gave us 35,917 IOPs. How can you tell which one is right? Ideally, once you settle on a specific set of parameters, you should run SQLIO a few times and average out the results. Here’s a sample PowerShell script to do it, using the last set of parameters we used for the 8KB IOs:

$ti=0

$tm=0

$tl=0

$tr=10

1..$tr | % {

$r = C:\SQLIO\SQLIO.EXE -s10 -kR -frandom -b8 -t2 -o16 -LS -BN X:\TestFile.DAT

$i = $r.Split("`n")[10].Split(":")[1].Trim()

$m = $r.Split("`n")[11].Split(":")[1].Trim()

$l = $r.Split("`n")[14].Split(":")[1].Trim()

"Run " + $_ + " = " + $i + " IOPs, " + $m + " MB/sec, " + $l + " ms"

$ti = $ti + $i

$tm = $tm + $m

$tl = $tl + $l

}

$ai = $ti / $tr

$am = $tm / $tr

$al = $tl / $tr

"Average = " + $ai + " IOPs, " + $am + " MB/sec, " + $al + " ms"

The script essentially runs SQLIO that number of times, totalling the numbers for IOPs, throughput and latency, so it can show an average at the end. The $tr variable represents the total number of runs desired. Variables starting with $t hold the totals. Variables starting with $a hold averages. Here’s a sample output:

Run 1 = 36027.40 IOPs, 281.46 MB/sec, 0 ms

Run 2 = 35929.80 IOPs, 280.70 MB/sec, 0 ms

Run 3 = 35955.90 IOPs, 280.90 MB/sec, 0 ms

Run 4 = 35963.30 IOPs, 280.96 MB/sec, 0 ms

Run 5 = 35944.19 IOPs, 280.81 MB/sec, 0 ms

Run 6 = 35903.60 IOPs, 280.49 MB/sec, 0 ms

Run 7 = 35922.60 IOPs, 280.64 MB/sec, 0 ms

Run 8 = 35949.19 IOPs, 280.85 MB/sec, 0 ms

Run 9 = 35979.30 IOPs, 281.08 MB/sec, 0 ms

Run 10 = 35921.60 IOPs, 280.63 MB/sec, 0 ms

Average = 35949.688 IOPs, 280.852 MB/sec, 0 ms

As you can see, there’s a bit of variance there and it’s always a good idea to capture multiple runs. You might want to run each iteration for a longer time, like 60 seconds each.

9. Performance Monitor

Performance Monitor is a tool built into Windows (client and server) that shows specific performance information for several components of the system. For local storage, you can look into details about the performance of physical disks, logical disks and Hyper-V virtual disks. For remote storage you can inspect networking, SMB file shares and much more. In any case, you want to keep an eye on your processors, as a whole and per core.

Here are a few counters we can inspect, for instance, while running that random 8KB IO workload we just finished investigating:

| Counter Set | Counter | Instance | Notes |

| Logical Disk | Avg. Disk Bytes/Transfer | Specific disk and/or Total | Average IO size |

| Logical Disk | Avg. Disk Queue Length | Specific disk and/or Total | Average queue depth |

| Logical Disk | Avg. Disk sec/Transfer | Specific disk and/or Total | Average latency |

| Logical Disk | Disk Bytes/sec | Specific disk and/or Total | Throughput |

| Logical Disk | Disk Transfers/sec | Specific disk and/or Total | IOPs |

| Processor | % Processor Time | Specific core and/or Total | Total CPU utilization |

| Processor | % Privileged Time | Specific core and/or Total | CPU used by privileged system services |

| Processor | % Interrupt Time | Specific core and/or Total | CPU used to handle hardware interrupts |

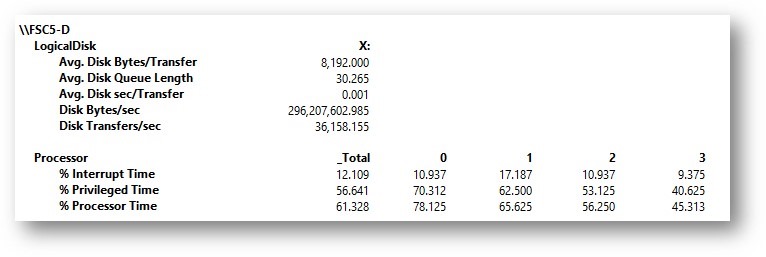

Performance Monitor defaults to a line graph view, but I personally prefer to use the report view (you can get to it from the line chart view by pressing CTRL-G twice). Here’s an example of what I see for my test system while running “C:\SQLIO\SQLIO.EXE -s10 -kR -frandom -b8 -t2 -o16 -LS -BN X:\TestFile.DAT”.

Note 1: Disk counters here are in bytes, base 10. That means that what SQLIO defines as 8KB shows here as 8,192 and the 282.49 MB/sec shows as 296,207,602 bytes/sec. So, for those concerned with the difference between a megabyte (MB) and a mebibyte (MiB), there’s some more food for thought and debate.

Note 2: Performance Monitor, by default, updates the information once every second and you will sometimes see numbers that are slightly higher or slightly lower than the SQLIO full run average.

10. SQLIO and SMB file shares

You can use SQLIO to get the same type of performance information for SMB file shares. It is as simple as mapping the file share to a drive letter using the old “NET USE” command or the new PowerShell cmdlet “New-SmbMapping”. You can also use a UNC path directly instead of using drive letters. Here are a couple of examples:

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -fsequential -b512 -t1 -o3 -LS -BN \\FSC5-D\X$\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337892 counts per second

1 thread reading for 10 secs from file \\FSC5-D\X$\TestFile.DAT

using 512KB sequential IOs

enabling multiple I/Os per thread with 3 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: \\FSC5-D\X$\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 1376.40

MBs/sec: 688.20

latency metrics:

Min_Latency(ms): 2

Avg_Latency(ms): 2

Max_Latency(ms): 3

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 0 0 100 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

Notice I bumped up the queue depth a bit to get the same throughput as we were getting on the local disk. We’re at 2 milliseconds of latency here. As you can probably tell, this SMB configuration is using an RDMA network interface.

PS C:\> C:\SQLIO\SQLIO.EXE -s10 -kR -frandom -b8 -t2 -o24 -LS -BN \\FSC5-D\X$\TestFile.DAT

sqlio v1.5.SG

using system counter for latency timings, 2337892 counts per second

2 threads reading for 10 secs from file \\FSC5-D\X$\TestFile.DAT

using 8KB random IOs

enabling multiple I/Os per thread with 24 outstanding

buffering set to not use file nor disk caches (as is SQL Server)

using current size: 40960 MB for file: \\FSC5-D\X$\TestFile.DAT

initialization done

CUMULATIVE DATA:

throughput metrics:

IOs/sec: 34020.69

MBs/sec: 265.78

latency metrics:

Min_Latency(ms): 0

Avg_Latency(ms): 0

Max_Latency(ms): 6

histogram:

ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+

%: 44 33 15 6 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

Again I increased the queue depth a bit to get the best IOPs in this configuration. This is also close to the local performance and average latency is still under 1 millisecond.

11. Performance Monitor and SMB shares

When using Performance Monitor to look at SMB Shares, you should use the “SMB Client Shares” set of performance counters. Here are the main counters to watch:

| Counter Set | Counter | Instance | Notes |

| SMB Client Shares | Avg. Data Bytes/Request | Specific share and/or Total | Average IO size |

| SMB Client Shares | Avg. Data Queue Length | Specific share and/or Total | Average queue depth |

| SMB Client Shares | Avg. Sec/Data Request | Specific share and/or Total | Average latency |

| SMB Client Shares | Data Bytes/sec | Specific share and/or Total | Throughput |

| SMB Client Shares | Data Requests/sec | Specific share and/or Total | IOPs |

Also, here is a view of performance monitor while running the random 8KB workload shown above:

12. Conclusion

I hope you have learned how to use SQLIO to perform some storage testing of your own. I encourage you to use it to look at the performance of the new features in Windows Server 2012, like Storage Spaces and SMB 3.0. Let me know if you were able to try it out and feel free to share some of your experiments via blog comments.

Comments

- Anonymous

March 28, 2013

awesome very good work. - Anonymous

April 01, 2013

I've used this in my DEMO tests and it worked very wellThanks for sharing this, awesome work! - Anonymous

December 17, 2014

Hi, long time since my last blog and the first time to write a blog in English as some people asked me - Anonymous

February 10, 2016

Hi Jose,Thank you for this post, it is very helpful and very well written.I have a question for you, can you please take a look, here are 2 SQLIO runs to SMB share, 1st run against SMB2 share on Windows server 2012R2, 2nd against SMB2 share on SMB server (our implementation) on Linux, in both cases same server hardware used : Intel Xeon E5-2609 v3 1.90GHz , 32 GB RAM , 500 GB HDD and 10GbE interface card.The result with our SMB server is very good in comparison with Windows, although hard to beleive, do you have any thoughts?with Windows:C:\MngNQServer\sqlio>sqlio.exe -s60 -kR -frandom -b4 -t1 -o8 -LS -BN Z:\testfile.datsqlio v1.5.SGusing system counter for latency timings, 1853321 counts per second1 thread reading for 60 secs from file Z:\testfile.dat using 4KB random IOs enabling multiple I/Os per thread with 8 outstanding buffering set to not use file nor disk caches (as is SQL Server)using current size: 100 MB for file: Z:\testfile.datinitialization doneCUMULATIVE DATA:throughput metrics:IOs/sec: 828.81MBs/sec: 3.23latency metrics:Min_Latency(ms): 0Avg_Latency(ms): 9Max_Latency(ms): 207histogram:ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+%: 16 26 8 5 3 3 3 3 3 3 2 1 1 1 1 1 1 1 1 1 1 1 1 1 12with our SMB server:C:\MngNQServer\sqlio>sqlio.exe -s60 -kR -frandom -b4 -t1 -o8 -LS -BN Z:\testfile.datsqlio v1.5.SGusing system counter for latency timings, 1853321 counts per second1 thread reading for 60 secs from file Z:\testfile.dat using 4KB random IOs enabling multiple I/Os per thread with 8 outstanding buffering set to not use file nor disk caches (as is SQL Server)using current size: 100 MB for file: Z:\testfile.datinitialization doneCUMULATIVE DATA:throughput metrics:IOs/sec: 25342.30MBs/sec: 98.99latency metrics:Min_Latency(ms): 0Avg_Latency(ms): 0Max_Latency(ms): 20histogram:ms: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24+%: 100 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0Thank you in advance,Lilia - Anonymous

October 23, 2016

awesome ,thanks for sharing this