Replace your Cron/Batch servers with Azure Functions

In my last blog post, I talked about a solution that I built for one of my customers that leveraged Azure Functions. That particular solution used an Event Trigger to force the function to be processed. In other words, the function would not run unless something happened in another Azure resource, which in that case was a Blob-based Storage Account. However, Azure Functions supports two other triggering mechanisms that can force a function to be processed: Timers and Webhooks.

It is the Timer triggering capability that I would like to talk about this time, as it is a perfect mechanism for customers to get rid of the backend batch or cron servers that run their regularly scheduled tasks, especially those that interact with Azure. As long as the process being performed is written in, or can be converted to, one of the supported Azure Functions programming languages (see below), it should be pretty straightforward to move the code and retire the VMs or hardware that you are supporting today.

Available Languages

- JavaScript (Node.js)

- C#/F#

- PowerShell

- Java

- PHP

Timer Trigger

Let’s start with a quick explanation of what the Timer Trigger is within the scope of an Azure Function. It is very simple: I have a piece of code that I want to run over and over again based on a schedule. Some of you may be asking yourself, “Can’t Azure Automation do that today for PowerShell scripts?” Yes, it absolutely can, but not down to the minute like a cron-based timer can. Azure Automation runbooks can only be scheduled down to the hour, and they only support PowerShell. The Timer Trigger within Azure Functions provides down-to-the-minute scheduling, and Azure Functions supports many more languages beyond PowerShell.
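As a rough illustration of what such a scheduled function looks like in Node.js, here is a minimal sketch. It assumes the callback-style JavaScript programming model, where the runtime passes in a `context` object exposing `context.log` and `context.done`. The names `timerHandler`, `myTimer`, and `runScheduledTask` are placeholders of mine, and the schedule itself lives in the function's trigger configuration rather than in the code.

```javascript
// Hypothetical work to run on each tick; replace with your own batch logic.
function runScheduledTask(now) {
    return 'Scheduled task ran at ' + now.toISOString();
}

// Timer-triggered entry point (callback-style model, assumed here).
// The runtime invokes this on the schedule configured for the trigger.
function timerHandler(context, myTimer) {
    var message = runScheduledTask(new Date());
    context.log(message);   // written to the function's log stream
    context.done();         // signal that this invocation has finished
}

module.exports = timerHandler;
```

Everything that used to live in the cron job's script goes where `runScheduledTask` is called; the handler itself stays this small.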

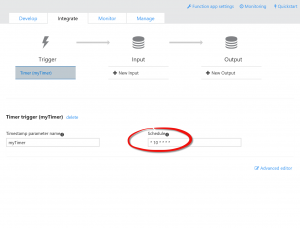

Example of a Timer Trigger within the Azure Functions Integrate tab

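NOTE: The Timer Trigger schedule is written as a cron expression, so the scheduling rules you know from cron on Linux carry over. The main difference is that Azure Functions uses a six-field format with a leading seconds field.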
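One detail to check when porting an existing crontab: to my knowledge, the Timer Trigger expects a six-field expression that starts with a seconds field, whereas a classic Linux crontab entry has five fields. The helper below is a small sketch of that conversion. `toTimerSchedule` is a hypothetical name, and it only handles plain five-field entries (no `@daily`-style shortcuts).

```javascript
// Convert a five-field Linux cron expression into the six-field form
// (seconds first) by pinning the seconds field to 0.
function toTimerSchedule(cronExpression) {
    var fields = cronExpression.trim().split(/\s+/);
    if (fields.length !== 5) {
        throw new Error('Expected 5 cron fields, got ' + fields.length);
    }
    return ['0'].concat(fields).join(' ');
}

console.log(toTimerSchedule('*/15 * * * *')); // 0 */15 * * * *
console.log(toTimerSchedule('30 2 * * 1-5')); // 0 30 2 * * 1-5
```

It is still worth reviewing each converted schedule by hand, since time zone handling on the hosting side may differ from the server you are retiring.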

Why Azure Functions?

Why would a customer want to move to Azure Functions rather than keep their Cron/Batch servers in place? The biggest and most obvious reason is operational efficiency. What if your IT team didn’t have to worry about patching servers anymore, or replacing hardware when it dies or reaches its end of life? What if you could save money on power consumption and get back some much-needed real estate in your data center? Those are some of the reasons why you should use Azure Functions.

In addition to the savings, Azure Functions brings benefits that are simply not achievable without a cloud service. The primary one is the dynamic nature of the Azure Functions service. Unlike a VM or App Service, where you pay for every hour that the service is in use, Azure Functions can be configured so that you are only charged each time the function is actually processed. Another major benefit is the direct integration with other Azure services, such as Storage, Event Hubs, and cloud-based databases, which you would otherwise have to build yourself.

Use Case

The most common use case that I have seen for customers to use Cron/Batch servers & processes is around the movement of data. As long as that data is not being accessed from local hard drives, then Azure Functions is a perfect place to manage those types of processes. In the case of my customer, they are pulling down a lot of different types of data from different FTP and REST based services. They are then making modifications to the data before combining it with their own data to perform some kind of analytics against it.

Yes, this does sound an awful lot like ETL (Extract, Transform, and Load) processing, which Azure does have a service to handle (Azure Data Factory). However, a lot of our customers already have their own ETL processes built, and with Azure Functions they can move them almost as-is and remove the burden of managing separate servers to run them. The only thing that will definitely have to change is the way that logging messages are handled.
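To give a sense of how small that logging change can be, here is a sketch of a wrapper that writes through the function's `context.log` when a context is supplied and falls back to `console.log` on a development machine. The `createLogger` name is my own invention for illustration, and the sketch assumes `context.log` accepts a single string message.

```javascript
// Return a logging function that works both locally and inside a function.
// `context` is the object the Functions runtime passes to the handler;
// when it is absent (local run), fall back to the console.
function createLogger(context) {
    var sink = (context && typeof context.log === 'function')
        ? function (message) { context.log(message); }
        : function (message) { console.log(message); };

    return function log() {
        // Join all arguments into one space-separated line.
        var message = Array.prototype.slice.call(arguments).join(' ');
        sink(message);
    };
}

// Local usage: no context, so this prints to the console.
var log = createLogger();
log('Downloading File:', 'example.log');
```

With a wrapper like this, the body of an existing script can keep calling `log(...)` and only the single `createLogger(context)` line changes once the code runs inside a function.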

In this particular instance, we need to download log files from a third-party source, rename them, and then save them in an Azure Storage Account. Once they are saved within the Storage Account, a secondary Azure Function automatically copies them to a Cool Tier Storage Account for geo-redundancy and disaster recovery purposes. The function was developed in Node.js, and the process was pretty straightforward. All development and testing was done on a development laptop, using the same libraries and resources that would be used in the Azure Portal.

The code for the deployed function can be found below:

    var client = require('ftp');
    var azure = require('azure-storage');
    var async = require('async');

    // Configurations for the FTP connection
    var ftpconfig = {
        host: "<ftpserver_URL>",
        user: "<user_name>",
        password: "<password>"
    };
    var path = "/92643/logs/fd/api";

    // Configurations for the Azure Storage Account connection
    var storageConfig = {
        account: "apilogfilesprimary",
        key: "<storage_key>",
        container: "logs"
    };

    // Create the connection to the Azure Storage Account
    var blobService = azure.createBlobService(storageConfig.account, storageConfig.key);

    // Open the FTP connection using the information in the ftpconfig object
    var ftp = new client();
    ftp.on('ready', function() {
        console.log("Connected to Akamai Accuweather Logs FTP");
        // After connecting, change the work directory to the path for all API logs.
        ftp.cwd(path, function(err, currentDir) {
            if (err) console.log('FTP CWD Error: ', err);
            else {
                // Create a list of all the objects that are currently in the FTP Path
                ftp.list(function(err, list) {
                    if (err) console.log('FTP List Error: ', err);
                    else {
                        // Process the files one at a time over the single FTP connection
                        async.eachSeries(list, function(file, callback) {
                            console.log('Downloading File: ', file.name, ' with Size: ', file.size);
                            ftp.get(file.name, function(err, stream) {
                                if (err) {
                                    console.log('FTP Get Error: ', err);
                                    return callback(err);
                                }
                                var azureFileName = returnUpdatedFileName(file.name);
                                // Upload the file to the Storage Account
                                blobService.createBlockBlobFromStream(storageConfig.container, azureFileName, stream, file.size, function(err, result, response) {
                                    if (err) {
                                        console.log('Azure Storage Error: ', err);
                                        return callback(err);
                                    }
                                    console.log("Successfully uploaded ", azureFileName, " to Azure Storage.");
                                    // Only after a successful upload, delete the file from the FTP server
                                    ftp.delete(file.name, function(err) {
                                        if (err) console.log('FTP Delete Error: ', err);
                                        else console.log('Successfully deleted: ', file.name);
                                        // Tell async that this file is finished (or failed)
                                        callback(err);
                                    });
                                });
                            });
                        }, function(err) {
                            if (err) console.log('A file failed to either download or get created within Azure: ', err);
                            else console.log('All files were downloaded and subsequently created within Azure');
                            // Close the FTP connection once every file has been processed
                            ftp.end();
                        });
                    }
                });
            }
        });
    });

    // Prefix the file name with a year/month/day path taken from the date
    // in the third dot-separated segment of the name
    function returnUpdatedFileName(file) {
        var results = file.split(".");
        var year = results[2].substring(0, 4);
        var month = results[2].substring(4, 6);
        var day = results[2].substring(6, 8);
        return year + "/" + month + "/" + day + "/" + file;
    }

    ftp.connect(ftpconfig);

NOTE: Three libraries are loaded in the code above. They were installed using the standard command-line tools and the Node Package Manager (npm) that are available within a deployed App Service. In addition, all logging was done using standard console logging during development and then switched to the WebJobs SDK logging once deployed into the Azure Function based App Service.
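To make the renaming step concrete, here is the same file-name logic run against a made-up name. The sample name `api.log.20170215.gz` is purely hypothetical (the real log names are not shown here). The sketch only assumes, as the function above does, that the third dot-separated segment starts with a `YYYYMMDD` date. I have also added a guard for names that do not match, which the deployed function does not have, and `datedBlobName` is an illustrative name of my own.

```javascript
// Build a "YYYY/MM/DD/<original name>" blob path from a log file name whose
// third dot-separated segment begins with a YYYYMMDD date.
function datedBlobName(file) {
    var segments = file.split('.');
    var stamp = segments[2] || '';
    if (!/^\d{8}/.test(stamp)) {
        throw new Error('No YYYYMMDD date found in: ' + file);
    }
    var year = stamp.substring(0, 4);
    var month = stamp.substring(4, 6);
    var day = stamp.substring(6, 8);
    return year + '/' + month + '/' + day + '/' + file;
}

console.log(datedBlobName('api.log.20170215.gz')); // 2017/02/15/api.log.20170215.gz
```

Because blob names may contain `/`, the result shows up as a year/month/day folder hierarchy inside the container, which makes it easy to browse the logs by date.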

Conclusion

Needless to say, this is just one simple example, but a worthwhile one as far as my customer is concerned. Hopefully it gives you a glimpse into how the Timer Trigger within Azure Functions can be used to replace your cron jobs or batch processes and thereby reduce your operational and administrative requirements.