Azure Storage Account Security

There have been a number of recent high-profile data exposures involving data stored in cloud services. Often, data in these services isn't properly secured, leaving sensitive information exposed and putting customers and businesses at risk. Azure Blob storage is a cost-effective way to store data, from key/value pairs to individual binary files, and Microsoft provides several mechanisms to help you properly secure that data. In this post, we'll look at methods you can use to secure data stored as blobs in Azure Storage Accounts.

Create a storage account

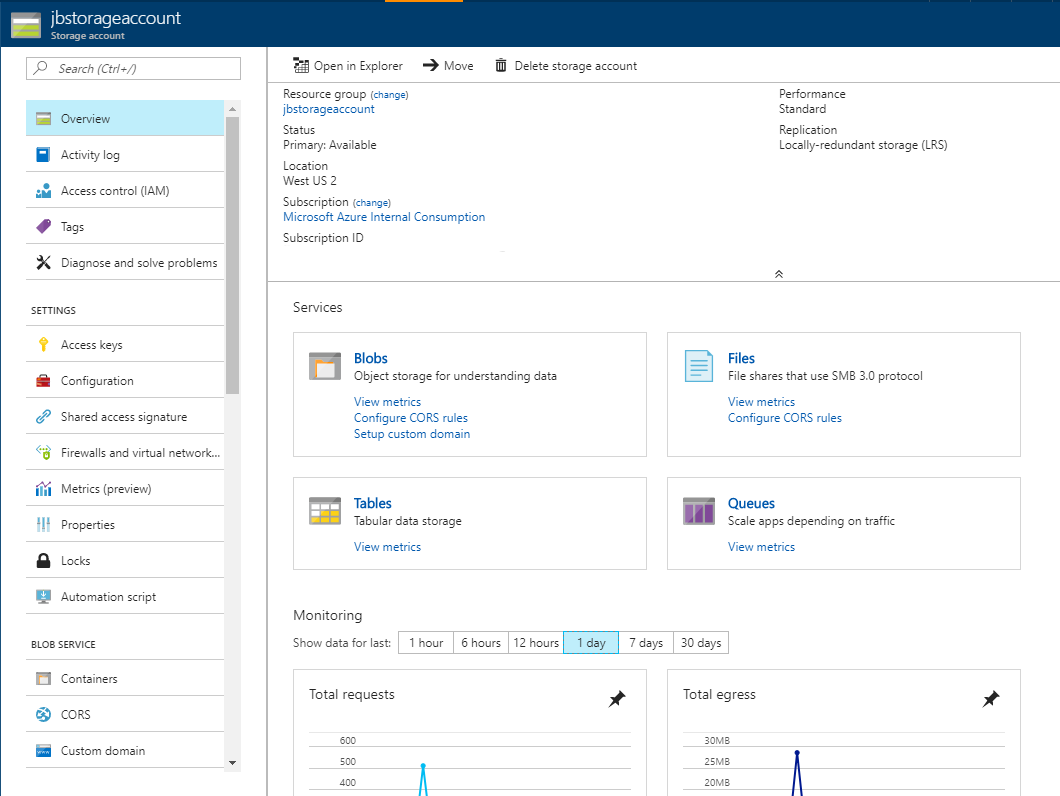

Let's create a storage account to use for demonstration purposes. In the Azure Portal, click the + New link in the top left and search for "storage account". The first result should be "Storage account - blob, file, table, queue". Select it and click Create to open the Create storage account dialog. Choose a name (it must be a globally unique DNS name), select or create a resource group, choose a region, and accept the defaults for the remaining options. Click Create, and within a few seconds your storage account should be ready.

A storage account on its own gives you a logical place to store and manage data of varying types. To store blob data, you'll also need to create a container to hold blob objects. Go to the main blade of your storage account and click the Blobs link to open the Blob service blade. Click the + Container link at the top to open the New container dialog. Give your container a name and click OK.
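The portal rejects invalid names, but if you script account or container creation it can help to check names up front. This is a minimal sketch, assuming the published naming rules: storage account names are 3 to 24 lowercase letters and digits, and container names are 3 to 63 lowercase letters, digits and hyphens, starting with a letter or digit, with every hyphen between two letters or digits. The function names are hypothetical, and a format check cannot confirm that an account name is globally unique; only Azure can do that.

```python
import re

# Storage account names: 3-24 characters, lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")

# Container names: lowercase letters, digits and hyphens; must start and end with
# a letter or digit, and hyphens cannot be consecutive (length is checked separately).
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_account_name(name: str) -> bool:
    """Format check only: global uniqueness can only be confirmed by Azure."""
    return _ACCOUNT_NAME.fullmatch(name) is not None


def is_valid_container_name(name: str) -> bool:
    """Format check for a blob container name."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for name in ("mystorageacct01", "MyStorageAcct", "my-storage"):
        print(name, is_valid_account_name(name))
    for name in ("quarterly-reports", "reports-", "my--reports"):
        print(name, is_valid_container_name(name))
```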

Securing access to blob data

Access to blob data within a storage account can be secured at two levels: the storage account level and the container level.

At the storage account level, you can either use storage account keys to access resources or generate a shared access signature (SAS) to provide more granular access. Treat storage account keys as highly sensitive credentials; think of them as 'root' credentials for your storage account. Do not share these keys, and avoid hardcoding them or storing them in unencrypted or shared locations. Rotate these keys regularly so that a compromised key can no longer be used, and integrate this into your overall credential rotation policies and procedures. For applications that need access to the storage account keys, you can use the newly announced Managed Service Identity to authenticate your application to Azure resources without exposing any keys.
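Because each storage account has two access keys (key1 and key2), you can rotate them without downtime: move your applications to the key you are not rotating, then regenerate the other one. The sketch below models that ordering with a hypothetical `StorageAccountKeys` class; it does not call Azure, and the random key generation only stands in for what the service does when you regenerate a key. In practice, the hypothetical `switch_clients` callback would update wherever your applications read the key from.

```python
import base64
import secrets
from dataclasses import dataclass, field
from typing import Callable


def _new_key_value() -> str:
    # Stand-in for the service generating a key; the 64-byte length is an assumption.
    return base64.b64encode(secrets.token_bytes(64)).decode("ascii")


@dataclass
class StorageAccountKeys:
    """Hypothetical model of a storage account's two access keys."""
    keys: dict = field(
        default_factory=lambda: {"key1": _new_key_value(), "key2": _new_key_value()}
    )

    def regenerate(self, name: str) -> None:
        """Replace a key; anything still using (or signed with) the old value stops working."""
        self.keys[name] = _new_key_value()

    def accepts(self, key_value: str) -> bool:
        return key_value in self.keys.values()


def rotate(account: StorageAccountKeys, active: str,
           switch_clients: Callable[[str], None]) -> str:
    """Rotate `active` without downtime; returns the name of the key clients now use."""
    standby = "key2" if active == "key1" else "key1"
    # Step 1: point applications (for example, your secret store) at the standby key.
    switch_clients(account.keys[standby])
    # Step 2: only then regenerate the key they were using.
    account.regenerate(active)
    return standby


if __name__ == "__main__":
    account = StorageAccountKeys()
    old_value = account.keys["key1"]
    in_use = rotate(account, "key1", switch_clients=lambda value: None)  # no-op stand-in
    print(in_use)
    print(account.accepts(old_value))
    print(account.accepts(account.keys[in_use]))
```

Running the rotation again later with `active="key2"` alternates between the two keys, so one key is always valid while the other is being replaced.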

A SAS is the mechanism for delegating access to applications or requests that need access to blob storage, and it can also grant access to the other services in a storage account (files, tables and queues). You can further restrict a SAS to specific resource types, grant specific permissions, limit the time window during which it is valid, restrict it to an IP address or range, and require HTTPS. A SAS is signed with one of your account keys, so regenerating that key also invalidates every SAS generated with it. A SAS takes the form of a URI, and it can be generated in your application through Azure REST calls or the Azure Storage client library for your development language of choice.
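To see why regenerating a key invalidates its SAS URIs, it helps to know that the SAS signature is an HMAC-SHA256 computed over the signed parameters, keyed with the base64-decoded account key. The sketch below is illustrative only: the parameter names (`sv`, `ss`, `srt`, `sp`, `st`, `se`, `sip`, `spr`, `sig`) follow the account SAS format, but the string-to-sign is a simplified assumption that will not produce tokens the service accepts, and `verify_sas` models only the signature check, not the start/expiry, IP or protocol checks. The keys, account name and values are hypothetical. In real code, generate SAS tokens with the Azure Storage client library.

```python
import base64
import hashlib
import hmac
from urllib.parse import parse_qsl, urlencode

# ASSUMPTION: simplified field order, loosely modeled on an account SAS.
# The real string-to-sign depends on the service version (sv) and differs.
_SIGNED_FIELDS = ("sp", "ss", "srt", "st", "se", "sip", "spr", "sv")


def _string_to_sign(account: str, params: dict) -> str:
    return "\n".join([account] + [params.get(f, "") for f in _SIGNED_FIELDS]) + "\n"


def _sign(account_key_b64: str, string_to_sign: str) -> str:
    """HMAC-SHA256 keyed with the base64-decoded account key, base64-encoded."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def make_sas(account: str, account_key_b64: str, params: dict) -> str:
    """Return a SAS query string: the signed parameters plus `sig`."""
    sig = _sign(account_key_b64, _string_to_sign(account, params))
    return urlencode({**params, "sig": sig})


def verify_sas(account: str, account_key_b64: str, query: dict) -> bool:
    """Model of the service-side signature check only."""
    params = {k: v for k, v in query.items() if k != "sig"}
    expected = _sign(account_key_b64, _string_to_sign(account, params))
    return hmac.compare_digest(expected, query.get("sig", ""))


if __name__ == "__main__":
    key1 = base64.b64encode(b"hypothetical-key-1").decode("ascii")
    params = {
        "sv": "2017-04-17",                  # example service version
        "ss": "b",                           # blob service only
        "srt": "o",                          # object-level (blob) resources
        "sp": "r",                           # read permission
        "st": "2017-09-01T00:00:00Z",        # start of the time window
        "se": "2017-09-02T00:00:00Z",        # expiry
        "sip": "203.0.113.0-203.0.113.255",  # example IP range
        "spr": "https",                      # HTTPS only
    }
    query = dict(parse_qsl(make_sas("mystorageacct01", key1, params)))
    print(verify_sas("mystorageacct01", key1, query))                   # True
    print(verify_sas("mystorageacct01", key1, {**query, "sp": "rwd"}))  # False: tampered
    regenerated = base64.b64encode(b"hypothetical-key-1-new").decode("ascii")
    print(verify_sas("mystorageacct01", regenerated, query))            # False: key rotated
```

Changing any signed parameter (for example, widening `sp` from read to read/write/delete) or regenerating the key breaks the signature, which is why the holder of a SAS cannot extend its own permissions and why key rotation revokes outstanding SAS tokens.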

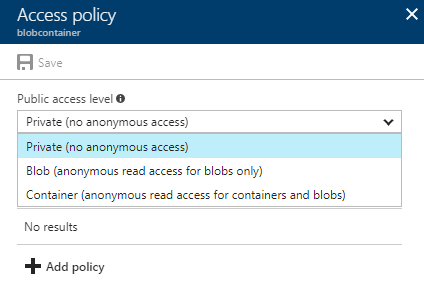

At the container level, access is controlled either through a SAS or through the container's public access level. The public access level specifies what type of access unauthenticated users have. By default, containers have a Private access level, meaning unauthenticated users have no access to the container or any of its blobs. There are two other access levels: Container and Blob. Container allows anonymous access to the container's properties, metadata and contained blobs, while Blob allows anonymous access to the blobs themselves only. Unless your data is truly public in nature, keep the container's public access level set to Private so that unauthenticated users have no access to its blobs.
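The three public access levels can be summarized as a small lookup table of what an anonymous (unauthenticated) request may do. The operation names below are hypothetical labels rather than Azure REST operation names, and the model covers only anonymous reads; writes and deletes always require authorization.

```python
from enum import Enum


class PublicAccess(Enum):
    PRIVATE = "private"      # default: no anonymous access
    BLOB = "blob"            # anonymous reads of individual blobs only
    CONTAINER = "container"  # anonymous reads of blobs, container properties/metadata, and listing


# Hypothetical operation labels (not Azure REST operation names).
_ANONYMOUS_ALLOWED = {
    PublicAccess.PRIVATE: frozenset(),
    PublicAccess.BLOB: frozenset({"read_blob"}),
    PublicAccess.CONTAINER: frozenset({"read_blob", "list_blobs", "read_container_properties"}),
}


def anonymous_request_allowed(level: PublicAccess, operation: str) -> bool:
    """Anything not listed for the level (including every write or delete) is denied."""
    return operation in _ANONYMOUS_ALLOWED[level]


if __name__ == "__main__":
    for level in PublicAccess:
        allowed = sorted(op for op in ("read_blob", "list_blobs", "write_blob")
                         if anonymous_request_allowed(level, op))
        print(level.value, allowed)
```

One practical difference the table makes visible: with Blob, an anonymous user can read a blob only if they already know its URL, while Container also lets them enumerate every blob in the container.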

Securing data at rest

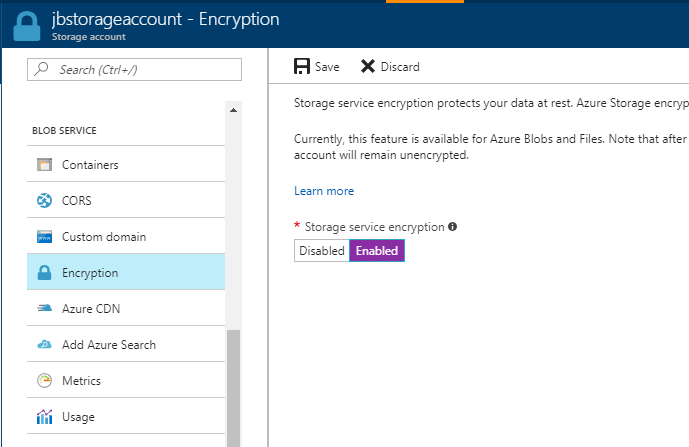

There are two key methods you can use to secure data at rest. You can use Storage Service Encryption (SSE) to encrypt data at rest in Azure Storage, or you can use client-side encryption to encrypt the data itself. The key difference is the level at which the data is encrypted. With SSE, data is encrypted as it resides on the physical disks in Azure, but the encryption is transparent to anyone authorized to read it: requests to the storage account still return the original, unencrypted content. With client-side encryption, an outside application (the client) encrypts the data before it is written to Azure Storage, so if the data is exposed it remains encrypted and cannot be read without access to the encryption keys. Client-side encryption adds resource requirements and requires integrating encryption functionality into your application, but it provides an additional layer of security that can be advantageous.

On 8/31/2017 we announced that storage accounts would have SSE enabled by default, ensuring a baseline level of encryption for data at rest. If you have an existing account, you can enable encryption on the Encryption panel within the Blob service. Note that enabling it will not encrypt existing data, only data newly written to the storage account.

Securing data in transit

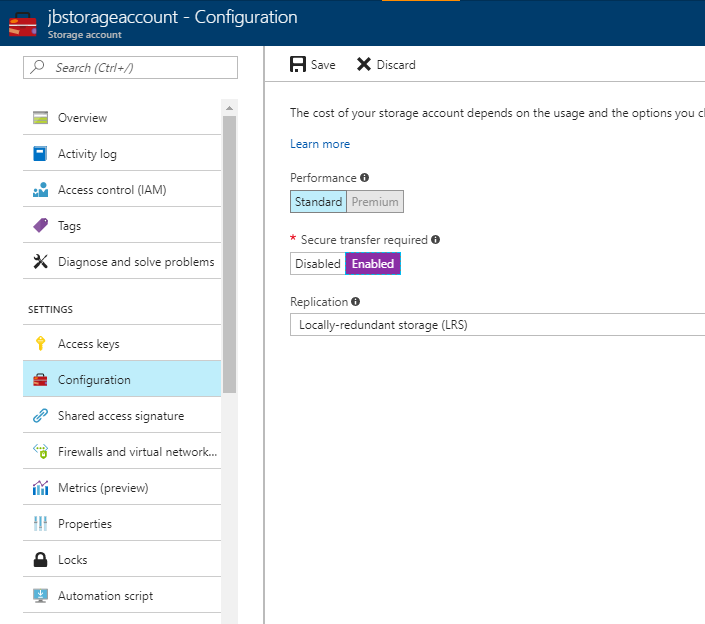

By default, requests to Azure Storage can be made over either HTTP or HTTPS. To enforce HTTPS only, enable the Secure transfer required option in the Configuration panel; with it enabled, requests made over HTTP are refused. Note that this is a storage account-level setting, that it does not work with custom domain names, and that, depending on the type of client, it may cause some requests to Azure Files to fail.

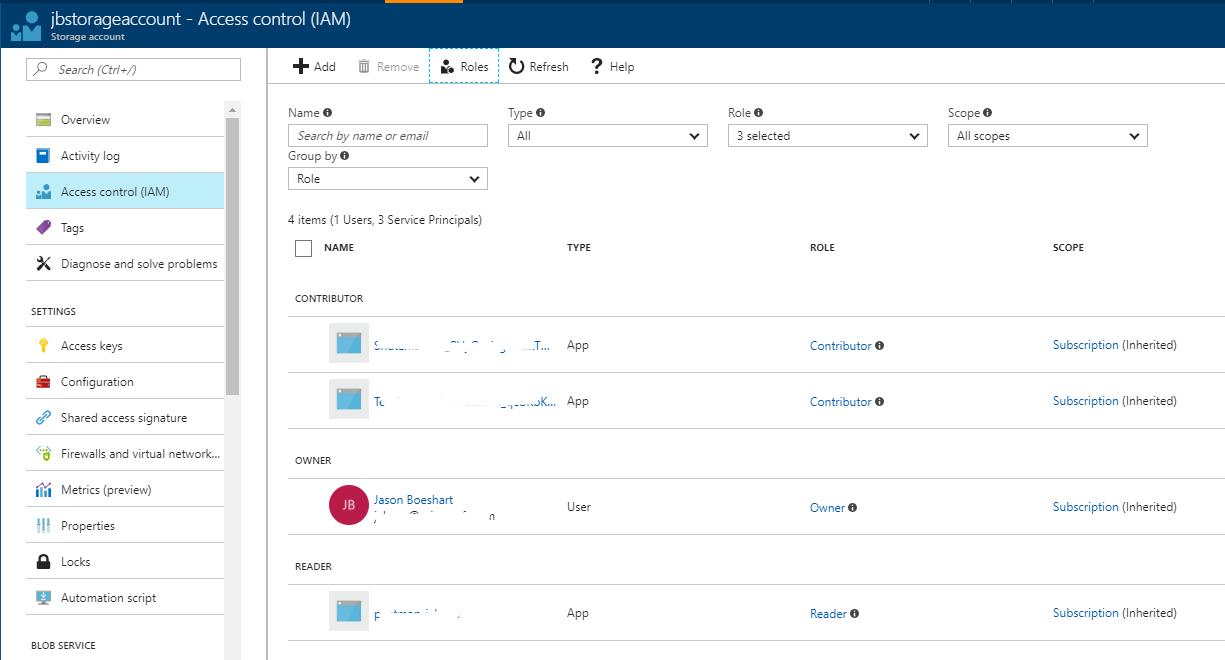
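The Secure transfer required setting is enforced by the service, but applications can also refuse to send requests over plain HTTP as a defense-in-depth measure. This sketch shows both sides: `service_accepts` is a hypothetical model of the account-level setting, and `require_https` is a client-side guard you might call before handing a blob URL to an HTTP library. The account, container and blob names are placeholders.

```python
from urllib.parse import urlsplit


class InsecureTransportError(ValueError):
    """Raised when a storage request would be sent over plain HTTP."""


def service_accepts(url: str, secure_transfer_required: bool) -> bool:
    """Hypothetical model of the account-level 'secure transfer required' setting."""
    # urlsplit lowercases the scheme, so "HTTPS://..." is treated as HTTPS.
    return urlsplit(url).scheme == "https" or not secure_transfer_required


def require_https(url: str) -> str:
    """Client-side guard: refuse to use a non-HTTPS storage URL."""
    if urlsplit(url).scheme != "https":
        raise InsecureTransportError(f"refusing non-HTTPS storage URL: {url}")
    return url


if __name__ == "__main__":
    http_url = "http://mystorageacct01.blob.core.windows.net/reports/q3.csv"
    https_url = "https://mystorageacct01.blob.core.windows.net/reports/q3.csv"
    print(service_accepts(http_url, secure_transfer_required=True))
    print(service_accepts(http_url, secure_transfer_required=False))
    print(require_https(https_url))
    try:
        require_https(http_url)
    except InsecureTransportError as err:
        print(err)
```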

Securing access to administer storage accounts

Azure role-based access control (RBAC) is used to secure management access to storage accounts, whether through the portal, PowerShell or the CLI. Access to the storage account keys is also secured through RBAC, so make sure that only the individuals who need access to storage accounts are granted it. In addition to the Owner, Contributor and Reader roles, there are two built-in roles you can use to grant users access to storage accounts: Storage Account Contributor and Storage Account Key Operator Service Role. These grant a more limited set of permissions focused on storage accounts and can be used to give storage administrators only the access they need. The bottom line is that you'll want to limit access to the storage account keys to reduce the likelihood of key exposure.
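To make the key exposure point concrete: retrieving account keys is governed by the `Microsoft.Storage/storageAccounts/listkeys/action` operation, so any role whose actions match it can obtain the 'root' credentials. The sketch below uses abbreviated versions of the built-in role definitions (the real ones include additional Actions and NotActions, so check the published definitions) and a simplified, case-insensitive wildcard match to check which roles grant that operation.

```python
from fnmatch import fnmatchcase

LIST_KEYS = "Microsoft.Storage/storageAccounts/listkeys/action"

# ABBREVIATED built-in role definitions as (Actions, NotActions); the real
# definitions contain more entries than shown here.
ROLES = {
    "Owner": (["*"], []),
    "Contributor": (["*"], ["Microsoft.Authorization/*/Delete",
                            "Microsoft.Authorization/*/Write"]),
    "Reader": (["*/read"], []),
    "Storage Account Contributor": (["Microsoft.Storage/storageAccounts/*"], []),
    "Storage Account Key Operator Service Role": (
        ["Microsoft.Storage/storageAccounts/listkeys/action",
         "Microsoft.Storage/storageAccounts/regeneratekey/action"],
        [],
    ),
}


def _matches(operation: str, pattern: str) -> bool:
    # Simplified: case-insensitive match where '*' matches any run of characters.
    return fnmatchcase(operation.lower(), pattern.lower())


def role_permits(role: str, operation: str) -> bool:
    """Allowed if some Action matches and no NotAction matches."""
    actions, not_actions = ROLES[role]
    return (any(_matches(operation, p) for p in actions)
            and not any(_matches(operation, p) for p in not_actions))


if __name__ == "__main__":
    for role in ROLES:
        print(f"{role}: can list keys = {role_permits(role, LIST_KEYS)}")
```

In this model every role except Reader can list the keys, so granting Contributor on a resource group effectively hands out the keys for every storage account in it.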

Key takeaways

So what is important to remember out of all of this?

- By default (using the default creation options), containers do not allow anonymous access to the blobs stored in them. Unless you have specific requirements to make blobs public, keep this default setting.

- Use Managed Service Identities to allow applications to access keys, eliminating the need to add them directly to code or configuration files.

- Generate a SAS when accessing storage through applications. A SAS gives you more granular control over access to a storage account than an account key does.

- Regularly rotate your storage account keys. Add this to your regular credential rotation process. Keep in mind that any SAS generated with the rotated key will no longer work, so you'll need to generate new SAS for those instances.

- Use HTTPS wherever possible, and enforce secure transfer on storage accounts to prevent HTTP access.

- Enable encryption at rest for your storage accounts, and leverage client-side encryption for sensitive data.

- Grant access to storage account administration through RBAC only to the individuals who specifically need access.

Using these recommendations will help ensure your Azure Blob data remains secure. For more details and in-depth documentation on Azure Storage security, take a look at the following links.

Azure Storage Security Guide: /en-us/azure/storage/common/storage-security-guide

Manage Anonymous Read Access to Containers and Blobs: /en-us/azure/storage/blobs/storage-manage-access-to-resources