Parallelism: In the Cloud, Cluster and Client

Recently I had the opportunity to keynote at the Intel Tech Days conference, and I chose to talk about the trends in Parallelism. I thought I’d share the “presentation” with you, and at the end I will weave in the topic of “testing”. I hope you enjoy the post.

Software and digital experiences are becoming ubiquitous in our lives. Software is everywhere – it is stocking supermarket shelves, delivering electricity and water to our homes, storing our personal information in computers around the world, running nuclear plants and even controlling doomsday weapons! Moreover, with the popularity of the Internet and mobile devices, and with advances in graphical and natural user interfaces, creative minds and innovative companies are making the digital age more personal, social and indispensable.

This is leading to three fundamental trends in the world today:

- Data explosion – the phenomenal growth of raw digital data

- Demand for computational power for transforming raw data into meaningful information

- Exponential growth in the number of users getting on to the digital super highway

The explosion of data, information and users

The data explosion itself is mind-boggling. Research analysts revealed that:

- Last year, despite the recession, the total amount of digital data in the world grew by an astonishing 62 percent to 0.8 zettabytes (a zettabyte is a billion terabytes). If you were to burn all of this data onto DVDs and stack them up from the earth’s surface, the pile would reach the moon!

- At this rate of growth, by 2020 the world’s digital information will be about 44 times what it is today, that is, 35 zettabytes, and the stack of DVDs would be high enough to touch Mars!

Raw data by itself is not of much use to individuals and businesses. However, if we can harness the right amount of computational power and processing speed, we can turn that data into experiences that will transform the lives of individuals and business users. Think about some of the experiences we can potentially enable in areas such as:

- Financial modeling – personalized investment tools with rich analytics of complex financial scenarios

- Personalized Immune System

- Real-time Model based Computing

- Individualized Semantic Search Engines

These advances and possibilities will, of course, lead to a huge explosion of individual users accessing these services over the Internet or using their computers and personalized digital devices. Here is a chart that predicts the explosion we are talking about:

Will advances in computational power be ready for this explosion?

All of the above sounds promising. But transforming raw data into life-transforming experiences that cater to a few billion people will require a tremendous amount of computational power. Will the revolution in PC performance of the last few decades be up to this challenge? We obviously cannot go back to huge, expensive supercomputers that individuals cannot easily and personally access.

Fortunately, there are three great trends here, which hold the promise of solving this problem. These are:

- Multi-core and Manycore client systems

- The Cloud

- HPC environment for the Cluster

Depending on the class of problem you are trying to solve, one or more of these trends can be used effectively.

To understand the promise of, and the need for, Manycore systems, it is important to review the advances made over the last several decades in the performance of the microprocessors that power PCs and mobile devices.

For several decades now, Gordon Moore’s famous prediction from the 60s, now better known as Moore’s Law, has been driving the improvements in processor performance. The law states that the number of transistors placed on an integrated circuit doubles every 18 to 24 months, which roughly leads to a similar increase in clock speed and processor performance over the same period. Companies like Intel have demonstrated this in practice, and the last several decades have seen its impact on clock speed and processor performance.

However, we are now nearing the limits of what can be physically accomplished in fabrication and layout. And as you see in the graph above, the clock speed and processor performance gains from Moore’s Law have hit a plateau.

Manycore systems are the answer

Further improvements in processor performance are now following a new model! Instead of relying on increases in clock speed alone (which eventually lead to unmanageable power consumption and heat emission), chip manufacturers are now adding more CPUs or ’cores’ to the microprocessor package.

Most mainstream desktops and laptops now come with at least a dual-core microprocessor (a single processor package that contains two separate CPUs). This is a new trend. Quad-core and higher processors are now entering the market. In fact, I am writing this post on a laptop that has four cores (and, with hyper-threading, which is not the topic of today’s discussion, essentially looks like an 8-core machine!).

The ’Manycore shift’ in hardware will drive the next phase of growth in computational power over the next decade. The same Moore’s Law can now apply to this type of scaling of processor performance. In fact, the following bold statement from Justin Rattner, CTO, Intel, is very relevant in this context:

“Moore’s Law scaling should easily let us hit the 80-core mark in mainstream processors within the next ten years and quite possibly even sooner”

Manycore needs equal progress in Parallel Computing

With Manycore promising processing power improvements of 10 to 100 times over the next few years, developing applications that harness the full power of Manycore systems becomes the next big challenge for software developers. Developers must now shift from writing serial programs to writing parallel programs!

Hardware companies have done a tremendous job in ushering in the Manycore revolution. But software companies now have the responsibility of bringing similar advances to operating systems, runtimes and developer tools. These advances should let developers simply embrace the concept of parallelism and leave it to the platform and the tools to extract most of the parallelism that is available in a system.

The goal would be to ensure that if you have a compute intensive application, it is able to fully harness all the computational power available in the system. For example, if I have a 16-core system and a compute intensive application, the CPU usage and the spread across all the cores should ideally look like this:

The final frontier: Parallelism in the Cloud, Cluster and Client

Harnessing the power of a single Manycore system may not be sufficient to bring the kind of experiences we discussed earlier into our daily lives. There are many scenarios where we need to harness the power of many systems. Moreover, the operating system and the platform should provide the necessary abstractions to deliver that power while hiding the complexity from software developers. A business or enterprise that provides information-rich, computationally intensive services to individuals needs a combination of the Cluster, the Client and the Cloud. This enables it to optimize its investment in on-demand computing power and to utilize idle computing power in desktops. This is the vision of teams like the High Performance Computing (HPC) group in Microsoft. An HPC solution here will look as follows:

Imagine a financial company providing the next generation financial analytics and risk management solutions to its clients. To provide those solutions it needs enormous processing capacity. The organization probably has a computational infrastructure similar to that shown above and a cluster of machines in its data center that takes care of the bulk of the computation. The organization also likely has many powerful client machines in the workplace that are unused during inactive periods. Thus, it would benefit from adding those machines during unused times as compute nodes to its information processing architecture. Finally, it will need the power of the Cloud to provide elastic compute capacity for short periods of intensive needs (or bursts) for which it is not practical to reserve computational power.

The RiskMetrics Group is a financial company that uses an Azure-based HPC architecture like the one outlined above. It provides risk management services to the world’s leading asset managers, banks and institutions to help them measure and model complex financial instruments. RiskMetrics runs its own data center, but to accommodate sharp increases in demand for computing power at specific periods of time, the company needed to expand its technical infrastructure. Rather than buying more servers and expanding its data center, RiskMetrics decided to leverage the Windows Azure Platform to handle the surplus loads. The surplus capacity is needed for only a couple of hours, and the organization must complete its financial computations within that window. This leads to bursts of computationally intensive activity as shown in the chart below:

Courtesy Microsoft PDC Conference 2009 and case study https://www.microsoft.com/casestudies/Case_Study_Detail.aspx?CaseStudyID=4000005921

By leveraging the highly scalable and potentially limitless capacity of Windows Azure, RiskMetrics is able to satisfy the peak bursts of computational needs without permanently reserving its resources. It eventually expects to provision 30,000 Windows Azure instances per day to help with the computational needs! This model enables the organization to extend from fixed scaling to elastic scaling for satisfying its on-demand and ‘burst’ computing needs.

This gives a sense of how Parallelism in the Cloud, Cluster and Client is the solution for today’s and tomorrow’s innovative, information-rich applications that need to scale to millions of users.

Finally, let us take a look at how the platform and developer tools will allow developers to easily build these massively scalable parallel applications.

What does the development platform look like?

Here’s a picture of what the development platform should look like.

The ‘Parallel Technologies’ stack is a built-in part of Microsoft’s Visual Studio 2010 product, as shown below, for both native and managed applications.

Microsoft has invested in the Parallel Computing Platform to leverage the power of parallel hardware and the multi-core evolution.

Developers can now:

- Move from fine-grained parallelism models to coarse-grained application components – both in the managed world and in the native world

- Express concurrency at an algorithmic level and not worry about the plumbing of thread creation, management and control

- Build on a concurrency runtime that is itself extensible: Intel has announced integration with the concurrency runtime for TBB, OpenMP and Parallel Studio

A demonstration of this capability

Here is a small demonstration of the parallelism support in the Microsoft platform. I have a simple application called ‘nBody’ that is a simulation of ’n’ planetary bodies, where each body positions itself based on the gravitational pull of all the other bodies in its vicinity. This is clearly a very compute-intensive application, and also one that can benefit immensely from executing ‘tasks’ in parallel.

The application is written in plain native C++.

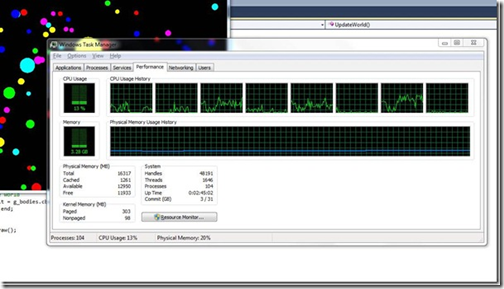

If I execute the application ’as-is’ without relying on the Visual Studio PPL libraries, the output of the application and the processor utilization look as follows:

If you look at the Task Manager closely, you will see that only 13 percent of the CPU is being utilized. That means that, on an 8-core machine, each core is on average used to only about 1/8th of its capacity, which is the equivalent of a single fully busy core.

The main part of the program, which draws the bodies, is encapsulated in an update loop, which looks as follows:

To take advantage of the multiple cores and the platform support for parallelism, namely the PPL library, we can simply modify the update loop to use the parallel for construct shown below:

If I now rerun the program using the UpdateWorldParallel code, the results look something like this:

You can now see that we are using 90 percent of the processing capacity in the machine, and all the cores are operating at almost their full capacity. Of course, if you are able to see the output of the program, you will notice the smoothness of the movement, which is perceptibly faster than the jerky motion of the bodies in the earlier run.

What about advances in testing tools for parallelism?

Testing such programs is, of course, going to be a challenge, and the essential problem is finding ways to deal with the non-deterministic execution of parallel programs. Microsoft Research has a tool, integrated with Visual Studio, that helps here. Check out CHESS: Systematic Concurrency Testing, available at Codeplex. CHESS essentially runs the test code in a loop and controls the execution, via a custom scheduler, so that it becomes fully deterministic. When a test fails, you can fully reproduce the sequence of execution, which really helps narrow down and fix the bug!

In conclusion

Current global trends in information explosion and innovative experiences in the digital age require immense computational power. The only answer to this need is leveraging parallelism and the presence of multiple cores in today’s processors. This ’Multi-core shift’ is well on its way. To make this paradigm shift seamless for developers, Microsoft is providing parallel processing abstractions in its platforms and development tools. These allow developers to take advantage of parallelism from both managed and native code, and to apply it to computation in local clients, clusters, clouds and a mix of all three!

Creative minds can focus on turning next-generation experiences into reality without worrying about processor performance limitations or requiring advanced education in concurrency.
