The Abyss

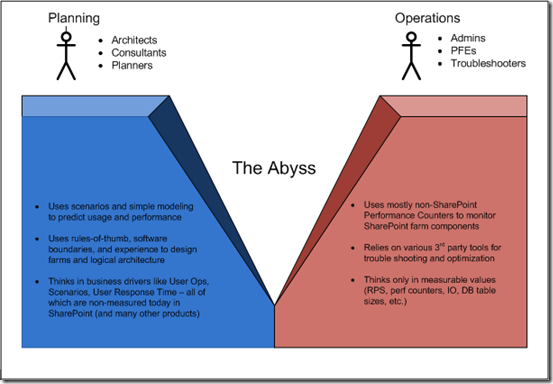

We use the following diagram to illustrate “The Abyss”:

An explanation follows – however the essential points are in the diagram.

It is an interesting fact that most people who deal with SharePoint architecture in the field belong to one of two camps. There are the Planners – the people who design and build SharePoint deployments to satisfy business requirements. Then there are the Operationalists – who maintain, extend and troubleshoot SharePoint deployments. While there are indeed people who do both activities, most concepts, tools and guidance are directed at one group or the other. We will show that even the people who perform both roles end up in a somewhat schizophrenic situation, since it is almost impossible to relate the two in our current state.

The Planner Side

It is tempting to invoke the classic theoretical/pragmatic dichotomy and say that the planners are being theoretical and the operationalists are being practical about the same problem. In fact, however, the planners are being pragmatic as well. They have to design a system to meet business requirements and are forced to use measures to estimate the demand. The most important quantities in this “demand model” are:

- The workloads (previously called scenarios) for which the system will be used.

- The number of users using the system for a required workload.

- The pattern of concurrent usage by these users.

- The number of requests (operations of business value) that will be required per user per unit time.

These values are supposed to be used in the well-known RPS equation (about which I will blog later):

N * C * R = RPS

Where:

- N = Number of active users of the system

- C = Peak concurrency rate

- R = Average request rate per user (LTHE classification: Light = 20 R/hour, Typical = 36 R/hour, Heavy = 60 R/hour, Extreme = 120 R/hour)

- RPS = Peak request/sec rate
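
As a worked illustration of the equation, here is a minimal Python sketch. It assumes that C is expressed as a fraction between 0 and 1 and that R, which the LTHE classification gives in requests per hour, is divided by 3600 so the result comes out in requests per second. The function name, the dictionary and the sample inputs are hypothetical and are not taken from any published guidance.

```python
# Request rates per user per hour, from the LTHE classification above.
LTHE_REQUESTS_PER_HOUR = {"light": 20, "typical": 36, "heavy": 60, "extreme": 120}


def peak_rps(active_users: int, peak_concurrency: float, profile: str) -> float:
    """Return N * C * R, with R converted from requests/hour to requests/second.

    Assumption: peak_concurrency (C) is a fraction in the range 0..1.
    """
    if not 0.0 <= peak_concurrency <= 1.0:
        raise ValueError("peak_concurrency must be a fraction between 0 and 1")
    # Convert the per-hour LTHE rate to a per-second rate.
    requests_per_second_per_user = LTHE_REQUESTS_PER_HOUR[profile] / 3600.0
    return active_users * peak_concurrency * requests_per_second_per_user


if __name__ == "__main__":
    # Hypothetical inputs: 10,000 active users, 10% peak concurrency, "typical" users.
    print(peak_rps(10_000, 0.10, "typical"))
```

With these made-up inputs, 1,000 concurrent users each issuing 36 requests per hour work out to about 10 requests per second. The arithmetic is trivial; the difficulty discussed next lies in deciding what N, C and R actually are.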

This equation was documented in various places, and its spirit is correct: the number of users, the pattern of concurrent usage, transactions (sometimes documented as operations of business value, and sometimes merely as IIS requests of various kinds) and transactions per second are all linked closely enough to business drivers. But the problems become apparent when a planner actually tries to fill out this equation.

It is not at all clear what these values really mean, and there is little agreement, both inside and outside of Microsoft, as to what they should mean. Some experts had their own conventions, but these were not built into any of our metrics, and different experts used different conventions, which merely added to the confusion. Thus well-meaning planners who used this demand model were likely to use all kinds of values. Different planners could justify widely varying values based on their understanding of the meaning of the terms – and came up with similarly widely varying resource requirement estimates as a result.
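
To make the size of that spread concrete, the following Python sketch evaluates N * C * R for every combination of a few candidate values and reports the lowest and highest result. The two concurrency guesses (5% and 25%) and the user count are hypothetical values chosen purely for illustration; the two request rates are the Light and Extreme ends of the LTHE classification. As an assumption, R is converted from requests per hour to requests per second.

```python
from itertools import product


def peak_rps(active_users, concurrency, requests_per_hour):
    """N * C * R, with R converted from requests/hour to requests/second."""
    return active_users * concurrency * requests_per_hour / 3600.0


def rps_range(active_users, concurrency_guesses, rate_guesses_per_hour):
    """Return (lowest, highest) peak RPS over all combinations of the guesses."""
    estimates = [
        peak_rps(active_users, c, r)
        for c, r in product(concurrency_guesses, rate_guesses_per_hour)
    ]
    return min(estimates), max(estimates)


if __name__ == "__main__":
    # Hypothetical: 10,000 users; planners disagree on C (5% vs 25%)
    # and on R (Light = 20/hour vs Extreme = 120/hour).
    low, high = rps_range(10_000, [0.05, 0.25], [20, 120])
    print(f"lowest estimate:  {low:.1f} RPS")
    print(f"highest estimate: {high:.1f} RPS")
    print(f"ratio: {high / low:.0f}x")
```

Under these assumptions, the same user population produces estimates that differ by a factor of 30, which is the kind of divergence the demand model permits when its terms are not pinned down.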

Also, since there are no clear-cut and accepted ways to measure these values on a real system, experience turned out to be of little help. A planner who visits a system a year or two after deployment to check the validity of his or her assumptions will find it practically impossible to do so.

We should also mention that our now-frustrated planner finds it very difficult even to use an existing functional system with a similar workload as a guide, because the values that are needed are simply not being measured. These include:

- What is the workload being served?

- How many users are active?

- What is the user concurrency?

- What and how many operations of business value are being executed?

The Operationalist Side

The Operationalist in their most highly developed form is a person with experience on a wide range of SharePoint systems. They have extensive experience troubleshooting performance problems, usually belong to a tight community, and share experiences and lessons with each other in an exemplary manner. More to the point, they know where bottlenecks have occurred in the past (and are thus likely to recur) and have a suite of methods that they can use to check for these.

It is an interesting and sad fact that they will rarely (never?) be interested in, or even exposed to, the original planning estimates and documents for the system. In fact they will just assume these are probably useless or wrong (there are problems, right?) and go straight to the system and make measurements, frequently from performance counters that measure resource utilization of one sort or another. Eventually (hopefully) they will find the bottleneck and take remedial measures. They have a high success rate (eventually) and work closely with the product group (at least more closely than the planners, in my experience) to ensure that this knowledge is preserved and propagated.

However, this is not a really satisfactory state of affairs, since there is usually a lot of suffering before the problem is resolved, which damages our reputation and slows adoption.

And since there is no systematic methodology to relate their measurements to the actual planning values (which are not well defined or measurable anyway), it is unclear how this knowledge should feed back in any case.

Capacity Management vs. Capacity Planning

So this is “The Abyss”. We have our Planners, who create designs based on estimates and hope to get things right, but whose guidance does not change (even from version to version). And we have our Operationalists, who adapt to changing situations and fix problems as they occur, but have no way to feed their experience back into a model that can be used for planning. Note that the Microsoft field has people in both camps. And it is well known that the Operationalists enjoy informing the Planners that they have had to clean up their mess.

The way out, of course, is to design a “Capacity Management” practice that can be used by both parties in the same way. Planners and Operationalists should be the same people speaking the same measurable language. But they cannot do it alone. We must give them a framework that satisfies both of their needs.

Have we done this for the new version – SharePoint 2010? There are some very positive developments in this direction. However, it is hard – in fact very hard – to come up with a solid predictive model that can handle SharePoint with all its workloads and possible configurations. So I expect the Abyss will go away only slowly, but I also expect it to be much narrower with SharePoint 2010.