Measurable Concepts

This section discusses how to make demand concepts measurable, which is essential to a capacity management methodology that improves continuously.

Measurable Demand Concepts

Precisely defining our concepts in measurable quantities is a difficult task, and we do not pretend to have done it here. Each concept needs a study in itself, backed with supporting data to show that the measurement technique is successful. An investment (not a huge one, but a significant one) needs to be made to accomplish this.

What we really need is a demand model that derives from business drivers. Although we can use RPS (requests per second) as a measure of our demand (and we do in some later chapters), it is in no way a business driver and should be considered a temporary substitute. A business driver would be something that a BDM (Business Decision Maker) can provide without technical assistance, such as how many users need to use the system and what operations they will perform against it.

We will run through the major concepts we need here and list the alternatives we have for definition.

- Workload – While workload seems to be a well-defined concept, it is in fact a problematic one. If we plan for one workload and our deployment is in fact used for another, then we will have a problem. A related question is when two different workloads can be considered the same from the point of view of resource consumption. A workable, measurable definition could probably be obtained by looking at the incoming requests in the IIS logs and categorizing them. Although some work has been done by SharePoint test teams in this area, much more remains to be done.

- Total User Number – This is easy for most cases, namely the number of users in the user profile database. For an anonymous situation, however, it is more problematic, since we do not have the users' names and cannot actually distinguish between users. Fortunately this is not a hugely important planning case.

- Active Users – This is a controversial but probably necessary abstraction. The idea is that there are a certain number of users who are active, i.e. about to use SharePoint. An alternate formulation would be those who are deriving value from SharePoint at this instant. This is a somewhat smaller number than the Total User Number.

Note that it is unclear how to measure this number. Suggested techniques include installing user agents to register it directly, taking some fixed factor of the distinct users seen in the logs over a time period, and setting up an advanced Markov-process model and estimating its parameters numerically, but they all have obvious drawbacks.

- Concurrency – This is one of the toughest concepts to define. Architects frequently refer to “active users”, who are requesting pages and then reading them (i.e. experiencing think time). It is not clear that this is a well-defined concept (see above), and it is in any case very difficult to measure. If we insist on having something measurable, however, there are a couple of usable alternatives:

- Requests in flight – This is interpreted as the number of users who have requested a page load and are waiting for it to complete. This is not as easy to measure as one would think, since IIS log resolution is only one second and many page loads return in less than one second. This could be rectified with some kind of IIS filter; we do not know of anyone who has pursued this, but we mention it here for completeness.

The actual numerical values of requests in flight tend to be very low. On a collaboration system serving 150k users, one will probably be serving no more than 30 users at any one time (at 300 requests per second). Percent concurrency on this measurement will therefore be a small fraction of a percent.

- Distinct Users per unit time – While this is an attractive and fairly straightforward value to use, it has some problems. The major one is that the unit of time to use is not clear. The longer the unit of time used, the bigger the measured value will be. One could hope that there would be a sudden discontinuity at some interval length which would reveal the time interval to use, but this is not the case; the value is a smoothly and continuously increasing function of the interval. Nonetheless, it seems to be the best value we have and is occasionally used by the more savvy planners. In our experience the best values (corresponding to our guidance) seem to be in the 3-5 minute range, i.e. distinct users per 3 minutes or distinct users per 5 minutes. Divide this by the total user number to get the “concurrency”.

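The distinct-users measurement described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the log has already been parsed into (timestamp, user) pairs (the parsing step and the names `distinct_users_per_window`, `concurrency`, and `sample` are hypothetical), windows are fixed and non-overlapping, and the peak window is used as the numerator, which is one possible choice among several.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def distinct_users_per_window(records, window_minutes):
    """Count distinct users in each fixed, non-overlapping time window.

    records: list of (timestamp, user_id) pairs, e.g. parsed from IIS logs
             (the parsing step is not shown; this input shape is an assumption).
    Returns the distinct-user counts of the non-empty windows, in time order.
    """
    window = timedelta(minutes=window_minutes)
    start = min(ts for ts, _ in records)
    buckets = defaultdict(set)
    for ts, user in records:
        # Integer division of two timedeltas gives the window index.
        buckets[(ts - start) // window].add(user)
    return [len(buckets[i]) for i in sorted(buckets)]


def concurrency(records, window_minutes, total_users):
    """Peak distinct users per window divided by the total user number.

    Using the peak window (rather than the average) is an assumption.
    """
    return max(distinct_users_per_window(records, window_minutes)) / total_users


# Tiny synthetic sample: four requests from three users over six minutes.
t0 = datetime(2024, 1, 1, 9, 0, 0)
sample = [
    (t0, "alice"),
    (t0 + timedelta(minutes=1), "bob"),
    (t0 + timedelta(minutes=4), "carol"),
    (t0 + timedelta(minutes=6), "alice"),
]

for minutes in (3, 5):
    print(minutes, distinct_users_per_window(sample, minutes))
```

Even on this toy sample the 5-minute windows report more distinct users than the 3-minute windows, which is the dependence on the time unit noted above.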

- Request Rate – This is bound up very heavily with the way we measure concurrency, since concurrency times this number (presumably the average request rate) will give us our actual RPS (which is fixed).

So, for example, if we use “distinct users seen in 3 minutes” for our concurrency value, we will get a different average request rate per user than if we use “distinct users seen in 5 minutes”, since the product N*C*R remains fixed.

- Peak RPS – While peak RPS seems clear, a little thought will reveal that the number is always bound to a definite time interval. Thus we have the Peak RPS (i.e. the maximum RPS for any one second), the Peak Average RPS for a minute, the Peak Average RPS for an hour, etc. Analysis shows that RPS tends to closely follow a normal distribution, so an average and a standard deviation characterize these values fairly well.

But which RPS should be planned for? Of course it depends on your taste, but since this follows a normal distribution you can use standard bell-shaped curves and use fairly simple statistics to calculate the amount of time your deployment will exceed whatever value you plan for. This approach will be expanded upon in the following.
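As a rough illustration of the statistics involved, the following Python sketch computes the fraction of time RPS would exceed a planned value, assuming RPS really is normally distributed. The function name and the mean and standard deviation used here are hypothetical values chosen for the example, not measurements.

```python
import math


def fraction_of_time_exceeded(planned_rps, mean_rps, std_rps):
    """Upper-tail probability P(RPS > planned_rps) for a normal distribution."""
    z = (planned_rps - mean_rps) / std_rps
    # For a standard normal variable, P(Z > z) = 0.5 * erfc(z / sqrt(2)).
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical figures: mean 300 RPS, standard deviation 50 RPS, plan for 400 RPS.
tail = fraction_of_time_exceeded(400, 300, 50)
print(f"Planned capacity exceeded about {tail:.1%} of the time")
```

Planning for the mean plus two standard deviations, as in this example, leaves the deployment over its planned value roughly 2.3% of the time; a planner can move the planned value up or down until that fraction is acceptable.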

We believe a better approach is to abandon the idea of a peak completely and talk of distributions instead.

- User Operation – What is a real user operation, i.e. the smallest unit of business value to the customer? A page load is one alternative, but it is already somewhat far removed from an IIS request, so it is not clear how to measure it.

One common classification being used in the PG refers to page loads as PLT1 (the first time a page is loaded on a client, with the client cache still empty), PLT2 (the second time), and others. These all have rather different ratios of requests per user operation; the values seem to vary strongly depending on the workload, client configuration, patch level, customization, etc., and are very hard to measure at the server. However, as a rule of thumb, values between 5:1 and 20:1 tend to be used when absolutely necessary. Clearly more investigation needs to be done here.
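To show how wide the resulting uncertainty is, the small Python sketch below converts a request rate into a range of user operations per second using the rule-of-thumb ratios. The function name is hypothetical, and the 300 RPS figure is simply reused from the collaboration example earlier in this section.

```python
def user_operations_per_second(rps, requests_per_operation):
    """Convert server requests per second into user operations per second."""
    return rps / requests_per_operation


rps = 300  # example figure reused from the text above
low = user_operations_per_second(rps, 20)   # 20 requests per page load
high = user_operations_per_second(rps, 5)   # 5 requests per page load
print(f"{rps} RPS corresponds to roughly {low:.0f}-{high:.0f} page loads per second")
```

The factor of four between the two ends of the range is a direct consequence of the rule of thumb, which is why a better measurement of this ratio matters.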

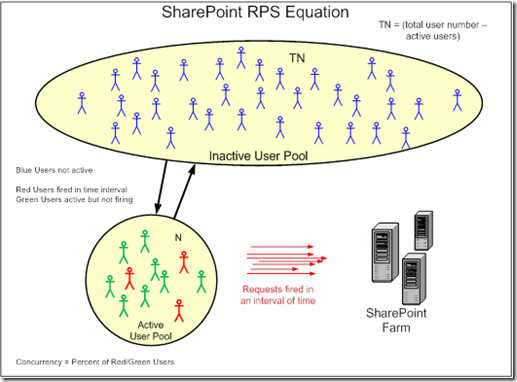

Note that some of these concepts were used in the RPS Equation mentioned above. A diagram showing a possible interpretation of the RPS equation and the relationships follows:

Although the diagram may seem fairly clear, the exposition above should have shown that there are different ways to measure and interpret its values and arrows, resulting in disagreement in the field as to what is correct. Since there is no authoritative guidance in this area, the conflicting interpretations of all these concepts coexist, which adds to the confusion.
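One way to see how two interpretations can both be internally consistent is to work the N*C*R product through with numbers. The Python sketch below reads N as the total user number, C as the concurrency, and R as the average request rate per concurrent user; that reading, the function name, and the distinct-user counts are assumptions made for illustration only. The 150k users and 300 RPS are the example figures used earlier.

```python
def request_rate_per_user(rps, total_users, concurrency):
    """Solve RPS = N * C * R for R (requests per second per concurrent user)."""
    return rps / (total_users * concurrency)


N = 150_000   # total user number (example figure from the text)
RPS = 300     # measured requests per second (example figure from the text)

# Hypothetical distinct-user counts for two window lengths.
distinct_users = {"3 minutes": 6_000, "5 minutes": 9_000}

for window, users in distinct_users.items():
    C = users / N
    R = request_rate_per_user(RPS, N, C)
    print(f"{window}: C = {C:.1%}, R = {R:.4f} req/s per user, N*C*R = {N * C * R:.0f}")
```

Both windows reproduce the same 300 RPS, but with different concurrency and per-user request rates, so two planners quoting different "concurrency" values may simply be using different windows.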

So our situation is that some of these values can be measured with the help of log files, but some cannot. Even where measurement is possible, it is rarely done in the field. Clearly more research needs to be performed and published to enable the ultimate goal of estimating usage directly from business drivers. The measurement process for each value needs to be documented, and perhaps software written and the product instrumented to actually make these measurements. This is a considerable amount of work.

However, the current situation is not hopeless even without this investment. We have rules of thumb for many of these values and a good idea of where the values need to lie. The definitions do need to be firmed up and agreed upon, along with sanctioned measurement techniques. These can form the basis of a calibratable model.

RPS itself is well defined and relatively easy to measure, but it is not a business driver and is thus not exactly what we need.

We do not discuss the economic cost and green cost measures (like TCO and the carbon footprint) here, but they must be questioned as well. To judge by the way they are talked about, people think they are well defined and measurable, but are they really?