7 on 7: Windows Multitouch

Windows Multitouch, in my opinion, is the most emotive new feature of Windows 7. From the kids at home to the audiences to whom I’ve demoed Windows 7, there’s something fresh and fun about being able to manipulate an application with simple hand gestures.

We’ve all used touch screens in some form before, whether it be a tablet PC, your ATM, or a mall kiosk, but generally you’re just substituting your finger for a mouse. Multitouch is different; it mimics the way we work with physical objects and as such opens up a new world of user interaction scenarios.

When characterizing existing or new applications running on a multitouch device, three categories of the user experience emerge: good, better, and best. As we examine those categories in this post, you should get a good idea of the technical aspects that enable these experiences as well.

The Good Experience

Legacy applications automatically get a touch experience on touch devices, even though they were not designed for the Windows 7 touch capabilities. Basic interactions like panning with one or two fingers, resizing with a pinch gesture, and right-clicking with a press-and-tap gesture are automatically translated by Windows 7 into analogous legacy messages, such as WM_VSCROLL and WM_HSCROLL for panning. As a result, applications like Microsoft Word 2007 will ‘do the right thing’: when you pan the document, it scrolls; when you use the resize gesture, it changes the zoom factor as if you’d used the slider at the bottom right or the ribbon’s Zoom option.

You may want to check out the MSDN article Legacy Support for Panning with Scroll Bars for additional guidance to ensure your legacy application works well with the ‘out-of-the-box’ touch capabilities. Troubleshooting Applications also has good tips for diagnosing unexpected behaviors in touch-enabled applications; for example, disabling flicks is generally recommended.

The Better Experience

Windows 7 introduces three new notifications and messages to support touch-enabled applications; two of these notifications (WM_GESTURENOTIFY and WM_GESTURE) form the basis of “the better experience” for multitouch. To respond to these new messages, managed developers need to include code in WndProc, since the .NET Framework classes do not include explicit touch events. Additionally, be sure to pass these messages up the chain, via DefWindowProc (unmanaged) or a call to the WndProc of the base class in managed code.

WM_GESTURENOTIFY is your opportunity to indicate which of the built-in gestures your application supports. Respond to this message via a call to SetGestureConfig, passing in an array of GESTURECONFIG structures that detail which gestures your application will recognize. There are five specific gestures:

| Gesture | ID | Behavior | Gesture Diagram |

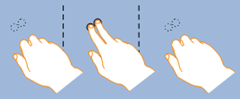

| GID_ZOOM | 3 | zoom |  |

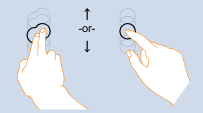

| GID_PAN | 4 | pan / scroll |  |

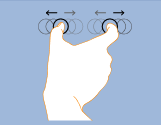

| GID_ROTATE | 5 | rotate |  |

| GID_TWOFINGERTAP | 6 |  |

|

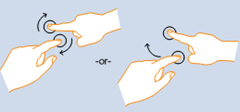

| GID_PRESSANDTAP | 7 | right click |  |

The ID numbers in the chart above refer to the dwID field of the GESTUREINFO structure, which you retrieve via the GetGestureInfo function while processing the WM_GESTURE message. Two other ID values, GID_BEGIN (1) and GID_END (2), complete the list and enable detecting the beginning and end of a long-running gesture, like panning across a document. The GESTUREINFO structure contains additional fields (ptsLocation and ullArguments) that store data pertinent to a specific gesture, like the center point of a rotation or the distance between two fingers.
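If you'd rather opt into specific gestures than all of them, the array passed to SetGestureConfig might be built along these lines. This is only a sketch: the GESTURECONFIG layout (three DWORD fields) and the GID_*/GC_* values follow winuser.h, but the GestureSetup class and its ZoomAndRotateOnly helper are hypothetical names of mine, and the P/Invoke declaration that would accept the array is not shown.

```csharp
using System;
using System.Runtime.InteropServices;

// Managed mirror of the Win32 GESTURECONFIG structure (three DWORD fields).
[StructLayout(LayoutKind.Sequential)]
public struct GESTURECONFIG
{
    public uint dwID;    // gesture ID, e.g. GID_ZOOM
    public uint dwWant;  // GC_* flags to enable for that gesture
    public uint dwBlock; // GC_* flags to disable for that gesture
}

// Hypothetical helper class, not part of the SDK sample.
public static class GestureSetup
{
    // Gesture IDs from the table above.
    public const uint GID_ZOOM = 3;
    public const uint GID_PAN = 4;
    public const uint GID_ROTATE = 5;

    // Per-gesture configuration flags (values as defined in winuser.h).
    public const uint GC_ZOOM = 0x1;
    public const uint GC_PAN = 0x1;
    public const uint GC_ROTATE = 0x1;

    // Ask for zoom and rotate, and explicitly block pan.
    public static GESTURECONFIG[] ZoomAndRotateOnly()
    {
        return new[]
        {
            new GESTURECONFIG { dwID = GID_ZOOM,   dwWant = GC_ZOOM,   dwBlock = 0 },
            new GESTURECONFIG { dwID = GID_ROTATE, dwWant = GC_ROTATE, dwBlock = 0 },
            new GESTURECONFIG { dwID = GID_PAN,    dwWant = 0,         dwBlock = GC_PAN },
        };
    }
}
```

With an array like this, the count argument to SetGestureConfig would be the array length and the size argument would be the marshaled size of one GESTURECONFIG (12 bytes).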

Putting this all together, below is a bit of code from the MTGestures sample provided with the Windows 7 SDK. The two cases for the WndProc function (lines 8 and 22) handle the two new notifications for gesture handling. Here, the application subscribes to all of the gestures (line 13), and defers the gesture handling to the DecodeGesture method (line 23). Each gesture has its own case within the switch statement (lines 46, 49, 52, 80, 102, 127, and 132) where gesture-specific code accesses the additional information in ptsLocation and ullArguments to carry out the processing.

```csharp
  1: protected override void WndProc(ref Message m)
  2: {
  3:     bool handled;
  4:     handled = false;
  5:
  6:     switch (m.Msg)
  7:     {
  8:         case WM_GESTURENOTIFY:
  9:             {
 10:
 11:                 GESTURECONFIG gc = new GESTURECONFIG();
 12:                 gc.dwID = 0;
 13:                 gc.dwWant = GC_ALLGESTURES;   // opt in to every gesture
 14:                 gc.dwBlock = 0;
 15:
 16:                 bool bResult = SetGestureConfig(Handle, 0, 1,
 17:                     ref gc, _gestureConfigSize);
 18:             }
 19:             handled = true;
 20:             break;
 21:
 22:         case WM_GESTURE:
 23:             handled = DecodeGesture(ref m);
 24:             break;
 25:
 26:         default:
 27:             handled = false;
 28:             break;
 29:     }
 30:     base.WndProc(ref m);   // always pass the message up the chain
 31:
 32:     if (handled)
 33:         m.Result = new System.IntPtr(1);
 34: }
 35:
 36:
 37: private bool DecodeGesture(ref Message m)
 38: {
 39:     GESTUREINFO gi;
 40:     gi = new GESTUREINFO();
 41:     gi.cbSize = _gestureInfoSize;
 42:     GetGestureInfo(m.LParam, ref gi);
 43:
 44:     switch (gi.dwID)
 45:     {
 46:         case GID_BEGIN:
 47:             break;
 48:
 49:         case GID_END:
 50:             break;
 51:
 52:         case GID_ZOOM:
 53:             switch (gi.dwFlags)
 54:             {
 55:                 case GF_BEGIN:
 56:                     _iArguments = (int)(gi.ullArguments &
                             ULL_ARGUMENTS_BIT_MASK);   // initial finger distance
 57:                     _ptFirst.X = gi.ptsLocation.x;
 58:                     _ptFirst.Y = gi.ptsLocation.y;
 59:                     _ptFirst = PointToClient(_ptFirst);
 60:                     break;
 61:
 62:                 default:
 63:                     _ptSecond.X = gi.ptsLocation.x;
 64:                     _ptSecond.Y = gi.ptsLocation.y;
 65:                     _ptSecond = PointToClient(_ptSecond);
 66:                     Point ptZoomCenter = new Point(
                             (_ptFirst.X + _ptSecond.X) / 2,
 67:                         (_ptFirst.Y + _ptSecond.Y) / 2);
 68:                     double k = (double)(gi.ullArguments &
                             ULL_ARGUMENTS_BIT_MASK) /
 69:                         (double)(_iArguments);   // ratio of new to old distance
 70:
 71:                     _dwo.Zoom(k, ptZoomCenter.X, ptZoomCenter.Y);
 72:                     Invalidate();
 73:
 74:                     _ptFirst = _ptSecond;
 75:                     _iArguments = (int)(gi.ullArguments &
                             ULL_ARGUMENTS_BIT_MASK);
 76:                     break;
 77:             }
 78:             break;
 79:
 80:         case GID_PAN:
 81:             switch (gi.dwFlags)
 82:             {
 83:                 case GF_BEGIN:
 84:                     _ptFirst.X = gi.ptsLocation.x;
 85:                     _ptFirst.Y = gi.ptsLocation.y;
 86:                     _ptFirst = PointToClient(_ptFirst);
 87:                     break;
 88:
 89:                 default:
 90:                     _ptSecond.X = gi.ptsLocation.x;
 91:                     _ptSecond.Y = gi.ptsLocation.y;
 92:                     _ptSecond = PointToClient(_ptSecond);
 93:
 94:                     _dwo.Move(_ptSecond.X - _ptFirst.X,
                             _ptSecond.Y - _ptFirst.Y);
 95:                     Invalidate();
 96:
 97:                     _ptFirst = _ptSecond;
 98:                     break;
 99:             }
100:             break;
101:
102:         case GID_ROTATE:
103:             switch (gi.dwFlags)
104:             {
105:                 case GF_BEGIN:
106:                     _iArguments = 0;
107:                     break;
108:
109:                 default:
110:                     _ptFirst.X = gi.ptsLocation.x;
111:                     _ptFirst.Y = gi.ptsLocation.y;
112:                     _ptFirst = PointToClient(_ptFirst);
113:
114:                     _dwo.Rotate(
115:                         ArgToRadians(gi.ullArguments &
                                 ULL_ARGUMENTS_BIT_MASK)
116:                         - ArgToRadians(_iArguments),
117:                         _ptFirst.X, _ptFirst.Y
118:                     );
119:
120:                     Invalidate();
121:
122:                     _iArguments = (int)(gi.ullArguments &
                             ULL_ARGUMENTS_BIT_MASK);
123:                     break;
124:             }
125:             break;
126:
127:         case GID_TWOFINGERTAP:
128:             _dwo.ToggleDrawDiagonals();
129:             Invalidate();
130:             break;
131:
132:         case GID_PRESSANDTAP:
133:             if (gi.dwFlags == GF_BEGIN)
134:             {
135:                 _dwo.ShiftColor();
136:                 Invalidate();
137:             }
138:             break;
139:     }
140:
141:     return true;
142: }
```
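The listing calls ArgToRadians and divides zoom arguments without showing the helpers involved. As a rough sketch, and assuming the helper mirrors the GID_ROTATE_ANGLE_FROM_ARGUMENT macro from winuser.h (which maps 0..65535 onto -2π..+2π), the decoding could look like the following. The GestureArgs class and the ZoomFactor method are my own illustrative names, and I'm assuming the mask simply keeps the low 32 bits of ullArguments.

```csharp
using System;

// Hypothetical helper class for decoding GESTUREINFO.ullArguments.
public static class GestureArgs
{
    // Assumed mask: keep the low 32 bits, which carry the gesture argument.
    public const ulong ULL_ARGUMENTS_BIT_MASK = 0x00000000FFFFFFFF;

    // Rotation: map an argument in 0..65535 onto -2*pi..+2*pi radians,
    // following the GID_ROTATE_ANGLE_FROM_ARGUMENT macro (Math.PI is used
    // here in place of the macro's literal 3.14159265).
    public static double ArgToRadians(ulong arg)
    {
        return (arg / 65535.0) * 4.0 * Math.PI - 2.0 * Math.PI;
    }

    // Zoom: the argument is the distance between the two fingers, so the
    // incremental zoom factor is the ratio of the new distance to the old.
    public static double ZoomFactor(ulong previousArgs, ulong currentArgs)
    {
        ulong previous = previousArgs & ULL_ARGUMENTS_BIT_MASK;
        ulong current = currentArgs & ULL_ARGUMENTS_BIT_MASK;
        return previous == 0 ? 1.0 : (double)current / previous;
    }
}
```

Guarding against a zero previous distance is my addition; the listing above divides unconditionally.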

The Best Experience

Gestures (“the better experience”) are limited in that they cannot be combined, so while you can rotate or zoom, you can’t rotate and zoom at the same time. Additionally, you can’t manipulate more than one object at a time; you can’t, say, drag two pictures at once in a photo album application. To ratchet up the experience another level, applications need to opt into handling the raw touch messages, namely WM_TOUCH, by registering each window subject to touch treatment via the RegisterTouchWindow function.

As with the WM_GESTURE message, WM_TOUCH messages should be intercepted in the WndProc and the GetTouchInputInfo method used to return information about the current touch event. That information is returned within an array of TOUCHINPUT structures, in much the same way as the GESTUREINFO structure was leveraged above, but this time returning information about multiple touch points.

Below is some code from the MTScratchPadWMTouch example from the Windows 7 SDK. Similar to the above code sample, DecodeTouch is used to extract information from the WM_TOUCH message and carry out appropriate actions. In lines 15 through 20, the dwFlags information for each touch point is consulted and an associated event handler assigned: Touchdown if the touch message indicates a touch was initiated; Touchup if a finger was removed from the screen; and TouchMove if a finger was moved. Once the action is complete (handlers are executed in line 39), the touch handle, which comes from the LPARAM of the WM_TOUCH message, must be closed (line 44).

```csharp
 1: private bool DecodeTouch(ref Message m)
 2: {
 3:     int inputCount = LoWord(m.WParam.ToInt32()); // # of inputs
 4:     TOUCHINPUT[] inputs;
 5:     inputs = new TOUCHINPUT[inputCount];
 6:
 7:     GetTouchInputInfo(m.LParam, inputCount, inputs, touchInputSize);
 8:
 9:     bool handled = false;
10:     for (int i = 0; i < inputCount; i++)
11:     {
12:         TOUCHINPUT ti = inputs[i];
13:
14:         EventHandler<WMTouchEventArgs> handler = null;
15:         if ((ti.dwFlags & TOUCHEVENTF_DOWN) != 0)
16:             handler = Touchdown;
17:         else if ((ti.dwFlags & TOUCHEVENTF_UP) != 0)
18:             handler = Touchup;
19:         else if ((ti.dwFlags & TOUCHEVENTF_MOVE) != 0)
20:             handler = TouchMove;
21:
22:         if (handler != null)
23:         {
24:             WMTouchEventArgs te = new WMTouchEventArgs();
25:
26:             te.ContactY = ti.cyContact/100;   // hundredths of a pixel -> pixels
27:             te.ContactX = ti.cxContact/100;
28:             te.Id = ti.dwID;
29:             {
30:                 Point pt = PointToClient(
                        new Point(ti.x/100, ti.y/100));
31:                 te.LocationX = pt.X;
32:                 te.LocationY = pt.Y;
33:             }
34:             te.Time = ti.dwTime;
35:             te.Mask = ti.dwMask;
36:             te.Flags = ti.dwFlags;
37:
38:
39:             handler(this, te);
40:             handled = true;
41:         }
42:     }
43:
44:     CloseTouchInputHandle(m.LParam);
45:     return handled;
46: }
```

This example is a simple paint application, so the events track individual strokes on a canvas. Touchdown, for instance, initiates a stroke and assigns it a color. Touchup removes the stroke from a collection of active strokes, and TouchMove draws the stroke corresponding to the given touch point on the canvas. Below is the code for the TouchMove handler:

```csharp
private void OnTouchMoveHandler(object sender, WMTouchEventArgs e)
{
    // Find the stroke in the collection of the strokes in drawing.
    Stroke stroke = ActiveStrokes.Get(e.Id);
    Debug.Assert(stroke != null);

    // Add contact point to the stroke
    stroke.Add(new Point(e.LocationX, e.LocationY));

    // Partial redraw: only the last line segment.
    // CreateGraphics allocates a native handle, so dispose it promptly.
    using (Graphics g = this.CreateGraphics())
    {
        stroke.DrawLast(g);
    }
}
```
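To make the down/move/up bookkeeping a little more concrete, here is a minimal, UI-free sketch of how strokes might be tracked per touch ID so that several fingers can draw at once. The StrokeTracker class is hypothetical and is not the SDK sample's ActiveStrokes implementation; the TOUCHEVENTF_* values follow winuser.h, and coordinates are assumed to arrive in hundredths of a pixel, as in the TOUCHINPUT structure.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the sample's stroke bookkeeping.
public sealed class StrokeTracker
{
    // Touch event flags (values as defined in winuser.h).
    public const uint TOUCHEVENTF_MOVE = 0x0001;
    public const uint TOUCHEVENTF_DOWN = 0x0002;
    public const uint TOUCHEVENTF_UP = 0x0004;

    // One in-progress stroke per touch ID.
    private readonly Dictionary<uint, List<(int X, int Y)>> _active =
        new Dictionary<uint, List<(int X, int Y)>>();

    // Strokes whose finger has been lifted.
    public List<List<(int X, int Y)>> Finished { get; } =
        new List<List<(int X, int Y)>>();

    public int ActiveCount => _active.Count;

    // x and y are in hundredths of a pixel, as TOUCHINPUT reports them.
    public void Process(uint id, uint flags, int x, int y)
    {
        (int X, int Y) point = (x / 100, y / 100);

        if ((flags & TOUCHEVENTF_DOWN) != 0)
        {
            // A new finger touched down: start a stroke for this ID.
            _active[id] = new List<(int X, int Y)> { point };
        }
        else if ((flags & TOUCHEVENTF_UP) != 0)
        {
            // Finger lifted: close the stroke and retire it.
            if (_active.TryGetValue(id, out var stroke))
            {
                stroke.Add(point);
                _active.Remove(id);
                Finished.Add(stroke);
            }
        }
        else if ((flags & TOUCHEVENTF_MOVE) != 0)
        {
            // Finger moved: extend the stroke that belongs to this ID.
            if (_active.TryGetValue(id, out var stroke))
            {
                stroke.Add(point);
            }
        }
    }
}
```

Keying on the touch ID is what lets two fingers produce two independent strokes, which is exactly what the gesture API can't do.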

Manipulations and Inertia

While WM_TOUCH provides all the low level details, the jump from translating individual touch messages to end-user interactions like rotating and resizing may seem a bit overwhelming. That’s where the manipulation and inertia processors come in.

The manipulation processor (IManipulationProcessor and _IManipulationEvents) takes as input the raw WM_TOUCH messages and converts them into 2-d affine transformations, combining scale, rotation, and translation, essentially providing a superset of the more basic gesture support. IManipulationProcessor exposes a number of methods depending on the source gesture. For instance, you’d call ProcessMoveWithTime to feed the manipulation processor information on the movement of a finger on the screen as reported by one of the WM_TOUCH messages.

As you’re feeding raw touch information into the processor, it’s firing events defined on the _IManipulationEvents interface, namely ManipulationStarted, ManipulationDelta, and ManipulationCompleted. The ManipulationDelta event gives you access to the affine transformation, so you can carry out whatever update is required to the object being manipulated.
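To give a feel for what applying such a transformation involves, here is a small sketch that takes the translation, scale, and rotation deltas for one step and applies them to a single point about a pivot. The ManipulationMath class, the ApplyDelta method, and the parameter names are illustrative assumptions of mine rather than the actual signature of the ManipulationDelta event, and I'm assuming the rotation is expressed in radians.

```csharp
using System;

// Hypothetical helper; not part of the manipulation processor API.
public static class ManipulationMath
{
    // Applies one manipulation step to the point (px, py):
    // scale about the pivot, rotate about the pivot, then translate.
    public static (double X, double Y) ApplyDelta(
        double px, double py,
        double pivotX, double pivotY,
        double translationX, double translationY,
        double scale, double rotationRadians)
    {
        // Work relative to the pivot so scale and rotation leave it fixed.
        double dx = (px - pivotX) * scale;
        double dy = (py - pivotY) * scale;

        // Standard 2-D rotation of the scaled offset.
        double cos = Math.Cos(rotationRadians);
        double sin = Math.Sin(rotationRadians);
        double rx = dx * cos - dy * sin;
        double ry = dx * sin + dy * cos;

        // Move back from pivot-relative coordinates and add the translation.
        return (pivotX + rx + translationX, pivotY + ry + translationY);
    }
}
```

For example, the point (1, 0) scaled by 2 and rotated a quarter turn about the origin, then shifted 10 units in x, lands at roughly (10, 2). In a real application you would apply the same step to every corner of the object, or fold it into the object's transformation matrix.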

Most high-end touch applications will also want to take advantage of inertia mechanics. As users interact with objects on the screen in a tactile fashion, they expect objects to behave with similar physics to the real world. Flick a photo across the screen, and you expect it to move fast at the start and then slow down, and you may expect it to bounce gently off the edge of the screen if it reaches the perimeter of the display. That’s where the inertia processor (IInertiaProcessor) comes in.

Similar to the manipulation processor (with which it shares the _IManipulationEvents interface), you instantiate a reference to the processor and invoke the Process or ProcessTime method to carry out the physics calculations and raise the ManipulationDelta or perhaps ManipulationCompleted event, just as the manipulation processor does.
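The physics involved are not exotic. As an illustration only (this is plain constant-deceleration math, not the IInertiaProcessor API, and the InertiaSketch class and its units are my own assumptions), the distance a flicked object has travelled after a given time could be computed like this:

```csharp
using System;

// Hypothetical illustration of inertia math; not the inertia processor itself.
public static class InertiaSketch
{
    // initialVelocity: signed, in pixels per millisecond.
    // deceleration:    positive, in pixels per millisecond squared.
    // elapsedMs:       time since the finger was lifted.
    // Returns the signed offset from the release point.
    public static double Offset(double initialVelocity, double deceleration, double elapsedMs)
    {
        if (deceleration <= 0)
            throw new ArgumentOutOfRangeException(nameof(deceleration));

        double speed = Math.Abs(initialVelocity);

        // The object stops once the deceleration has consumed all its speed.
        double stopTime = speed / deceleration;
        double t = Math.Min(Math.Max(elapsedMs, 0.0), stopTime);

        // d = v0 * t - (1/2) * a * t^2, clamped at the stopping time.
        double distance = speed * t - 0.5 * deceleration * t * t;
        return Math.Sign(initialVelocity) * distance;
    }
}
```

Calling something like this from a timer gives the fast-then-slowing motion described above; bouncing off the screen edge would need boundary handling on top.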

WPF 4 and the Evolution of Multitouch

The touch capabilities in Windows 7 are obviously implemented at the core operating system level, namely via COM objects. Managed code developers tend to want to operate at a higher level of abstraction, and currently, if you’re using WPF, you would likely do so by integrating with the Real-Time Stylus interface. For example, the MTScratchpad I described above has an alternative implementation in the SDK as well, one using stylus events and other functionality enabled by the IRealTimeStylus3 and ITablet3 interfaces.

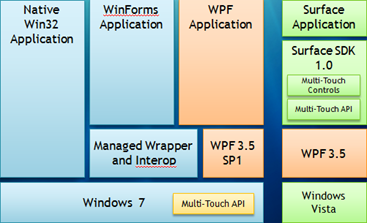

You may also be familiar with Microsoft Surface, the multi-touch, multi-user tabletop device that you’ve seen on Extra Entertainment, Boston's Channel 7, or perhaps in the Sheraton Boston lobby. The Surface is based on Windows Vista and the .NET Framework 3.5, and its touch capability is provided via a Surface-specific SDK and controls. On the traditional computing end, Windows 7 provides a native touch API, and WPF 3.5 can tap into it via either interop or the real-time stylus functionality. In pictures, it’s something like the following; the key point to take away is that a touch application for Surface will not run on Windows 7 and vice-versa… today, anyway.

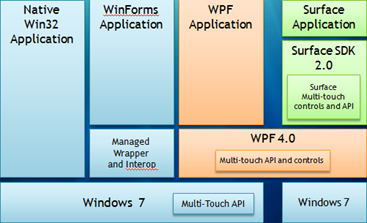

With .NET 4 and the next iteration of Microsoft Surface, there will be considerable interoperability. The architecture changes from the above to what you see below. Most notably, both devices will be built on a core of Windows 7. Additionally, WPF 4 has been enhanced to support touch events directly on the core UI classes, UIElement and UIElement3D, so implementation via interop or the stylus events will no longer be necessary. Of course, Surface will still deliver additional functionality and controls relevant for that device’s use and form factor, but presuming you confine your application to core .NET 4 (and WPF 4) functionality, you should have nearly seamless interoperability.

If you’re considering building multitouch capabilities into your WPF applications, there’s no time like the present. You can download the Beta 2 release of Visual Studio 2010 and .NET Framework 4 now (it was just released last week), and there is a go-live license!

More Hands-On (pun intended!) Information

Windows Touch (MSDN)

Troubleshooting Applications (MSDN)

Windows Touch: Developer Resources (CodeGallery)

MultiTouch Capabilities in Windows 7 (MSDN Magazine)

Windows Touch Pack for Windows 7

Where the Multitouch Devices Are (Channel 10 blog)

Comments

Anonymous

October 28, 2009

I tried out a multi-touch display at my local Staples, very nice, if a little pricey for a machine, and way out of my price range. However apparently it does not cost the monitor manufacturers much more to make a touch enabled monitor, so hopefully touch screens will become more common once Windows 7 hits the market. Maybe the next killer desktop or web app will be touch enabled. Touch enabled Instant messaging could make Emoticons, Hugs and Pokes way more fun!

Anonymous

November 03, 2009

I cannot locate the Touch Pack anywhere on my Windows 7 Ultimate installation. I think it is internal to Microsoft? Is there any way to get it? (It is not in the ScreenSave control panel of my installation). Thanks.

Anonymous

November 03, 2009

Dan, The Touch Pack is not part of the Windows 7 distribution, as it will only work on touch devices. It should be available at the link I included at the end of the post; however, it's not been put up for download yet. I was under the impression it would be available when Windows 7 launched on the 22nd, but I hear 'they are still working on it' in terms of putting it out there for download. I'll update this article (or send out a quick blog post/tweet) when I hear it's available for download.